Can Auditable AI Improve Fairness in Models?

Last Updated on February 5, 2021 by Editorial Team

Author(s): Tobi Olabode

Fairness, Opinion

Not unique but very useful

I was reading an article on Wired about the need for auditable AI, meaning third-party software that evaluates AI systems for bias. It sounded like a good idea, but I couldn't help thinking it had already been done before, with Google's What-If Tool.

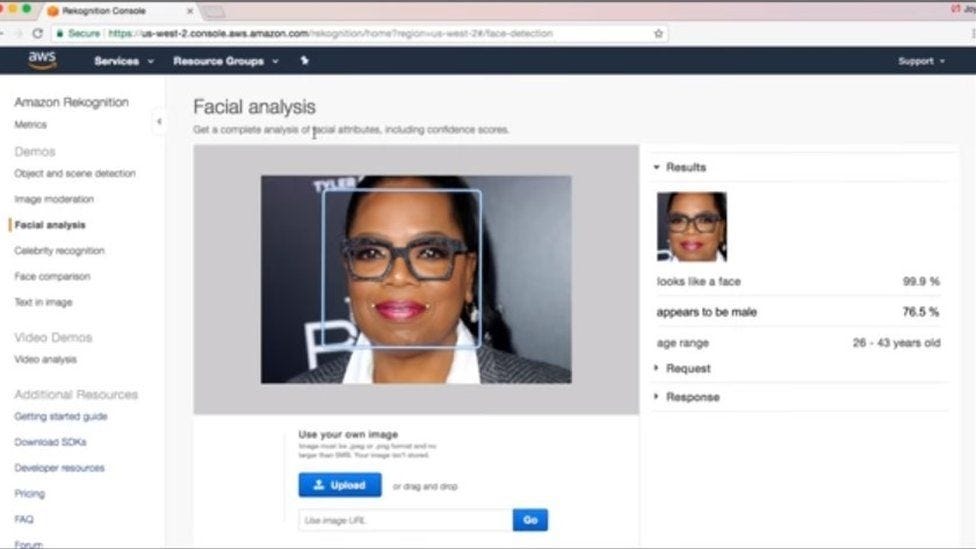

The author explained that an AI system can be tested by changing some of its input variables and checking how it responds. For example, suppose an AI decides whether someone should get a loan. The audit would check whether race affects getting the loan, or gender, and so on. If two people with the same income but a different gender get different outcomes, with one of them denied the loan, then we know the AI harbors some bias.
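The loan check described above is a counterfactual test: keep everything about an applicant the same, swap only the sensitive attribute, and see whether the decision flips. Below is a minimal Python sketch under stated assumptions: `counterfactual_flips` and `biased_loan_model` are hypothetical names, and the model is a deliberately biased toy, not a real lending system or the What-If Tool's API.

```python
from typing import Any, Callable, Dict, List

Applicant = Dict[str, Any]


def counterfactual_flips(
    model: Callable[[Applicant], bool],
    applicants: List[Applicant],
    attribute: str,
    values: List[Any],
) -> List[Applicant]:
    """Return applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        decisions = set()
        for value in values:
            variant = dict(applicant)  # copy so the original record is untouched
            variant[attribute] = value
            decisions.add(model(variant))
        if len(decisions) > 1:  # same person, different outcome
            flagged.append(applicant)
    return flagged


def biased_loan_model(applicant: Applicant) -> bool:
    # Hypothetical, deliberately biased toy model: a higher income bar for one gender.
    threshold = 50_000 if applicant["gender"] == "male" else 60_000
    return applicant["income"] >= threshold


applicants = [
    {"income": 55_000, "gender": "female"},
    {"income": 70_000, "gender": "male"},
    {"income": 40_000, "gender": "female"},
]

flagged = counterfactual_flips(biased_loan_model, applicants, "gender", ["male", "female"])
print(flagged)  # [{'income': 55000, 'gender': 'female'}]
```

Only the applicant near the threshold gets flagged: at 70,000 or 40,000 both versions of the model agree, which is why an audit needs many varied test cases rather than a handful.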

But the author makes it sound as if this is unique or has never been done before. Google's ML tools already have something like this, so the creators of an AI can already audit it themselves.

But there is power in using a third party. A third party can publish its report publicly, and it won't hide data that is unfavorable to the AI. So a third party can hold the creators of the AI more accountable than doing the audit on your own.

Practically, how will this work?

Third parties with auditable AI

We know that not all AI will need to be audited. Your cats vs. dogs classifier does not need to be audited.

The author said this would be for high-stakes AI, such as medical decisions, criminal justice, hiring, etc. This makes sense.

But for this to work, it seems companies will need to buy in. For example, if an AI company decides to do an audit and finds out its AI is seriously flawed, no one may want its product because of it. That risk makes companies less likely to do an audit in the first place.

Maybe the tech companies should first have some type of industry regulator that sets standards on how to audit AI, along with goals to achieve. A government initiative would be nice, but I don't know if the government currently has the know-how to create regulation like this.

Auditing AI will require domain knowledge

The variables that need to change for the loan application AI are different from those for an AI that decides whether a patient should get medicine. The person or team doing the auditing will need to know what they are testing. The loan application AI can be audited for racism or sexism. The medicine AI can be audited for how it handles certain symptoms or previous diseases. Either way, domain knowledge is essential.

For the medicine example, a doctor is very likely to be part of the auditing team.

On the technical side, you may want to ask the creators to add extra code to make auditing easier, like some type of API that sends results to the auditable AI. Building a separate auditable AI for every project will get bogged down fast. Some type of formal standard will be needed to make life easier for both the auditor and the creator of the AI.
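To make the idea of a shared standard a bit more concrete, here is a rough Python sketch assuming a hypothetical interface, `AuditableModel` with `predict` and `feature_names`, that every audited project would implement. None of these names come from an existing standard; the point is only that the auditor's code depends on the agreed interface rather than on each project's internals.

```python
from typing import Any, Dict, List, Protocol


class AuditableModel(Protocol):
    """Hypothetical shared interface that an audit standard might require."""

    def predict(self, record: Dict[str, Any]) -> bool: ...

    def feature_names(self) -> List[str]: ...


def audit_report(model: AuditableModel, records: List[Dict[str, Any]]) -> Dict[str, Any]:
    # The auditor relies only on the shared interface, not on model internals.
    decisions = [model.predict(r) for r in records]
    return {
        "features": model.feature_names(),
        "n_records": len(records),
        "approval_rate": sum(decisions) / len(records) if records else 0.0,
    }


class LoanModel:
    # A hypothetical project plugs in by implementing the two methods above.
    def predict(self, record: Dict[str, Any]) -> bool:
        return record["income"] >= 50_000

    def feature_names(self) -> List[str]:
        return ["income"]


report = audit_report(LoanModel(), [{"income": 60_000}, {"income": 30_000}])
print(report)  # {'features': ['income'], 'n_records': 2, 'approval_rate': 0.5}
```

Because `AuditableModel` is a `typing.Protocol`, a project's model doesn't have to inherit from it; matching the method signatures is enough for a type checker, which keeps the burden on AI creators low.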

This auditable AI idea sounds a bit like pen testing in the cybersecurity world, where you are (ethically) stress-testing the systems. In this context, we are stress-testing how the AI makes decisions. Technically, you could use the same idea to test for adversarial attacks on the AI, but that is a separate issue entirely.

From there, it may be possible to create a standard framework for how to test AI. But as I said above, this depends on the domain of the AI, so it may not scale well. More likely, the standards will need to be limited to the common ground, so that they can cover most auditable AI situations.

Common questions when auditing an AI:

How do we identify the important features behind a decision?

Which features could count as discrimination if a decision is based on them?

e.g. gender, race, age

How do we make sure the AI does not contain any hidden bias?

And so on.
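One common way to approach the hidden-bias question is to compare outcomes across groups, since a model can be biased without ever reading the protected attribute directly, for example through a proxy such as a postcode. The sketch below computes per-group approval rates and the demographic parity difference (largest minus smallest rate). This is just one of several fairness metrics, and the data, function names, and proxy model are all hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


def approval_rates_by_group(
    model: Callable[[Dict[str, Any]], bool],
    records: List[Dict[str, Any]],
    group_key: str,
) -> Dict[Any, float]:
    """Share of positive decisions for each value of a protected attribute."""
    totals: Dict[Any, int] = defaultdict(int)
    approvals: Dict[Any, int] = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        approvals[group] += int(model(record))
    return {group: approvals[group] / totals[group] for group in totals}


def parity_gap(rates: Dict[Any, float]) -> float:
    # Demographic parity difference: largest minus smallest group approval rate.
    return max(rates.values()) - min(rates.values())


def proxy_model(record: Dict[str, Any]) -> bool:
    # Never reads "gender", but in this toy data "postcode" correlates with it.
    return record["postcode"] == "A"


records = [
    {"postcode": "A", "gender": "male"},
    {"postcode": "A", "gender": "male"},
    {"postcode": "A", "gender": "female"},
    {"postcode": "B", "gender": "female"},
]

rates = approval_rates_by_group(proxy_model, records, "gender")
print(rates)              # {'male': 1.0, 'female': 0.5}
print(parity_gap(rates))  # 0.5
```

Note that the counterfactual flip test would miss this model entirely, because changing `gender` alone never changes its output. That is part of why domain experts are needed to decide which checks matter for a given AI.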

It may be possible for auditable AI to be run by some type of industry board, so that the industry acts as its own regulator. The board could set frameworks on how to craft auditable AI, drawing on people with domain knowledge. The people designing the audited AI could then keep those ideas and metrics in mind when developing the AI in the first place.

Auditable AI run by a third-party group could work as some type of oversight board or regulator before important AI gets released to the public.

It is a good idea to do regular audits on the AI after its release, as new data will have been incorporated into the AI, which may affect its fairness.
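A recurring audit could reuse the same fairness metric and compare it against the result recorded at release. The sketch below assumes a hypothetical `fairness_regressed` check, a made-up tolerance of 0.05, and invented approval rates; a real audit would set these thresholds with domain experts.

```python
from typing import Dict


def fairness_regressed(
    baseline_rates: Dict[str, float],
    current_rates: Dict[str, float],
    tolerance: float = 0.05,  # hypothetical threshold, not from any standard
) -> bool:
    """Flag a re-audit failure if the gap between groups widened beyond tolerance."""

    def gap(rates: Dict[str, float]) -> float:
        return max(rates.values()) - min(rates.values())

    return gap(current_rates) - gap(baseline_rates) > tolerance


# Hypothetical numbers: approval rates at release vs. after retraining on new data.
at_release = {"male": 0.62, "female": 0.60}
after_update = {"male": 0.70, "female": 0.55}
print(fairness_regressed(at_release, after_update))  # True
```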

Auditable AI is a good step, but not the only step

I think most of the value comes from the new frameworks overseeing how we implement AI in many important areas. Auditable AI is simply a tool to help with that problem.

In some places, auditable AI tools will likely stay internal. I can't imagine the military opening up their AI tools to the public. But it will be useful for the army to check that its AI makes good decisions, like working out what triggers a drone to label an object as an enemy target.

Auditable AI may simply be a tool for debugging AI. Don't get me wrong, that is a great thing, and something we all need. But it may not be earth-shattering.

And as many people find out when dealing with large corporations or bodies like governments, they may drop your report and continue what they were doing anyway. A company saying it is opening its AI to third-party scrutiny is great PR-wise. But will it be willing to make the hard decisions when the auditable AI says its AI has a major bias, and fixing that will cause a serious drop in revenue?

Will the company act?

Auditable AI is a great tool that we should develop and look into. But it will not solve all our ethical problems with AI.

If you want to learn more about the intersection between society and tech, check out my mailing list.

Can Auditable AI Improve Fairness in Models? was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.