Spaceship Titanic- A Machine Learning Project-I for Beginners

Last Updated on February 7, 2024 by Editorial Team

Author(s): Kamireddy Mahendra

Originally published on Towards AI.

“Exploration in space is the testament to human curiosity reaching for the stars.”

Spaceship Titanic — A Machine Learning Project

It is an innovative venture that combines cutting-edge technology with the boundless possibilities of space exploration.

Through the integration of machine learning algorithms, this project aims to enhance the capabilities of the Spaceship Titanic, empowering it to navigate the cosmos with unprecedented efficiency and accuracy.

This project is considered from Kaggle competitions for knowledge gaining for those who start their journey in machine learning.

Click Here to see the project details.

Context & Problem Statement from the Kaggle website:

Welcome to the year 2912, where your data science skills are needed to solve a cosmic mystery. We’ve received a transmission from four lightyears away and things aren’t looking good.

The Spaceship Titanic was an interstellar passenger liner launched a month ago. With almost 13,000 passengers on board, the vessel set out on its maiden voyage, transporting emigrants from our solar system to three newly habitable exoplanets orbiting nearby stars.

While rounding Alpha Centauri en route to its first destination — the torrid 55 Cancri E — the unwary Spaceship Titanic collided with a spacetime anomaly hidden within a dust cloud. Sadly, it met a fate similar to its namesake from 1000 years before. Though the ship stayed intact, almost half of the passengers were transported to an alternate dimension!

To help rescue crews retrieve the lost passengers, you are challenged to predict which passengers were transported by the anomaly using records recovered from the spaceship’s damaged computer system.

solution

This is a classification problem i.e. we need to find whether the passenger was transported or not.

Here we can use different algorithms related to classification problems.

let’s explore the data first.

import numpy as np

import pandas as pd

data=pd.read_csv('/kaggle/input/spaceship-titanic/train.csv')

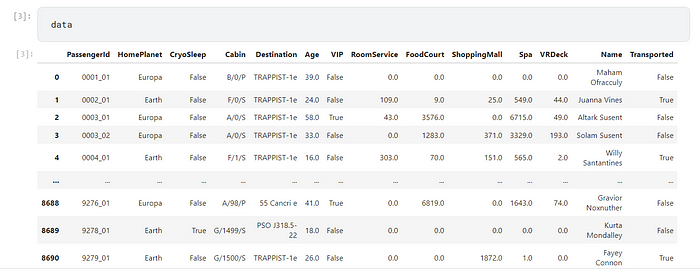

data

data.shape

data.columns

data.describe()

data.isnull().sum()

One thing we should remember there is no right and wrong in machine learning solutions. all we get is accurate or not accurate solutions.

It is always preferred to good results, as we prepared good models that will give good results anyway.

We iterate through different models and where we get good results that we treat as good solutions. I hope you know that all those things will be measured by different metrics.

While preparing models, it is our choice to choose features. based on them the model will be designed. If we consider more features that will give good accuracy, that also leads to overfitting. I hope you are aware of all the underlying concepts.

features=['HomePlanet', 'CryoSleep', 'Destination', 'Age',

'VIP', 'RoomService', 'FoodCourt', 'ShoppingMall', 'Spa', 'VRDeck']

train_data=data.drop('Transported', axis=1)

train_data

train_data=train_data[features]

train_data

y_train=data['Transported']

y_train

x_train.shape

x_val.shape

y_train.shape

y_val.shape

we import the necessary algorithms and techniques from Sci-kit Learn. as you see here in the code below. we trained the model using training data, and we can test with validation data, and we can find all metrics to make sure our model is accurate enough to predict test results accurately.

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

x_train, x_val, y_train, y_val=train_test_split(train_data, y_train, train_size=0.9, test_size=0.1, random_state=1)

d_model=DecisionTreeClassifier(random_state=1)

r_model=RandomForestClassifier(n_estimators=500, max_depth=9, random_state=1)

lr_model=LogisticRegression()

d_model=d_model.fit(x_train,y_train)

r_model=r_model.fit(x_train,y_train)

lr_model=lr_model.fit(x_train,y_train)

dpred=d_model.predict(x_val)

rpred=r_model.predict(x_val)

lrpred=lr_model.predict(x_val)

Finally, we will predict the test data with our prepared models and we can check which one gives accurate results i have attached that image below.

We can observe different metrics using all three models we used to predict. Both random forest and Logistic regression metrics are almost the same.

test_data=pd.read_csv("/kaggle/input/spaceship-titanic/test.csv")

test=test_data[features]

test=pd.get_dummies(test).fillna(0)

test

dpred=d_model.predict(test)

rpred=r_model.predict(test)

lrpred=lr_model.predict(test)

dpred

output=pd.DataFrame({'PassengerId': test_data.PassengerId, 'Transported':dpred})

output.to_csv("submission3.csv",index=False)

output2=pd.DataFrame({'PassengerId': test_data.PassengerId, 'Transported':rpred})

output2.to_csv('submission4.csv',index=False)

lrpred

output3=pd.DataFrame({'PassengerId': test_data.PassengerId, 'Transported':lrpred})

output3.to_csv('submission5.csv',index=False)

I have used three models. Decision tree classifier, random forest classifier, and logistic regressor.

In my first iteration, I used a few features, and I got a public score of 69. I realize that the model is underfitted. Then I used more features with different models then the public score started increasing. finally, for the logistic regressor, the public score becomes 78.7. i.e., is the highest score till now. Many optimizations are possible to improve the score up to 1.

I hope this article helps you to add a bit of knowledge about the machine learning project and algorithms to prepare models and predict results.

Kindly Support me by clapping and providing feedback, which helps me to work on delivering quality content and gives me motivation to move forward. Follow me to catch any updates from me.

Thank you:)

Reference: Kaggle

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.