Imbalanced Classification

Last Updated on January 18, 2021 by Editorial Team

Author(s): Nithin Raj Anantha

Data Science

What is Imbalanced Classification and Why you should be wary of it?

Consider this, you are modeling a classification problem to detect if the patient does or does not have cancer. You test it on your validation data and get an accuracy of 99%, Incredible, you think. But it seems too good to be true, so you decide to dig a little deeper, that’s when you find out that your data had only 5% of the people that had cancer.

So, your model is biased towards people who don’t have cancer and is most likely to predict the same, more often than not irrespective of their medical condition. This is the case of Imbalanced Classification.

Accuracy paradox

So, if there is one thing that we learned from the above example it is that accuracy is not a reliable metric for predictive models when used for classification problems. This is because, in classification problems, we’re typically more concerned about the errors that we make and the target class is usually the area of interest that we’re trying to focus on. This is called the accuracy paradox. So, to overcome this we turn to some other reliable metrics known as Precision and recall, which can be calculated from a confusion matrix.

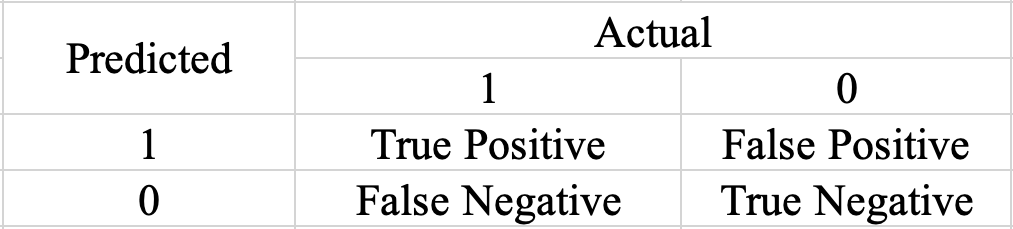

Confusion Matrix:

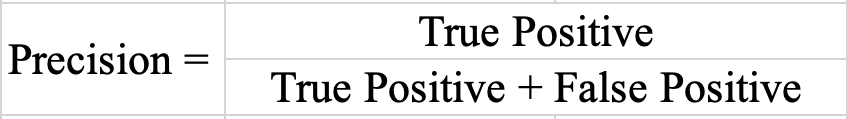

Precision: Of all the patients that we predicted; what fraction actually has cancer

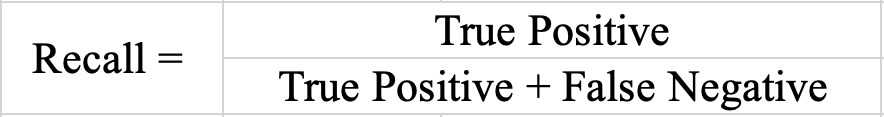

Recall: Of all the patients that have cancer, what fraction did we predict to have cancer

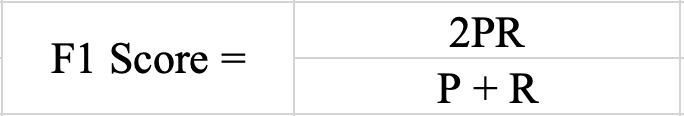

F1 score: This is useful while comparing multiple models with varying precision and recall values.

So, with the models having high precision and recall you are much more confident about your prediction.

Why does it occur?

Data imbalance is a common scenario we observe in our daily lives. Below stated are some of the reasons why that’s the case:

Biased Sampling: The data collection was not at all random. This can be due to the fact that data collection was focused only on a narrow geographical region, time frame, etc. For example, you went out to conduct a survey to know which baseball team has the most fans Red Sox or the Yankees in Boston, and you found out that Red Sox’s fanbase is downright dominating the records. But this is because of the biased sampling that occurs due to the narrow scope of geography.

Measurement Errors: This problem arises from the errors made in data collection. These errors include applying the wrong class at the time of data entry or a case of damage or impairment to the data to cause an imbalance.

Problem domain: The training data might not be a good representation of the problem we are dealing with. Sometimes it may be because the process that generates data may do it quickly and easily for one class but takes more time, cost, or computational power for generating the other. So, it becomes relatively difficult to generate data for the other class.

Real-world scenarios:

You can find these types of imbalances in data-intensive applications ranging from civilian applications to security and defense-related applications. Below are examples of problem domains that are inherently imbalanced.

- Claim Prediction

- Fraud Detection

- Loan Default Prediction

- Churn Prediction

- Spam Detection

- Anomaly Detection

- Outlier Detection

- Intrusion Detection

- Conversion Prediction

Each of the above-stated cases has its own specific problems for the imbalance in their data and we can also note that their minority classes are very rare in occurrence as well, So it is hard to predict them with very little data.

Solution:

You can always consider a slight imbalance to be a no big deal, like a ratio of 4:6 between the two classes is fine, but the problem is instigated when that ratio starts to drift away in one specific direction.

Most of the standard ML algorithms assume an equal distribution of classes, so minority classes are not modeled properly. Imbalanced classification is yet a problem to be solved completely, but in the meanwhile, you could consider the following methods to solve the issue:

Collecting more data: This practice is often underrated but if you have a chance to gather more data then you should definitely consider doing it as it could bring in some balanced perspective to the classes.

Resampling: We can basically restructure the data to restore it into balance. This can be done in two ways:

- Oversampling: Making copies of instances from the Minority class

- Under-sampling: Deleting the instances from the Majority class

Synthetic data: You can use algorithms to generate synthetic samples as in the data that is acquired by randomly sampling the attributes from the instances in the Minority class. One of the most popular methods is SMOTE which elaborates to be Synthetic Minority Oversampling technique. This is an oversampling technique by name but instead of just duplicating the values it creates synthetic samples. But we need to keep in mind that by doing this the non-linear relationships between the attributes may not be preserved.

Threshold: We generally categorize our classes based on the probability threshold, we say it to be in class 1 if P>0.5, or else it is in class 0 otherwise. We can consider tuning the probability threshold to our convenience which indirectly impacts our precision and recall.

- Suppose we increase the threshold to be 0.9, we can confidently tell the small set of patients they do have cancer, so this increases precision

- But conversely, if we decrease the threshold to 0.2, we can account for more patients who might have cancer, we are not entirely sure but this is a risk worth considering, so this increases the recall.

You need to tune the threshold to suit your dataset and find a good precision/recall tradeoff to get the best results.

Regularization or penalization: You can add additional costs to the classifier for not considering the minority class. This shifts the attention towards the minority class as it adds bias to the model.

Well, that’s just a drop in the ocean and you can try many more creative and innovative ideas to eliminate the problem of imbalanced classification. Just keep in mind that you cannot know for sure which method is going to work best for your dataset, so just ‘be the scientist’ and proceed with a trial and error method to find the best one that suits your scenario.

Fun fact: Many people confuse Imbalance with Unbalance, but they are relatively different. Unbalanced classification refers to the class that was balanced once but is not anymore, whereas imbalance refers to the class that is inherently not balanced.

There you go, now you have a solid understanding of what imbalanced classification is and how it can cause some serious misinterpretations with skewed results. You have also learned how to combat this issue which will help you build a better model.

Thank you for taking the time and reading this article. Do share your thoughts regarding this!! Until next time, Bye.

Peace ✌️

References:

Randy Lao. “Machine Learning: Accuracy Paradox.” LinkedIn, www.linkedin.com/pulse/machine-learning-accuracy-paradox-randy-lao/.

Brownlee, Jason. “A Gentle Introduction to Imbalanced Classification.” Machine Learning Mastery, 14 Jan. 2020, machinelearningmastery.com/what-is-imbalanced-classification/.

Brownlee, Jason. “8 Tactics to Combat Imbalanced Classes in Your Machine Learning Dataset.” Machine Learning Mastery, 15 Aug. 2020, machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/.

Imbalanced Classification was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.