AI Image Fusion and DGX GH200

Last Updated on November 6, 2023 by Editorial Team

Author(s): Luhui Hu

Originally published on Towards AI.

In Computer Vision (CV), the ability to stitch partial images together and measure dimensions within them is more than an advanced trick; it is a vital skill. Whether you're creating a panoramic view on your smartphone, measuring the distance between objects in a surveillance video, or analyzing scientific images, stitching and measuring both play a crucial role. This article aims to demystify these two aspects of CV. It then turns to the cloud AI infrastructure that powers such work: NVIDIA's data-center AI powerhouse, the DGX GH200.

The Art of Stitching

Image stitching isn't just an algorithmic challenge; it's an art form. Stitching algorithms strive to combine multiple images seamlessly into a single, expansive output, free from seams, distortion, and color inconsistency. Open-source methods range in complexity from traditional feature-matching algorithms like SIFT and SURF to deep learning models like DeepStitch.
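Many feature-based stitchers bring one image into another's frame with a 3×3 projective transform (a homography) estimated from matched keypoints. The following is a minimal pure-Python sketch of that estimation step. It assumes exactly four clean correspondences with no three points collinear and fixes h33 = 1. The RANSAC loop that real libraries run over many noisy matches is deliberately omitted, and the function names are illustrative only.

```python
"""Sketch: estimate a planar homography from four point correspondences.

Assumptions (illustrative, not any library's API): exactly four matches,
no three collinear, h33 fixed to 1, and no outlier rejection (no RANSAC).
"""


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix so row operations update the right-hand side too.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src, dst):
    """Return a 3x3 H (with h33 = 1) mapping each src (x, y) to dst (u, v)."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(h, point):
    """Map (x, y) through H, dividing by the projective coordinate w."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

For example, mapping the unit square onto a copy shifted by (10, 5) recovers a pure translation, so `apply_homography(H, (0.5, 0.5))` lands at about (10.5, 5.5). In practice you would normally call a library routine such as OpenCV's `cv2.findHomography` with the `cv2.RANSAC` method, which adds the outlier rejection this sketch leaves out.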

Traditional vs. Deep Learning

- Traditional Methods: Algorithms like SIFT (Scale-Invariant Feature Transform) and SURF (Speeded-Up Robust Features) use key points and descriptors to find overlapping regions between images. These methods are fast and work well for simple use cases, but they can struggle in more complex scenes, such as those with little texture, repetitive patterns, or strong parallax.

- Deep Learning Models: Solutions like DeepStitch go further, using neural networks to find optimal stitching points. This can yield higher accuracy, especially in complex scenes.
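The descriptor matching behind the traditional approach can be sketched in plain Python. This is an illustrative brute-force matcher, not any library's implementation. It assumes descriptors have already been extracted as equal-length float lists, such as 128-D SIFT vectors. It also applies Lowe's ratio test with a 0.75 threshold, a value common in tutorials; Lowe's original paper used 0.8.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    desc_a, desc_b: lists of equal-length float vectors (assumed already
    extracted, e.g. SIFT descriptors). Returns (index_in_a, index_in_b) pairs.
    Runs in O(len(desc_a) * len(desc_b)).
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs a second-nearest neighbour
    for i, d in enumerate(desc_a):
        # Euclidean distance from d to every descriptor in the other image.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (best, j_best), (second, _) = dists[0], dists[1]
        # Keep the match only if it clearly beats the runner-up; ambiguous
        # matches (e.g. from repetitive texture) are discarded.
        if best < ratio * second:
            matches.append((i, j_best))
    return matches
```

The ratio test is what keeps repetitive patterns from producing confident but wrong overlaps. With OpenCV, the equivalent is usually `cv2.BFMatcher().knnMatch(des1, des2, k=2)` followed by the same ratio check.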

Below are the available open-source algorithms and libraries for image stitching and panoramas.

Measuring in a 2D World

Image stitching allows us to expand our visual horizon, but what about understanding the world within that field of vision? That’s where image measuring comes in. From using simple Euclidean distance calculations in a calibrated setup to leveraging deep learning models that can identify and measure objects, the techniques are diverse.
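In its simplest form, the "calibrated setup" case reduces to a scale factor. The following minimal sketch rests on several assumptions. The camera faces the measured plane head-on, lens distortion is negligible, and a reference object of known size lies in the same plane as the points being measured. The function names and numbers are hypothetical.

```python
import math


def pixels_per_unit(ref_width_px, ref_width_real):
    """Scale factor derived from a reference object of known real-world width."""
    return ref_width_px / ref_width_real


def real_distance(p1, p2, scale):
    """Euclidean pixel distance between two points, converted to real units.

    Only meaningful if both points lie (roughly) in the reference object's
    plane and the image is free of significant lens distortion.
    """
    return math.dist(p1, p2) / scale


# Hypothetical example: a 50 mm reference object spans 125 px (2.5 px/mm),
# so two points 500 px apart are about 200 mm apart.
scale = pixels_per_unit(125, 50)
print(real_distance((100, 100), (400, 500), scale))  # 200.0
```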

Simple to Complex

- Calibration Methods: Techniques like camera calibration provide a way to relate pixel dimensions to real-world dimensions. Once calibrated, even simple geometric formulas can yield accurate measurements.

- Object Detection and Tracking: Deep learning models like YOLO or SSD are proficient at identifying objects in both images and real-time videos, paving the way for automated measuring.
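The two bullets combine naturally. Calibration supplies the focal lengths in pixels, a detector such as YOLO or SSD supplies a bounding box, and the pinhole camera model turns box size into physical size. The following is a minimal sketch under these assumptions: the object's distance from the camera is already known (for example, from a depth sensor), and the object faces the camera roughly square-on. The `Box` type is hypothetical and does not reflect any detector's actual output format.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detector output in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def object_size(box, depth_m, fx_px, fy_px):
    """Estimate an object's real-world (width, height) in metres.

    Pinhole model: size_real = size_px * depth / focal_length_px, where
    fx_px and fy_px are focal lengths from camera calibration (the intrinsic
    matrix K), and depth_m is assumed known rather than estimated here.
    """
    width_px = box.x_max - box.x_min
    height_px = box.y_max - box.y_min
    return width_px * depth_m / fx_px, height_px * depth_m / fy_px


# A 200 x 400 px box, 5 m away, with fx = fy = 1000 px -> about 1 m x 2 m.
print(object_size(Box(100, 50, 300, 450), 5.0, 1000.0, 1000.0))  # (1.0, 2.0)
```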

Below are the available open-source methods for measuring and photogrammetry.

Stitching and Measuring: Two Sides of the Same Coin

You might wonder why we’re discussing both stitching and measuring together. The reason is they often go hand-in-hand. For example, in surveillance applications, a stitched panoramic view of a location can be used to track and measure the distance between multiple targets accurately. In medical imaging, stitched images from different angles can provide a more comprehensive view, facilitating more precise measurements.
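In the surveillance example, measuring inside a stitched view typically means first projecting image points onto a common ground plane. The following minimal sketch assumes a flat floor and an image-to-ground homography that has already been estimated, for instance from four floor markers at surveyed positions. `H_GROUND` is a made-up placeholder, not real calibration data. For each target, the sketch uses the bottom-centre ("foot point") of its bounding box, a common but assumed convention.

```python
import math


def to_ground(h, point):
    """Project an image point onto the ground plane with a 3x3 homography."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def ground_distance(h, foot1, foot2):
    """Real-world distance between two targets' foot points in the image."""
    return math.dist(to_ground(h, foot1), to_ground(h, foot2))


# Hypothetical placeholder: 1 cm per pixel with no perspective term. A real
# homography estimated from floor markers would have a non-zero bottom row.
H_GROUND = [[0.01, 0.0, 0.0],
            [0.0, 0.01, 0.0],
            [0.0, 0.0, 1.0]]

print(ground_distance(H_GROUND, (100, 200), (400, 600)))  # about 5.0 (metres)
```

Working in ground-plane coordinates, rather than in raw pixels, is what keeps distances comparable across the panorama: with a real perspective homography, the same pixel gap covers more metres near the horizon than near the camera.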

TL;DR for CV Pixels

Whether you’re a hobbyist, a researcher, or someone intrigued by the applications of CV, both stitching and measuring are integral techniques to understand. While traditional algorithms offer a quick and straightforward approach, the advent of deep learning has opened the door to unprecedented levels of accuracy and complexity. It’s a thrilling time to delve into the world of CV, where the boundary between the pixel and the panorama continues to blur, offering us a clearer view of the bigger picture.

The field is advancing at a rapid pace, and it’s crucial to stay updated with the latest algorithms and methodologies. So go ahead, stitch your way through panoramas, and measure your world, one pixel at a time!

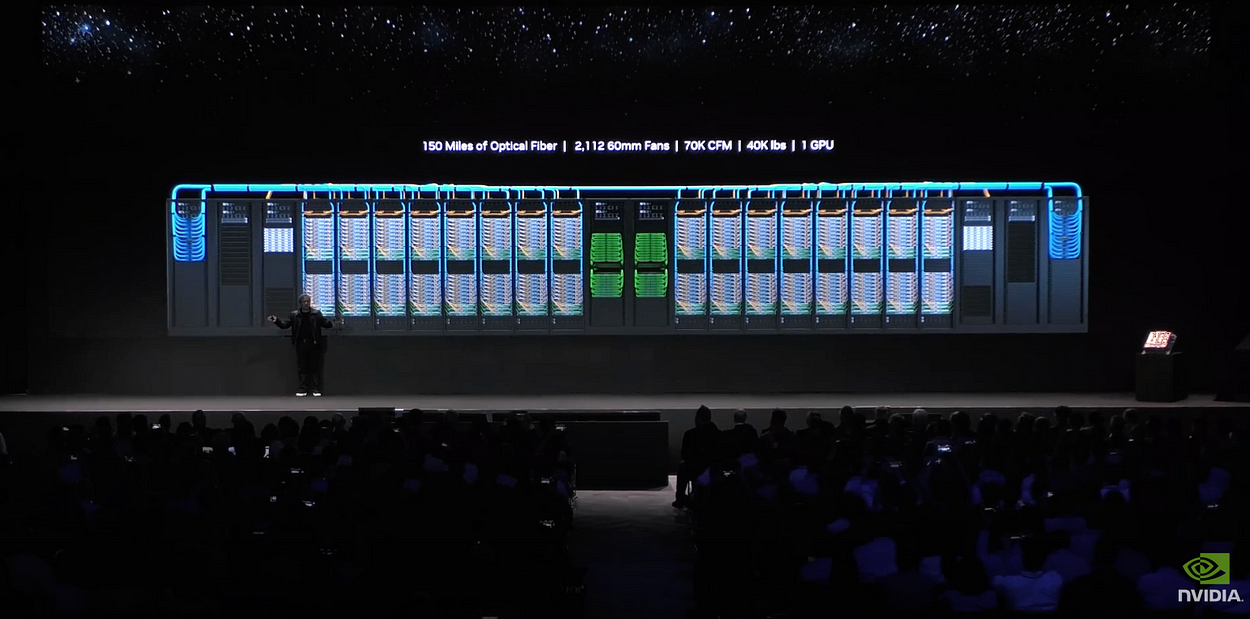

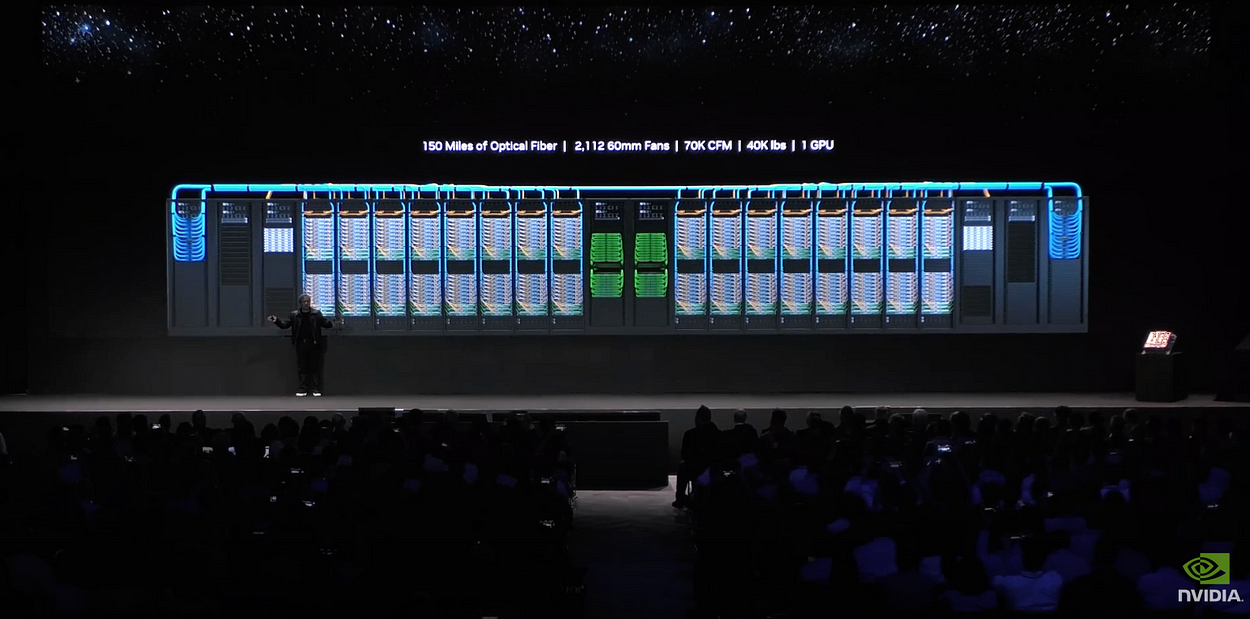

AI Giant Foundation: DGX GH200

Having looked at AI/CV techniques for advanced stitching and measuring, we turn to the foundational role of GPUs in powering such AI-driven solutions. In this transformative age of AI, Nvidia's DGX GH200 AI Supercomputer stands as a monumental advance. NVIDIA presents this computational behemoth, which weighs about as much as four elephants, as a single giant GPU, and it redefines what's possible.

It is far more than just a large machine: it offers a stunning 144TB of shared memory across 256 NVIDIA Grace Hopper Superchips (GH200), nearly 500x more memory than a single previous-generation DGX A100 system. That headroom lets developers build complex, large-scale models to tackle today's most challenging problems. Truly, it's not just a machine, but the future of AI materialized.
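The headline memory figure can be reproduced with simple arithmetic. This sketch assumes the following per-superchip figures, taken from NVIDIA's published GH200 specifications: 480 GB of LPDDR5X attached to the Grace CPU and 96 GB of HBM3 on the Hopper GPU. It further assumes a baseline of a DGX A100 with 320 GB of GPU memory (8 × 40 GB). The assumptions are labelled in the code as well.

```python
# Assumed per-superchip memory (NVIDIA's published GH200 figures, as used in
# the DGX GH200): 480 GB LPDDR5X on Grace + 96 GB HBM3 on Hopper.
GRACE_LPDDR5X_GB = 480
HOPPER_HBM3_GB = 96
SUPERCHIPS = 256
DGX_A100_GB = 320  # assumed baseline: DGX A100 with 8 x 40 GB A100 GPUs

total_gb = SUPERCHIPS * (GRACE_LPDDR5X_GB + HOPPER_HBM3_GB)
# The "144TB" figure falls out exactly when TB is read as 1024 GB.
total_tb = total_gb / 1024
ratio = total_gb / DGX_A100_GB

print(f"{total_gb} GB = {total_tb:.0f} TB")  # 147456 GB = 144 TB
print(f"{ratio:.1f}x a DGX A100 320 GB")     # 460.8x, i.e. "nearly 500x"
```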

Towards GH200

The NVIDIA GH200 Grace Hopper Superchip combines a Grace CPU and a Hopper (H100) GPU over NVLink-C2C, offering a coherent CPU+GPU memory model optimized for AI and HPC applications. The H100 Tensor Core GPU, NVIDIA's ninth-generation data center GPU, introduces a new Transformer Engine. NVIDIA rates it at up to 9X faster AI training and up to 30X faster AI inference on large language models compared to its predecessor, the A100.

In a strategic rebranding move in May 2020, Nvidia renamed its Tesla GPGPU line to Nvidia Data Center GPUs to avoid brand confusion with Tesla automobiles. These GPUs originally competed with AMD's Radeon Instinct and Intel's Xeon Phi, supported CUDA or OpenCL programming, and were pivotal in deep learning and other compute-intensive workloads.

Spanning ten generations, each with distinct micro-architectures — Tesla, Fermi, Kepler, Maxwell, Pascal (P100), Volta (V100), Turing (T4), Ampere (A100, A40), Hopper (H100), and Ada Lovelace (L40) — Nvidia’s data center GPUs have consistently pushed the envelope in deep learning and scientific computing.

DGX GH200 vs GH200 vs H100

- What's the difference between a DGX GH200, a GH200, and an H100? (gpus.llm-utils.org)
- NVIDIA Announces DGX GH200 AI Supercomputer (nvidianews.nvidia.com)
- NVIDIA Grace Hopper Superchip (www.nvidia.com)
- NVIDIA Grace Hopper Superchip Data Sheet (resources.nvidia.com)
- Nvidia Tesla – Wikipedia (en.wikipedia.org)
- NVIDIA Supercomputing Solutions (www.nvidia.com)

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.