The Cost Trap of AI Agents

Last Updated on November 18, 2024 by Editorial Team

Author(s): AI Rabbit

Originally published on Towards AI.

Have you ever wondered how multiple AI agents can interact seamlessly while keeping costs under control? When working with multiple AI agents in AutoGen, understanding and managing token consumption is critical to both cost optimisation and system performance. Let’s look at how tokens are consumed in different conversation patterns and explore some strategies for efficient token usage.

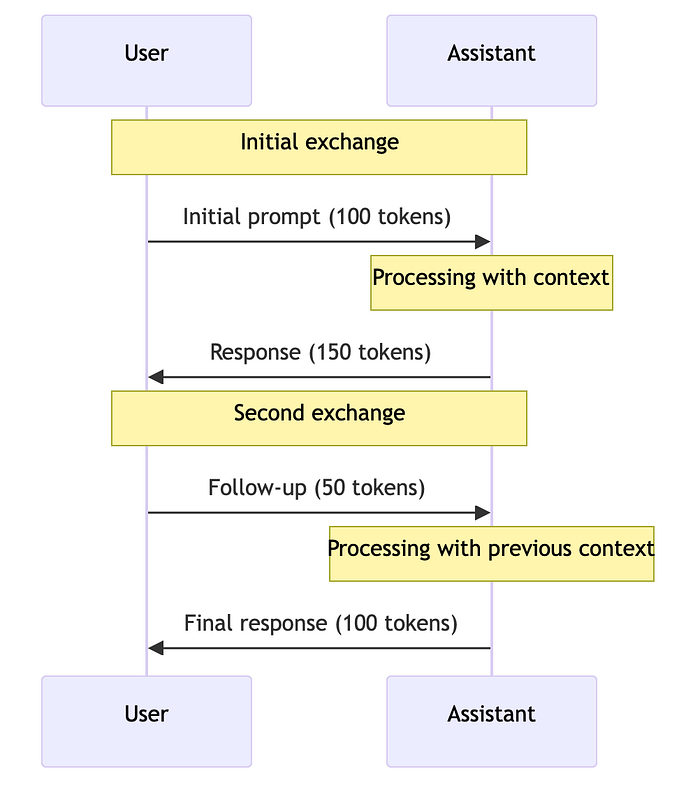

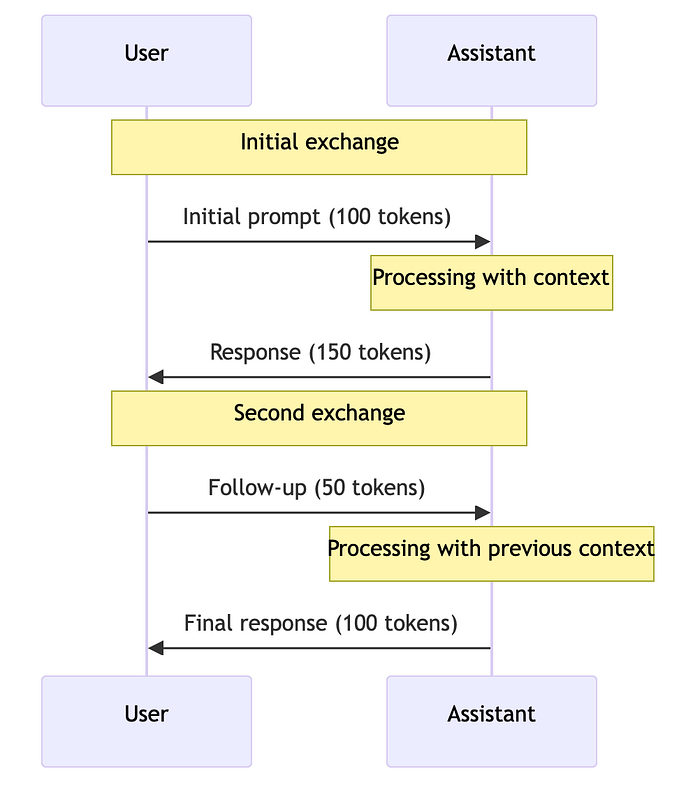

How do tokens add up in a simple interaction between you and an assistant?

Token accumulation:

- First exchange: 250 tokens (100 input + 150 output)
- Second exchange: 200 tokens (50 input + 100 output)
- Total: 450 tokens

Isn’t it fascinating how each interaction builds on the previous one?
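The arithmetic above can be checked with a small C# sketch. `Exchange` and `ChatTokenMath` are hypothetical names used only for this illustration. `TotalAsListed` counts tokens exactly as the example does. `TotalWithResentHistory` adds an assumption that is not part of the original figures: each request also re-sends the earlier conversation, as stateless chat-completion APIs generally require.

```csharp
using System.Collections.Generic;
using System.Linq;

// One user -> assistant exchange: tokens sent in and tokens generated.
public record Exchange(int InputTokens, int OutputTokens)
{
    public int Total => InputTokens + OutputTokens;
}

// Hypothetical helper for illustration only.
public static class ChatTokenMath
{
    // Counting as in the example: each exchange contributes only its own input and output.
    public static int TotalAsListed(IReadOnlyList<Exchange> exchanges) =>
        exchanges.Sum(e => e.Total);

    // Assumption (not from the article): every request also re-sends all earlier
    // inputs and outputs as history before the new message.
    public static int TotalWithResentHistory(IReadOnlyList<Exchange> exchanges)
    {
        int history = 0; // tokens already in the conversation before this exchange
        int total = 0;   // tokens processed across all requests
        foreach (var e in exchanges)
        {
            total += history + e.InputTokens + e.OutputTokens;
            history += e.InputTokens + e.OutputTokens;
        }
        return total;
    }
}
```

With the two exchanges above, `TotalAsListed` returns 450, matching the listed total. `TotalWithResentHistory` returns 650 because the second request would carry the first exchange's 250 tokens along with its own 50-token message.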

What happens when multiple agents join the conversation? Let’s have a look:

Token accumulation:

- Initial task: 100 tokens
- Agent1 processing: 220 tokens (100 input + 120 output)
- Agent2 processing: 370 tokens (220 input + 150 output)
- Agent3 processing: 470 tokens (370 input + 100 output)
- Final result: 550 tokens (470 input + 80 output)

Notice how quickly tokens accumulate in a group chat. Each agent reads everything written before it, so the conversation grows from 100 to 550 tokens in just four steps.
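The group-chat figures can be reproduced the same way. Like the list above, this sketch assumes that every step takes the whole conversation so far as input and appends its own output to it. It makes one further assumption: each call is billed for its full input plus its output. `GroupChatTokenMath.Simulate` is a hypothetical helper. Under that billing assumption, the 550-token figure is only the size of the final conversation. The total processed across all calls is much larger.

```csharp
using System.Collections.Generic;

// Hypothetical helper for illustration only.
public static class GroupChatTokenMath
{
    // Simulates a group chat in which each step reads the entire conversation so far
    // (its input) and appends its reply (its output) to that conversation.
    // Returns the conversation size after each step and the tokens processed by all calls.
    public static (List<int> ContextAfterStep, int TotalProcessed) Simulate(
        int initialTaskTokens, IEnumerable<int> outputTokensPerStep)
    {
        var contextAfterStep = new List<int>();
        int context = initialTaskTokens;
        int totalProcessed = 0;
        foreach (int output in outputTokensPerStep)
        {
            int input = context;               // the step re-reads everything so far
            totalProcessed += input + output;  // assumption: billed for input + output
            context = input + output;          // the reply becomes part of the conversation
            contextAfterStep.Add(context);
        }
        return (contextAfterStep, totalProcessed);
    }
}
```

Calling `Simulate(100, new[] { 120, 150, 100, 80 })` reproduces the sizes 220, 370, 470 and 550 from the list. It reports 1,610 tokens processed in total (220 + 370 + 470 + 550), roughly three times the size of the final conversation.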

But how do you keep track of all these tokens?

AutoGen provides a middleware-based approach to tracking token usage. Let’s explore a token counter middleware implementation:

```csharp
public class TokenCounterMiddleware : IMiddleware
{
    private readonly List<ChatCompletionResponse> …
```
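The listing above is truncated. The sketch below is a rough, self-contained illustration of the same middleware idea: a wrapper intercepts each agent reply, records its token usage, and passes the reply on unchanged. It is not AutoGen.Net's actual API. `TokenUsage`, `Reply`, `AgentCall` and `TokenCounterSketch` are hypothetical names invented for this example.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical stand-ins for illustration only; these are NOT AutoGen.Net types.
public record TokenUsage(int PromptTokens, int CompletionTokens);
public record Reply(string Text, TokenUsage Usage);

// Hypothetical: an "agent" is modelled as a function from the conversation so far to a reply.
public delegate Task<Reply> AgentCall(IReadOnlyList<string> messages);

// Middleware-style counter: wraps an agent call and records the usage of every reply.
// Not thread-safe; agents running concurrently would need a lock or a concurrent collection.
public class TokenCounterSketch
{
    private readonly List<TokenUsage> _usages = new List<TokenUsage>();

    public AgentCall Wrap(AgentCall inner) => async messages =>
    {
        Reply reply = await inner(messages); // let the wrapped agent produce its reply
        _usages.Add(reply.Usage);            // record what this call consumed
        return reply;                        // pass the reply through unchanged
    };

    public int PromptTokens => _usages.Sum(u => u.PromptTokens);
    public int CompletionTokens => _usages.Sum(u => u.CompletionTokens);
    public int TotalTokens => PromptTokens + CompletionTokens;
}
```

Wrapping the agent rather than modifying it keeps counting separate from reply generation. The same counter can wrap several agents in a group chat and report one combined total.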

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.