RAG for Beginners

Author(s): Omer Mahmood

Originally published on Towards AI.

Learn about Retrieval-Augmented Generation (RAG) and how it’s used in Generative AI applications

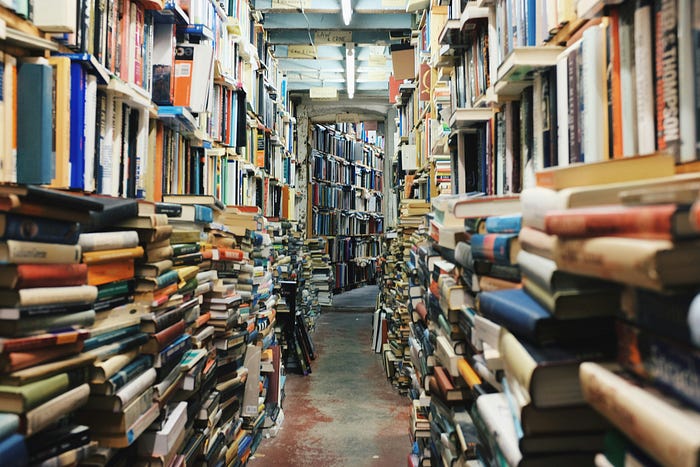

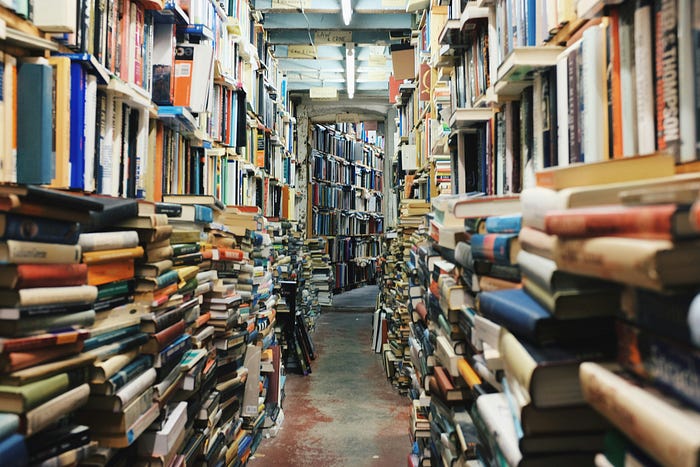

Photo by Glen Noble on Unsplash

If you’re a regular subscriber, or you found your way here from my last GenAI fundamentals post about “Getting started with Vector Databases”, 👋🏼 welcome!

✍🏼 Is there a fundamental GenAI topic you would like me to cover in a future post? Drop a comment below!

⏩ This time, we’re going to learn about Retrieval-Augmented Generation (RAG), an industry-standard technique that is commonly paired with a vector database (VectorDB) pipeline to produce better results in Large Language Model (LLM) use cases, without needing to retrain the underlying model.
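To make the idea concrete before we get to the analogy, here is a minimal sketch of the "retrieve, then augment" flow. It is not a production setup: a toy word-count `embed` function stands in for a real embedding model, a plain Python list stands in for the vector database, and the sample documents and the names `embed`, `retrieve`, and `build_prompt` are all hypothetical, made up for illustration. No LLM is called; the sketch only builds the augmented prompt you would send to one.

```python
import math
from collections import Counter

# Hypothetical knowledge base. A real system would store document
# embeddings in a vector database instead of a Python list.
DOCUMENTS = [
    "Employees are entitled to 25 days of paid leave per year.",
    "Expense claims must be submitted within 30 days of purchase.",
    "The office is closed on public holidays.",
]


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return Counter(w for w in words if w)


def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, embed(d)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, context_docs):
    """Augment the question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "How many days of paid leave do employees get?"
context_docs = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context_docs)
print(prompt)
```

The model itself is never retrained here. The only thing that changes is the prompt, which now carries the most relevant document alongside the question.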

During my research for this post, I came across a relatable analogy for the role RAG plays in generative AI-powered applications, such as LLM chatbots…

Imagine yourself in the scene of a New York City courtroom drama. Cases are brought in front of a judge, who will often decide the outcome based on their general understanding of the law.

Every now and again, there will be a case that requires specialist knowledge, such as an employment dispute or medical malpractice. In this instance, the judge will look to precedents (decisions made in previous similar cases) to help inform their judgment.

It is usually a court clerk who is responsible for… Read the full blog for free on Medium.

Note: Content contains the views of the contributing authors and not Towards AI.