Page by Page Review: Mixtral of Experts (8x7B)

Last Updated on January 11, 2024 by Editorial Team

Author(s): Dr. Mandar Karhade, MD. PhD.

Originally published on Towards AI.

A completely open-source model that could dominate the entrepreneurial scene in generative AI

TLDR:

Core points:

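- Mixtral is a Sparse Mixture of Experts (SMoE) model.
- It has 8 experts in every layer, each a feedforward block sized like the one in a 7B model. Because the attention weights are shared, the total is about 47B parameters rather than 8 × 7B = 56B.
- For every token, at each layer, a router picks 2 of the 8 experts and combines their outputs as a weighted sum, so the selected experts work together rather than competing.
- Every token can therefore draw on 47B parameters.
- Only about 13B parameters are active per token. This governs inference compute and speed; all 47B parameters still have to be held in memory, so VRAM requirements are those of a 47B model.
- It has a context size of 32K tokens and comes in 2 flavors: the base model and the fine-tuned Instruct model.
- The base Mixtral 8x7B outperforms or matches LLaMA-2 70B and GPT-3.5 across the evaluated benchmarks, and beats LLaMA-2 70B handily in maths, code generation, and multilingual tasks.
- The fine-tuned Instruct model surpasses GPT-3.5-Turbo, Claude-2.1, Gemini Pro, and the LLaMA-2 70B chat model on human evaluation benchmarks.
- It is completely open source (Apache 2.0).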
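The 47B total versus 13B active split follows directly from the layer shapes. Below is a back-of-the-envelope parameter count in Python. It assumes the hyperparameters listed in the Mixtral paper's architecture table (dim 4096, 32 layers, 32 query heads, 8 key/value heads, head dimension 128, expert hidden size 14336, vocabulary 32000), SwiGLU experts with three weight matrices each, no biases, and untied input/output embeddings. `MixtralConfig` and `count_params` are hypothetical names; treat this as a sketch for intuition, not an official count.

```python
from dataclasses import dataclass


@dataclass
class MixtralConfig:
    # Assumed Mixtral 8x7B hyperparameters (from the paper's architecture table)
    dim: int = 4096
    n_layers: int = 32
    head_dim: int = 128
    hidden_dim: int = 14336
    n_heads: int = 32
    n_kv_heads: int = 8
    vocab_size: int = 32000
    num_experts: int = 8
    top_k_experts: int = 2


def count_params(cfg: MixtralConfig, experts_used: int) -> int:
    # Attention: Q and O project dim <-> n_heads*head_dim;
    # K and V project dim -> n_kv_heads*head_dim (grouped-query attention)
    attn = (2 * cfg.dim * cfg.n_heads * cfg.head_dim
            + 2 * cfg.dim * cfg.n_kv_heads * cfg.head_dim)
    # Each expert is a SwiGLU feedforward block: three dim x hidden_dim matrices
    expert = 3 * cfg.dim * cfg.hidden_dim
    # The router is a single dim x num_experts matrix and always runs
    router = cfg.dim * cfg.num_experts
    # Two RMSNorm weight vectors per layer
    norms = 2 * cfg.dim
    per_layer = attn + experts_used * expert + router + norms
    # Untied token embedding and output head, plus the final RMSNorm
    embed = 2 * cfg.vocab_size * cfg.dim + cfg.dim
    return cfg.n_layers * per_layer + embed


cfg = MixtralConfig()
total = count_params(cfg, cfg.num_experts)     # every expert counted
active = count_params(cfg, cfg.top_k_experts)  # only the 2 routed experts
print(f"total  ~ {total / 1e9:.1f}B")              # -> total  ~ 46.7B
print(f"active ~ {active / 1e9:.1f}B")             # -> active ~ 12.9B
print(f"bf16 weights ~ {total * 2 / 1e9:.0f} GB")  # -> bf16 weights ~ 93 GB
```

Only the expert term scales with how many experts run, which is why the active count drops to under a third of the total while the full weight set (roughly 93 GB at 2 bytes per parameter) must still fit in memory.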

In short,

If you are an enthusiast, or serious about developing commercial applications, and have thought about using LLaMA-2 or have been pondering shelling out money to OpenAI or Anthropic, you should certainly stop and try Mixtral 8x7B first. You might end up keeping complete control over your IP and the model! Cheers to my entrepreneurial friends and to those who are keen on dabbling in new things.

Mixtral is based on a transformer architecture

Mixtral is a Transformer in which every feedforward block is replaced by a Mixture-of-Experts (MoE) layer. It also supports a fully dense context length of 32k tokens.
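To make the MoE layer concrete, here is a minimal pure-Python sketch of top-2 routing: the router scores every expert, the two highest scores are softmax-normalized, and the output is the weighted sum of just those two experts' outputs. The toy "experts" (which simply scale their input) stand in for Mixtral's SwiGLU feedforward blocks, and `matvec`, `top_k_gating`, and `moe_layer` are hypothetical helper names, not any library's API.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def matvec(w: List[Vector], x: Vector) -> Vector:
    # w is stored row-major as [out_dim][in_dim]
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def top_k_gating(logits: Vector, k: int = 2) -> List[Tuple[int, float]]:
    # Keep the k largest router logits, then softmax over only those k,
    # so every unselected expert gets exactly zero weight.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    z = sum(exps)
    return [(i, e / z) for i, e in zip(top, exps)]


def moe_layer(x: Vector, router_w: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    # One router logit per expert
    logits = matvec(router_w, x)
    out = [0.0] * len(x)
    for i, weight in top_k_gating(logits, k):
        y = experts[i](x)  # only the k selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Toy usage: 4 hypothetical "experts" that just scale their input
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router_w = [[0.1, 0.0], [2.0, 0.0], [-1.0, 0.0], [1.0, 0.0]]
print(top_k_gating(matvec(router_w, [1.0, 0.0])))  # experts 1 and 3 are selected
print(moe_layer([1.0, 0.0], router_w, experts))
```

In Mixtral this routing decision is made independently for every token at every layer, so different tokens (and different layers) can use different pairs of experts.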

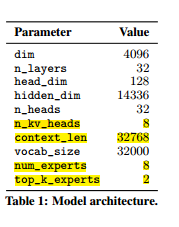

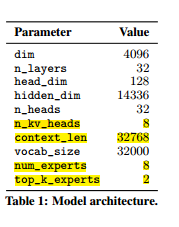

The model architecture summary includes 8 experts with 2 experts…
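The attention hyperparameters also determine what the fully dense 32k context costs at inference time. The sketch below estimates the key/value cache size, assuming the values reported in the Mixtral paper (32 layers, 8 key/value heads shared by 32 query heads, head dimension 128), a bf16 cache (2 bytes per value), and a batch of one sequence. `kv_cache_bytes` is a hypothetical helper, and the result covers the cache only, not the model weights or activations.

```python
def kv_cache_bytes(seq_len: int, n_layers: int = 32, n_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2,
                   batch: int = 1) -> int:
    # Per token and per layer, the cache stores one key vector and one value
    # vector for each KV head (grouped-query attention shares them across
    # the query heads), so the factor 2 accounts for K and V.
    return batch * seq_len * n_layers * 2 * n_kv_heads * head_dim * bytes_per_value


GIB = 1024 ** 3
for seq_len in (4096, 32768):
    print(f"{seq_len:>6} tokens -> {kv_cache_bytes(seq_len) / GIB:.2f} GiB")
# ->   4096 tokens -> 0.50 GiB
# ->  32768 tokens -> 4.00 GiB
```

The cache grows linearly with sequence length, so a full 32k-token context costs about 4 GiB per sequence under these assumptions; with one KV head per query head (32 instead of 8) the same context would need four times as much.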


Published via Towards AI