OpenAI Blows Open the Gates to Enterprise AI

Last Updated on November 6, 2023 by Editorial Team

Author(s): Jorge Alcántara Barroso

Originally published on Towards AI.

Welcome to the future of enterprise solutions: a space being taken over by Artificial Intelligence (AI), and, more specifically, OpenAI’s new B2B focus.

With the explosion of tools and technologies, enterprises are riding a wave of innovation like never before. But as offerings proliferate and updates roll out at breakneck speed, it can be a dizzying challenge to navigate this landscape and decide what is right for your company.

That’s where this introduction comes in: it aims to be your compass in the AI jungle, walking you through the two most important changes that took place this summer and that are forcing the industry to reinvent how we think about AI SaaS in the workplace.

- Fine-Tuning for ChatGPT — Create a model you can chat with that knows about whatever you want to train it on.

- Enterprise ChatGPT — Private company deployments of ChatGPT with all the power offered to Plus users and more!

Read on to understand these seismic updates for what they are and what they’re not.

This isn’t just about staying updated with the latest trends; it’s about leveraging the pinnacle of what AI has to offer right now. And trust us, you don’t want to miss out on this ride. The latest updates leave no excuse for any company that was waiting to get onto this train. Even the most skeptical will be able to see why the combination of Enterprise-grade privacy and flexible customization is poised to finally take OpenAI on its path to positive revenue with an excellent product-market fit.

The Rise of Customizable AI Enterprise Tools

The game changed when OpenAI released Fine-Tuning for GPT-3.5 Turbo. If you thought AI was already making waves in the enterprise space, think again. This new capability brought something to the table that was previously only possible with smaller, open-source models: customization. But what does that mean for your enterprise, and why should you care? Because customization has proven to be the defining factor in performance and scalability when aiming for a specific use case.

GPT-3.5 Turbo and Fine-Tuning

GPT-3.5 Turbo isn’t just another pre-trained language model; it’s already prepared to follow instructions (that is, to work well as a chat agent). But now it can also be fine-tuned to a degree of specificity that previous iterations couldn’t touch. This essentially allows you to make the model “your own,” teaching it the nuances of your domain, the intricacies of your brand’s voice, or even the specific problem-solving strategies you require.

Recent publications from OpenAI show that a fine-tuned GPT-3.5 Turbo can deliver better results than GPT-4 on the use case it was tuned for. Furthermore, the cost per token of interacting with fine-tuned GPT-3.5 Turbo is 60–80% lower than that of GPT-4 (8k context window) calls.

Input and output tokens are also priced at different rates, which makes fine-tuned GPT-3.5 Turbo even more attractive for use cases where a short input and a long output are expected.

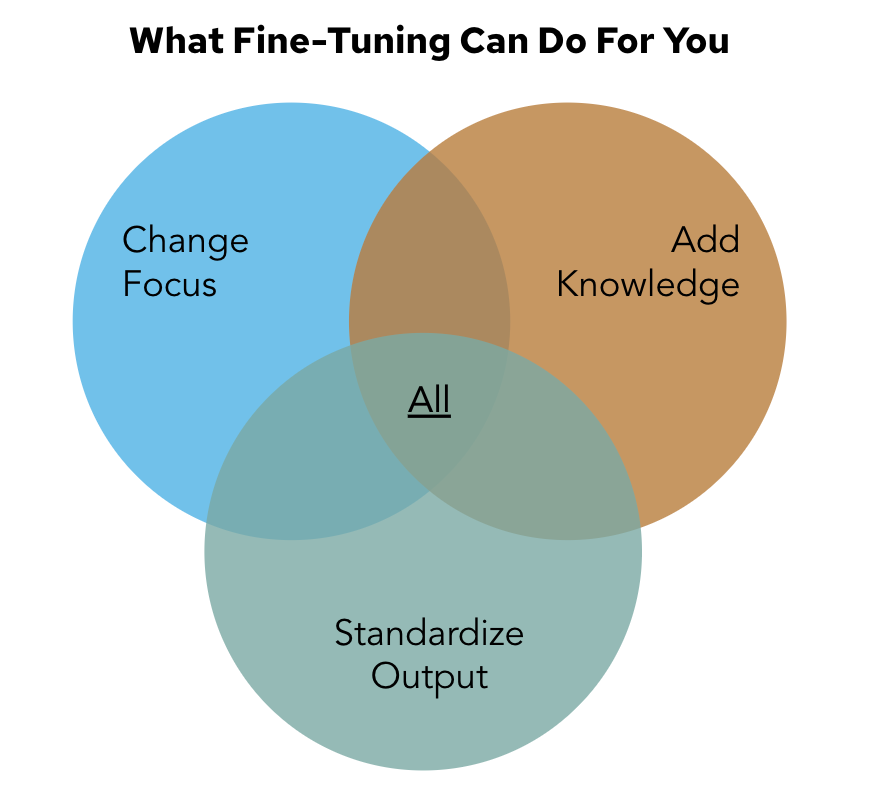
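To make the cost argument concrete, here is a small back-of-the-envelope sketch. The per-1,000-token prices in it are assumptions based on list prices around the time of writing (fine-tuned GPT-3.5 Turbo at $0.012 input and $0.016 output, GPT-4 8k at $0.03 input and $0.06 output); check the current pricing page before relying on them. The names `Pricing`, `request_cost`, and `savings` are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price in US dollars per 1,000 tokens (assumed values, not authoritative)."""
    input_per_1k: float
    output_per_1k: float


# ASSUMPTION: list prices around the time of writing; verify before use.
FINE_TUNED_GPT35 = Pricing(input_per_1k=0.012, output_per_1k=0.016)
GPT4_8K = Pricing(input_per_1k=0.03, output_per_1k=0.06)


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request in US dollars."""
    return (input_tokens * pricing.input_per_1k
            + output_tokens * pricing.output_per_1k) / 1000


def savings(input_tokens: int, output_tokens: int) -> float:
    """Fraction saved by using the fine-tuned model instead of GPT-4 (8k)."""
    cheap = request_cost(FINE_TUNED_GPT35, input_tokens, output_tokens)
    expensive = request_cost(GPT4_8K, input_tokens, output_tokens)
    return 1 - cheap / expensive


if __name__ == "__main__":
    # Compare an input-heavy, a balanced, and an output-heavy request.
    for inp, out in [(1000, 100), (500, 500), (100, 1000)]:
        print(f"{inp:>5} in / {out:>5} out: {savings(inp, out):.0%} cheaper")
```

Under these assumed prices, the saving grows as the share of output tokens grows, which is why output-heavy workloads benefit the most.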

What is Fine-Tuning Anyway?

Alright, you’re pumped about the customizable superpowers that fine-tuning brings to GPT-3.5 Turbo. But let’s take a step back and dig into what fine-tuning actually is. Because, let’s be honest, “fine-tuning” sounds cool but might seem a tad technical for some.

The Essence of Fine-Tuning

Imagine you’ve got a sports car. It’s already blazing fast right off the production line. But now you’ve decided you want it to be the best on a specific track. You won’t just replace the whole engine; instead, you tweak the existing machinery — perhaps you modify the gear ratios, or maybe you invest in better aerodynamics. You’re fine-tuning it.

In a similar vein, fine-tuning takes an already-trained AI model and customizes it for specific tasks or domains. You’re not building a new model from scratch; you’re just giving the existing one a “minor” makeover to improve its performance in your desired niche.

Steps in Fine-Tuning

- Data Preparation: Gather domain-specific data.

- Preprocessing: Clean and format this data to make it compatible.

- Training: The fine-tuning itself — train the model on your tailored data.

- Evaluation: Assess the model’s performance in the specific domain.

- Deployment: Integrate the model into your application.
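As a rough illustration of the first two steps, the sketch below cleans a few raw question/answer pairs and writes them in the chat-style JSONL layout (one JSON object with a `messages` list per line) that OpenAI documents for GPT-3.5 Turbo fine-tuning. The sample data, the system prompt, and the helper names are hypothetical; uploading the file and starting the training job are left out so the example runs offline.

```python
import json

# Hypothetical support transcripts; replace with your own domain data.
RAW_EXAMPLES = [
    {"question": "How do I reset my password?",
     "answer": "Open Settings, choose Security, then select Reset password."},
    {"question": "  Can I export my invoices? ",
     "answer": "Yes. Go to Billing and click Export to download a CSV."},
    {"question": "", "answer": "This row is dropped because the question is empty."},
]

# Hypothetical system prompt shared by every training example.
SYSTEM_PROMPT = "You are a concise support assistant for Acme Corp."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one question/answer pair in the chat-style training format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def prepare(raw: list[dict]) -> list[dict]:
    """Preprocessing: strip whitespace and drop incomplete rows."""
    cleaned = []
    for row in raw:
        question, answer = row["question"].strip(), row["answer"].strip()
        if question and answer:
            cleaned.append(to_chat_example(question, answer))
    return cleaned


def to_jsonl(examples: list[dict]) -> str:
    """Serialize one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)


if __name__ == "__main__":
    print(to_jsonl(prepare(RAW_EXAMPLES)))
```

The resulting text can be saved to a `.jsonl` file and used as the training file for the remaining steps.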

So, Why Does It Matter?

Okay, that sounds interesting, but should you care? Yes! Here’s why:

Reduced API Costs

When you fine-tune a model, it becomes more efficient at generating relevant outputs. This means fewer API calls and, by extension, lower costs. Companies have reported API cost reductions of up to 40% after fine-tuning their models. The savings can be even larger if you break your use case into steps and, at each step, use the cheapest (least capable) model that still accomplishes your goal.

Performance Uplift

A generic model tries to be a jack-of-all-trades. But a fine-tuned model becomes a master of one — your specific domain. The result? Improved accuracy and less need for human intervention.

Differentiated Solutions

A model fine-tuned with your selection of data will perform differently from any other version out there. This enables companies to truly create unique outputs, opening a door for those startups building LLM wrappers to step up and make their solution less easily replicable.

The Precedence of Prompt Engineering

While fine-tuning is akin to turning your sports car into a track champion, there are performance boosts you can achieve without heading into the garage for a full overhaul. This is, of course, our well-known discipline of Prompt Engineering — your low-hanging fruit in the AI optimization garden.

Fine-Tuning Isn’t Always Step One

Don’t get me wrong; fine-tuning is a powerful tool. However, it’s resource-intensive, requiring both computational power and domain-specific data. So before you dive deep into customization, ask yourself, “Can I get good enough results without changing the underlying model?”

Prompt Engineering: Your Quick Win

If you’re new to the term, prompt engineering is basically the art of crafting queries or instructions in a way that guides the AI model to generate desired outputs. No model adjustments, no added costs. Simple text tweaks can result in dramatically different and highly targeted outputs.

Here’s a basic example:

- General Query: “Tell me about coffee.”

- Engineered Prompt: “Summarize the history, health benefits, and brewing methods of coffee.”

The second prompt is designed to elicit a more structured and comprehensive answer, allowing you to maximize the utility of the base model.
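If you build such prompts programmatically, a small template helper keeps them consistent. The sketch below is only an illustration; the function name `engineered_prompt` and its parameters are made up for this example.

```python
def engineered_prompt(topic: str, aspects: list[str]) -> str:
    """Build a structured prompt that names the aspects the answer should cover."""
    if not aspects:
        raise ValueError("Provide at least one aspect to cover.")
    if len(aspects) == 1:
        listed = aspects[0]
    elif len(aspects) == 2:
        listed = " and ".join(aspects)
    else:
        # Join three or more aspects as "a, b, and c".
        listed = ", ".join(aspects[:-1]) + ", and " + aspects[-1]
    return f"Summarize the {listed} of {topic}."


if __name__ == "__main__":
    print(engineered_prompt("coffee", ["history", "health benefits", "brewing methods"]))
```

Called with the coffee example, it reproduces the engineered prompt shown in the list.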

Advanced Techniques: Prompt Chaining & Function Calling

Let’s level up. Prompt chaining involves breaking down a complex task into smaller, digestible queries and feeding the output of one as the input to another. For instance, asking the model to first summarize a document and then translate that summary into another language.
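A minimal sketch of the summarize-then-translate chain might look like this. The `complete` parameter is an assumption: it stands for any function that sends a prompt to your model and returns the text reply. A fake implementation is included so the example runs without network access.

```python
from typing import Callable

# A completion function takes a prompt and returns the model's text reply.
# In a real system this would wrap a call to your LLM provider.
Complete = Callable[[str], str]


def summarize_then_translate(document: str, language: str, complete: Complete) -> str:
    """Chain two prompts: the summary from step 1 becomes the input of step 2."""
    summary = complete(f"Summarize the following document in two sentences:\n\n{document}")
    return complete(f"Translate the following text into {language}:\n\n{summary}")


def fake_complete(prompt: str) -> str:
    """Stand-in for a model so the sketch runs offline; it only labels its input."""
    if prompt.startswith("Summarize"):
        return "SUMMARY"
    if prompt.startswith("Translate"):
        return "TRANSLATED(" + prompt.split("\n\n", 1)[1] + ")"
    return ""


if __name__ == "__main__":
    print(summarize_then_translate("A long report...", "Spanish", fake_complete))
```

Passing the model call in as a parameter keeps each step testable on its own and makes it easy to use a cheaper model for simpler steps.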

Function calling is a more recent innovation: you describe your own functions to the model, and within a chat session the model can respond with a structured request to call one of them, which your application then executes. This is like giving your model a set of utilities to pull from, streamlining processes like data extraction or text summarization even further.
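The application side of function calling can be sketched as follows. The function description uses the JSON Schema layout from OpenAI's function-calling announcement, and the model's reply is simulated as a dictionary with a `name` and a JSON-encoded `arguments` string. The business function `get_order_status`, the `REGISTRY`, and the `dispatch` helper are hypothetical names for this example.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical business function the model is allowed to request."""
    return {"order_id": order_id, "status": "shipped"}


# Function description sent alongside the chat messages.
# The "parameters" field is a JSON Schema for the arguments.
FUNCTIONS = [
    {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    }
]

# Maps function names the model may request to the code that implements them.
REGISTRY = {"get_order_status": get_order_status}


def dispatch(function_call: dict) -> str:
    """Run the function the model asked for and return the result as JSON text."""
    name = function_call["name"]
    if name not in REGISTRY:
        raise ValueError(f"Model requested an unknown function: {name}")
    arguments = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    return json.dumps(REGISTRY[name](**arguments))


if __name__ == "__main__":
    # Simulated model reply; a real reply would come from the chat API.
    simulated = {"name": "get_order_status", "arguments": '{"order_id": "A-1001"}'}
    print(dispatch(simulated))
```

In a full flow, the JSON result would be sent back to the model as a follow-up message so it can phrase the final answer for the user.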

Why Prioritize Prompt Engineering?

Three reasons:

- Speed: Real-time results, no waiting for model training.

- Cost-Efficiency: Zero extra costs, especially useful if you’re still experimenting with AI capabilities.

- Flexibility: Easy to adjust and refine, enabling agile iterations to match your evolving needs.

Before you invest in a fine-tuned model, test the waters with prompt engineering. Sometimes, the difference between a good solution and the best solution is just a well-engineered question.

Control & Compliance: The Enterprise Edition

The cornerstone of an enterprise AI deployment: Security and Compliance. Let’s get real; all the bells and whistles of a technology solution mean nothing if it can’t stand up to the rigorous security standards your organization requires. This is where OpenAI’s Enterprise Offering, announced on Aug 28th, comes into play.

Although the announcement was made only this week, OpenAI’s materials show that a long list of well-known clients had already been using the Enterprise offering before it became public.

Details are still scarce, and implementation today requires dedicated interaction with OpenAI’s sales teams. Still, the solution promises to fulfill the four C’s that the industry has been looking for — Control, Compliance, Customization & Collaboration.

Control: Admin Console

The Enterprise version will ship with an intuitive Admin Console. This is your control center, providing a dashboard to manage API keys, monitor usage, and set permissions at a granular level. It’s where governance meets agility — right from setting quotas to delegating roles, the console puts you in the driver’s seat.

Compliance: Enterprise-Grade Security

This is the bedrock on which the enterprise edition is built. Expect state-of-the-art encryption both at rest and in transit. Compliance standards such as GDPR and SOC 2 are met (no word on HIPAA, which Anthropic provides), ensuring you’re never on the wrong side of legal obligations.

Customization: Tailored Retention & Fine-Tune

In addition to the fine-tuning capabilities we have discussed, OpenAI ensures that you will have control over how data is handled. The enterprise version comes with options to set custom data retention policies, ensuring you can comply with any regulations or internal guidelines about how long to hold onto your data.

Furthermore, although I haven’t been able to validate this, OpenAI’s communication states that there will be an easy-to-use interface for adding company knowledge as data sources for the model, with changes taking effect company-wide.

Collaboration: Shareable Chat Templates

Working in a silo has been an issue since the start of ChatGPT. First with shareable conversations and now with templates, OpenAI is acknowledging that the modern workspace is all about collaboration. With chat templates, teams will be able to distribute, test, and improve conversational designs without risking unauthorized access or modification. And yes, these are secure links that adhere to best practices in privacy & protection.

Specific Use-Cases Reaping the Rewards

Fine-tuning shines across diverse sectors, and its potential is immense:

- Customer Service: Forget canned responses. Businesses are now fine-tuning GPT-3.5 Turbo to understand their products inside out, providing customer support that’s both automated and astonishingly adept.

- Content Writing: Something our clients have often asked about is how to ensure that content written by AI follows their brand style and guidelines. This is usually attempted through careful prompting and review, but the results are still not very consistent. With these changes, your brand can have a clear voice that is honored across your generated content.

- Formatted Outputs: Current apps built on LLMs commonly try to extract JSON, Markdown, or other specific formatting from the responses. This comes with risks, as the model may not always reply in the exact format, so developers have had to build test-and-retry flows. Now, you can train your model to follow your format needs more reliably.

- Legal Sector: Models tuned to the documents that your company has already processed can sift through legal jargon to produce summaries or even draft initial versions of legal documents that match the legal arguments made and use the latest jurisprudence. If you don’t want to be in the news for citing imagined cases, this is the way to go.

- Healthcare & Research: Custom-tailored models assist with medical diagnoses, offering preliminary suggestions based on years of journals and studies.
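The test-and-retry flow mentioned under Formatted Outputs can be sketched as below. This is one possible shape, not a reference implementation: `complete` is an assumed callable that returns the model's raw text, and `make_flaky_model` is a stand-in that fails once so the example runs offline.

```python
import json
from typing import Callable


def request_json(prompt: str, complete: Callable[[str], str],
                 required_keys: set[str], max_attempts: int = 3) -> dict:
    """Call the model until the reply parses as a JSON object with the required keys."""
    last_error = "no attempts made"
    for _ in range(max_attempts):
        reply = complete(prompt)
        try:
            data = json.loads(reply)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON: {exc}"
            continue
        if not isinstance(data, dict):
            last_error = "reply is not a JSON object"
            continue
        missing = required_keys - set(data)
        if missing:
            last_error = f"missing keys: {sorted(missing)}"
            continue
        return data
    raise ValueError(f"No valid reply after {max_attempts} attempts ({last_error})")


def make_flaky_model() -> Callable[[str], str]:
    """Stand-in model: the first reply is malformed, the second is valid JSON."""
    replies = iter(['Sure! Here you go: {name: "Ada"}', '{"name": "Ada", "age": 36}'])
    return lambda prompt: next(replies)


if __name__ == "__main__":
    person = request_json("Return a person as JSON.", make_flaky_model(), {"name", "age"})
    print(person)
```

A model fine-tuned on correctly formatted examples should need this loop less often, but keeping the validation in place remains a sensible safeguard.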

Early Adopters and Their Triumphs

From global giants to sprightly startups, many have jumped on the fine-tuning bandwagon and are reaping tangible benefits. A notable case is Salesforce, which has integrated fine-tuned GPT-3.5 Turbo into its customer relationship management (CRM) software. The result? A 35% reduction in average handle time for customer support queries.

A Note on the Horizon

While we’ve covered the plethora of capabilities OpenAI’s newly minted Enterprise edition promises, it’s worth mentioning that full details are still behind the veil of OpenAI’s sales engagement. As a result, keep an eye on this space — this article may undergo updates to reflect new insights as they come to light.

Moreover, as the enterprise landscape begins to dabble more intensely with fine-tuning options, expect additional articles to delve deeper into emerging use cases, performance metrics, and best practices. In a rapidly evolving domain, staying in the know is not just advantageous — it’s essential for a competitive edge.

Sources

- OpenAI Developer Guide: Fine-Tuning

- OpenAI Blog: ChatGPT Enterprise

- OpenAI Blog: Function Calling and Other API Updates

- OpenAI Pricing: API Cost by 1k Tokens

- Deeplearning.ai — Fine-Tuning Large Language Models

- How Salesforce Research Uses Generative AI as a Force for Good
