How To Use AI To Improve the Literature Review Process

Last Updated on January 25, 2024 by Editorial Team

Author(s): Alberto Paderno

Originally published on Towards AI.

If AI will take my job, it might as well start with literature reviews

The current problem in scientific research

We live in an era dominated by technology and AI, yet our access to scientific literature remains substantially unchanged in the last 50 years.

Let’s take medical literature as an example. PubMed remains the main access point for most researchers, and it functions as an old-style repository. There are no data aggregation functionalities; the search is based on basic criteria that mostly only rely on the article’s title, abstract, and keyword. There are no ways to interact with or analyze the content. No advanced data visualization.

And it isn’t PubMed’s fault. It does what it is intended to do: give access to research from a wide variety of Journals.

However, the current research process is clunky and time-consuming. As researchers and medical practitioners, when we need to get updated information on a specific topic, the process is usually the same (either with PubMed or other scientific repositories):

- Check for systematic reviews and meta-analyses.

- Aggregate and compare the results of the different systematic reviews and meta-analyses.

- Read and analyze the main articles in the reviews.

- Check for cross-references.

If reviews are not available:

- Check for recent articles on the topic.

- Get an idea of the overall trend.

- Compare patient numerosity in the different articles, level of evidence, and research-group experience.

Formulating a quick and impartial perspective on a specific subject is often difficult. One typical issue is anchoring bias, which occurs when individuals disproportionately depend on the initial information they receive about a topic. Additionally, selection bias plays a big role when the available literature or data is chosen based on accessibility or the author’s preferences rather than a comprehensive overview of the subject. These are just a few examples of the various issues that can skew judgment in forming opinions during the research process.

How can AI help?

Dedicated solutions

Some dedicated solutions for literature search and review are currently available. This is a great sign, and we are moving in the right direction. However, their practical utility in specialistic fields is still limited. Some examples are:

Connected Papers (www.connectedpapers.com), Litmaps (www.litmaps.com), and Research Rabbit (www.researchrabbit.ai) visually represent the research network on a topic connecting relevant articles. The outcome is a graphical literature map that can provide an overall view of the articles around that domain. Other than visual representation, these tools are also helpful in identifying cross-references.

Elicit (www.elicit.org) aims to use AI to answer research questions by summarizing the available literature. However, the current outcome is still far from a comprehensive analysis of all the articles. It can be useful for quick data extraction, but it sometimes misses key publications, and it’s not possible to manually upload a series of papers we are interested in. It’s an intriguing approach, but to date, its ideal target is the non-expert who wants to get scientifically-proven generic insights on a new topic.

Finally, Scite (www.scite.ai) allows users to see how a scientific paper has been cited by providing the context of the citation and classifying it as supporting, mentioning, or disputing the cited claim. This is an excellent addition to the toolbox, but the aim is to assist the experienced researcher in the conventional review process rather than creating an entirely new path.

Generalized approaches

Other than dedicated solutions, conventional LLM-based AI tools can also be beneficial.

Difficult not to mention ChatGPT — difficult not to mention it anywhere in the AI field, to be honest. However, its use cases are limited to generic topics and superficial knowledge that do not require high-level expertise. The web search tool can help in getting up-to-date knowledge, but the search is not limited to the academic literature and is frequently too generic. Hallucinations are also a significant problem; they can be pop up in the main text (quite rarely) or in citations (pretty frequently).

Well, let’s avoid making this error:

Humiliated lawyers fined $5,000 for submitting ChatGPT hallucinations in court: 'I heard about this…

The judge scolded the lawyers for doubling down on their fake citations.

fortune.com

Perplexity.ai (www.perplexity.ai) is another interesting option. It is possible to limit the search to the academic domain, and I’ve found that in terms of information retrieval and summarization, it provides better results than ChatGPT — at least in the medical field. Nevertheless, it’s far from summarizing specialized topics comprehensively since it tends to focus on the first search results.

A personalized pipeline for using LLMs in literature reviews

A potential option is to develop simple research pipelines based on basic Python code and LLM APIs to extract and summarize the needed data. There are different approaches, and this is a quick practical example.

Step 1 — Preliminary screening

In highly specialistic topics, we want to be sure that the articles we select for the initial screening are comprehensive. Unfortunately — for now — this is still a manual step (at least in my hands). However, there are some tricks. For example, ChatGPT helps convert a narrative research question into an effective PubMed research string. This might require some iteration, but it saves a lot of time down the line. You also might want to perform different searches with distinct strings.

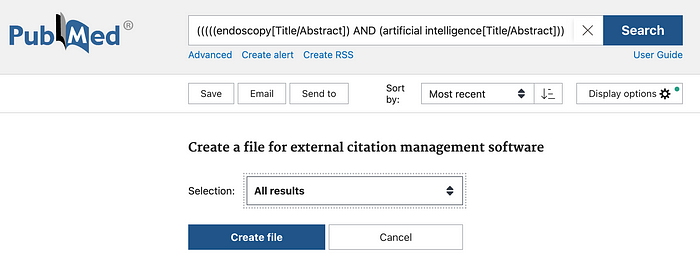

Step 2 — Exporting results

After selecting the articles, you can press “Send to” on the PubMed research page and create the file.

Step 3 — Importing results in a citation manager

If you do research consistently, you probably already use a citation manager. My example is with Zotero (www.zotero.org), but it’s easily applicable to all the other apps. Here, you can merge and rearrange articles, exclude duplicates, and have an overall view of the collection.

With Zotero, it is possible to export a library in CSV format containing all the relevant information (including the full abstract), allowing you to subsequently manage it as a Pandas Dataframe.

Step 4 — Processing the data

First, let’s import the dataset:

import pandas as pd

df = pd.read_csv('Review_2024.csv')

df.head()

In this case, the main fields I’m interested in are: ‘Title’ and ‘Abstract Note’ (column containing the full abstract). Many other fields are available for different types of analysis.

At this point, it’s possible to automatically analyze and categorize titles and abstracts using an LLM. I think that Gemini Pro is a good choice at this time. Two main reasons:

- The outputs are of adequate quality

- The API is FREE! (For now)

This is just a quick example:

import google.generativeai as genai

import json

GOOGLE_API_KEY = 'YOUR_API_KEY'

genai.configure(api_key=GOOGLE_API_KEY)

def query_gemini(title, abstract):

model = genai.GenerativeModel('gemini-pro')

prompt = ("""

Given the following title and abstract, output a text in JSON format specifying:

- "endoscopy" : "yes" or "no" - If related to endoscopy or not

- "subspeciality": "Laryngology", "Head and Neck Surgery", "Otology", etc

- "number_of_patients_analyzed": "number of patients included in the study"

- "objective":"study objective here"

- "objective_short": "very short summary of the study objective in max 5 words"

- "AI_technique" : "Resnet", "Generic CNN", etc

- "Short_summary" : "Short summary of the study"

"""

"\n\nTitle: {}\nAbstract: {}\n").format(title, abstract)

response = model.generate_content(prompt)

return response.text.strip().lstrip('``` JSON\n').rstrip('\n```')

Here, we structure the prompt using the JSON format, and then we get it ready to be added to the dataframe. After different tries, this is the way to make the output more consistent and easier to parse.

columns = ['endoscopy', 'subspeciality', 'number_of_patients_analyzed', 'objective', 'objective_short', 'AI_technique', 'Short_summary']

for col in columns:

df[col] = None

Then, let’s add the required columns to the main dataframe.

for start in range(0, len(df), 300):

end = start + 300

responses = []

for index, row in df.iloc[start:end].iterrows():

response = query_gemini(row['Title'], row['Abstract Note'])

try:

parsed_response = json.loads(response)

for col in columns:

df.loc[index, col] = parsed_response.get(col, None)

except json.JSONDecodeError:

print(f"Failed to parse response for row {index}")

chunk_df = df.iloc[start:end]

chunk_df.to_excel(f'/YOUR_FOLDER/dataset_chunk_{start//300 + 1}.xlsx', index=False)

Finally, let’s start the process. I prefer to create different dataset chunks (every 300 articles, in this case) during the process. Gemini Pro is free until 60 QPM but it sometimes tends to limit access for a few minutes after a high number of requests. With this approach, you’ll be able to retain most of the work even after a crash.

After all of that, you’ll have a fully categorized literature search. It’s possible to analyze the distribution of topics (either with conventional statistical software or ChatGPT), get an overall idea of the different articles thanks to short summaries, etc. This is an interesting approach when you are thinking about writing a systematic review and meta-analysis following the current research and reporting guidelines, such as the PRISMA statement.

Future perspectives

The process is still time-consuming and highly manual. A potential development would be the introduction of “smart” article repositories that would automatically execute these parsing, classification, and summarization steps as an article is added. This automation would streamline the research pipeline and enhance accuracy and consistency in processing and presenting information. Let’s face it, systematic reviews and meta-analyses should not be performed by groups of researchers at a single point in time but should be interactive and updated after each article is added to the topic. This type of scientific dashboard would significantly enhance how research is accessed and distributed.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.