Google’s Symbol Tuning is a New Fine-Tuning Technique that Improves In-Context Learning in LLMs

Last Updated on August 7, 2023 by Editorial Team

Author(s): Jesus Rodriguez

Originally published on Towards AI.

The new method can become the foundation of new fine-tuning techniques.

I recently started an AI-focused educational newsletter that already has over 160,000 subscribers. TheSequence is a no-BS (meaning no hype, no news, etc.) ML-oriented newsletter that takes 5 minutes to read. The goal is to keep you up to date with machine learning projects, research papers, and concepts. Please give it a try by subscribing below:

TheSequence | Jesus Rodriguez | Substack

The best source to stay up-to-date with the developments in the machine learning, artificial intelligence, and data…

thesequence.substack.com

Scaling up language models has driven a surge of progress in machine learning, enabling models to handle challenging reasoning tasks through in-context learning. However, an issue remains: language models are sensitive to variations in the prompt, which suggests they do not reason robustly. They often need extensive prompt engineering or carefully phrased instructions, and they show peculiar behaviors, such as task performance that stays largely unchanged when they are shown incorrect labels. Google’s latest research targets a fundamental characteristic of human intelligence: the ability to learn novel tasks by reasoning over just a few examples.

Google’s paper, titled “Symbol tuning improves in-context learning in language models,” introduces a fine-tuning method called symbol tuning. The technique emphasizes input-label mappings and significantly improves in-context learning for Flan-PaLM models across a range of settings.

Symbol Tuning

Google Research presents symbol tuning as a fine-tuning technique that addresses a limitation of conventional instruction tuning. Instruction tuning can improve model performance and in-context learning, but it has a drawback: models may not be compelled to learn from the examples, because the task is already defined redundantly by the instructions and the natural language labels. In a sentiment analysis task, for instance, a model can rely on the instructions alone and ignore the examples altogether.

Symbol tuning is particularly beneficial on unseen in-context learning tasks whose prompts are underspecified, containing no instructions or natural language labels, which is exactly where conventional methods struggle. Symbol-tuned models also perform markedly better on algorithmic reasoning tasks.

The most striking result is a substantial improvement in following flipped labels presented in context. This shows that the model is better able to use in-context information even when it contradicts the model’s prior knowledge.
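To make the flipped-label setup concrete, here is a minimal Python sketch for a binary sentiment task. It assumes a simple `Input:`/`Output:` prompt format and uses hypothetical helpers (`flip_exemplars`, `build_prompt`, `flipped_label_accuracy`) that illustrate the idea and are not the paper’s evaluation code: every in-context label is inverted, and a model is credited only when its prediction follows the inverted mapping instead of its prior knowledge.

```python
from typing import Dict, List, Tuple

# Hypothetical binary label set; flipping swaps the two labels.
FLIP: Dict[str, str] = {"positive": "negative", "negative": "positive"}


def flip_exemplars(exemplars: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the in-context exemplars with every label inverted."""
    return [(text, FLIP[label]) for text, label in exemplars]


def build_prompt(exemplars: List[Tuple[str, str]], query: str) -> str:
    """Format exemplars as Input/Output pairs, followed by the unlabeled query."""
    blocks = [f"Input: {text}\nOutput: {label}" for text, label in exemplars]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


def flipped_label_accuracy(predictions: List[str], gold: List[str]) -> float:
    """Fraction of predictions that follow the flipped mapping, i.e. equal FLIP[gold]."""
    hits = sum(pred == FLIP[g] for pred, g in zip(predictions, gold))
    return hits / len(gold)


exemplars = [("A delightful film.", "positive"), ("Dull and far too long.", "negative")]
prompt = build_prompt(flip_exemplars(exemplars), "I loved every minute.")
print(prompt)
# A model that follows the in-context (flipped) mapping should answer "negative" here.
print(flipped_label_accuracy(["negative", "positive"], ["positive", "negative"]))
```

A model that ignores the exemplars and answers from prior knowledge scores near zero on this metric, which is why flipped labels are a useful probe of how much a model actually reads its context.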

Symbol tuning addresses this by fine-tuning models on examples from which the instructions have been removed and in which the natural language labels are replaced with semantically unrelated labels such as “Foo” and “Bar.” In this setup, the task is ambiguous unless the model consults the in-context examples, so reasoning over those examples becomes essential. As a result, symbol-tuned models perform better on tasks that demand careful reasoning between in-context examples and their labels.
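The data transformation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper’s pipeline: the `Input:`/`Output:` format, the helper name `symbol_tune_example`, and the two-symbol list are all hypothetical. The sketch emits no instruction, maps each natural language label to a randomly chosen symbol, and returns the prompt together with the symbolic target the model would be trained to produce.

```python
import random
from typing import Dict, List, Sequence, Tuple


def symbol_tune_example(
    exemplars: Sequence[Tuple[str, str]],
    query: Tuple[str, str],
    symbols: Sequence[str],
    rng: random.Random,
) -> Tuple[str, str]:
    """Build one (prompt, target) training pair with no instruction and symbolic labels."""
    # Collect every natural language label that appears in this prompt.
    label_names = sorted({label for _, label in exemplars} | {query[1]})
    # Assign each label a distinct, semantically unrelated symbol.
    chosen = rng.sample(list(symbols), k=len(label_names))
    mapping: Dict[str, str] = dict(zip(label_names, chosen))
    # No instruction line: the task must be inferred from the exemplars alone.
    blocks: List[str] = [f"Input: {text}\nOutput: {mapping[label]}" for text, label in exemplars]
    blocks.append(f"Input: {query[0]}\nOutput:")
    return "\n\n".join(blocks), mapping[query[1]]


rng = random.Random(0)
exemplars = [("Great acting.", "positive"), ("Boring plot.", "negative")]
prompt, target = symbol_tune_example(exemplars, ("A joy to watch.", "positive"), ["Foo", "Bar"], rng)
print(prompt)
print("target:", target)
```

Because the symbol assignment is re-sampled for every training example, the only way to predict the target is to notice which symbol the exemplars paired with inputs like the query.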

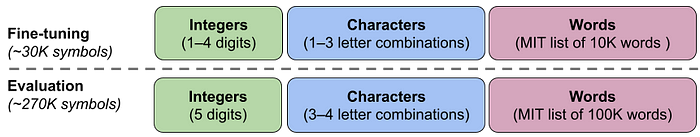

For the symbol-tuning procedure, the researchers selected 22 publicly available natural language processing (NLP) datasets, restricting themselves to classification-type tasks because the method requires discrete labels. Labels were remapped to random choices from a pool of approximately 30,000 arbitrary labels drawn from three categories: integers, character combinations, and words.
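The label remapping can be sketched as follows, assuming a much smaller pool than the paper’s ~30,000 labels: integers, fixed-length uppercase letter combinations, and a tiny stand-in word list. The helper names and pool sizes are hypothetical, and this sketch simply samples from the combined pool; the point is only that each original label is replaced by a distinct, semantically unrelated symbol from the three categories.

```python
import itertools
import random
import string
from typing import Dict, List, Sequence


def build_label_pool(num_integers: int, combo_len: int, words: Sequence[str]) -> Dict[str, List[str]]:
    """Group arbitrary labels into three categories: integers, character combinations, words."""
    integers = [str(i) for i in range(num_integers)]
    combos = ["".join(chars) for chars in itertools.product(string.ascii_uppercase, repeat=combo_len)]
    return {"integers": integers, "character_combinations": combos, "words": list(words)}


def remap_labels(labels: Sequence[str], pool: Dict[str, List[str]], rng: random.Random) -> Dict[str, str]:
    """Map each original label to a distinct symbol sampled from the combined pool."""
    all_symbols = [symbol for category in pool.values() for symbol in category]
    chosen = rng.sample(all_symbols, k=len(labels))
    return dict(zip(labels, chosen))


rng = random.Random(42)
# Tiny stand-in word list; the paper's actual vocabulary is not reproduced here.
pool = build_label_pool(num_integers=1000, combo_len=2, words=["apple", "river", "lantern"])
print({name: len(symbols) for name, symbols in pool.items()})
mapping = remap_labels(["entailment", "neutral", "contradiction"], pool, rng)
print(mapping)
```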

The experiments applied symbol tuning to Flan-PaLM models, specifically Flan-PaLM-8B, Flan-PaLM-62B, and Flan-PaLM-540B. The researchers also tested Flan-cont-PaLM-62B (abbreviated as 62B-c), which is Flan-PaLM-62B trained on 1.3 trillion tokens instead of 780 billion.

Because the prompts are designed so that a model cannot learn the task from relevant labels or instructions alone, symbol tuning requires models to reason over the in-context examples. Symbol-tuned models therefore excel in settings that demand careful reasoning between in-context examples and labels. To explore these settings, the researchers defined four in-context learning settings that vary how much reasoning between inputs and labels is needed to learn the task, depending on whether instructions and relevant labels are available.
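Since the four settings come from two yes/no choices (instructions present or not, relevant labels present or not), they form a 2×2 grid. The Python sketch below enumerates that grid for a toy sentiment task; the instruction text, the `Foo`/`Bar` symbols, the prompt format, and the `make_prompt` helper are hypothetical. The setting with neither instructions nor relevant labels is the one in which the task can only be inferred from the exemplars.

```python
from itertools import product
from typing import Dict, List, Tuple

INSTRUCTION = "Classify the sentiment of the input."  # hypothetical instruction text
RELEVANT: Dict[str, str] = {"positive": "positive", "negative": "negative"}  # natural language labels
SYMBOLIC: Dict[str, str] = {"positive": "Foo", "negative": "Bar"}  # semantically unrelated labels


def make_prompt(
    exemplars: List[Tuple[str, str]], query: str, use_instruction: bool, use_relevant_labels: bool
) -> str:
    """Build a few-shot prompt for one of the four settings."""
    mapping = RELEVANT if use_relevant_labels else SYMBOLIC
    blocks: List[str] = [INSTRUCTION] if use_instruction else []
    blocks += [f"Input: {text}\nOutput: {mapping[label]}" for text, label in exemplars]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


exemplars = [("Great acting.", "positive"), ("Boring plot.", "negative")]
# Two binary factors give four settings: (instructions?, relevant labels?).
settings = list(product([True, False], repeat=2))
for use_instruction, use_relevant_labels in settings:
    print(f"--- instructions={use_instruction}, relevant labels={use_relevant_labels} ---")
    print(make_prompt(exemplars, "A joy to watch.", use_instruction, use_relevant_labels))
```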

The results showed performance improvements across all settings for models of 62B parameters and larger, with small gains in settings with relevant natural language labels (+0.8% to +4.2%) and substantial gains in settings without them (+5.5% to +15.5%). Notably, when relevant labels were unavailable, symbol-tuned Flan-PaLM-8B outperformed Flan-PaLM-62B, and symbol-tuned Flan-PaLM-62B outperformed Flan-PaLM-540B. This suggests that symbol tuning lets smaller models match the performance of larger models on these tasks, which could cut inference compute by roughly 10x.
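The compute estimate can be sanity-checked with back-of-the-envelope arithmetic. Assuming (as a common rule of thumb, not a figure from the paper) that a dense decoder costs about 2N FLOPs per generated token for N parameters, the savings from swapping in the smaller symbol-tuned model reduce to parameter ratios: about 7.8x for 8B versus 62B and about 8.7x for 62B versus 540B, consistent with the roughly 10x order of magnitude quoted in the results.

```python
# Assumption (rule of thumb, not from the paper): a dense decoder spends roughly
# 2 * N FLOPs per generated token, where N is the number of parameters.
PARAMS_BILLIONS = {"Flan-PaLM-8B": 8, "Flan-PaLM-62B": 62, "Flan-PaLM-540B": 540}


def per_token_flops(params_billions: float) -> float:
    """Approximate forward-pass FLOPs per token for a model of the given size."""
    return 2 * params_billions * 1e9


def compute_ratio(larger: str, smaller: str) -> float:
    """How many times more per-token compute the larger model needs than the smaller one."""
    return per_token_flops(PARAMS_BILLIONS[larger]) / per_token_flops(PARAMS_BILLIONS[smaller])


# Pairs where the smaller symbol-tuned model beat the larger baseline without relevant labels.
for larger, smaller in [("Flan-PaLM-62B", "Flan-PaLM-8B"), ("Flan-PaLM-540B", "Flan-PaLM-62B")]:
    print(f"{smaller} instead of {larger}: about {compute_ratio(larger, smaller):.1f}x fewer FLOPs per token")
```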

Overall, symbol tuning yields significant improvements on in-context learning tasks, particularly with underspecified prompts. Symbol-tuned models also outperform their conventionally fine-tuned counterparts on reasoning tasks and are better able to use in-context information to override prior knowledge. Symbol tuning may well become one of the most interesting fine-tuning techniques.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.