Distilled DeepSeek-R1 at work with a Naive RAG

Last Updated on January 30, 2025 by Editorial Team

Author(s): Claudio Giorgio Giancaterino

Originally published on Towards AI.

According to the Chatbot Arena leaderboard, DeepSeek-R1 has surpassed its competitor OpenAI o1, and the model's popularity is growing daily, especially because it is open-source, competes with proprietary models and reaches extraordinary results with limited resources. This new Large Language Model has also caused a stir in the stock market.

Before starting with a hands-on activity, let’s dive into DeepSeek-R1.

DeepSeek AI, a Chinese company, has introduced a series of Large Language Models that improve reasoning capabilities through Reinforcement Learning (RL). The DeepSeek-V3-Base model served as the foundation model for both DeepSeek-R1-Zero and DeepSeek-R1. It was pre-trained on a dataset of 14.8 trillion tokens and uses a large Mixture-of-Experts (MoE) architecture with 671 billion total parameters.
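To give an intuition of what a Mixture-of-Experts layer does, here is a toy sketch of generic top-k routing. It is not DeepSeek-V3's actual router. A gate scores every expert for the current input, only the `top_k` best-scoring experts are evaluated, and their outputs are mixed with softmax weights. This is why an MoE model activates only a fraction of its total parameters for each token. The scalar "experts", the gate logits and `top_k=2` are illustrative assumptions.

```python
import math
from typing import Callable, List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_logits: List[float],
                experts: List[Callable[[float], float]], top_k: int = 2) -> float:
    """Toy MoE layer on a scalar input: only the top_k experts are run."""
    # Indices of the top_k highest-scoring experts (stable sort keeps ties in order)
    chosen = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:top_k]
    # Renormalise the gate scores over the chosen experts only
    weights = softmax([gate_logits[i] for i in chosen])
    # Weighted sum of the selected experts' outputs; the other experts are never called
    return sum(w * experts[i](x) for w, i in zip(weights, chosen))


experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
y = moe_forward(3.0, gate_logits=[0.1, 2.0, 2.0, -1.0], experts=experts, top_k=2)
print(y)
```

Here experts 1 and 2 tie for the highest score, so each gets weight 0.5 and the remaining two experts are skipped entirely.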

The first model, DeepSeek-R1-Zero, was trained with Reinforcement Learning (RL) applied directly to the foundation model, without a preliminary Supervised Fine-Tuning (SFT) step. It exhibited behaviours such as self-verification and long Chain-of-Thought (CoT) reasoning but, despite these capabilities, DeepSeek-R1-Zero suffers from poor readability and language mixing.

DeepSeek-R1 builds on DeepSeek-R1-Zero with a multi-stage training pipeline that adds cold-start data and further Reinforcement Learning. A small amount of high-quality, human-readable CoT data is used for an initial fine-tuning, addressing the readability issues of DeepSeek-R1-Zero. After DeepSeek-V3-Base is fine-tuned on this cold-start data, the same large-scale RL process used for DeepSeek-R1-Zero is applied.

Distilled models transfer the reasoning capabilities of the larger DeepSeek-R1 to smaller, more efficient models: DeepSeek-R1 generates a large dataset of training samples, which is then used to fine-tune smaller open-source models from the Qwen and Llama families. For a deeper understanding of the topic, I recommend reading “The Illustrated DeepSeek-R1” by Jay Alammar.
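For DeepSeek-R1-Zero, the paper describes rule-based rewards rather than a learned reward model. There is an accuracy reward (is the final answer correct?) and a format reward (is the reasoning placed between `<think>` and `</think>` tags?). The sketch below illustrates that idea on plain strings. The regex, the exact-match check and the equal weighting of the two rewards are my assumptions, not DeepSeek's implementation.

```python
import re

# Reasoning inside <think>...</think>, followed by a non-empty final answer
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the '<think>...</think> answer' layout, else 0.0."""
    return 1.0 if THINK_PATTERN.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the text after </think> equals the reference answer (toy exact match)."""
    match = THINK_PATTERN.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str) -> float:
    # Equal weighting is an arbitrary choice for this sketch
    return accuracy_reward(completion, reference) + format_reward(completion)


good = "<think>2 + 2 is 4.</think> 4"
bad = "The answer is 4"
print(total_reward(good, "4"), total_reward(bad, "4"))
```

Rewards like these can be checked automatically on math or coding tasks, which is what makes large-scale RL practical without human labels.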

I decided to put DeepSeek-R1 to work on a common Large Language Model task, knowledge-base Q&A, by building a simple RAG (Retrieval-Augmented Generation) pipeline.

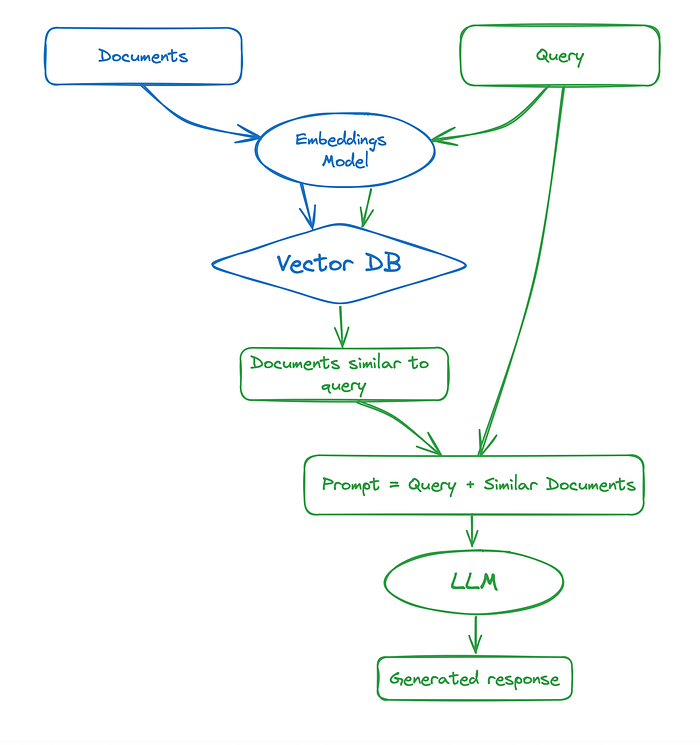

Retrieval-Augmented Generation (RAG) is a technique that combines a pre-trained Large Language Model (LLM) with an external data source to produce more accurate responses. RAG tackles LLM limitations, such as ‘hallucinations’ (generating incorrect content), by referencing external knowledge that supplements the LLM’s internal knowledge.

Consider planning a trip to a place you’ve never visited before. You can query a database to retrieve detailed information about the locality, which can help you plan your trip more effectively.

The steps involved are:

- Data collection;

- Data chunking;

- Document embeddings and storing information in a vector database;

- User query embedding;

- Retrieval of the most similar chunks from the indexed data;

- Augmentation of the user query with the retrieved chunks as context;

- Response generation.
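The steps above can be condensed into a toy, dependency-free sketch. A bag-of-words vector stands in for a real embedding model, and a plain list of `(text, vector)` pairs stands in for the vector database. The chunk size, overlap, sample text and prompt wording are all illustrative assumptions. The final generation step is only indicated, since it needs an actual LLM.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, size: int = 12, overlap: int = 4) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lower-cased word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, index: List[Tuple[str, Counter]], k: int = 3) -> List[str]:
    """Return the k chunks whose vectors are most cosine-similar to the query vector."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(query: str, contexts: List[str]) -> str:
    """Augment the user query with the retrieved chunks."""
    context = "\n\n".join(contexts)
    return f"Use the following context to answer the question:\n\n{context}\n\nQuestion: {query}"


# 1-2. Data collection and chunking (toy corpus)
corpus = ("DeepSeek-R1-Zero was trained with reinforcement learning directly on the base model. "
          "DeepSeek-R1 adds a cold-start stage with curated chain-of-thought data before RL. "
          "Distilled models fine-tune smaller Qwen and Llama models on samples generated by DeepSeek-R1.")
chunks = chunk(corpus)
# 3. Embed every chunk and store (text, vector) pairs as the "vector database"
index = [(c, embed(c)) for c in chunks]
# 4-6. Embed the query, retrieve the most similar chunk and build the augmented prompt
question = "What is the cold-start stage?"
prompt = build_prompt(question, retrieve(question, index, k=1))
# 7. `prompt` would now be sent to the LLM for response generation
print(prompt)
```

The overlap between chunks is a design choice: it reduces the chance that a sentence relevant to the query is cut in half at a chunk boundary.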

For this study, I used a distilled version of DeepSeek from Hugging Face, deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B, which is small enough to run the RAG pipeline with LangChain in a Google Colab notebook, and I tested knowledge-base Q&A on the DeepSeek-R1 paper itself.

The main steps involved are:

- Setting up the FAISS (Facebook AI Similarity Search) index with pre-trained Hugging Face embeddings: the documents are converted into vectors, and a retriever is set up to return the 3 documents most similar to a given query based on these embeddings.

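```python
from langchain_community.vectorstores import FAISS
from langchain_huggingface import HuggingFaceEmbeddings

# chunked_docs: the list of LangChain Document chunks built from the paper earlier in the notebook
db = FAISS.from_documents(
    chunked_docs,
    HuggingFaceEmbeddings(model_name='BAAI/bge-base-en-v1.5'),
)

# Retriever returning the 3 chunks most similar to the query
retriever = db.as_retriever(
    search_type="similarity",
    search_kwargs={'k': 3},
)
```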
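What does `search_type="similarity"` rank by? To my knowledge, LangChain's FAISS wrapper uses Euclidean (L2) distance by default. When the embeddings have unit length, this is equivalent to ranking by cosine similarity, because `||a - b||^2 = 2 - 2*cos(a, b)`. BGE embeddings are commonly normalised, and passing `encode_kwargs={'normalize_embeddings': True}` to `HuggingFaceEmbeddings` enforces it. The toy check below uses made-up 3-dimensional vectors to illustrate the identity. It does not call FAISS.

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosine(a: List[float], b: List[float]) -> float:
    # For unit vectors, the dot product is the cosine similarity
    return sum(x * y for x, y in zip(a, b))


def l2_squared(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


query = normalize([0.9, 0.1, 0.3])
docs = [normalize(v) for v in ([1.0, 0.0, 0.2], [0.1, 0.8, 0.5], [0.5, 0.5, 0.5], [0.0, 0.1, 1.0])]

k = 3
by_l2 = sorted(range(len(docs)), key=lambda i: l2_squared(query, docs[i]))           # smallest distance first
by_cos = sorted(range(len(docs)), key=lambda i: cosine(query, docs[i]), reverse=True)  # highest similarity first
print(by_l2[:k], by_cos[:k])

# The identity holds for every pair of unit vectors
for d in docs:
    assert abs(l2_squared(query, d) - (2 - 2 * cosine(query, d))) < 1e-12
```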

- Building a pipeline for AI-assistant Q&A: the context is obtained dynamically from the user query and then passed to text generation, combining a pre-trained model with document retrieval for richer replies.

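```python
from langchain_huggingface import HuggingFacePipeline
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

# Load the distilled model (plain loading shown; the original notebook may use other options)
model_id = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

# Pipeline for text generation
text_generation_pipeline = pipeline(
    model=model,
    tokenizer=tokenizer,
    task="text-generation",
    temperature=0.2,
    do_sample=True,
    repetition_penalty=1.1,
    return_full_text=False,  # return only the generated text, not the prompt
    max_new_tokens=500,
)

# Wrap the pipeline so it can be used as a LangChain LLM
llm = HuggingFacePipeline(pipeline=text_generation_pipeline)
```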
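The `repetition_penalty=1.1` argument is, as I understand Hugging Face's implementation, the CTRL-style penalty. For every token already present in the sequence, a positive logit is divided by the penalty and a negative one is multiplied by it, so repeated tokens become less likely either way. The sketch below reproduces that rule on a hypothetical dictionary of logits instead of a tensor.

```python
from typing import Dict, Iterable


def apply_repetition_penalty(logits: Dict[str, float], seen: Iterable[str],
                             penalty: float = 1.1) -> Dict[str, float]:
    """Penalise tokens that already appeared: shrink positive logits, push negative ones lower."""
    out = dict(logits)
    for tok in set(seen):
        if tok in out:
            score = out[tok]
            out[tok] = score / penalty if score > 0 else score * penalty
    return out


logits = {"the": 2.2, "model": 1.1, "RL": -0.5}  # made-up logits
penalised = apply_repetition_penalty(logits, seen=["the", "RL"], penalty=1.1)
print(penalised)
```

A value slightly above 1, as in the pipeline, nudges the small model away from looping on the same phrases without strongly distorting its output.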

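```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnablePassthrough

# Prompt template to match desired output format
prompt_template = """
You are a professional AI researcher, give an help in study. Use the following context to answer the question using information provided by the paper:

{context}

Question: {question}
"""

prompt = PromptTemplate(
    input_variables=["context", "question"],
    template=prompt_template,
)

# prompt -> model -> plain string
llm_chain = prompt | llm | StrOutputParser()

# The retriever fills {context}; the question is passed through unchanged
rag_chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | llm_chain
)
# Usage: answer = rag_chain.invoke(question)
```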
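In `rag_chain`, the dictionary runs as a parallel step. The retriever receives the question and its output fills `{context}`, while `RunnablePassthrough()` forwards the question unchanged to `{question}`. Because the retriever returns a list of `Document` objects, the prompt receives their string representation, metadata included. A common variation is to pipe the retriever into a small formatting function that keeps only the page text, e.g. `{"context": retriever | format_docs, ...}`. The standard-library sketch below mimics both behaviours. `Doc`, `fake_retriever` and `format_docs` are hypothetical stand-ins for LangChain's `Document`, the FAISS retriever and a user-defined formatter; this is not LangChain code.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain Document."""
    page_content: str
    metadata: Dict[str, object] = field(default_factory=dict)


def fake_retriever(question: str) -> List[Doc]:
    # Hypothetical retriever: a real one would run a similarity search on the index
    return [Doc("DeepSeek-R1 uses cold-start data before RL.", {"source": "paper.pdf", "page": 3}),
            Doc("DeepSeek-R1-Zero is trained with pure RL.", {"source": "paper.pdf", "page": 1})]


def format_docs(docs: List[Doc]) -> str:
    """Hypothetical formatter: keep only the text of each chunk."""
    return "\n\n".join(d.page_content for d in docs)


TEMPLATE = "Use the following context to answer the question:\n\n{context}\n\nQuestion: {question}"


def run_chain(question: str, formatter=None) -> str:
    docs = fake_retriever(question)
    # Same fan-out as {"context": retriever, "question": RunnablePassthrough()}
    inputs = {"context": formatter(docs) if formatter else docs, "question": question}
    # Formatting a list inserts its repr, so metadata ends up in the prompt
    return TEMPLATE.format(**inputs)


raw = run_chain("What is cold-start data?")
clean = run_chain("What is cold-start data?", formatter=format_docs)
print("paper.pdf" in raw, "paper.pdf" in clean)
```

Whether to keep metadata in the prompt is a design choice: source and page numbers can help the model cite, but they also consume context and can leak into the answer.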

Here are the questions I asked, with the model's answers:

```python
question = "What are the advantages of using reinforcement learning directly on a base model, as demonstrated by DeepSeek-R1-Zero?"
```

</think>

The advantages of using reinforcement learning directly on a base model, as demonstrated by DeepSeek-R1-Zero, include:

1. **Direct Application of RL**: DeepSeek-R1-Zero applies reinforcement learning directly to the base model without requiring any pre-trained or fine-tuned data.

2. **Chain-of-Thought (CoT) Utilization**: The model leverages CoT techniques inherently during its training process, allowing it to develop sophisticated problem-solving abilities through exploration and refinement.

3. **Self-Learning Capabilities**: By applying RL directly, DeepSeek-R1-Zero enables it to learn and improve its reasoning and decision-making processes independently, enhancing its overall performance.

4. **Enhanced Problem-Solving Through RL**: The integration of reinforcement learning within the base model allows for dynamic and adaptive learning, where the model can adapt its strategies based on feedback and outcomes observed during training.

These features collectively demonstrate how direct application of reinforcement learning enhances the model's ability to reason and solve complex tasks effectively.

The answer above appears broadly correct and exhaustive, although the last point is arguably redundant.

```python
question = "What is cold-start data and why is it used in DeepSeek-R1 training?"
```

</think>

Cold-start data refers to initial training data that is scarce or not available during the initial phase of a machine learning model's development. It is often used when there is limited data available to train a model effectively.

In the context of DeepSeek-R1 training:

- **Cold-start data** is utilized to initialize the model's knowledge base.

- The model starts with minimal information but gradually gains expertise through repeated training cycles.

- This approach helps the model adapt better to new challenges and improve its overall performance over time.

By leveraging cold-start data, DeepSeek-R1 can efficiently learn and refine its capabilities, making it more effective at solving complex problems.

The second answer is not really correct: it is too general. In the paper, cold-start data refers to a small amount of carefully curated, high-quality training data used to fine-tune the base model before Reinforcement Learning (RL) is applied.

```python
question = "What are DeepSeek-R1-Zero and DeepSeek-R1?"
```

</think>

DeepSeek-R1-Zero and DeepSeek-R1 are both models developed by DeepSeek, but they represent different versions or iterations of the same system.

- **DeepSeek-R1**:
  - **Description**: It is a language model developed by DeepSeek, designed for tasks such as text generation, question answering, and problem-solving.
  - **Key Features**:
    - **Language Model**: Utilizes transformer-based architectures to handle sequence modeling tasks.
    - **Inference Speed**: Achieves high inference speeds, making it suitable for real-world applications.
    - **Task Capabilities**: Supports a wide range of tasks including natural language understanding, generation, and reasoning.
    - **Performance**: Demonstrates strong performance across multiple domains, as evidenced by its results in benchmarks like ARXIV.

- **DeepSeek-R1-Zero**:
  - **Description**: This version introduces a "pure RL" approach, which likely refers to reinforcement learning techniques optimized for sequential decision-making tasks.
  - **Key Features**:
    - **Reinforcement Learning (RL)**: Emphasizes learning through trial and error, focusing on optimizing long-term rewards.
    - **Cold Start Data**: Utilizes pre-trained models to enhance initial performance before fine-tuning on new tasks.
    - **Iterative Fine-Tuning**: Gradually improves upon initial models through repeated training cycles.
    - **Performance**: As mentioned, achieves comparable performance to OpenAI's o1-1217 model on various tasks.

Both models are part of the DeepSeek suite, showcasing advancements in language modeling and reinforcement learning capabilities.

Here, too, the answer is too general and doesn’t explain the difference between the two models. DeepSeek-R1-Zero is developed using pure Reinforcement Learning (RL), without any Supervised Fine-Tuning (SFT) as a preliminary step. DeepSeek-R1 builds on DeepSeek-R1-Zero and incorporates multi-stage training, including a “cold start” with a small amount of curated data before the RL stage, to further improve reasoning performance and address the poor readability and language mixing found in DeepSeek-R1-Zero.

Setting “return_full_text” to True in the “text_generation_pipeline” makes the output include the whole prompt, so the retrieved chunks, along with their metadata, appear merged into the answer. Raising the temperature to 0.6 increases the amount of reasoning but can also make the output more confusing. For instance, on the first question the model generates a multiple-choice answer and then selects one option, explaining its choice.
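To see why moving from `temperature=0.2` to `0.6` changes the behaviour, recall that sampling divides the logits by the temperature before applying the softmax. A low temperature concentrates almost all probability on the top token, while a higher one spreads it over the alternatives. The logits below are invented for illustration.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Token probabilities after dividing the logits by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.5, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.6, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 sampling behaves almost greedily, which fits the shorter, more focused answers above. At 0.6 the alternative tokens keep a meaningful share of the probability, leaving room for longer and more exploratory reasoning.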

Last thoughts

What impressed me is the fluent, human-like language and the reasoning ability of such a small distilled model, even though most of the time its responses are convincing but general and not focused on the question. Since this is a small model, the full DeepSeek-R1 should be tested to get a real understanding of its capabilities.

References:

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

DeepSeek-R1: Features, o1 Comparison, Distilled Models & More | DataCamp

what-is-retrieval-augmented-generation | DataCamp

Retrieval-Augmented Generation for Large Language Models: A Survey


Published via Towards AI
