Easy-Peasy AI: 3 Open Source Frameworks to Add AI to Your Apps

Last Updated on July 17, 2023 by Editorial Team

Author(s): Tabrez Syed

Originally published on Towards AI.

As the capabilities of artificial intelligence, specifically large language models (LLMs), continue to grow and evolve, developers are looking to incorporate AI features into their applications. While simple tasks like text completion and summarization can be handled by directly calling APIs provided by OpenAI or Cohere, building complex features requires effort and tooling.

This was first pointed out by Jon Turow and his team at Madrona, who noted:

Developers must invent their own tools for steps such as prompt engineering, fine-tuning, distillation, and assembling and managing pipelines that refer queries to the appropriate endpoint.

From: “Foundation Models: The future isn’t happening fast enough — Better tooling will make it happen faster,” Madrona Ventures

In this article, we’ll explore three open-source AI frameworks that can help developers build AI features faster. But first, let’s take a closer look at the capabilities these frameworks need to offer to be effective.

Foundational Model Abstraction: To build AI-powered apps, developers need access to the latest and greatest foundational models. However, as new models emerge, keeping up with upgrades and changes can be challenging.

Prompt Management: Prompts are becoming increasingly important in AI, serving as the language we use to communicate with foundational models. However, developing and managing prompts can be a complex undertaking for developers.

Context Management: As developers chain multiple calls to LLMs, managing context and coordinating data between calls can be a significant hurdle, particularly when building complex AI-powered apps.
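As a rough illustration of these three capabilities, the sketch below puts them side by side in plain Python. Everything in it is an assumption made for illustration: the `TextModel` interface, the `EchoModel` stand-in (used instead of a real provider call), and the `PROMPTS` registry are hypothetical names and do not come from any of the frameworks discussed in this article.

```python
from dataclasses import dataclass
from string import Template
from typing import Protocol


class TextModel(Protocol):
    """Model abstraction: anything that turns a prompt into a completion."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in for a hosted model; a real adapter would call a provider API here."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Prompt management: templates live in one place, keyed by name.
PROMPTS = {
    "name_company": Template("Suggest a name for a company that makes $product."),
    "catchphrase": Template("Write a catchphrase for: $company_name"),
}


def run_pipeline(model: TextModel, product: str) -> dict[str, str]:
    """Context management: each call's output is stored and fed to the next call."""
    context = {"product": product}
    context["company_name"] = model.complete(
        PROMPTS["name_company"].substitute(context)
    )
    context["catchphrase"] = model.complete(
        PROMPTS["catchphrase"].substitute(context)
    )
    return context


# Swapping providers only changes which object is passed in.
result = run_pipeline(EchoModel("model-a"), "colorful socks")
print(result["catchphrase"])
```

Because `run_pipeline` depends only on the `complete` method, a different model can be swapped in without touching the prompts or the chaining logic, which is roughly the separation the frameworks below aim for.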

So who are the new players in the AI framework space? Will they become what Rails, Laravel, and Express became for web development?

Dust.tt

Dust.tt was one of the first open-source AI frameworks. Written in Rust, it allows developers to chain calls to LLMs with other tools to extract the desired output. Dust.tt works with models from providers such as OpenAI, AI21, and Cohere and has integration blocks for Google Search, curl, and web scraping (wrapping browserless.io).

Key Concept:

A Dust.tt app comprises a series of blocks executed sequentially. Each block produces outputs and can refer to the outputs of previously executed blocks. Dust.tt app developers define each block’s specification and configuration parameters.

Example:

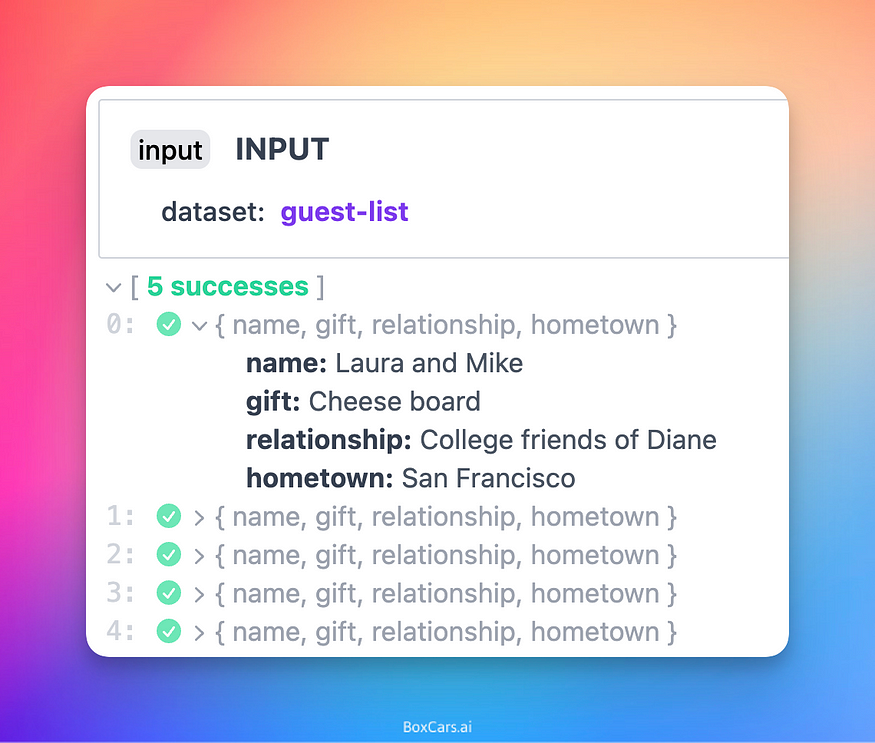

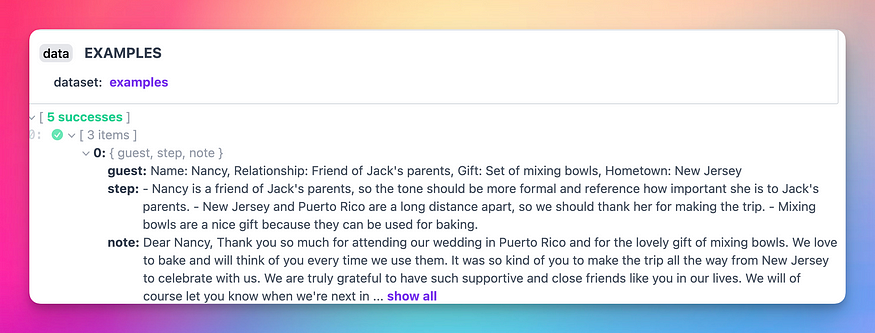

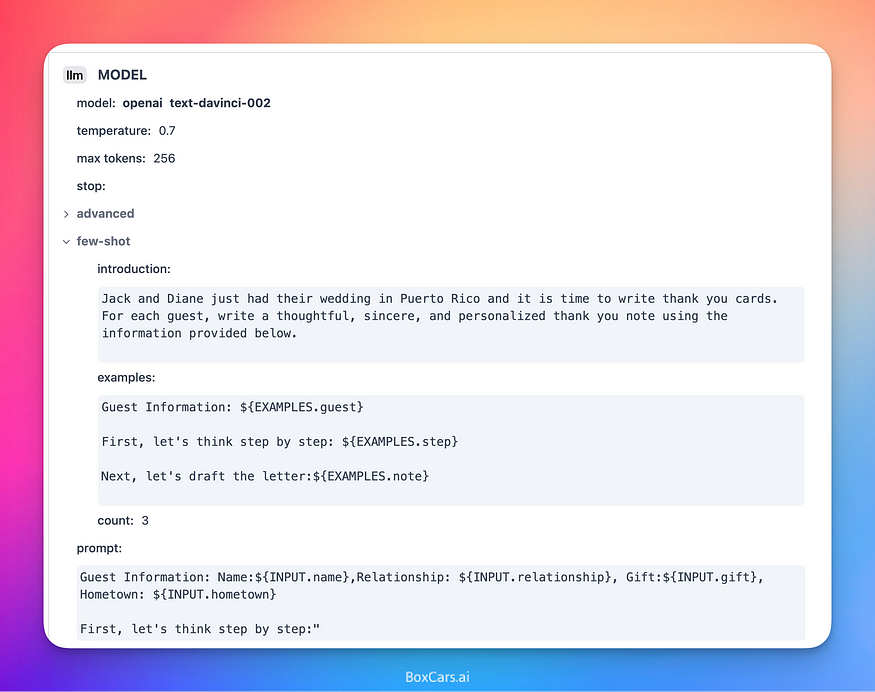

In this example (from the Dust docs), the Dust app uses AI to write personalized thank-you letters for wedding gifts:

First, we set up an input block with the guest list.

Then we set up a training block with examples.

Then we call the LLM with the input and the training examples.

And finally, we extract the results.
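The four steps above can be approximated in plain Python to show how sequential blocks refer to earlier outputs. This is only a sketch and not Dust's actual block syntax or API: the block names (`INPUT`, `EXAMPLES`, `MODEL`, `RESULT`), the `run_app` helper, the sample guest data, and the canned `fake_llm` completion are all made up for illustration.

```python
from typing import Callable

# A block is a named function that receives the outputs of all earlier blocks.
Block = tuple[str, Callable[[dict], object]]


def fake_llm(prompt: str) -> str:
    """Placeholder for a model call; returns a canned completion."""
    return "Completion: Thank you so much for the gift!"


def run_app(blocks: list[Block]) -> dict:
    """Execute blocks in order, storing each output under the block's name."""
    outputs: dict = {}
    for name, fn in blocks:
        outputs[name] = fn(outputs)
    return outputs


def build_prompt(out: dict) -> str:
    """Combine the few-shot examples with the current guest into one prompt."""
    shots = "\n".join(f"{g} gave {gift}: {note}" for g, gift, note in out["EXAMPLES"])
    guest = out["INPUT"]
    return f"{shots}\n{guest['name']} gave {guest['gift']}:"


app: list[Block] = [
    ("INPUT", lambda out: {"name": "Ana", "gift": "a teapot"}),
    ("EXAMPLES", lambda out: [("Bo", "a vase", "Thank you for the lovely vase!")]),
    ("MODEL", lambda out: fake_llm(build_prompt(out))),
    ("RESULT", lambda out: out["MODEL"].removeprefix("Completion: ")),
]

outputs = run_app(app)
print(outputs["RESULT"])
```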

LangChain

LangChain is an open-source Python library that allows developers to build AI-powered applications using large language models (LLMs) in a composable manner. With LangChain, you can create chatbots, question-answering systems, and other AI-powered agents.

Key Concept:

Developers use “chains” to connect components to build their AI applications. The library provides a set of building blocks, including input/output, processing, and utility blocks, that can be combined to create a custom chain.

Example:

In this example (from their docs), the code asks an LLM to name a company that makes a product, and then it asks the LLM to write a catchphrase for the company.

```python
# Requires the OPENAI_API_KEY environment variable to be set.
from langchain.prompts import PromptTemplate
from langchain.llms import OpenAI
from langchain.chains import LLMChain
from langchain.chains import SimpleSequentialChain

llm = OpenAI(temperature=0.9)
prompt = PromptTemplate(
    input_variables=["product"],
    template="What is a good name for a company that makes {product}?",
)
chain = LLMChain(llm=llm, prompt=prompt)

# Run the chain only specifying the input variable.
print(chain.run("colorful socks"))

second_prompt = PromptTemplate(
    input_variables=["company_name"],
    template="Write a catchphrase for the following company: {company_name}",
)
chain_two = LLMChain(llm=llm, prompt=second_prompt)

# The output of the first chain becomes the input of the second.
overall_chain = SimpleSequentialChain(chains=[chain, chain_two], verbose=True)

# Run the chain specifying only the input variable for the first chain.
catchphrase = overall_chain.run("colorful socks")
print(catchphrase)
```

LangChain also has agents. An agent enables you to dynamically build chains based on user input and the tools you provide.
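To make the agent idea concrete, here is a minimal sketch of a tool-selection loop in plain Python. It is not how LangChain implements agents: the `scripted_policy` function stands in for the LLM's decision step, `search` returns a canned value instead of calling a search API, and all names here are hypothetical.

```python
import ast
import operator
from typing import Callable

# Arithmetic operators the calculator tool is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def calculator(expression: str) -> str:
    """Evaluate a plain arithmetic expression without using eval()."""

    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression}")

    return str(walk(ast.parse(expression, mode="eval").body))


def search(query: str) -> str:
    """Stand-in for a search tool; returns a canned fact."""
    return "25"


TOOLS: dict[str, Callable[[str], str]] = {"search": search, "calculator": calculator}


def scripted_policy(question: str, observations: list[str]) -> tuple[str, str]:
    """Plays the role of the LLM: picks the next (tool, input) or finishes."""
    if not observations:
        return "search", "age of the person in the question"
    if len(observations) == 1:
        return "calculator", f"{observations[0]} ** 0.5"
    return "finish", observations[-1]


def run_agent(question: str, max_steps: int = 5) -> str:
    """Loop: ask the policy for an action, run the tool, record the observation."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, action_input = scripted_policy(question, observations)
        if action == "finish":
            return action_input
        observations.append(TOOLS[action](action_input))
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is the person's age raised to the 0.5 power?")
print(answer)
```

In a real agent, the policy step would be a model call whose text output is parsed into a tool name and input; the surrounding loop keeps the same shape.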

In the code below (from the LangChain docs), an agent is initialized and given the tools for searching and running mathematical operations.

```python
# Requires the OPENAI_API_KEY and SERPAPI_API_KEY environment variables.
from langchain.agents import load_tools
from langchain.agents import initialize_agent
from langchain.llms import OpenAI

llm = OpenAI(temperature=0)
tools = load_tools(["serpapi", "llm-math"], llm=llm)
agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")
```

```
"Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078."
```

LangChain has become popular and now boasts a large number of integrations. A new project is underway to port it to TypeScript, making it possible to build AI-powered JavaScript apps.

Some currently available integrations are OpenAI, Cohere, GooseAI, Hugging Face Hub, Petals, CerebriumAI, PromptLayer, SerpAPI, GoogleSearchAPI, WolframAlphaAPI, NatBot, Wikipedia, Elasticsearch, FAISS, Manifest, OpenSearch, DeepLake.

BoxCars

Disclosure: I am working on the BoxCars open-source project.

BoxCars is a LangChain-inspired open-source gem that simplifies adding AI functionality to Ruby on Rails apps.

Key Concept:

In BoxCars, a train comprises an engine (LLM) and boxcars (tools). The train coordinates calls to the LLM using the tools specified. For example, the following code uses an LLM, Google search, and a calculator.

```ruby
require "boxcars"

# Tools the train may use: a calculator and a Google search wrapper.
boxcars = [Boxcars::Calculator.new, Boxcars::Serp.new]
train = Boxcars.train.new(boxcars: boxcars)
puts train.run "What is pi times the square root of the average temperature in Austin TX in January?"
```

BoxCars is designed with the same philosophy as Ruby on Rails. It includes an ActiveRecord BoxCar, which makes it easy to integrate natively with Rails apps. In the example below, the ActiveRecord BoxCar is set up with models (Ticket, User, Comment) and then asked questions across the models. The LLM produces ActiveRecord code that returns the results.

```ruby
helpdesk = Boxcars::ActiveRecord.new(name: 'helpdesk', models: [Ticket, User, Comment])
helpdesk.run "how many comments do we have on open tickets?"
```

```
> Entering helpdesk#run
how many comments do we have on open tickets?
Comment.where(ticket: Ticket.where(status: 0)).count
Answer: 4
< Exiting helpdesk#run
"Answer: 4"
```
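The same pattern (a model turns a question into a query, and the application runs it) can be sketched in Python with the standard-library `sqlite3` module. This is an illustration only, not how BoxCars works internally: `fake_llm_to_sql` returns a hard-coded query in place of a model call, the table layout and sample rows are invented, and `status = 0` is assumed to mean "open", mirroring the query in the output above.

```python
import sqlite3


def fake_llm_to_sql(question: str) -> str:
    """Placeholder for the model call that translates a question into a query."""
    return (
        "SELECT COUNT(*) FROM comments "
        "JOIN tickets ON tickets.id = comments.ticket_id "
        "WHERE tickets.status = 0"
    )


def answer_question(conn: sqlite3.Connection, question: str) -> str:
    sql = fake_llm_to_sql(question)
    # Guardrail: only run read-only statements produced by the model.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("refusing to run a non-SELECT statement")
    (count,) = conn.execute(sql).fetchone()
    return f"Answer: {count}"


# In-memory database with two open tickets (status 0) and one closed ticket.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE tickets (id INTEGER PRIMARY KEY, status INTEGER);
    CREATE TABLE comments (id INTEGER PRIMARY KEY, ticket_id INTEGER);
    INSERT INTO tickets (id, status) VALUES (1, 0), (2, 0), (3, 1);
    INSERT INTO comments (ticket_id) VALUES (1), (1), (1), (2), (3);
    """
)

reply = answer_question(conn, "how many comments do we have on open tickets?")
print(reply)
```

Restricting generated statements to `SELECT` is one simple precaution when executing model-written queries; a production setup would likely need stricter controls, such as a read-only database user.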

It’s still early

These frameworks are less than a year old and evolving rapidly; undoubtedly, there will be more in the future. The good news is that if you’re considering adding AI features to your apps, help is on the way.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.