Centroid Neural Network for Clustering with Numpy

Last Updated on January 6, 2023 by Editorial Team

Author(s): LA Tran

Deep Learning

Let’s elevate potential that rarely gets much attention

Centroid neural network (CentNN) is an efficient and stable clustering algorithm that has been successfully applied to numerous problems. CentNN does not require a pre-determined learning coefficient, yet it yields clustering results competitive with K-means clustering and the Self-Organizing Map (SOM), whose results depend heavily on their initial parameters. If you are not yet familiar with this algorithm, you are welcome to read my explanation with visual examples here. Now, let’s make CentNN clear with a few lines of code.

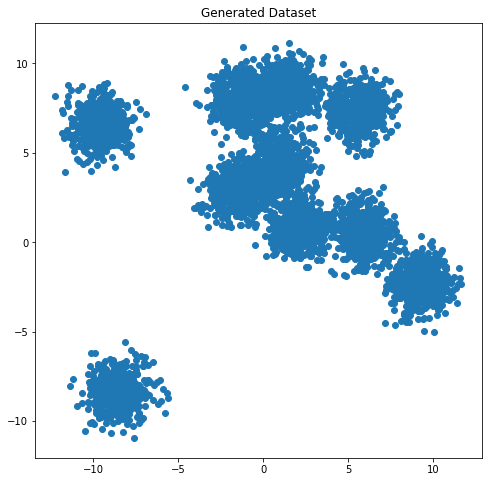

I chose 2-D data clustering as the example in this post because it is straightforward to understand and visualize, which makes it an effective tool for explaining clustering algorithms.

First, let’s import the necessary libraries and generate a dataset with 10 centers.
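The original code for this step is not reproduced here. As a rough stand-in, the NumPy-only sketch below draws 2-D Gaussian blobs around 10 randomly placed centers. The helper `make_blobs_2d` and its parameters (`std`, `box`, `seed`) are hypothetical, and the author’s dataset may have been generated differently.

```python
import numpy as np


def make_blobs_2d(n_samples=1000, n_centers=10, std=1.0, box=(-10.0, 10.0), seed=0):
    """Hypothetical helper: draw 2-D points from n_centers isotropic Gaussian blobs."""
    rng = np.random.default_rng(seed)
    # Place blob centers uniformly inside the square [box[0], box[1])^2.
    centers = rng.uniform(box[0], box[1], size=(n_centers, 2))
    # Assign samples to blobs round-robin so every blob gets roughly equal size.
    labels = np.arange(n_samples) % n_centers
    # Scatter each sample around its blob center with standard deviation `std`.
    X = centers[labels] + rng.normal(scale=std, size=(n_samples, 2))
    return X, labels, centers


X, y_true, true_centers = make_blobs_2d(n_samples=1000, n_centers=10)
print(X.shape)  # (1000, 2)
```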

Next come some subroutines prepared for the implementation; everything is available on my GitHub:
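The author’s subroutines live in the linked repository. For readers following along without it, here is a hypothetical set of NumPy helpers that a CentNN implementation might need: pairwise squared distances, the winner lookup for a single point, and the total squared error of each cluster. The names `squared_distances`, `find_winner`, and `cluster_errors` are illustrative, not the repository’s API.

```python
import numpy as np


def squared_distances(X, W):
    """Squared Euclidean distances between points X (n, d) and weights W (k, d)."""
    diff = X[:, None, :] - W[None, :, :]  # shape (n, k, d) via broadcasting
    return np.sum(diff ** 2, axis=2)      # shape (n, k)


def find_winner(x, W):
    """Index of the weight vector closest to a single point x of shape (d,)."""
    return int(np.argmin(np.sum((W - x) ** 2, axis=1)))


def cluster_errors(X, W, labels):
    """Sum of squared distances from each cluster's points to its weight."""
    errors = np.zeros(len(W))
    per_point = np.sum((X - W[labels]) ** 2, axis=1)
    np.add.at(errors, labels, per_point)  # accumulate per-point errors by cluster
    return errors
```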

Again, I assume you already understand this algorithm. If not, I highly recommend reading the theory before going further; you can check my explanation of this algorithm here.

Now, let’s start training the algorithm:

Step 1:

Compute the centroid c of all the data, then split c into two weights, w1 and w2, by perturbing it with a small Ɛ.

Here are the 2 weights:

[0.43965411 2.88785116]

[0.33965411 2.78785116]

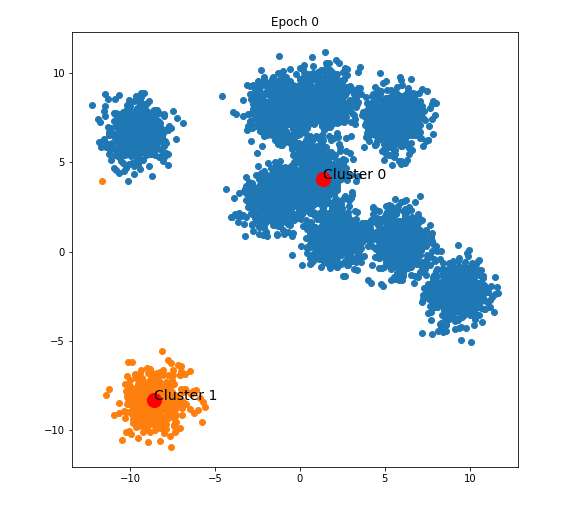
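A minimal sketch of Step 1, assuming the split is w1 = c + Ɛ and w2 = c − Ɛ applied to every coordinate. With Ɛ = 0.05 the two weights differ by 0.1 in each coordinate, which matches the gap between the two weights printed above, but the post does not state the exact Ɛ or split rule used. `init_weights` is a hypothetical name.

```python
import numpy as np


def init_weights(X, epsilon=0.05):
    """Step 1 sketch: split the centroid of all data into two weights.

    Assumption: w1 = c + epsilon and w2 = c - epsilon, element-wise.
    """
    c = X.mean(axis=0)  # centroid of the whole dataset
    w1 = c + epsilon
    w2 = c - epsilon
    return np.vstack([w1, w2])


X_demo = np.array([[0.0, 0.0], [2.0, 4.0]])
W = init_weights(X_demo)
print(W)
```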

Step 2:

Find the winner neuron (the weight closest to x) for each data point x in X.
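In epoch 0 every point receives a winner for the first time, so there are no losers yet and only winners are updated. The sketch below assumes the CentNN running-mean rule, w_j ← w_j + (x − w_j)/(N_j + 1) followed by N_j ← N_j + 1, where N_j counts the points neuron j has won; this keeps each weight equal to the mean of its points. `epoch_zero` is a hypothetical helper, and the small input below is illustrative data, not the post’s dataset.

```python
import numpy as np


def epoch_zero(X, W):
    """Epoch-0 sketch: give every point its first winner and update only winners.

    Assumed rule: w_j <- w_j + (x - w_j) / (N_j + 1), then N_j <- N_j + 1,
    which keeps each weight equal to the mean of the points it has won.
    """
    W = np.array(W, dtype=float)          # work on a copy of the weights
    counts = np.zeros(len(W), dtype=int)  # N_j for each neuron
    labels = np.empty(len(X), dtype=int)  # winner index for each point
    for n, x in enumerate(np.asarray(X, dtype=float)):
        j = int(np.argmin(np.sum((W - x) ** 2, axis=1)))  # closest weight wins
        W[j] += (x - W[j]) / (counts[j] + 1)
        counts[j] += 1
        labels[n] = j
    return W, counts, labels


X = np.array([[0.0, 0.0], [0.2, 0.0], [10.0, 10.0], [10.2, 10.0]])
W0 = np.array([[1.0, 1.0], [9.0, 9.0]])
W1, counts, labels = epoch_zero(X, W0)
print(W1, counts, labels)
```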

The result after epoch 0:

Step 3:

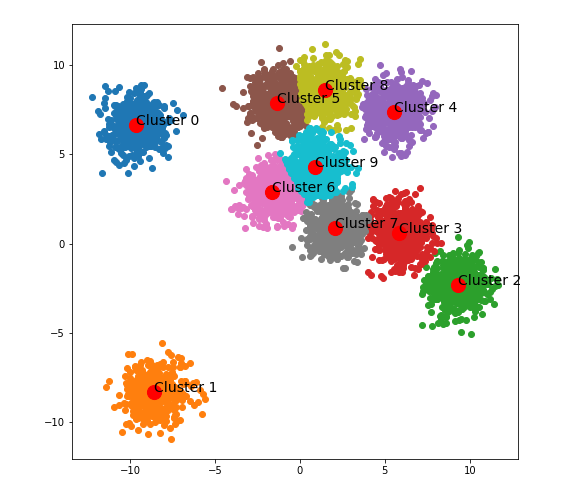

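From epoch 1 onward, keep finding the winner neuron for every data point and updating the winner and loser neurons (the loser being the neuron that won that point in the previous epoch). Whenever no data point changes its winner, a new neuron is added by splitting an existing one, and training continues until the desired number of clusters is reached.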
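Putting the steps together, here is a compact CentNN sketch under stated assumptions. When a point x moves from loser i to winner j, the winner gets the running-mean update and the loser gets the inverse update w_i ← w_i − (x − w_i)/(N_i − 1), so both weights remain exact cluster means. When an epoch ends with no reassignments and there are fewer than `n_clusters` neurons, the sketch adds a neuron at w_k + Ɛ, where k is the neuron with the largest total squared error. That choice of k, the value of Ɛ, and the `max_epochs` safeguard are assumptions, not details from the post; `centnn` is a hypothetical name, and the usage data is synthetic.

```python
import numpy as np


def centnn(X, n_clusters, epsilon=0.05, max_epochs=1000):
    """Minimal CentNN sketch; epsilon, the split rule, and max_epochs are assumptions."""
    X = np.asarray(X, dtype=float)
    c = X.mean(axis=0)
    # Step 1: split the global centroid into two weights (assumed +/- epsilon).
    W = np.vstack([c + epsilon, c - epsilon])
    counts = np.zeros(2, dtype=int)  # N_j: number of points neuron j owns
    labels = np.full(len(X), -1)     # -1 means "no winner yet" (epoch 0)
    n_epochs = 0

    for _ in range(max_epochs):
        n_epochs += 1
        changed = False
        for n, x in enumerate(X):
            j = int(np.argmin(np.sum((W - x) ** 2, axis=1)))  # current winner
            i = int(labels[n])                                 # previous winner
            if i == j:
                continue
            changed = True
            # Winner absorbs x (running-mean update).
            W[j] += (x - W[j]) / (counts[j] + 1)
            counts[j] += 1
            if i >= 0:
                # Loser releases x (inverse running-mean update). If x was its
                # only point, the weight is left where it is (assumed guard).
                if counts[i] > 1:
                    W[i] -= (x - W[i]) / (counts[i] - 1)
                counts[i] -= 1
            labels[n] = j
        if not changed:
            if len(W) >= n_clusters:
                break  # stable and enough neurons: stop
            # Assumption: split the neuron with the largest total squared error.
            errors = np.zeros(len(W))
            np.add.at(errors, labels, np.sum((X - W[labels]) ** 2, axis=1))
            k = int(np.argmax(errors))
            W = np.vstack([W, W[k] + epsilon])
            counts = np.append(counts, 0)
    return W, labels, n_epochs


# Usage on three tight, well-separated blobs (synthetic data for illustration).
rng = np.random.default_rng(0)
true_centers = np.array([[0.0, 0.0], [4.0, 4.0], [40.0, 40.0]])
X = np.vstack([t + rng.normal(scale=0.3, size=(100, 2)) for t in true_centers])
W, labels, n_epochs = centnn(X, n_clusters=3)
print(np.round(W, 2), n_epochs)
```

Skipping the loser update when a neuron gives up its last point avoids a division by zero; that guard is a choice of this sketch rather than something the post specifies.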

The algorithm stops after 81 epochs for the example dataset:

Final clustering result:

Final centroids:

[-9.66726188 6.62956078]

[-8.57296482 -8.31582399]

[ 9.30888233 -2.32652503]

[5.82090263 0.57715316]

[5.55404696 7.36294438]

[-1.32316256 7.86651609]

[-1.63557118 2.87845278]

[2.08983389 0.85729017]

[1.47692106 8.56662456]

[0.90838486 4.30719839]
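Once training has finished, the final centroids are all that is needed to label data, including points not seen during training: each point goes to its nearest centroid. A small sketch using the centroids listed above; `assign_clusters` is a hypothetical helper name and the query points are made up for illustration.

```python
import numpy as np

# Final centroids as listed above (one row per cluster).
centroids = np.array([
    [-9.66726188, 6.62956078],
    [-8.57296482, -8.31582399],
    [9.30888233, -2.32652503],
    [5.82090263, 0.57715316],
    [5.55404696, 7.36294438],
    [-1.32316256, 7.86651609],
    [-1.63557118, 2.87845278],
    [2.08983389, 0.85729017],
    [1.47692106, 8.56662456],
    [0.90838486, 4.30719839],
])


def assign_clusters(X, centroids):
    """Label each row of X with the index of its nearest centroid (squared Euclidean)."""
    d2 = np.sum((X[:, None, :] - centroids[None, :, :]) ** 2, axis=2)
    return np.argmin(d2, axis=1)


new_points = np.array([[-9.0, 6.0], [9.0, -2.0], [1.0, 4.0]])
print(assign_clusters(new_points, centroids))  # [0 2 9]
```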

In this post, I walked through an implementation of the Centroid Neural Network (CentNN) algorithm using Numpy. You can find my implementation of CentNN here; if you find it helpful, please do not hesitate to give it a star. You are also welcome to visit my Facebook page, Diving Into Machine Learning, where I share things about Machine Learning.

The next post in my CentNN series will combine vector quantization (block quantization) with CentNN for image compression.

That’s all for today. Thanks for your time!

References

[1] Centroid Neural Network: An Efficient and Stable Clustering Algorithm

[2] My implementation of CentNN with Numpy

Centroid Neural Network for Clustering with Numpy was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.