HydraSum: Disentangling Stylistic Features in Text Summarization… (Paper Review/Described)

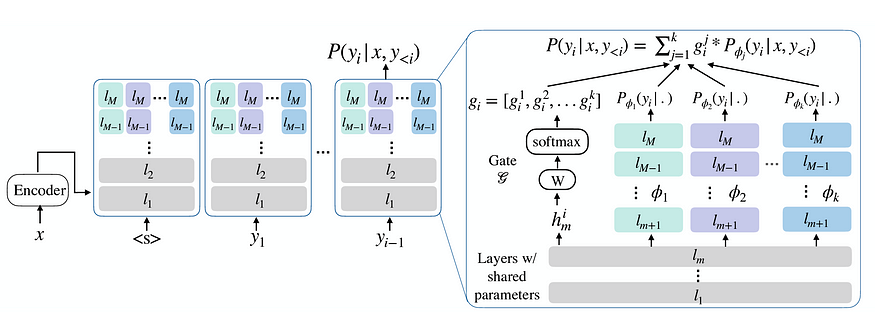

Author(s): Ala Alam Falaki. Originally published on Towards AI. Is it possible to train a model with a transformer architecture to learn to generate summaries in different styles? Figure 1. The multi-decoder architecture scheme. (Image from [1]) While it’s true that deep learning …

Exploring the Power of the Transformers Library for Natural Language Processing

Author(s): Rafay Qayyum. Originally published on Towards AI. Natural language processing (NLP) is a branch of Artificial Intelligence that deals with giving computers the ability to understand text and spoken words in the same way human beings can. NLP has made significant advancements …

CompressedBART: Fine-Tuning for Summarization through Latent Space Compression (Paper Review/Described)

Author(s): Ala Alam Falaki. Originally published on Towards AI. Paper title: A Robust Approach to Fine-tune Pre-trained Transformer-based Models for Text Summarization through Latent Space Compression. “Can we compress a pre-trained encoder while keeping its language generation abilities?” This is the main question …