Underwater Trash Detection using Opensource Monk Toolkit

Last Updated on July 19, 2023 by Editorial Team

Author(s): Abhishek Annamraju

Originally published on Towards AI.


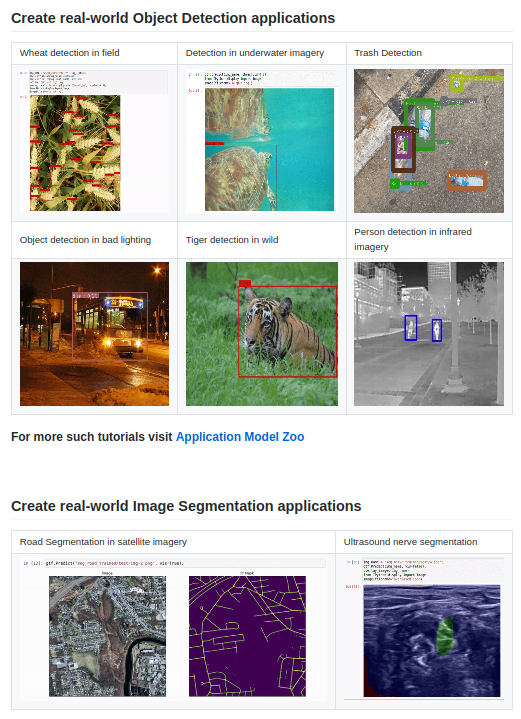

The entire code for this application is available in the Monk Object Detection Library’s Application Model Zoo.

Introduction

Underwater waste is a huge environmental problem that drastically affects aquatic habitats. Marine debris includes plastic, non-biodegradable industrial waste, sewage sludge, radioactive material dumps, etc.

According to statistics published by Condor Ferries:

★ More than 100K marine animals die due to plastic waste

★ An estimated 5.25 trillion pieces of plastic are in our oceans

★ 70% of waste debris sinks to the seabed, around 15% floats, and the rest washes ashore

The Great Pacific Garbage Patch, also known as the Pacific trash vortex, spans around 617K square miles between Hawaii and California, and it is still only a small part of all marine pollution.

Many initiatives have been launched to tackle this issue, such as:

▹ The OceanCleanUp Project

▹ Clean Ocean Project

▹ Seabin Project

… And many more!

A crucial part of these projects is the use of robots:

★ to clean up larger areas in less time than manual cleanup drives

★ to access areas that human divers cannot reach

A critical capability for these robots is identifying different objects and acting accordingly, and this is where deep learning and machine vision come in!

About the Dataset and the Library

Let’s dive in and make a small contribution, as deep learning engineers, toward making the world a better place.

To create the detector, we used the Trash-ICRA19 dataset:

* It contains 5K+ training images and 1K+ test images

* The data was sourced from the J-EDI dataset of marine debris

* The dataset has bounding-box annotations for trash as well as marine life (for simplicity, we train only on the trash data)

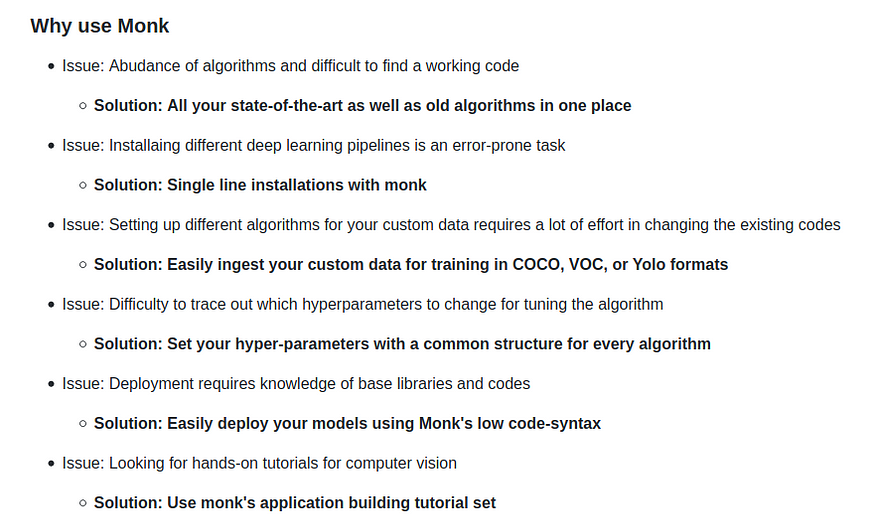

For training and inference, we use the Monk Object Detection Library:

* It serves as a low-code, easily installable wrapper over major object detection algorithms

* Of the 15+ pipelines it supports, we chose MMdetection

Monk libraries make life easier for developers with:

★ One-step installation

★ Low-code syntax: train in fewer than 10 lines of code

★ Exported models for easy inference

★ Easy syntax for ingesting custom data into any object detection pipeline, without the hassle of changing code and configuration files to plug in a custom training dataset

Installation

Installation is simple:

* Clone the library

* Run the installation script

Support is available for:

▹ Python — 3.6

▹ Cuda — 9.0, 9.2, 10.0, 10.1, 10.2

▹ Runs on Colab too!
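Running a quick pre-flight check against the support matrix above before the installation script can save you a failed install. The sketch below is not part of Monk. `is_supported` is a hypothetical helper, and you pass it the CUDA version your own machine reports, for example from `nvcc --version`.

```python
import sys

# Support matrix as listed in the article (Python 3.6; CUDA 9.0 to 10.2).
SUPPORTED_PYTHON = {(3, 6)}
SUPPORTED_CUDA = {"9.0", "9.2", "10.0", "10.1", "10.2"}


def is_supported(python_version, cuda_version):
    """Return True if the Python (major, minor) pair and the CUDA version string are both listed."""
    return tuple(python_version[:2]) in SUPPORTED_PYTHON and cuda_version in SUPPORTED_CUDA


# Placeholder CUDA version: replace it with the one reported on your machine.
print(is_supported(sys.version_info, "10.1"))
```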

Data Preparation

The training dataset is labeled in Pascal VOC format (XML files), but the training engine requires the data to be in COCO format.

Code for the entire process, from downloading to formatting the dataset, is available in this Jupyter Notebook.
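The notebook's conversion code is not reproduced here. The function below is a minimal sketch of what a Pascal VOC to COCO conversion involves, using only the standard library. It reads VOC XML annotations and emits a COCO-style dictionary. It can optionally keep only selected classes, which mirrors the choice to train on trash only. The main change is in the box format: VOC stores corner coordinates (xmin, ymin, xmax, ymax), while COCO uses [x, y, width, height]. The name `voc_to_coco`, the `keep_classes` parameter, and the class names in the sample are illustrative assumptions. They are not the dataset's actual label set or the notebook's code.

```python
import json
import xml.etree.ElementTree as ET


def voc_to_coco(xml_strings, keep_classes=None):
    """Convert Pascal VOC XML annotations (given as strings) into a COCO-style dict.

    keep_classes: optional set of class names to keep; other objects are dropped.
    """
    images, annotations, categories = [], [], {}
    ann_id = 1
    for img_id, xml_text in enumerate(xml_strings, start=1):
        root = ET.fromstring(xml_text)
        size = root.find("size")
        images.append({
            "id": img_id,
            "file_name": root.findtext("filename"),
            "width": int(size.findtext("width")),
            "height": int(size.findtext("height")),
        })
        for obj in root.iter("object"):
            name = obj.findtext("name")
            if keep_classes is not None and name not in keep_classes:
                continue
            # Category ids are assigned in order of first appearance, starting at 1.
            cat_id = categories.setdefault(name, len(categories) + 1)
            box = obj.find("bndbox")
            xmin, ymin, xmax, ymax = (float(box.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
            w, h = xmax - xmin, ymax - ymin
            annotations.append({
                "id": ann_id,
                "image_id": img_id,
                "category_id": cat_id,
                "bbox": [xmin, ymin, w, h],  # COCO boxes are [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    return {
        "images": images,
        "annotations": annotations,
        "categories": [{"id": i, "name": n} for n, i in categories.items()],
    }


# Hypothetical single-image annotation with one object to keep and one to drop.
sample = """<annotation><filename>img_0001.jpg</filename>
<size><width>480</width><height>270</height><depth>3</depth></size>
<object><name>plastic</name><bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>70</ymax></bndbox></object>
<object><name>bio</name><bndbox><xmin>200</xmin><ymin>50</ymin><xmax>260</xmax><ymax>90</ymax></bndbox></object>
</annotation>"""

coco = voc_to_coco([sample], keep_classes={"plastic"})
print(json.dumps(coco["annotations"]))
```

To use it on real data, read each XML file's text and pass the list in. Then write the result with `json.dump` to the annotation file your training engine expects.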

Training

Training with the original MMdetection library involves many changes to configuration files and to the arguments passed to the training scripts. With Monk, the same can be done with simple Pythonic syntax.

The complete code is available in this Jupyter notebook; the important steps are outlined below.

★ Step 1: Import and initiate the training engine

★ Step 2: Add the training and validation dataset paths to the detector

★ Step 3: Set the dataset parameters

* Note: a batch size of 8 requires 10 GB of RAM

★ Step 4: Select the model type from the 35 available model types

For this tutorial, we use retinanet_r50_fpn (more details about this model in Appendix 1)

★ Step 5: Set the learning rate and SGD optimizer parameters

★ Step 6: Set the number of epochs for training and validation

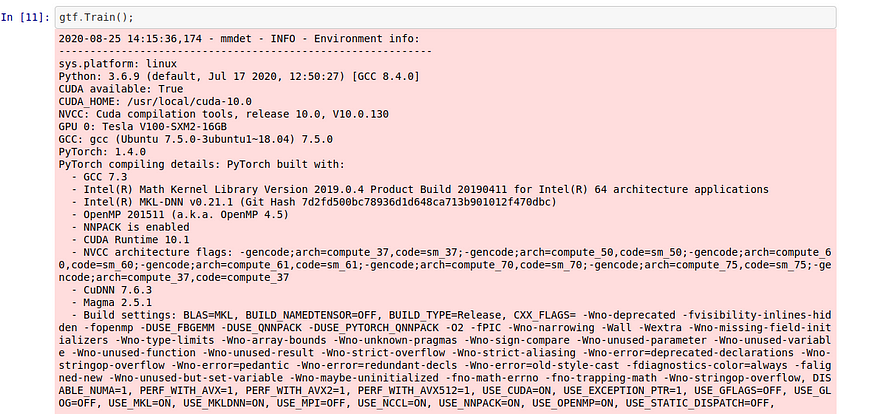

★ Start the training process!
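Step 5 sets the learning rate and SGD parameters. Monk's exact call is not shown here, but the update those parameters control can be sketched in plain Python. The sketch follows the SGD-with-momentum formulation of PyTorch's `torch.optim.SGD`, the framework MMdetection is built on, without dampening or Nesterov momentum. `sgd_momentum_step` is a hypothetical helper. The learning rate and momentum values in the example are illustrative, not the tutorial's settings.

```python
def sgd_momentum_step(params, grads, velocity, lr, momentum=0.9, weight_decay=0.0):
    """One SGD-with-momentum update on plain lists of floats.

    v <- momentum * v + (g + weight_decay * p)
    p <- p - lr * v
    """
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p       # L2 weight decay folded into the gradient
        v = momentum * v + g           # running (accumulated) update direction
        new_velocity.append(v)
        new_params.append(p - lr * v)  # step against the accumulated direction
    return new_params, new_velocity


# Minimize f(p) = p**2 starting from p = 1.0 for three steps.
params, velocity = [1.0], [0.0]
for _ in range(3):
    grads = [2 * p for p in params]    # gradient of f(p) = p**2
    params, velocity = sgd_momentum_step(params, grads, velocity, lr=0.1)
print(params)
```

The momentum term keeps adding past gradients into `velocity`. As a result, the third step here is larger than plain SGD would take at the same point, which speeds progress along consistent gradient directions.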

Once the model is trained, we test it on sample images or videos.

Inference

Running inference with Monk is even simpler:

★ Step 1: Import and initiate the inference engine

★ Step 2: Load the trained model

★ Step 3: Run inference on an image
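Step 3 hides the post-processing that a RetinaNet-style detector applies to its raw output. The detector scores many candidate boxes, drops low-confidence ones, and removes near-duplicates with non-maximum suppression (NMS). The generic sketch below illustrates that idea. It does not reproduce Monk's or MMdetection's implementation. The threshold values and the `trash` label are illustrative assumptions.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(detections, score_thr=0.5, iou_thr=0.5):
    """Filter (box, score, label) detections by score, then apply greedy per-class NMS."""
    candidates = sorted((d for d in detections if d[1] >= score_thr),
                        key=lambda d: d[1], reverse=True)
    kept = []
    for box, score, label in candidates:
        # Keep a box unless it overlaps a higher-scoring kept box of the same class.
        if all(label != k_label or iou(box, k_box) < iou_thr for k_box, _, k_label in kept):
            kept.append((box, score, label))
    return kept


dets = [
    ((10, 10, 110, 60), 0.92, "trash"),
    ((12, 12, 108, 62), 0.85, "trash"),     # near-duplicate of the first box
    ((200, 40, 260, 90), 0.70, "trash"),
    ((300, 100, 340, 140), 0.20, "trash"),  # below the score threshold
]
print(postprocess(dets))
```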

On an Nvidia V100 GPU, the detector runs at 15 fps on average. To prune and optimize the model, it needs to be converted into a TensorRT engine; a tutorial on this will be released soon.
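An average frame rate like the 15 fps quoted above is the number of frames processed divided by the total processing time. So 15 fps corresponds to about 67 ms per frame. The sketch below measures this for any callable. `detect` is a hypothetical stand-in for the inference call from Step 3. It is assumed to return only once its results are ready: with asynchronous GPU execution, stopping the timer before results are synchronized would overstate the speed.

```python
import time


def average_fps(detect, frames, warmup=1):
    """Average frames per second of `detect` over `frames`, excluding warm-up calls.

    The first `warmup` frames are processed but not timed, because the first
    GPU calls often include one-off initialization costs.
    """
    frames = list(frames)
    for frame in frames[:warmup]:
        detect(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed if elapsed > 0 else float("inf")


def ms_per_frame(fps):
    """Convert a frame rate into per-frame latency in milliseconds."""
    return 1000.0 / fps


# Dummy detector that takes roughly 10 ms per frame.
print(f"{average_fps(lambda frame: time.sleep(0.01), range(6)):.1f} fps")
print(f"15 fps = {ms_per_frame(15):.1f} ms per frame")
```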

And done! The same engine can be deployed anywhere. Tutorials on deploying to Raspberry Pi and Nvidia Jetson Nano boards will be released soon.

You can also use the Monk Object Detection Library to experiment with other models, such as Cascade R-CNN or Faster R-CNN, or with other pipelines, such as the TensorFlow Object Detection API (v1.0 or v2.0) or YOLOv3.

Happy Coding!

Appendix — 1

About the RetinaNet architecture and training details

According to the Keras website, RetinaNet:

★ is a single-stage detector

★ uses a feature pyramid network to efficiently detect objects at multiple scales

★ introduces a new loss, the focal loss, to alleviate the extreme foreground-background class imbalance
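Here is the focal loss made concrete. For a predicted foreground probability p and label y, let p_t = p when y = 1 and p_t = 1 - p otherwise. The focal loss is then FL(p_t) = -α_t (1 - p_t)^γ log(p_t). In other words, it is cross-entropy scaled down for well-classified examples. The sketch below uses α = 0.25 and γ = 2, the values reported to work best in the RetinaNet paper. Whether this tutorial's Monk configuration uses the same values is an assumption.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss for a single prediction.

    p: predicted probability of the positive class (after a sigmoid).
    y: ground-truth label, 1 (object) or 0 (background).
    FL(p_t) = -alpha_t * (1 - p_t) ** gamma * log(p_t)
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def cross_entropy(p, y, eps=1e-12):
    """Plain binary cross-entropy, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, eps))


# Easy background anchor vs. hard positive anchor.
print(cross_entropy(0.1, 0), focal_loss(0.1, 0))
print(cross_entropy(0.1, 1), focal_loss(0.1, 1))
```

For an easy background anchor (p = 0.1, y = 0), the focal loss is about 133 times smaller than the cross-entropy. For a hard positive (p = 0.1, y = 1), it is only about 5 times smaller. The many easy background anchors therefore stop dominating training. In RetinaNet, this loss is summed over all anchors and normalized by the number of anchors assigned to ground-truth boxes.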

In this tutorial, we use a ResNet-50 model as the base network for extracting features from images.

More supporting literature on RetinaNet:

* Object Detection with RetinaNet

* The intuition behind RetinaNet

Additional details on training

* Features are extracted using ResNet-50 as the base model

* Focal loss is used for the classification branch of RetinaNet

* L1 loss is used for the bounding-box regression branch on top of the FPN

* SGD with momentum is used as the optimizer

* The learning rate was scheduled to decrease after every one-third of the total epochs
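The last training details, the box-regression loss and the learning-rate schedule, can be sketched as follows. The article does not say how much the learning rate is reduced. The decay factor of 0.1 below (a common choice for step schedules) and the base learning rate in the example are therefore assumptions. The L1 loss is simplified to a plain mean absolute error over one box's regression targets. `step_lr` and `l1_loss` are hypothetical helpers.

```python
def step_lr(base_lr, epoch, total_epochs, gamma=0.1):
    """Learning rate for a 0-indexed epoch when the rate is multiplied by `gamma`
    after each third of `total_epochs` (two reductions over the whole run)."""
    drops = min(3 * epoch // total_epochs, 2)
    return base_lr * gamma ** drops


def l1_loss(pred, target):
    """Mean absolute error between predicted and target box-regression values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


# Nine epochs: three at the base rate, three at 1/10, three at 1/100.
print([step_lr(0.01, e, 9) for e in range(9)])
print(l1_loss([0.5, -0.2, 0.1, 0.0], [0.4, 0.0, 0.1, 0.3]))
```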

Appendix — 2

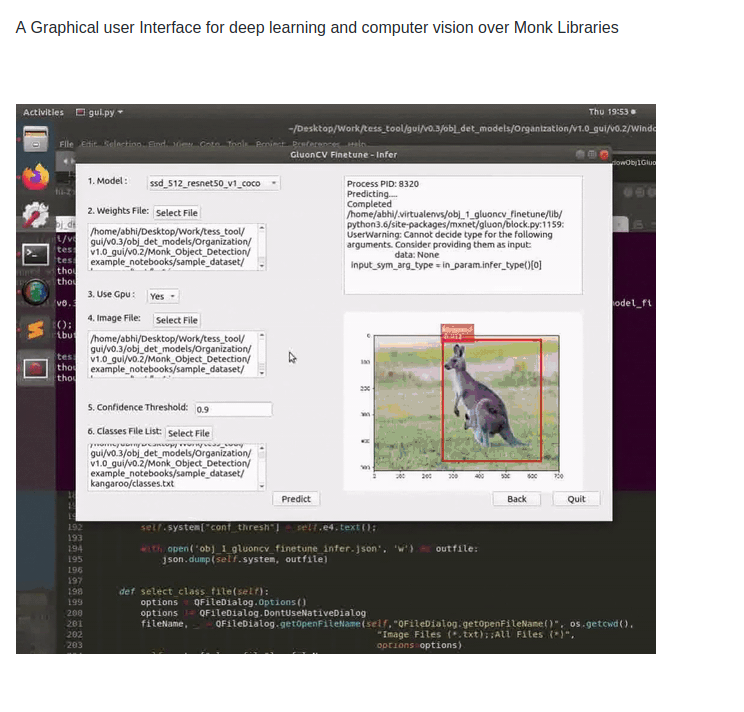

More Information on Monk Libraries

The full suite comprises three libraries:

★ Monk Image Classification

★ Monk Object Detection

★ Monk Studio


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.