The NLP Cypher | 03.28.21

Last Updated on July 24, 2023 by Editorial Team

Author(s): Ricky Costa

Originally published on Towards AI.

NATURAL LANGUAGE PROCESSING (NLP) WEEKLY NEWSLETTER


A Step Forward in Open Sourcing GPT-3

In the 02.21.21 newsletter, we highlighted EleutherAI’s ambitions for building an open-sourced version of the uber-large GPT-3 175B param model. This week, they released two versions, at 1.3B and 2.7B params, as a stepping stone towards paradise. Here’s how the current GPT models stack up. 👇

“The release includes:

The full modeling code, written in Mesh TensorFlow and designed to be run on TPUs.

Trained model weights.

Optimizer states, which allow you to continue training the model from where EleutherAI left off.

A Google Colab notebook that shows you how to use the code base to train, fine-tune, and sample from a model.”

Their notebook requires a Google Cloud Storage bucket to hold the data, since TPUs can’t read from local file systems. You can set up a free trial fairly easily; they provide a link in the notebook.

Colab:

Google Colaboratory


colab.research.google.com

Code:

EleutherAI/gpt-neo

An implementation of model & data parallel GPT2 & GPT3 -like models, with the ability to scale up to full GPT3 sizes…

github.com

Hacker Side Note:

Earlier this year, EleutherAI apparently suffered a DDoS attack. Connor Leahy, a co-founder, tweeted a visualization of abnormal traffic receiving a bunch of HTTP 403s on the Pile dataset. If you would like to donate to their cause (and towards secured hosting), go here: SITE.

Visualization of abnormal traffic

Millions of Translated Sentences in 188 Languages

Woah, that’s a lot of translated corpora. The Helsinki-NLP collection is a menacing set of monolingual data that includes:

“translations of Wikipedia, WikiSource, WikiBooks, WikiNews and WikiQuote (if available for the source language we translate from)”

Helsinki-NLP/Tatoeba-Challenge

Automatically translated data sets that can be used for data augmentation Translations have been done with models…

github.com

Backprop AI | Finetune and Deploy ML Models

The library can fine-tune models with one line of code.

Features:

- Conversational question answering in English

- Text Classification in 100+ languages

- Image Classification

- Text Vectorization in 50+ languages

- Image Vectorization

- Summarization in English

- Emotion detection in English

- Text Generation

backprop-ai/backprop

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with…

github.com

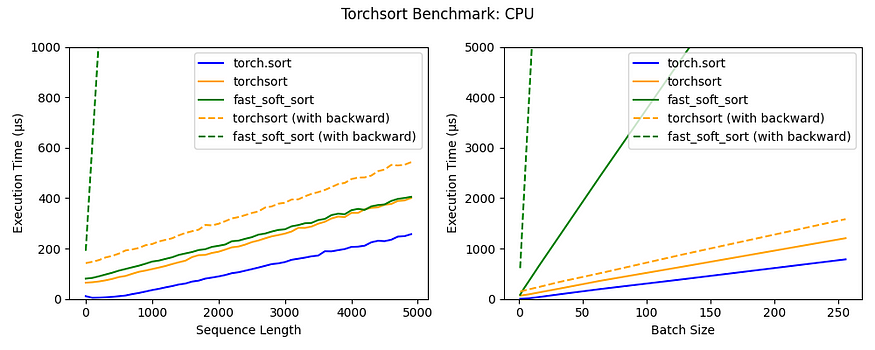

TorchSort

Mr. Koker hacked a Google library for sorting and ranking and converted it into PyTorch (faster than the original) 🙉. Areas where ranking is used: Spearman’s rank correlation coefficient, top-k accuracy, and normalized discounted cumulative gain (NDCG).
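To make two of those ranking metrics concrete, here is a minimal plain-Python sketch of Spearman's rank correlation and NDCG. This is not torchsort's API; the helper names (`ranks`, `spearman`, `ndcg`) are hypothetical, ties are ignored, and the ranks are "hard" (non-differentiable). NDCG here assumes the common linear-gain form of DCG.

```python
import math

def ranks(values):
    """Return 1-based ranks (smallest value gets rank 1). Ties are not handled."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def spearman(x, y):
    """Spearman's rho for tie-free inputs: 1 - 6 * sum(d^2) / (n * (n^2 - 1))."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n * n - 1))

def ndcg(relevance_in_predicted_order, k=None):
    """NDCG@k using linear gain: DCG = sum(rel_i / log2(i + 1)), i starting at 1."""
    rel = relevance_in_predicted_order[:k]
    ideal = sorted(relevance_in_predicted_order, reverse=True)[:k]
    dcg = sum(r / math.log2(i + 2) for i, r in enumerate(rel))
    idcg = sum(r / math.log2(i + 2) for i, r in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0
```

The `sorted` call is the catch: a hard rank is piecewise constant in its inputs, so it gives no useful gradient. That is the gap differentiable sorting and ranking aims to fill.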

Benchmarks:

teddykoker/torchsort

Fast, differentiable sorting and ranking in PyTorch. Pure PyTorch implementation of Fast Differentiable Sorting and…

github.com

Dive Into Graphs (DIG) | A New Graph Library

This new library helps in four research areas:

- Graph Generation

- Self-supervised Learning on Graphs

- Explainability of Graph Neural Networks

- Deep Learning on 3D Graphs

divelab/DIG

DIG: Dive into Graphs is a turnkey library for graph deep learning research. DIG: A Turnkey Library for Diving into…

github.com

Awesome Newspapers | A Possible Data Source?

A curated list of online newspapers covering 79 languages and 7,102 sources. The data hasn’t been scraped; the list just indexes the sources.

divkakwani/awesome-newspapers

A curated list of online newspapers covering 79 languages and 7102 sources. Purpose This list provides newspaper…

github.com

State of Search | The DeepSet Connection

DeepSet walks you down memory lane in open-domain QA/search. Beginning with the two-stage Retriever-Reader (ha! remember reading the Chen et al. 2017 paper?), then on to RAG, or generated responses (as opposed to extractive ones), and finally heading into summarization (using Pegasus) and their latest “Pipelines” initiative. They also discuss a future initiative of using a query classifier to decide which type of retrieval their software should use (dense vs. shallow). This is really cool because it shows their interest in using hierarchy in AI decision-making by using… AI. 👀
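As a rough illustration of the query-routing idea, here is a toy sketch in plain Python. It is not Haystack's API: the names `classify_query` and `route` are hypothetical, a hand-written heuristic stands in for a trained classifier, and the "keyword" branch assumes that "shallow" retrieval means keyword-style search.

```python
QUESTION_WORDS = {"who", "what", "when", "where", "why", "how", "which"}

def classify_query(query):
    """Label a query 'dense' if it looks like a natural-language question, else 'keyword'."""
    tokens = query.lower().rstrip("?").split()
    starts_with_question_word = bool(tokens) and tokens[0] in QUESTION_WORDS
    looks_like_question = query.strip().endswith("?") or starts_with_question_word
    return "dense" if looks_like_question else "keyword"

def route(query, retrievers):
    """Send the query to whichever retriever the classifier picks."""
    return retrievers[classify_query(query)](query)

# Hypothetical stand-ins for real retrievers.
retrievers = {
    "dense": lambda q: f"dense retriever handles: {q}",
    "keyword": lambda q: f"keyword retriever handles: {q}",
}
```

Calling `route("Who wrote Dune?", retrievers)` goes down the dense branch, while `route("dune author", retrievers)` goes down the keyword branch. In a real system the heuristic would presumably be replaced by a learned model.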

Haystack: The State of Search in 2021

How to build a semantic engine for a better search experience

medium.com

Stanford’s Ode to Peeps in the Intelligence Community to Adopt AI

An interesting 🧐 white paper from Stanford giving advice and warning to the US intelligence community about adopting AI and keeping up with the fast-moving field to stay competitive. They also recommend an open-source intelligence agency. 🧐

“…one Stanford study reported that a machine learning algorithm could count trucks transiting from China to North Korea on hundreds of satellite images 225 times faster than an experienced human imagery analyst — with the same accuracy.”

HAI_USIntelligence_FINAL.pdf


drive.google.com

Matrix Multiplication — Reaching N²

What will it take to multiply a pair of n-by-n matrices in only n² steps?

FYI, matrix multiplication is the engine of all deep neural networks. The latest improvement “shaves about one-hundred-thousandth off the exponent of the previous best mark.” Take that, Elon!
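For a sense of where the exponent comes from, here is a minimal sketch of the schoolbook algorithm in plain Python, instrumented to count scalar multiplications. The function name `naive_matmul` is just for illustration. Three nested loops over n give exactly n³ multiplications, i.e. an exponent of 3; the research in question is about pushing that exponent towards 2.

```python
def naive_matmul(a, b):
    """Multiply two n-by-n matrices (lists of lists) and count scalar multiplications."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    mults = 0
    for i in range(n):          # each row of a
        for j in range(n):      # each column of b
            for k in range(n):  # dot product of row i and column j
                c[i][j] += a[i][k] * b[k][j]
                mults += 1
    return c, mults

product, mults = naive_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

For the 2-by-2 case above, `mults` is 8 (2³), whereas an n² algorithm would need on the order of 4 steps.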

Matrix Multiplication Inches Closer to Mythic Goal

For computer scientists and mathematicians, opinions about “exponent two” boil down to a sense of how the world should…

www.quantamagazine.org

The Repo Cypher 👨‍💻

A collection of recently released repos that caught our 👁

GENRE (Generative Entity Retrieval)

GENRE uses a sequence-to-sequence approach to entity retrieval (e.g., linking), based on a fine-tuned BART architecture. Includes Fairseq and Hugging Face support.

facebookresearch/GENRE

The GENRE (Generative ENtity REtrieval) system as presented in Autoregressive Entity Retrieval implemented in pytorch…

github.com

Connected Papers 📈

Shadow GNN

A library for graph representation learning. It currently supports six different architectures: GCN, GraphSAGE, GAT, GIN, JK-Net and SGC.

facebookresearch/shaDow_GNN

Hanqing Zeng, Muhan Zhang, Yinglong Xia, Ajitesh Srivastava, Andrey Malevich, Rajgopal Kannan, Viktor Prasanna, Long…

github.com

Connected Papers 📈

Unicorn on Rainbow | A Commonsense Reasoning Benchmark

Rainbow brings together six pre-existing commonsense reasoning benchmarks: aNLI, Cosmos QA, HellaSWAG, Physical IQa, Social IQa, and WinoGrande. These commonsense reasoning benchmarks span both social and physical common sense.

allenai/rainbow

Neural models of common sense. This repository is for the paper: Unicorn on Rainbow: A Universal Commonsense Reasoning…

github.com

Connected Papers 📈

TAPAS [Extended Capabilities]

This recent paper describes an extension to Google’s TAPAS table parsing capabilities to open-domain QA!!

google-research/tapas

Code and checkpoints for training the transformer-based Table QA models introduced in the paper TAPAS: Weakly…

github.com

Connected Papers 📈

MMT-Retrieval: Image Retrieval and more using Multimodal Transformers

Library for using pre-trained multi-modal transformers like OSCAR, UNITER/VILLA or M3P (multilingual!) for image search and more.

UKPLab/MMT-Retrieval

This project provides an easy way to use the recent pre-trained multimodal Transformers like OSCAR, UNITER/ VILLA or…

github.com

Connected Papers 📈

AdaptSum: Towards Low-Resource Domain Adaptation for Abstractive Summarization

The first benchmark to simulate the low-resource domain adaptation setting for abstractive summarization systems. It combines existing datasets across six diverse domains (dialogue, email, movie review, debate, social media, and science) and, for each domain, reduces the number of training samples to a small quantity to create a low-resource scenario.

TysonYu/AdaptSum

Paper accepted at the NAACL-HLT 2021: AdaptSum: Towards Low-Resource Domain Adaptation for Abstractive Summarization …

github.com

Connected Papers 📈

CoCoA

CoCoA is a dialogue framework providing tools for data collection through a text-based chat interface and model development in PyTorch (largely based on OpenNMT).

stanfordnlp/cocoa

CoCoA is a dialogue framework written in Python, providing tools for data collection through a text-based chat…

github.com

Connected Papers 📈

Dataset of the Week: MasakhaNER

What is it?

A collection of 10 NER datasets for select African languages: Amharic, Hausa, Igbo, Kinyarwanda, Luganda, Luo, Naija Pidgin, Swahili, Wolof, and Yoruba. The repo also contains model training scripts.

Where is it?

masakhane-io/masakhane-ner

This repository contains the code for training NER models, scripts to analyze the NER model predictions and the NER…

github.com

Every Sunday we do a weekly round-up of NLP news and code drops from researchers around the world.

For complete coverage, follow our Twitter: @Quantum_Stat


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.