How Do Diffusion Models Work? Simple Explanation: No Mathematical Jargon, Promised!

Last Updated on June 3, 2024 by Editorial Team

Author(s): Suhaib Arshad

Originally published on Towards AI.

Background Knowledge

Essentially, there are 3 common types of generative models: Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and flow-based models. Although all three have proven themselves as high-quality image generators, each falls short in some respect. GANs suffer from unstable training, which makes them hard to converge, and from a lack of diversity in their outputs. VAEs face a significant tradeoff between how accurately they can recreate the input data and how well they can organize the data in the hidden (latent) space. Flow models need high computational power because they must use reversible steps to generate data.

How do diffusion models come into play?

Diffusion models emerged in response to these challenges.

In deep learning, diffusion models have overtaken earlier state-of-the-art generative frameworks such as GANs and VAEs on many image generation tasks. They offer the following advantages over the alternatives:

- They outperform GANs at producing high-quality, high-fidelity image samples.

- Their training process is very stable compared to that of GANs.

- They build on a probabilistic framework similar to that of VAEs, ensuring a structured latent space.

Real World Applications of Diffusion models

- Alongside ChatGPT, generative AI has seen a surge in development, and diffusion models are among its most visible results.

- Many published papers have demonstrated the capability of diffusion models, including work showing diffusion beating GANs on image synthesis. Some of the most popular diffusion models are DALL-E 3 by OpenAI, Stable Diffusion 3 by Stability AI, and Midjourney (the images below were generated using DALL-E 3).

- With the current wave of success, many AI enthusiasts may be interested in understanding the diffusion algorithm under the hood.

Here are the top 3 use cases where diffusion models can be used:

Image Generation: Diffusion models have a proven ability to generate high-quality images by starting from random noise and refining it iteratively.

Resolution Enhancer: These models can take a low-resolution image and turn it into a higher-resolution one.

Image Inpainting: An interesting use case in which a diffusion model repaints only the part of an image that we want to change or remove.

What Exactly are Diffusion Models?

The concept of the diffusion model is a little over 9 years old. It was introduced in the 2015 paper “Deep Unsupervised Learning using Nonequilibrium Thermodynamics” [1].

The name “diffusion” comes from an analogy with how particles change state, or “diffuse”, when in contact with a medium, similar to how molecules behave in different environments such as 🥶 a freezing space (solid state) or 💥 a boiling space (gaseous state).

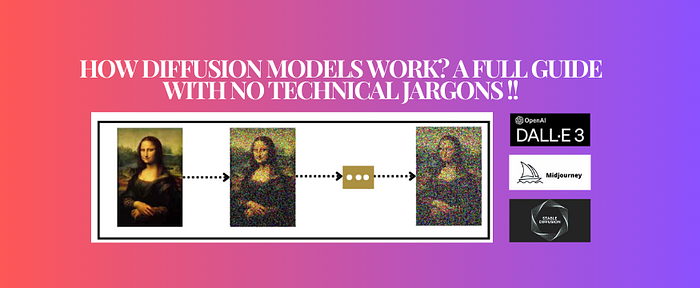

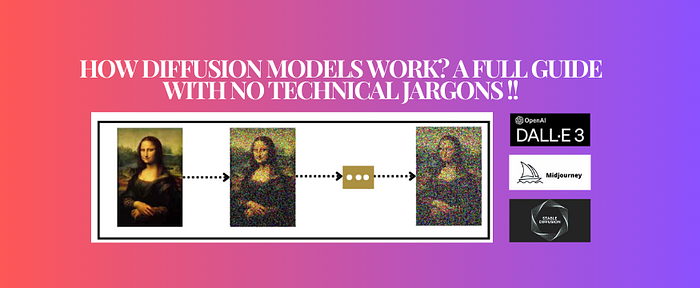

As in non-equilibrium statistical physics, the idea behind diffusion models in deep learning is to slowly and iteratively destroy the structure of a data distribution through a forward diffusion process, and then to learn to gradually reverse that process and restore the structure in the data. The result is an image-generating model with high diversity and high quality.

In other words, a diffusion model goes through a series of sequential steps that slowly add random noise to the data (an image), and then learns to reverse those steps to recover or generate image samples from the noise.

Forward diffusion process

Going beyond the definition, let me explain how the forward process works in simple words. Basically, every image follows some non-random distribution, which we don't know. The core idea is to destroy that distribution by sequentially adding noise, so that at the end we are left with pure noise.
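The idea of repeatedly mixing in a little noise can be sketched in a few lines of Python. This is only an illustration: the 4-pixel "image" and the constant noise rate `beta` are made-up assumptions, and real models vary the noise rate from step to step.

```python
import math
import random

def forward_step(x_prev, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    noise_scale = math.sqrt(beta)
    return [keep * v + noise_scale * rng.gauss(0.0, 1.0) for v in x_prev]

def signal_fraction(beta, steps):
    """Share of the original pixel value that survives after `steps` steps."""
    return math.sqrt(1.0 - beta) ** steps

rng = random.Random(0)
image = [0.9, -0.4, 0.1, 0.7]  # hypothetical 4-pixel "image"
beta = 0.02                    # assumed constant noise rate, for illustration only

x = image
for _ in range(500):
    x = forward_step(x, beta, rng)

print(signal_fraction(beta, 10))   # still close to 1: the image is mostly intact
print(signal_fraction(beta, 500))  # close to 0: what is left is essentially noise
```

After enough steps almost nothing of the original pixel values remains, which is exactly the "pure noise" end state described above.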

How do we add noise to the image?

We use something called a variance scheduler for adding noise to the data.

The scheduler controls the rate at which noise is added at each time step.

Its values can range from 0 to 1. They are usually kept low to prevent the variance from exploding. A 2020 paper published by UC Berkeley [2] uses a linear schedule, so the output looks like this:

However, a 2021 paper by OpenAI [3] argued that a linear schedule is inefficient for the process. As you can see from the figure above, the vast majority of the information is already lost about halfway through the total steps. The authors therefore came up with their own schedule, called a cosine schedule (Fig. 11). This change in how noise is scheduled reportedly helped reduce the number of steps to as few as 50.
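To make the difference between the two schedules concrete, here is a small sketch that computes how much of the original signal remains at each step. The linear endpoints (0.0001 to 0.02 over 1000 steps) and the cosine offset `s = 0.008` are the values commonly quoted for [2] and [3]; treat them as assumptions here, since the article does not state them.

```python
import math

def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    """Noise rates that grow by the same amount at every step."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def alpha_bar_from_betas(betas):
    """Running product of (1 - beta): signal variance left after each step."""
    out, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        out.append(running)
    return out

def cosine_alpha_bar(T, s=0.008):
    """Cosine schedule, defined directly through the remaining signal."""
    def f(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2
    return [f(t) / f(0) for t in range(1, T + 1)]

T = 1000
linear = alpha_bar_from_betas(linear_betas(T))
cosine = cosine_alpha_bar(T)

# Compare how much signal is left a quarter, half, and three quarters of the way in.
for t in (250, 500, 750):
    print(t, round(linear[t - 1], 3), round(cosine[t - 1], 3))
```

At the halfway point the linear schedule has kept well under a tenth of the signal variance, while the cosine schedule still keeps roughly half, which is the inefficiency the cosine schedule was designed to address.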

Reverse diffusion process

The whole objective of the reverse diffusion process is to retrieve images from pure noise (also called denoising). For that, we use neural networks. As with GANs, we train a neural network to produce images, much as the generator does in a GAN. The difference is that instead of doing all the work in one step, as a GAN does, we split the process into multiple timesteps and remove the noise gradually. Although this makes generation slower, the model is easier to train.

A common misconception about the reverse diffusion process is that the model predicts only the small amount of noise that separates one timestep from the previous one. In fact, the diffusion model predicts the complete noise present at a given timestep. Each time we predict the noise with the neural network, we subtract only part of it and move on to the next step. This means that at timestep t=1000 the model tries to predict the entire noise whose removal would take us to t=0, not just to t=999.

There are 2 key takeaways from this example:

- You can use fewer timesteps in your schedule at inference time, after the model is trained.

- You can use a different schedule at inference time.

The first should be obvious: when your network's noise prediction is already quite good, you can make larger “jumps”. The jumps are larger because the β range stays the same and only the slope changes. The second is less straightforward, but you can use different schedules with different slopes (e.g. you can train with a linear schedule and run inference with a cosine schedule).
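Here is a sketch of why fewer steps can work when the model predicts the entire noise. Two things in it are assumptions rather than part of the article: the update rule is the deterministic one from DDIM (a sampler the article does not name), and `oracle` is a hypothetical stand-in for a perfectly trained network that cheats by knowing the clean image.

```python
import math

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] = signal variance left at timestep t; alpha_bar[0] = 1 is the clean image.
alpha_bar = [1.0]
for b in betas:
    alpha_bar.append(alpha_bar[-1] * (1.0 - b))

def estimate_x0(x_t, eps_pred, t):
    """Remove the entire predicted noise to get an estimate of the clean image."""
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(x_t, eps_pred)]

def jump(x_t, eps_pred, t, s):
    """Deterministic DDIM-style move from timestep t to an earlier timestep s."""
    x0_hat = estimate_x0(x_t, eps_pred, t)
    a_s = alpha_bar[s]
    return [math.sqrt(a_s) * x0 + math.sqrt(1.0 - a_s) * e
            for x0, e in zip(x0_hat, eps_pred)]

def sample(x_T, predict_noise, num_steps):
    """Walk from t = T to t = 0 using only num_steps evenly spaced timesteps."""
    timesteps = [T * (num_steps - i) // num_steps for i in range(num_steps + 1)]
    x = x_T
    for t, s in zip(timesteps[:-1], timesteps[1:]):
        x = jump(x, predict_noise(x, t), t, s)
    return x

x0_true = [0.9, -0.4, 0.1, 0.7]  # hypothetical clean "image"

def oracle(x_t, t):
    """Stand-in for a trained network: returns the exact noise contained in x_t."""
    a = alpha_bar[t]
    return [(x - math.sqrt(a) * x0) / math.sqrt(1.0 - a) for x, x0 in zip(x_t, x0_true)]

x_T = [0.3, -1.2, 0.5, 2.0]      # arbitrary starting noise
print(sample(x_T, oracle, 50))   # 50 jumps instead of 1000 single steps
```

With a perfect noise prediction, the size of the jump does not matter. A real network is imperfect, so in practice the number of steps trades speed against image quality.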

Architecture

The model architecture most commonly used for diffusion models is the U-Net [4]. This is the base architecture (it evolves in cases such as Stable Diffusion, where a latent layer for image embeddings is added).

Skip Connections

Within a diffusion model, we add skip connections to retain information from earlier layers and pass it on to later layers. This especially helps preserve the high-resolution details that would otherwise be lost as we go through the process of downsampling.

Timestep Embeddings

Timestep embeddings tell the model how far along it is in the diffusion process. They carry information about the current timestep, which lets the model remove the amount of noise appropriate to that stage of the process.

At each step of the process, we add an embedding with information about the current timestep. To do that, we use a sinusoidal encoding for timestep t and some kind of embedder for our prompt. We do this for all downsample and upsample blocks.
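A sinusoidal encoding of the timestep could look like the sketch below. The function name, the embedding size of 8, and the `max_period` constant are illustrative assumptions borrowed from the usual Transformer positional encoding, not values given in the article.

```python
import math

def timestep_embedding(t, dim, max_period=10000.0):
    """Encode an integer timestep t as a vector of sines and cosines (dim must be even)."""
    half = dim // 2
    # Frequencies fall off geometrically, from fast-changing to slow-changing.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]

early = timestep_embedding(10, dim=8)
late = timestep_embedding(500, dim=8)
print(early)
print(late)
```

Every timestep gets its own pattern of values between -1 and 1, which gives the network a smooth way to tell an early, lightly noised step from a late, heavily noised one.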

Embedder

The role of an embedder is to turn input data, such as a text prompt, into a compact vector representation (a latent space) that the subsequent layers of the network can process efficiently.

Embedders such as CLIP can encode prompts so that images are generated from textual commands, as in DALL-E. The outputs of the positional (timestep) encoding and the text embedder are concatenated and passed into the downsample and upsample blocks.

Downsample Block

Downsample blocks reduce the resolution of the image data, capturing the large, coarse features of the image and thereby reducing the overall computational cost.

Self-Attention Block

The objective of the self-attention mechanism is to weigh the importance of inputs against each other within a sequence and adjust their influence on the output. In a diffusion model, self-attention lets each region of the image take every other region into account at each iteration, so that distant parts of the image stay consistent with one another. This helps with stability and high-quality image generation.
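The weighting of inputs against each other can be sketched as scaled dot-product attention. This is a stripped-down illustration: a real block learns separate query, key, and value projections, which are replaced here by the raw inputs, and the three 2-number "patches" are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with no learned projections (assumption)."""
    d = len(tokens[0])
    weights = []
    for q in tokens:
        # Similarity of this token to every token, including itself.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights.append(softmax(scores))
    # Each output is a weighted mix of all tokens.
    outputs = [[sum(w * v[j] for w, v in zip(row, tokens)) for j in range(d)]
               for row in weights]
    return outputs, weights

patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # hypothetical image patches
outputs, weights = self_attention(patches)
for row in weights:
    print([round(w, 3) for w in row])
```

The first patch gives more weight to the second patch, which resembles it, than to the third, which does not. That is the sense in which inputs are weighed against each other.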

Upsample Block

In contrast to downsample blocks, upsample blocks iteratively refine and sharpen the image, reconstructing high-resolution outputs from the low-resolution features.
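The interplay of downsampling, upsampling, and skip connections can be illustrated on a one-dimensional toy signal. Everything here is a simplification under stated assumptions: a real U-Net uses learned convolutions and concatenates the skip features, whereas this stand-in uses plain averaging and repetition and restores the lost detail by hand.

```python
def downsample(signal):
    """Halve the resolution by averaging neighbouring pairs."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]

def upsample(signal):
    """Double the resolution by repeating each value."""
    return [v for v in signal for _ in range(2)]

def decode(coarse, skip=None):
    """Upsample; if a skip connection is given, add back the detail it carries."""
    up = upsample(coarse)
    if skip is None:
        return up
    # Stand-in for the learned merge: detail = what averaging removed from `skip`.
    detail = [s - u for s, u in zip(skip, upsample(downsample(skip)))]
    return [u + d for u, d in zip(up, detail)]

signal = [1.0, 3.0, 2.0, 6.0]          # hypothetical full-resolution features
coarse = downsample(signal)            # half the resolution, coarse features only

print(coarse)
print(decode(coarse))                  # blurry: fine detail is gone
print(decode(coarse, skip=signal))     # detail restored through the skip connection
```

Without the skip connection the upsampled result has lost the fine detail. With it, the decoder can put that detail back.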

Training process

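- Initiate/repeat the training process:

- Start by sampling an image from our dataset.

- Then sample a timestep ‘t’.

- Next, sample noise from the normal distribution.

- Add that noise to the image according to the noise level at timestep ‘t’, and have the network predict the noise from the noisy image.

- Lastly, optimize the objective (the difference between the predicted and the actual noise) through gradient descent.

- Repeat the complete process over and over until it converges and the model is ready.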
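The training loop above can be sketched end to end with a deliberately tiny stand-in for the network. The assumptions are loud ones: the "dataset" is a single pixel that is either -1 or +1, the "model" is one number `w` that predicts the noise as `w * x_t` and ignores the timestep, and the linear schedule values are the commonly quoted ones. A real model would be a U-Net that also receives the timestep embedding.

```python
import math
import random

T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bar, running = [], 1.0
for b in betas:
    running *= 1.0 - b
    alpha_bar.append(running)        # alpha_bar[t - 1] belongs to timestep t

def make_training_example(rng):
    """Sample an image, a timestep, and noise, then build the noisy input."""
    x0 = rng.choice([-1.0, 1.0])     # hypothetical one-pixel "dataset"
    t = rng.randint(1, T)            # random timestep
    eps = rng.gauss(0.0, 1.0)        # noise from the normal distribution
    a = alpha_bar[t - 1]
    x_t = math.sqrt(a) * x0 + math.sqrt(1.0 - a) * eps
    return x_t, t, eps

def average_loss(w, examples):
    """Mean squared error between predicted noise (w * x_t) and actual noise."""
    return sum((w * x_t - eps) ** 2 for x_t, _, eps in examples) / len(examples)

rng = random.Random(0)
held_out = [make_training_example(rng) for _ in range(2000)]

w = 0.0                              # the toy model's only parameter
lr = 0.01                            # learning rate
loss_before = average_loss(w, held_out)
for _ in range(5000):
    x_t, t, eps = make_training_example(rng)
    grad = 2.0 * (w * x_t - eps) * x_t   # derivative of (w * x_t - eps)^2 w.r.t. w
    w -= lr * grad                       # one gradient descent step
loss_after = average_loss(w, held_out)

print(loss_before, loss_after)
```

Even this one-parameter model gets noticeably better at guessing the noise after training. A real network does the same thing with millions of parameters and full images.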

Summary

- Broadly speaking, a diffusion model consists of a forward diffusion process and a reverse diffusion process. The forward process adds noise to the input image in a controlled manner with the help of a noise schedule, while the reverse process removes the noise (de-noises the data) over a series of steps to generate a unique image.

- Clearing up a common misconception: the diffusion model aims to predict the complete noise at any given step, not just the difference in noise between steps t and t-1.

- There are 3 components involved in the diffusion process that we will summarize below: Noise Scheduler, Neural Network (U-net based architecture) and Timestep Embedding.

- Choosing the right noise scheduler for the task depends on how much noise you want to add at a given step t and on the number of steps to be taken until the image is pure noise. You can also consider experimenting with different schedules for inference and training.

- In the training phase, the neural network takes the noisy image along with the timestep embedding as inputs and learns to produce denoised outputs that approximate the original data distribution. Training teaches the model to minimize the difference between the actual noise added during the forward process and the noise it predicts. In the sampling phase, the trained network denoises the data step by step, producing a coherent and refined image in accordance with the noise schedule and timestep embeddings.

- During the diffusion process, timestep embeddings encode the specific timestep 𝑡 in a format the neural network can understand. Generally, we use sinusoidal positional embeddings for the timestep, plus a text embedder when the model is conditioned on a text prompt.

FAQs

Q1) When do we use the forward and reverse diffusion processes during training and inference?

1. Forward diffusion process:

- During training: The sole objective of the forward diffusion process is to add noise to the data progressively by means of a noise scheduler. The scheduler adds Gaussian noise to the original data over a series of timesteps.

- During inference: The forward diffusion process is not directly used.

2. Reverse diffusion process:

- During training: The model is trained to predict the noise added at each step. That way it learns to denoise data and to reproduce a distribution similar to the original one.

- During inference: New samples are generated iteratively by subtracting the predicted noise. The denoised outputs resemble the training data distribution but are unique instances in their own right.

Q2) How do Diffusion Models create diversity in the Output Image?

- The diversity in the output images of a diffusion model such as DALL-E is created through the following key steps:

- The process starts by adding random noise to the image. The noise is simply a distortion created by randomly perturbing the image's pixels.

- Over multiple timesteps, the model adds more and more noise to the image, up to the point where only pure noise is left (this is called the forward diffusion process). Note: although the model adds random noise, it follows a standard procedure that controls how much noise is added at each timestep.

- Once the image is pure noise, the model begins denoising it gradually (the reverse diffusion process). Through training, the model gets better at predicting a less noisy version at a given timestep.

- During the denoising process, the model samples from a Gaussian probability distribution and controls how much random noise is eliminated from the image at each step.

- In conditional diffusion models such as DALL-E or Midjourney, there is a small tweak to the process. The randomness in the denoising process stays the same, but an additional conditional input such as text, an image, or both is used to generate unique images in a guided manner.

- The randomness introduced by the reverse diffusion process at each timestep drives high-diversity image generation for any conditional prompt. This is the crux of how creative, versatile, and artistic images can be generated from nothing but plain English.

Q3) What does “stable” mean in Stable Diffusion? How is it different from other diffusion models?

The “Stable” in Stable Diffusion can be understood as follows: both the forward and reverse diffusion processes happen in a low-dimensional latent space instead of the high-dimensional pixel space, which helps keep the diffusion process stable.
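To get a feel for how much smaller the latent space is, here is a bit of arithmetic. The sizes are the ones commonly cited for the first version of Stable Diffusion (512x512 RGB images, 64x64 latents with 4 channels); they are an assumption here, since the article does not give them.

```python
def num_values(height, width, channels):
    """How many numbers are needed to describe one image or latent."""
    return height * width * channels

pixel_values = num_values(512, 512, 3)   # image in pixel space
latent_values = num_values(64, 64, 4)    # the same image in latent space (assumed sizes)
compression = pixel_values / latent_values

print(pixel_values, latent_values, compression)
```

Under these assumptions, each denoising step works on 48 times fewer numbers than it would in pixel space.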

Stable diffusion uses the following techniques to ensure stability in its diffusion process:

- During reverse diffusion, the model removes noise while staying within the latent space.

- Adding regularization to the denoising process prevents the model from making abrupt changes between steps.

- The noise scheduler is chosen carefully to prevent unstable training.

- In the end, we bring the image back from its latent space to the pixel space, leading to a high-resolution image. Below is a detailed architecture of the stable diffusion process.

Q4) For a layman, how is it possible for a model to generate an image based on a simple text input prompt?

- In a text-to-image generation model, conditional diffusion works by aligning the model’s image outputs with the semantic context of the input text prompt. Here are some procedures to achieve this:

1. Diffusion models chained with embeddings from pre-trained LLMs:

- First, the text prompt is encoded using a pre-trained LLM (like BERT or GPT 2/3). The transformer-based model converts the text prompt into a high-dimensional vector that captures its semantic context.

- Next, the embeddings generated by the LLM are chained with the diffusion model so that the model takes the context of the text prompt into account.

2. Training diffusion models on paired data:

- With this approach, we directly use text-image pairs in our training data, so that the diffusion model can learn the correlation between images and their corresponding descriptions.

- The loss function penalizes outputs that are misaligned with the input prompt, which ensures that the model generates output images semantically in line with the text prompt.

3. Tuning the classifier-free guidance parameter:

- Some diffusion models use a technique called classifier-free guidance (CFG). CFG exposes a controllable parameter that lets users set the fidelity-creativity balance for the model. With high fidelity, the model takes a stricter approach and sticks to the prompt; with high creativity, it makes more imaginative interpretations of the prompt.
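The guidance parameter can be sketched as a simple blend of two noise predictions, one made with the prompt and one made without it. This is one common formulation of classifier-free guidance, and the function and variable names are illustrative assumptions. Other write-ups define the scale slightly differently.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Push the noise prediction away from 'no prompt' and toward 'with prompt'."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

eps_uncond = [0.0, 1.0]  # hypothetical noise prediction without the prompt
eps_cond = [1.0, 0.5]    # hypothetical noise prediction with the prompt

print(classifier_free_guidance(eps_uncond, eps_cond, 0.0))  # ignores the prompt
print(classifier_free_guidance(eps_uncond, eps_cond, 1.0))  # plain conditional prediction
print(classifier_free_guidance(eps_uncond, eps_cond, 2.0))  # prompt effect exaggerated
```

A scale of 0 ignores the prompt and a scale of 1 uses the conditional prediction as is. Larger values make the model stick to the prompt more strictly, at the cost of less varied images.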

To summarize, there are various approaches to conditioning a diffusion model on a text prompt: chaining the diffusion model with language model embeddings, training it on paired text-image data, or using classifier-free guidance, whose parameter lets us tune the balance between fidelity and creativity. The end goal of all these approaches is to ensure that the model’s image output is aligned with the semantic context of the given text prompt.

Resources for diffusion models

Aman’s AI Journal • Primers • Diffusion Models

Step by Step visual introduction to Diffusion Models. — Blog by Kemal Erdem
