Generating Adversaries for CNNs: My Cat Is a Goldfish, so Don’t Tax It.

Last Updated on March 21, 2023 by Editorial Team

Author(s): Akhil Theerthala

Originally published on Towards AI.

Discover how to fool CNNs with unexpected images, like a cat that’s actually a goldfish! Learn how to generate adversaries for convolutional neural networks (CNNs) with this informative article.

AI has become ubiquitous in our daily lives, with many applications developed to automate manual work. This has inevitably increased the competition for jobs in the market. As a result of this intense competition, many young graduates are constantly being rejected for different roles.

One such graduate is Robert, a young man living in a single-room house and looking for a job. Robert had made one mistake during his engineering degree: he entered the world of AI later than his batchmates. While many of his friends were doing internships at different companies, Robert was only starting to learn about neural networks, random forests, and so on.

Time passed, and his university placement season arrived. He applied to many companies but was rejected every time. Dejected, he started pursuing off-campus opportunities.

One day, on his way home after an interview, he saw a cat whimpering in a cardboard box. He took a closer look: the cat was utterly skinny, with hardly any flesh on it. He felt sad for the poor animal and decided to raise it.

After a few days, he discovered that he was required to pay a tax for keeping a pet in his home. He started worrying about the expense, as he was already living with minimal amenities.

He scrolled through the web looking for ways to avoid paying the tax. One website listed the tax rates for different kinds of animals, and from it he discovered that there was no tax on raising goldfish.

Luckily for him, his government had recently launched an automated registration process. He looked into it and found that he needed to submit an image of his pet with the application. A model would then analyze the image and determine the appropriate amount of tax to be charged.

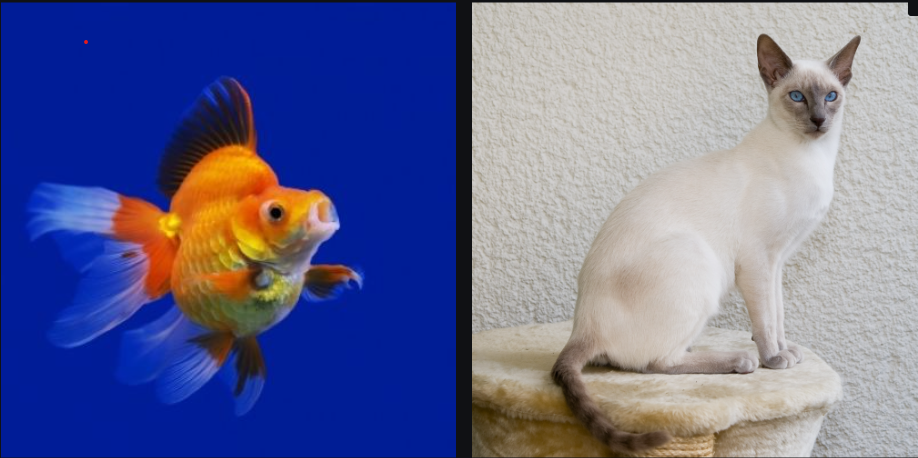

So he started making a plan. He didn’t know whether a human would oversee the identification, so he needed to manipulate the image in such a way that the model recognized it as a goldfish while a human would still see a cat.

If something went wrong, he could blame the model. Being a beginner in ML, he started looking into adversarial ML.

ResNet50 is a convolutional network that is 50 layers deep. It consists of blocks of convolutional modules, followed by a pooling step and a fully connected layer that produces the output. The version used here is trained on the ImageNet dataset, which contains more than a million images spread over 1,000 categories. The following image shows, in brief, the set of transformations an input image undergoes before an output vector is generated.

The above image illustrates the complexity of the computations in a neural network designed to classify images into different categories. It takes an image of shape (3, 224, 224) as input. After all the calculations, a (1, 1000) array comes out, containing one score per class; applying a softmax to these scores gives the probability of the image belonging to each class.

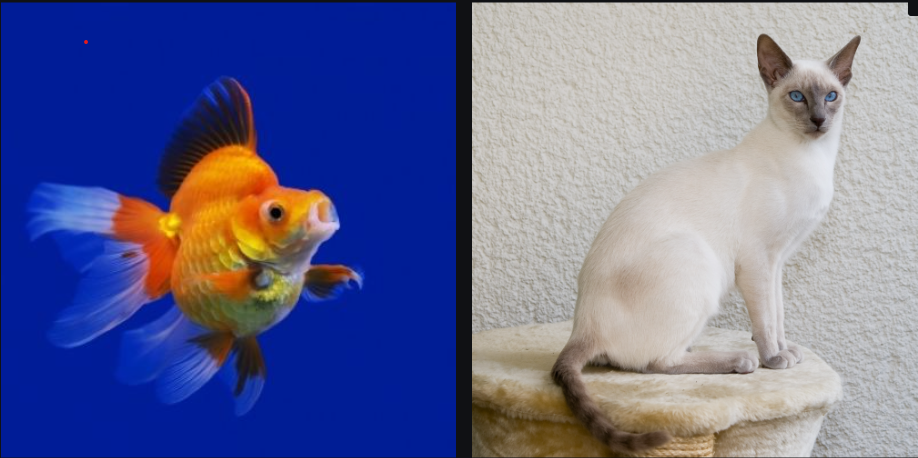
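As a small illustration of that last step, the sketch below uses random numbers as a stand-in for the model's (1, 1000) output (an assumption, so that nothing has to be downloaded). It shows how a softmax turns the raw scores into probabilities and how the predicted class index is read off.

```python
import torch

# Stand-in for the model output: one image, 1000 raw class scores.
# Random values are used here purely for illustration.
torch.manual_seed(0)
logits = torch.randn(1, 1000)

# Softmax over the class dimension turns the scores into probabilities.
probs = torch.softmax(logits, dim=1)

# The predicted class is the index with the highest score (or probability).
top_prob, top_class = probs.max(dim=1)

print(probs.shape)          # torch.Size([1, 1000])
print(float(probs.sum()))   # approximately 1.0
print(top_class.item(), top_prob.item())
```

Because softmax preserves the ordering of the scores, taking the maximum of the raw output or of the probabilities selects the same class.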

With this information, he began working on his plan. He took a picture of his cat and, after completing the necessary pre-processing and transformations, loaded the resnet50 model from the torchvision.models subpackage to check which class the model assigned to the image.

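    from torchvision.models import resnet50

    # Load ResNet50 with pretrained ImageNet weights.
    # (Newer torchvision versions prefer the `weights=` argument over `pretrained=True`.)
    model = resnet50(pretrained=True)

    # Switch to inference mode.
    model.eval()

    # Test the image. norm applies the ImageNet mean/std normalization,
    # the same step that wraps the input in the attack loop further down.
    pred = model(norm(cat_tensor))
    print(imagenet_classes[pred.max(dim=1)[1].item()])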

In the above snippet, imagenet_classes is a dictionary, loaded from a JSON file, that maps each index to its class name in the ImageNet dataset. He looked at the output from the model.
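The snippet relies on `cat_tensor`, and the attack loop later wraps its input in `norm(...)`, without showing how either is built. One possible setup is sketched below under a few assumptions: pixels are kept in the range [0, 1], and normalization is a separate module using the commonly cited ImageNet channel mean and standard deviation. The `Normalize` class and the random stand-in image are hypothetical and not taken from the original code.

```python
import torch
import torch.nn as nn

class Normalize(nn.Module):
    """Subtracts a per-channel mean and divides by a per-channel std."""
    def __init__(self, mean, std):
        super().__init__()
        # Shape (1, 3, 1, 1) so the values broadcast over batch, height, width.
        self.register_buffer("mean", torch.tensor(mean).view(1, -1, 1, 1))
        self.register_buffer("std", torch.tensor(std).view(1, -1, 1, 1))

    def forward(self, x):
        return (x - self.mean) / self.std

# Commonly used ImageNet channel statistics (RGB order).
norm = Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

# Stand-in for a real photo: a batch of one RGB image with pixels in [0, 1].
# With a real file, torchvision.transforms (Resize, CenterCrop, ToTensor)
# would produce a tensor of the same shape.
cat_tensor = torch.rand(1, 3, 224, 224)

normalized = norm(cat_tensor)
print(normalized.shape)  # torch.Size([1, 3, 224, 224])
```

Keeping the normalization outside the image tensor means any noise added to `cat_tensor` is measured directly in pixel units, which makes a bound such as 2/255 easy to interpret.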

After confirming everything was in order, he began working on his project. He divided the entire work into two parts:

- To disrupt the model from identifying the cat as a cat.

- Direct the model to identify his cat as a goldfish.

Part-1: Getting the model to misclassify.

Now that he had verified that the model worked, he wondered, “What is a possible way to stop the model from detecting the image as a cat?”

There were many ways he could do that, and he was confused. So he sought his friend’s opinion.

“There are many ways we can do that. However, the simplest approach would be to add noise to the image.”

His friend explained as follows,

“The only thing the model sees is a matrix of numbers. It makes a few computations using the entries of the matrix. If we slightly manipulate the numbers, we can find a way to manipulate the results.”

“However, we need to ensure that the image still looks like the original to the human eye. So, let us restrict the manipulations to a range, say [−ϵ, ϵ].”

After getting some direction, Robert returned to his room and started coding.

So, essentially, he was trying to change the array entries by a slight amount, i.e., each manipulation must lie within the range [−ϵ, ϵ], where ϵ is a small value. But what noise should he use to manipulate these computations?

Okay, he had found a direction. He needed to manipulate the array values so that the final probability values changed. But the model should not start detecting every cat as a goldfish either. Hence, the changes should be minute.
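To make the size of such a range concrete, here is a minimal sketch that assumes pixel values in [0, 1] and uses ϵ = 2/255. The sample perturbation values are made up for illustration. Clipping every entry to [−ϵ, ϵ] guarantees that no pixel channel moves by more than two of the 255 intensity steps of an 8-bit image.

```python
import torch

epsilon = 2.0 / 255  # at most two 8-bit intensity levels per pixel channel

# A proposed perturbation; some entries exceed the allowed range.
delta = torch.tensor([-0.05, -0.004, 0.0, 0.003, 0.02])

# Every entry is clipped to [-epsilon, epsilon]; small entries are untouched.
delta_clipped = delta.clamp(-epsilon, epsilon)

# Largest change to any pixel, expressed in 8-bit intensity levels.
max_levels = delta_clipped.abs().max().item() * 255

print(delta_clipped)
print(max_levels)
```

Entries that were already inside the range keep their value, so the clipping only limits the largest changes.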

Here is a code snippet in PyTorch that he used.

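    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Initialize the noise (delta) and set up an optimizer that updates it on each iteration.
    delta = torch.zeros_like(cat_tensor, requires_grad=True)
    opt = optim.SGD([delta], lr=1e-1)

    # Set the range of manipulation.
    epsilon = 2. / 255

    # Iteratively update the noise to increase the loss value.
    for t in range(30):
        # norm is the ImageNet normalization applied before the model.
        pred = model(norm(cat_tensor + delta))
        # The minus sign turns the optimizer's minimization into loss maximization.
        # 341 is the index from the original snippet; it should match the class
        # the model predicts for the unmodified image.
        loss = -nn.CrossEntropyLoss()(pred, torch.LongTensor([341]))

        # Print the loss every 5 iterations.
        if t % 5 == 0:
            print(t, loss.item())

        opt.zero_grad()   # clear the gradients
        loss.backward()   # backprop
        opt.step()        # update the noise

        # Limit the manipulation range.
        delta.data.clamp_(-epsilon, epsilon)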

He got the following trend in the loss values with the above code.

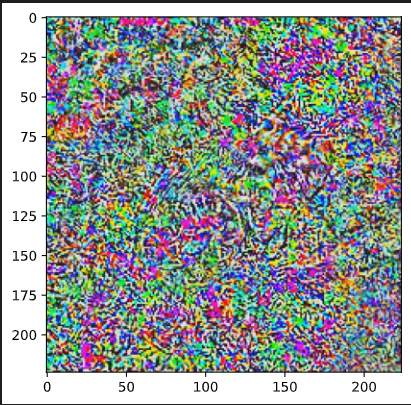

In the end, the probability the model assigned to the original class, the “cat”, was 1.49 × 10⁻⁸ for the manipulated image, whereas it was ~0.996 for the original image. The following is the image of the final noise:

He looked at the results. The model was now predicting his cat as a chihuahua, with a probability of 0.99998.
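The same before-and-after comparison can be reproduced on something that runs in milliseconds. The sketch below is a toy: a three-class linear classifier with hand-picked weights stands in for ResNet50, and class 0 plays the role of the original class. All of these are assumptions made for illustration. It applies the same negative cross-entropy loss, SGD update, and clamp as the loop above, then reports the probability of the original class before and after.

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Toy stand-in for the classifier: 4 input features, 3 classes, fixed weights.
model = nn.Linear(4, 3, bias=False)
with torch.no_grad():
    model.weight.copy_(torch.tensor([[ 10.0, -10.0, 0.0, 0.0],
                                     [-10.0,  10.0, 0.0, 0.0],
                                     [  0.0,   0.0, 0.0, 0.0]]))
model.eval()

x = torch.tensor([[0.52, 0.48, 0.50, 0.50]])  # classified as class 0
original_class = torch.tensor([0])
epsilon = 0.05

def prob_of(cls, inputs):
    """Softmax probability the model assigns to class `cls`."""
    with torch.no_grad():
        return torch.softmax(model(inputs), dim=1)[0, cls].item()

p_before = prob_of(0, x)

delta = torch.zeros_like(x, requires_grad=True)
opt = optim.SGD([delta], lr=1e-1)
for _ in range(10):
    # Minimizing the negative loss maximizes the loss on the original class.
    loss = -nn.CrossEntropyLoss()(model(x + delta), original_class)
    opt.zero_grad()
    loss.backward()
    opt.step()
    delta.data.clamp_(-epsilon, epsilon)

p_after = prob_of(0, x + delta)
new_class = model(x + delta).argmax(dim=1).item()
print(p_before, p_after, new_class)
```

In this toy setting, the probability of the original class drops and the top prediction moves to another class, even though no input feature changed by more than `epsilon`.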

Part-2: Forcing the model to detect the image as a goldfish.

He understood what he needed to do by looking at the earlier code. He only needed to manipulate the loss function. If he did that, then his work would be complete.

“How should I manipulate the loss?” Robert thought. Earlier, he only tried to increase the error between the prediction and the original target. After a few minutes, he found that he should also minimize the error between the model’s predictions and the target label for “goldfish”.

Hence, he changed the optimization step slightly.

    # Move away from the original class (248 here; Part 1 used 341) and towards goldfish (1).
    loss = (-nn.CrossEntropyLoss()(pred, torch.LongTensor([248]))
            + nn.CrossEntropyLoss()(pred, torch.LongTensor([1])))
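As a side note that is not part of the original snippet, a short derivation shows what this combined loss reduces to. For a single image with raw model outputs $z$, the cross-entropy with label $y$ is $-z_y + \log\sum_k e^{z_k}$. Writing $c$ for the original class (index 248 in the line above) and $t$ for the target class (index 1), both symbols introduced here for illustration:

```latex
\begin{aligned}
-\mathrm{CE}(z, c) + \mathrm{CE}(z, t)
  &= \Bigl(z_c - \log\sum_k e^{z_k}\Bigr) + \Bigl(-z_t + \log\sum_k e^{z_k}\Bigr) \\
  &= z_c - z_t
\end{aligned}
```

Minimizing this loss therefore lowers the raw score of the original class and raises the raw score of the target class; the normalizing term cancels out.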

He re-ran the optimization with the new loss function. After 30 iterations, he wasn’t satisfied with his results, so he ran it for a larger number of iterations.
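Since the number of iterations needed is not known in advance, one option (not something the original code does) is to keep updating until the target class becomes the top prediction, with a cap on the number of steps. The sketch below tries this on a toy linear classifier with hand-picked weights. The function `attack_until_target`, the toy model, and all parameter values are hypothetical.

```python
import torch
import torch.nn as nn
import torch.optim as optim

def attack_until_target(model, x, original_class, target_class,
                        epsilon, lr, max_steps):
    """Optimizes a bounded perturbation until `target_class` is predicted.

    Returns the perturbation and the number of update steps taken.
    """
    delta = torch.zeros_like(x, requires_grad=True)
    opt = optim.SGD([delta], lr=lr)
    ce = nn.CrossEntropyLoss()
    orig = torch.tensor([original_class])
    target = torch.tensor([target_class])
    for step in range(1, max_steps + 1):
        pred = model(x + delta)
        # Push away from the original class and towards the target class.
        loss = -ce(pred, orig) + ce(pred, target)
        opt.zero_grad()
        loss.backward()
        opt.step()
        delta.data.clamp_(-epsilon, epsilon)
        with torch.no_grad():
            if model(x + delta).argmax(dim=1).item() == target_class:
                return delta.detach(), step
    return delta.detach(), max_steps

# Toy stand-in for the classifier (hypothetical): 4 features, 3 classes.
toy = nn.Linear(4, 3, bias=False)
with torch.no_grad():
    toy.weight.copy_(torch.tensor([[ 10.0, -10.0, 0.0, 0.0],
                                   [-10.0,  10.0, 0.0, 0.0],
                                   [  0.0,   0.0, 0.0, 0.0]]))

x = torch.tensor([[0.525, 0.475, 0.5, 0.5]])  # starts out as class 0
delta, steps = attack_until_target(toy, x, original_class=0, target_class=1,
                                   epsilon=0.05, lr=5e-4, max_steps=100)
print(steps, toy(x + delta).argmax(dim=1).item())
```

Stopping as soon as the target class wins gives a perturbation that only just crosses the decision boundary; running more iterations, as Robert did, tends to push the predicted probability of the target class higher.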

Now, he saw the final result:

    max_class = pred.max(dim=1)[1].item()
    print("Predicted class: ", imagenet_classes[max_class])
    print("Predicted probability:", nn.Softmax(dim=1)(pred)[0, max_class].item())

Which gives the output:

    Predicted class: goldfish
    Predicted probability: 0.9999934434890747

After seeing the result, he was satisfied. Slowly he became curious. He wanted to see if the noise added to the image had any patterns. Hence, he plotted the noise using plt.imshow.
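Noise bounded by a value as small as 2/255 is nearly invisible if plotted directly, and negative values fall outside the range plt.imshow expects for float images. A common workaround, sketched here with a hypothetical helper `noise_to_image` and random stand-in noise, is to amplify the noise and shift it so that zero maps to mid-grey.

```python
import torch

def noise_to_image(delta, scale=50.0):
    """Maps a perturbation of shape (1, 3, H, W) to an (H, W, 3) image in [0, 1].

    Zero noise becomes mid-grey (0.5); `scale` amplifies the tiny values.
    """
    amplified = (scale * delta.detach() + 0.5).clamp(0.0, 1.0)
    return amplified[0].permute(1, 2, 0)

# Stand-in noise with values inside [-2/255, 2/255] (random, for illustration).
epsilon = 2.0 / 255
delta = (torch.rand(1, 3, 224, 224) * 2 - 1) * epsilon

img = noise_to_image(delta)
print(img.shape, img.min().item(), img.max().item())
```

With matplotlib available, `plt.imshow(noise_to_image(delta).numpy())` would then display the amplified noise. The factor of 50 is an arbitrary choice for visibility.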

Here is what the noise looked like:

He didn’t notice any specific patterns visible to the naked eye. He wanted to investigate more. However, he remembered that he only had a day before he would be fined for not registering his pet. Hence, he finally decided to take a step back, compare the manipulated image with the original image and finally submit it.

He sighed with relief. He didn’t notice any differences between the two images. However, he was filled with fear and guilt. He didn’t want to get in trouble. The only thought that remained in his mind was, “What should I do if I get busted?”

He spent a day thinking it over, and by 4 PM on the final day, he reluctantly filled in all his application details and uploaded the image. However, right before he clicked submit, he got a call.

Normally, he wouldn’t have answered the call. However, the odd timing made him wonder, and he slowly picked up.

“Congratulations Robert. We welcome you to join our team.” A female voice buzzed through the speaker.

“Team? May I know what this is about?”

“This is about the role of ML Engineer that you interviewed for last month. I have mailed you your package details and the offer intent letter. Could you sign it and send me a confirmation mail?”

“…” He was confused. After getting rejected by countless companies, he finally bagged a job. He was in disbelief.

“Robert?”

“Oh. Yeah. Thanks a lot for the call. I will send the signed copy soon.” Robert replied fast and cut the call.

He screamed in happiness. After settling down, he looked at his computer screen. He laughed for a few seconds, uploaded the original image instead of the manipulated one, and submitted the application.

After submitting it, he thought about what he had done for the past few days. He suddenly felt embarrassed about the way he had been thinking. “Why did I think like that? The world doesn’t even revolve around me.”

He wanted to cry when he recalled his thoughts about getting busted and other idiotic stories he had built up. This story is something he is never going to share with anyone. Clearing his mind, he slowly walked out of his room, excited about the future.

This is a brief and simple example of generating adversarial examples. I initially didn’t want to try this story-based approach until I got better at writing. But I had to start somewhere. I hope you found it fun. I know that Robert is not a clear and fleshed-out character. I wanted to do it in a better way too. But I think my future characters and settings will be a bit better.

There are far more advanced techniques that give better and stealthier results, such as approaches based on fault injection or on faults inherent in the hardware. The field of adversarial machine learning is growing rapidly, and its importance will keep rising as more and more companies try to incorporate ML models into their products.

Thanks for reading this article till the end. You are the best! Stay tuned to get new updates on my latest articles. Feel free to comment with your suggestions and opinions in the comment section. Hope to see you again! ✌️

Originally published at https://www.neuronuts.in on March 13, 2023.

Published via Towards AI
