AI Governance for Generative AI: A Framework for Organizations Across Maturity

Author(s): Mishtert T

Originally published on Towards AI.

This white paper is based on my observations and experiences, and is my view.

Executive Summary

I initially wanted to jump straight into defining AI governance, but I realized I first need to understand why this topic feels so overwhelming right now. The rapid adoption of generative AI has created what feels like a perfect storm. Let me correct that: it’s more like trying to build the plane while flying it.

Organizations are grappling with the implementation of generative AI while simultaneously establishing governance frameworks. This reminds me of when I first encountered database normalization; the theory seemed clear, but applying it to real-world scenarios revealed layers of complexity I hadn’t anticipated.

This white paper examines AI governance specifically for generative AI, considering how organization size, AI journey phase, and data maturity influence governance approaches.

The central question that keeps emerging is:

How can organizations balance innovation speed with responsible AI practices?

The Generative AI Governance Challenge

I’m finding myself questioning whether traditional AI governance frameworks even apply to generative AI. The technology’s unpredictable outputs and ability to generate new content create governance challenges that feel fundamentally different from previous AI applications.

The core governance principles remain the same: transparency, accountability, fairness, and safety. What changes is how we apply these principles to systems that can hallucinate, generate biased content, or produce outputs we never explicitly trained them to create.

Generative AI governance encompasses the policies, frameworks, and practices that guide ethical development, deployment, and use of these systems.

The purpose extends beyond compliance. It’s about building trust while enabling innovation.

Organization Size and Governance Approaches

Small and Medium Enterprises (SMEs)

Smaller organizations often have advantages in agility and decision-making speed.

For SMEs, effective AI governance is characterized by simplicity, flexibility, and ethical alignment. What does “simplicity” actually mean in practice?

Key governance strategies for SMEs include:

- Appointing clear roles within existing structures: Unlike large enterprises with dedicated AI ethics officers, SMEs can designate a data manager or IT lead to oversee AI ethics and compliance.

- Creating simple but comprehensive policies: A marketing firm using AI-driven customer analytics can develop straightforward policies ensuring no data sharing without explicit customer consent.

- Conducting regular bias and fairness checks: Even with limited resources, SMEs must implement processes to identify and address potential biases in AI outputs.
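As one hedged illustration of what a lightweight bias check could look like for an SME, the sketch below compares how often an AI system produces a favorable outcome for each customer group (a demographic parity check). The group labels, the sample data, and the 0.2 tolerance are assumptions made for illustration, not recommended values.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, favorable) pairs, where `favorable`
    is True when the AI output was the favorable one for that person.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in records:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = selection_rates(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical sample: (customer segment, whether the AI recommended an offer)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

GAP_THRESHOLD = 0.2  # assumed tolerance; a real value belongs in policy

gap = parity_gap(sample)
needs_review = gap > GAP_THRESHOLD
print(f"parity gap = {gap:.2f}, needs review = {needs_review}")
```

A check like this does not prove fairness. It only flags gaps large enough to deserve a human look, which fits the right-sized approach described for SMEs.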

Research shows that 65% of SMEs struggle to implement AI governance due to cost and complexity barriers. This suggests the problem is more significant than I first thought.

SMEs should frame governance as a growth enabler, not a barrier.

This highlights the urgent need for governance models that reflect the realities of smaller organizations, focusing on practical, accessible controls rather than enterprise-scale bureaucracy.

For SMEs, governance should be right-sized: clear policies, basic oversight, and incremental improvements can deliver significant benefits without overwhelming limited resources.

Large Enterprises

Enterprise AI governance requires more structured approaches due to scale and regulatory requirements.

I keep coming back to this question.

How do large organizations maintain consistency across diverse business units while allowing for innovation?

Large organizations typically implement:

- Formal governance frameworks: Comprehensive AI governance with rigorous policies and processes.

- Cross-functional AI committees: Representatives from business units impacted by AI, acting as two-way conduits for strategy and feedback.

- Dedicated AI leadership: Executive-level AI leaders responsible for strategy, governance, partnerships, and adoption.

IBM has established an AI Ethics Board that reviews new AI products to ensure compliance with ethical AI principles. Microsoft has similarly embedded six ethical principles (fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability) into its product development lifecycle.

AI Journey Phases and Governance Evolution

Stage 1: Experiment and Prepare

Organizations at this stage focus on education, policy formulation, and experimentation. The governance approach should be:

- Awareness-building: Conversations about AI are already happening, but not yet strategically, so governance starts with education.

- Basic policy development: Initial ethical guidelines aligned with organizational values.

- Risk assessment: Identifying potential AI applications and associated risks.

I’m wondering if organizations sometimes skip this foundational stage in their eagerness to deploy AI. That could explain why so many AI initiatives fail later.

Stage 2: Build Pilots and Capabilities

At this stage, AI appears in proof-of-concept projects and pilot initiatives. Governance requirements include:

- Pilot project oversight: Clear success metrics and evaluation criteria.

- Cross-functional collaboration: Stakeholder engagement and feedback mechanisms.

- Initial risk management: Automated anomaly detection and performance monitoring.

Stage 3: Industrialize AI

Organizations move AI projects to production with formal governance structures. This phase requires:

- Operational governance: Project approval workflows and model validation procedures.

- Performance monitoring: Continuous assessment of AI system outputs.

- Compliance frameworks: Alignment with regulatory requirements.

Stage 4: Systemic Integration

Every new digital project considers AI applications. Governance becomes embedded in organizational DNA:

- Enterprise-wide policies: Comprehensive frameworks covering all AI applications.

- Autonomous monitoring: AI systems that self-monitor for bias, drift, and performance issues.

- Continuous optimization: Regular refinement of governance practices.

Wait, is this linear progression realistic?

Organizations might advance in some areas while remaining basic in others.

The journey likely involves iterations and setbacks.

Data Maturity and AI Governance

This section has been particularly challenging for me to conceptualize. Data maturity and AI governance are so intertwined that separating them feels artificial.

Level 1: Initial Data Maturity

Organizations with siloed data and minimal governance face significant AI readiness challenges.

- State: Manual reporting, disconnected data sources.

- AI Governance Needs: Basic data classification and access controls.

- Focus: Identifying key data assets and establishing fundamental integration.

Can organizations even implement meaningful AI governance without basic data governance? The evidence suggests not really.

Level 2: Developing Data Maturity

Pilot-level integrations and early governance structures emerge.

- State: Initial automation, basic data quality monitoring.

- AI Governance Needs: Data lineage tracking, initial bias detection.

- Focus: Building centralized data stores and automated ETL processes.

Level 3: Defined Data Maturity

Centralized platforms with automated pipelines and formal governance.

- State: Enterprise data architecture with standardized processes.

- AI Governance Needs: Advanced monitoring, explainability frameworks.

- Focus: Expanding AI initiatives across business units.

The key insight is that data maturity directly constrains AI governance capabilities.

Level 4: Managed Data Maturity

Enterprise-wide access with real-time capabilities.

- State: Formalized policies, real-time data pipelines.

- AI Governance Needs: Automated compliance monitoring, advanced bias detection.

- Focus: Operationalizing AI across critical business processes.

Level 5: Optimized Data Maturity

Fully integrated, governed, and automated environment.

- State: Autonomous data management with continuous optimization.

- AI Governance Needs: Predictive governance, autonomous risk mitigation.

- Focus: AI-driven innovation and competitive advantage.
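To make the constraint between data maturity and governance concrete, here is a minimal sketch that encodes the five levels above as a lookup table. It rests on an assumption the text does not state outright: that governance controls accumulate, so an organization at a given level can reach the controls listed for that level and every level below it. The function names are hypothetical.

```python
# Governance needs per data maturity level, transcribed from the levels above.
GOVERNANCE_NEEDS = {
    1: ("basic data classification", "access controls"),
    2: ("data lineage tracking", "initial bias detection"),
    3: ("advanced monitoring", "explainability frameworks"),
    4: ("automated compliance monitoring", "advanced bias detection"),
    5: ("predictive governance", "autonomous risk mitigation"),
}


def controls_in_reach(level):
    """List controls at or below `level` (assumes controls accumulate)."""
    if level not in GOVERNANCE_NEEDS:
        raise ValueError(f"unknown data maturity level: {level}")
    reach = []
    for lvl in range(1, level + 1):
        reach.extend(GOVERNANCE_NEEDS[lvl])
    return reach


def maturity_gap(current_level, control):
    """Levels the data foundation must rise before `control` is in reach.

    Returns 0 when the control is already in reach.
    """
    for lvl, controls in GOVERNANCE_NEEDS.items():
        if control in controls:
            return max(0, lvl - current_level)
    raise KeyError(f"unknown control: {control}")


print(controls_in_reach(2))
print(maturity_gap(2, "explainability frameworks"))
```

Under this reading, a team at Level 2 that wants explainability frameworks is one level short, which is the sense in which data maturity limits what governance can realistically deliver.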

Generative AI-Specific Governance Considerations

Generative AI introduces unique challenges that traditional AI governance frameworks don’t fully address.

Unique Risks and Challenges

- Output Quality and Hallucinations: GenAI models can produce confident-sounding but factually incorrect information. This reminds me of a statistics class where we learned about Type I and Type II errors, but hallucinations feel like a completely new category of error.

- Intellectual Property and Copyright: Popular generative AI tools train on massive datasets with unclear provenance. When these tools create content, determining ownership becomes problematic. Organizations using generative AI for creative purposes should treat this as a live risk.

- Bias Amplification: GenAI can perpetuate and amplify biases present in training data. I’m wondering: How do we measure bias in creative outputs where “correctness” is subjective?

- Data Privacy Violations: Personal information might be inadvertently collected and exposed through AI-generated content, sometimes simply because an inexperienced team is unaware of the implications.

Governance Framework Adaptations

Organizations need to modify traditional AI governance for generative AI:

- Enhanced Monitoring: Continuous monitoring of AI-generated content for bias, quality, and appropriateness.

- Human Oversight Requirements: Establishing clear protocols for human review of AI outputs.

- Content Filtering: Implementing robust filtering mechanisms to prevent harmful or inappropriate content generation.

- Audit Trails: Maintaining detailed logs of AI decision-making processes for accountability.

I’m questioning whether current monitoring technologies are sophisticated enough for these requirements. You may need bespoke solutions for your organization.
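A bespoke solution does not have to start large. The sketch below shows one possible way to combine three of the adaptations listed above: a content filter, a route to human review, and an audit record. The blocked pattern, the review keywords, the field names, and the choice to store hashes instead of raw text are all assumptions for illustration. A production filter would need far more than a regular expression and a keyword list.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Hypothetical rules: block text containing a US SSN-like number,
# and send text touching sensitive topics to a human reviewer.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]
REVIEW_KEYWORDS = ("diagnosis", "legal advice", "guarantee")


def route_output(text):
    """Return 'block', 'human_review', or 'release' for a generated text."""
    if any(pattern.search(text) for pattern in BLOCKED_PATTERNS):
        return "block"
    lowered = text.lower()
    if any(keyword in lowered for keyword in REVIEW_KEYWORDS):
        return "human_review"
    return "release"


def _sha256(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(prompt, output, model_id, decision, now=None):
    """Build one audit entry; hashes avoid copying personal data into logs."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return {
        "timestamp": timestamp,
        "model_id": model_id,
        "prompt_sha256": _sha256(prompt),
        "output_sha256": _sha256(output),
        "decision": decision,
    }


def to_json_line(record):
    """Serialize a record as one line, ready to append to a log file."""
    return json.dumps(record, sort_keys=True)


prompt = "Summarize our refund policy"
output = "We guarantee a full refund within 30 days."
decision = route_output(output)
print(to_json_line(audit_record(prompt, output, "demo-model", decision)))
```

Storing hashes keeps the log useful for proving what was generated without turning the audit trail into a second copy of sensitive content. Whether that trade-off is acceptable depends on the organization's own retention and investigation needs.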

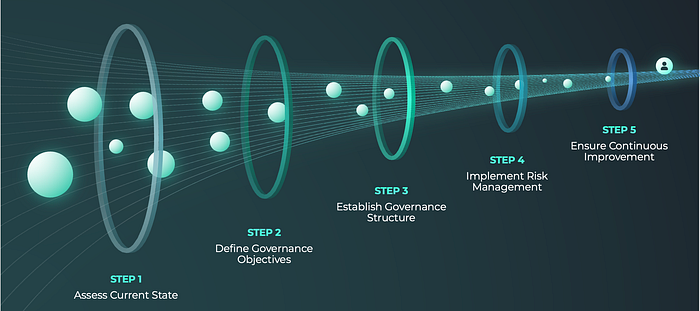

Implementation Framework

Let me try to synthesize everything I’ve learned into a practical framework. I’m finding this synthesis challenging because each organization’s context is so different.

Step 1: Assess Current State

Organizations should evaluate their:

- AI Maturity Level: Using established frameworks like the MIT CISR model or a custom model developed by any of the experienced service providers in the space.

- Data Maturity: Assessing data governance, quality, and accessibility.

- Organizational Readiness: Leadership commitment, resource availability, and cultural factors.

Step 2: Define Governance Objectives

Based on organizational size and maturity:

- SMEs: Focus on ethical guidelines, basic risk management, and simple monitoring.

- Large Enterprises: Comprehensive frameworks with formal oversight and advanced monitoring.

Step 3: Establish Governance Structure

Create appropriate organizational structures:

- Leadership: Dedicated AI leaders or committees.

- Cross-functional Teams: Representatives from impacted business units.

- Clear Ownership: Designated product managers for AI solutions.

Should organizations iterate through these steps rather than complete them sequentially?

Step 4: Implement Risk Management

Deploy appropriate risk management based on organizational capabilities:

- Automated Monitoring: Performance alerts, bias detection, anomaly identification.

- Human Review Processes: Clear protocols for content evaluation.

- Incident Response: Procedures for addressing AI system failures.
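For the automated monitoring item, a very small starting point is a statistical alert on a single quality metric. The sketch below flags a value that sits far from its recent history using a z-score. The metric (the daily share of outputs rejected by human reviewers), the sample numbers, and the threshold of 3.0 are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` when it lies more than `z_threshold` standard
    deviations from the mean of `history`.

    With fewer than two historical points there is no spread to compare
    against, so nothing is flagged.
    """
    if len(history) < 2:
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily rejection rates from human review of AI outputs.
rejection_rates = [0.02, 0.03, 0.025, 0.02, 0.03]

print(is_anomalous(rejection_rates, 0.03))  # within the usual range
print(is_anomalous(rejection_rates, 0.12))  # far above the usual range
```

An alert like this is only the trigger. What happens next, who is notified, and when a system is paused belong to the incident response procedures named in the list above.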

Step 5: Ensure Continuous Improvement

Establish mechanisms for ongoing refinement:

- Regular Assessments: Periodic evaluation of governance effectiveness.

- Stakeholder Feedback: Channels for collecting user and stakeholder input.

- Regulatory Monitoring: Staying current with evolving regulations.

Challenges and Considerations

I keep encountering the same fundamental tension.

How do organizations balance innovation speed with governance rigor?

There’s no simple answer, but I think the key lies in proportionate responses.

Common Pitfalls

- Over-governance: Implementing such strict controls that innovation stagnates.

- Under-governance: Moving too quickly without adequate safeguards.

- One-size-fits-all approaches: Applying enterprise governance frameworks to SMEs or vice versa.

Success Factors

- Executive Commitment: Leadership must champion both innovation and responsible AI use.

- Cultural Change: Organizations need to develop AI literacy across all levels.

- Adaptive Frameworks: Governance structures must evolve with technology and regulation.

Actually, “success” in AI governance might look different for each organization.

For some, it’s regulatory compliance; for others, it’s maintaining public trust or competitive advantage.

Future Considerations

The regulatory landscape continues to evolve rapidly. The EU AI Act, which entered into force in August 2024 with most obligations applying from August 2026, will significantly impact how organizations approach AI governance.

I’m wondering how organizations should prepare for regulations that don’t yet exist.

Organizations need to consider:

- Regulatory Compliance: Preparing for emerging laws and standards.

- Technology Evolution: Adapting governance as AI capabilities advance.

- Stakeholder Expectations: Meeting evolving public and customer expectations.

Conclusion

I started this paper trying to provide a comprehensive framework, but I’ve learned that AI governance isn’t a destination — it’s an ongoing process of adaptation and learning.

The key insights from this analysis are:

- Context Matters: Governance approaches must match organizational size, AI maturity, and data capabilities.

- Generative AI is Different: Traditional AI governance frameworks need modification for generative AI applications.

- Balance is Critical: Organizations must balance innovation with responsibility.

- Evolution is Necessary: Governance frameworks must adapt as technology and regulations evolve.

Is perfect AI governance even possible, or should we aim for “best possible” governance that enables responsible innovation? I think the latter is more realistic and probably more valuable.

Organizations should focus on building adaptive governance capabilities rather than perfecting initial frameworks.

The goal isn’t to eliminate all risks but to manage them appropriately while enabling beneficial AI applications.

The path forward requires continuous learning, stakeholder engagement, and a willingness to adjust approaches as we collectively learn more about governing these powerful technologies.

About the Author:

Transformation & AI Architect | Governance Advocate | Mediator & Arbitrator

An analytics and transformation leader with over 24 years of experience, advising on change, risk management, and embracing technology while emphasizing corporate governance. As a mentor, Mishtert aids organizations in excelling amid evolving landscapes.

Note: Content contains the views of the contributing authors and not Towards AI.