A Comprehensive Introduction to Graph Neural Networks

Last Updated on July 25, 2023 by Editorial Team

Author(s): Anay Dongre

Originally published on Towards AI.

Graph Neural Networks (GNNs) are a type of neural network designed to operate on graph-structured data. In recent years, there has been a significant amount of research in the field of GNNs, and they have been successfully applied to various tasks, including node classification, link prediction, and graph classification. In this article, we will provide a comprehensive introduction to GNNs, including the key concepts, architectures, and applications.

Introduction to Graph Neural Networks

Graph Neural Networks (GNNs) are a class of neural networks that are designed to operate on graphs and other irregular structures. GNNs have gained significant popularity in recent years, owing to their ability to model complex relationships between nodes in a graph. They have been applied in various fields, such as computer vision, natural language processing, recommendation systems, and social network analysis.

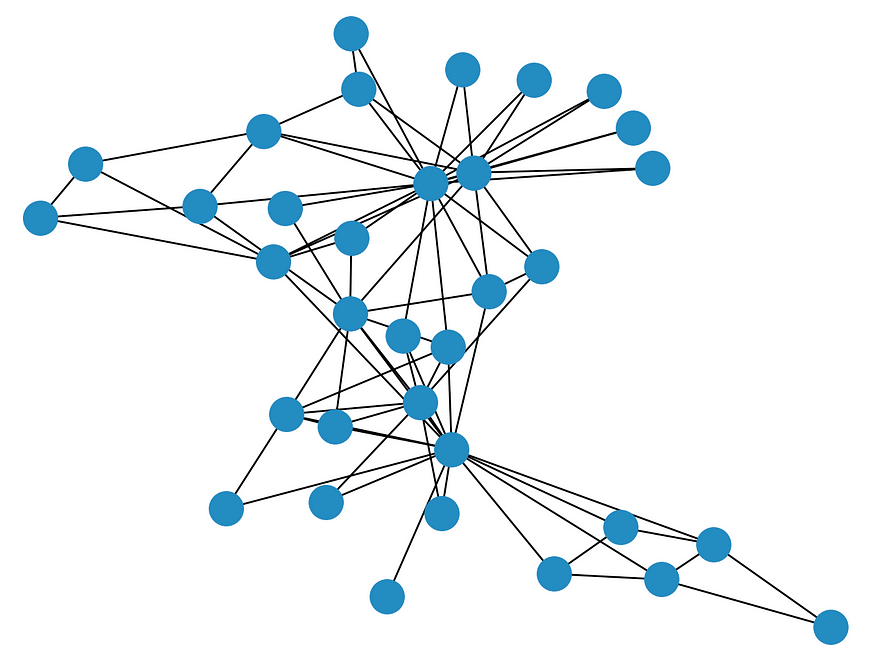

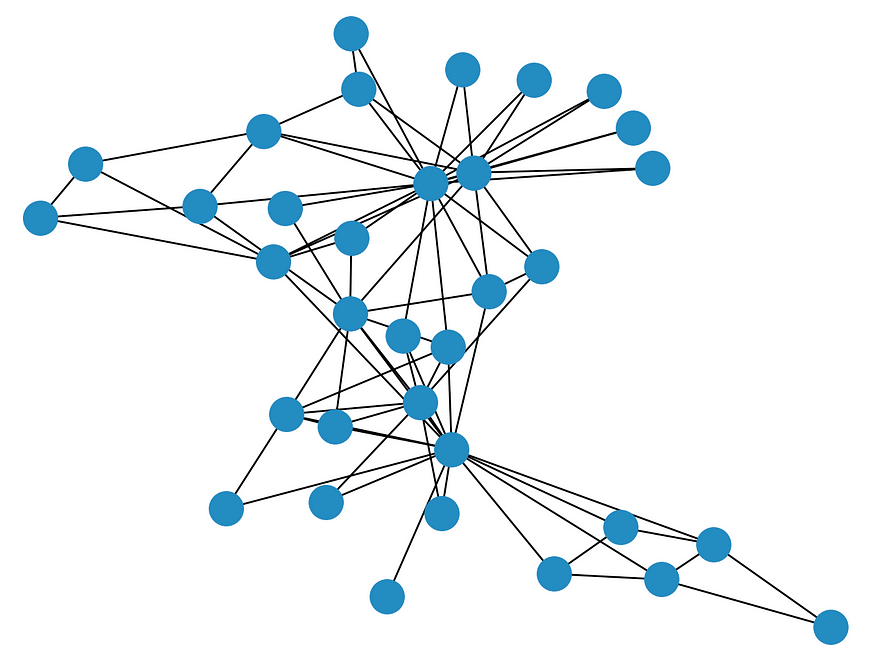

Unlike traditional neural networks that operate on a regular grid or sequence, GNNs are capable of modeling arbitrary graph structures. Graphs can be thought of as a collection of nodes and edges, where the nodes represent entities, and the edges represent relationships between them. GNNs take advantage of this structure by learning a representation for each node in the graph that takes into account its local neighborhood.

The idea behind GNNs is to use message passing to propagate information across the graph. At each iteration, information from each node’s neighbors is aggregated and used to update the node’s representation. This process is repeated for a fixed number of iterations or until convergence. The resulting node representations can then be used for downstream tasks such as classification, regression, or clustering.
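The following minimal sketch in plain Python (no libraries) makes this loop concrete. It assumes that node states are lists of floats and that the graph is stored as an adjacency list. The update rule averages a node's state with the mean of its neighbours' states. It is a hypothetical stand-in for the learned functions a real GNN would use.

```python
def mean(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(values) / n for values in zip(*vectors)]


def message_passing(adjacency, features, num_iterations):
    """Run a fixed number of synchronous aggregate-and-update rounds.

    adjacency: dict mapping each node to a list of its neighbours.
    features:  dict mapping each node to its initial feature vector.
    """
    states = {node: list(vec) for node, vec in features.items()}
    for _ in range(num_iterations):
        new_states = {}
        for node, neighbours in adjacency.items():
            # Aggregate: average the neighbours' current states
            # (an isolated node simply keeps its own state).
            aggregated = mean([states[n] for n in neighbours]) if neighbours else states[node]
            # Update: blend the node's own state with the aggregated message.
            # A real GNN would use a learned function here instead.
            new_states[node] = [0.5 * s + 0.5 * a for s, a in zip(states[node], aggregated)]
        states = new_states  # every node updates at the same time
    return states


# Path graph a - b - c; only node "a" starts with a non-zero feature.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": [1.0], "b": [0.0], "c": [0.0]}
print(message_passing(adjacency, features, 1))  # → {'a': [0.5], 'b': [0.25], 'c': [0.0]}
print(message_passing(adjacency, features, 2))  # → {'a': [0.375], 'b': [0.25], 'c': [0.125]}
```

After one iteration node c is still 0.0, because it is two hops away from a. After two iterations, information from a has reached it. In general, k iterations let information travel k hops.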

GNNs can be viewed as a generalization of traditional convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to graph-structured data. CNNs and RNNs are designed to operate on regular grids and sequences, respectively. An image can be seen as a grid-shaped graph of pixels, and a sentence can be seen as a chain-shaped graph of words. GNNs, on the other hand, can operate on arbitrary graphs and are, therefore, more general.

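There are several challenges associated with training GNNs, such as overfitting, choosing an appropriate inductive bias, and scalability. Overfitting can occur when the model is too complex and has too many parameters relative to the size of the dataset. Inductive bias refers to the assumptions that are built into the model based on prior knowledge about the problem; if those assumptions do not match the data, the model may generalize poorly. Scalability is a concern because the computational cost of GNNs can be high, especially for large graphs. Each layer aggregates information over every node's neighborhood, and the number of nodes that influence a single prediction grows quickly as layers are added.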

Despite these challenges, GNNs have shown impressive results on a variety of tasks and have the potential to transform many fields. As a result, they are an active area of research and are likely to remain an important topic for years to come.

Key Concepts of Graph Neural Networks

The key concepts of GNNs are as follows:

- Graph representation: A graph is represented as a set of nodes (also known as vertices) and edges (also known as links or connections) that connect pairs of nodes. Each node may have features associated with it, which describe the attributes of the entity that the node represents. Similarly, each edge may have features associated with it, which describe the relationship between the nodes it connects.

- Message passing: GNNs operate by passing messages between nodes in a graph. Each node aggregates information from its neighbors, which it uses to update its own representation. The information passed between nodes is typically a combination of the features of the nodes and edges and may be weighted to give more or less importance to different neighbors.

- Node representation: The updated representation of a node is typically learned through a neural network, which takes as input the aggregated information from the node’s neighbors. This allows the node to incorporate information from its local neighborhood in the graph.

- Graph-level representation: GNNs can also operate on entire graphs rather than individual nodes. In this case, the graph is represented as a set of node features, edge features, and global features that describe the properties of the graph as a whole. The GNN processes this input to produce a global representation, often by pooling (for example, summing or averaging) the learned node representations. This global representation can be used for tasks such as graph classification.

- Deep GNNs: GNN layers can be stacked to form deep architectures, which allow more complex functions to be learned. With k layers, each node's representation can draw on information from nodes up to k hops away. Deep GNNs can be trained end-to-end using backpropagation, which efficiently computes the gradients of the loss with respect to all of the network's parameters.

- Attention mechanisms: GNNs can incorporate attention mechanisms, which allow the network to focus on specific nodes or edges in the graph. Attention mechanisms can be used to weigh the information passed between nodes or to compute a weighted sum of the representations of neighboring nodes.

- GNN variants: There are several variants of GNNs, which differ in their message passing and node representation functions. These include Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), GraphSAGE, and many others. Each variant has its own strengths and weaknesses and is suitable for different types of tasks.
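The graph representation and graph-level readout described in this list can be sketched with a small container class. The `Graph` class and the `readout` function are hypothetical illustrations, not the API of any GNN library. For simplicity, the readout here is applied to the raw node features. In a GNN, it would instead be applied to the node representations produced by the final layer.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    """Hypothetical container for an attributed graph (not a library type)."""
    node_features: dict                                   # node id -> feature vector
    edge_features: dict                                   # (u, v) -> edge feature vector
    global_features: list = field(default_factory=list)  # properties of the whole graph

    def neighbours(self, node):
        """Nodes sharing an edge with `node` (edges treated as undirected)."""
        result = []
        for u, v in self.edge_features:
            if u == node:
                result.append(v)
            elif v == node:
                result.append(u)
        return result


def readout(node_states, mode="mean"):
    """Pool node representations into a single graph-level vector."""
    vectors = list(node_states.values())
    columns = list(zip(*vectors))  # one tuple per feature dimension
    if mode == "sum":
        return [sum(c) for c in columns]
    if mode == "mean":
        return [sum(c) / len(vectors) for c in columns]
    if mode == "max":
        return [max(c) for c in columns]
    raise ValueError(f"unknown readout mode: {mode}")


# A three-node chain 0 - 1 - 2 with 2-d node features and 1-d edge features
# (for example, bond types in a molecule).
g = Graph(
    node_features={0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]},
    edge_features={(0, 1): [1.0], (1, 2): [2.0]},
    global_features=[3.0],
)
print(g.neighbours(1))                       # → [0, 2]
print(readout(g.node_features, mode="sum"))  # → [2.0, 2.0]
print(readout(g.node_features, mode="max"))  # → [1.0, 1.0]
```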

GNN Architectures

1. Graph Convolutional Networks (GCNs)

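Graph Convolutional Networks (GCNs) are one of the most widely used GNN architectures. GCNs operate by performing a series of graph convolutions. In each convolution, a node's feature vector is combined with those of its neighbors, which in the widely used formulation is a degree-normalized sum. The result is then multiplied by a shared learned weight matrix, so the same linear transformation is applied to every node. The output of each convolution is fed into a non-linear activation function and passed to the next layer.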
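Below is a minimal sketch of a single GCN layer. It assumes the symmetric normalization popularized by Kipf and Welling: add self-loops to the adjacency matrix A, normalize by node degrees, multiply by the node features H and a weight matrix W, then apply ReLU. In symbols, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), where D is the degree matrix of A + I. Matrices are plain lists of rows so the example has no dependencies. The weights are fixed for illustration rather than learned.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adjacency, features, weights):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W).

    adjacency: n x n 0/1 matrix, features (H): n x d, weights (W): d x d_out.
    """
    n = len(adjacency)
    # Add self-loops so each node also keeps its own features.
    a_hat = [[adjacency[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    # Degree of every node in the self-looped graph.
    degree = [sum(row) for row in a_hat]
    # Symmetric normalisation: entry (i, j) is divided by sqrt(d_i * d_j).
    norm = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]
    # Aggregate neighbour features, then apply the shared linear transform W.
    h = matmul(matmul(norm, features), weights)
    # Element-wise ReLU non-linearity.
    return [[max(0.0, x) for x in row] for row in h]


# Two connected nodes with scalar features 1 and 3, and an identity weight.
adjacency = [[0, 1], [1, 0]]
features = [[1.0], [3.0]]
print(gcn_layer(adjacency, features, [[1.0]]))  # → [[2.0], [2.0]]
```

Each node ends up with the normalized combination of itself and its neighbour, so both outputs equal 2.0. Stacking two such layers would let each node see its 2-hop neighbourhood.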

2. Graph Attention Networks (GATs)

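Graph Attention Networks (GATs) are a more recent development in the field of GNNs. GATs use attention mechanisms to compute edge weights, which are used to control the flow of information between nodes in the graph. Each weight is computed from the features of the two nodes that an edge connects. As a result, a node can learn to rely more heavily on some neighbors than on others, instead of weighting all neighbors according to a fixed, structure-based rule as in a GCN.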
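The sketch below computes single-head GAT attention weights for one node. It follows the scoring rule of the original GAT formulation, e_ij = LeakyReLU(a^T [W h_i || W h_j]), with the scores normalized by a softmax over the node's neighbours, including the node itself. The weight matrix `W` and attention vector `a` are fixed illustrative values rather than learned parameters. The output non-linearity and multi-head attention are omitted.

```python
import math


def linear(weights, vector):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_node_update(node, adjacency, features, weights, attention_vector):
    """Single-head GAT update for one node (final non-linearity omitted).

    attention_vector has length 2 * d_out and scores the concatenation
    [W h_i || W h_j] for the node i and each candidate neighbour j.
    """
    # Attend over the node itself and its neighbours.
    candidates = [node] + list(adjacency[node])
    projected = {j: linear(weights, features[j]) for j in candidates}
    # Unnormalised scores e_ij = LeakyReLU(a^T [W h_i || W h_j]).
    scores = {}
    for j in candidates:
        concat = projected[node] + projected[j]
        scores[j] = leaky_relu(sum(a * c for a, c in zip(attention_vector, concat)))
    # Softmax over the candidates (shifted by the max for numerical stability).
    top = max(scores.values())
    exps = {j: math.exp(s - top) for j, s in scores.items()}
    total = sum(exps.values())
    alphas = {j: e / total for j, e in exps.items()}
    # New representation: attention-weighted sum of projected features.
    dim = len(projected[node])
    updated = [sum(alphas[j] * projected[j][k] for j in candidates) for k in range(dim)]
    return alphas, updated


adjacency = {0: [1, 2], 1: [0], 2: [0]}
features = {0: [1.0], 1: [2.0], 2: [0.0]}
alphas, updated = gat_node_update(0, adjacency, features, [[1.0]], [0.0, 1.0])
print(max(alphas, key=alphas.get))  # → 1 (the neighbour with the largest score)
```

Unlike a GCN, the weights here depend on the features themselves. Changing node 2's feature would change how much attention node 0 pays to every neighbour.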

3. GraphSAGE

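GraphSAGE is another popular GNN architecture. It generates each node's representation by sampling and aggregating features from the node's local neighborhood. It uses aggregator functions such as mean, LSTM, or pooling aggregators; the pooling aggregator passes each neighbor's features through a multi-layer perceptron before pooling. Unlike the original GCN, GraphSAGE samples a fixed number of neighbors for each node, which bounds the cost of each layer and makes it more efficient for large graphs. It learns functions that aggregate neighborhood features rather than a fixed embedding per node, so it can also generate representations for nodes that were not seen during training.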
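Here is a minimal sketch of one GraphSAGE-style layer with a mean aggregator. Each node samples a fixed number of neighbours and averages their features. It then concatenates the result with its own features and applies a linear map followed by ReLU. The weights are illustrative. The per-layer L2 normalization used in the original paper is omitted.

```python
import random


def sample_neighbours(adjacency, node, sample_size, rng):
    """Sample a fixed number of neighbours uniformly (with replacement if too few)."""
    neighbours = list(adjacency[node])
    if not neighbours:
        return []
    if len(neighbours) >= sample_size:
        return rng.sample(neighbours, sample_size)
    return [rng.choice(neighbours) for _ in range(sample_size)]


def graphsage_mean_layer(adjacency, features, weights, sample_size, seed=0):
    """One GraphSAGE-style layer with a mean aggregator.

    weights has one row per output dimension, each of length 2 * d, because
    the node's own features are concatenated with the neighbour mean.
    """
    rng = random.Random(seed)
    out = {}
    for node in adjacency:
        own = features[node]
        sampled = sample_neighbours(adjacency, node, sample_size, rng)
        if sampled:
            neigh_mean = [sum(features[n][k] for n in sampled) / len(sampled)
                          for k in range(len(own))]
        else:
            neigh_mean = [0.0] * len(own)  # isolated node: no neighbour signal
        combined = own + neigh_mean  # concatenate [h_v || mean of sampled h_u]
        out[node] = [max(0.0, sum(w * x for w, x in zip(row, combined))) for row in weights]
    return out


# Star graph: hub 0 with five leaves; each node looks at only 2 sampled neighbours.
adjacency = {0: [1, 2, 3, 4, 5], 1: [0], 2: [0], 3: [0], 4: [0], 5: [0]}
features = {0: [1.0], 1: [3.0], 2: [3.0], 3: [3.0], 4: [3.0], 5: [3.0]}
print(graphsage_mean_layer(adjacency, features, [[1.0, 1.0]], sample_size=2))
# → {0: [4.0], 1: [4.0], 2: [4.0], 3: [4.0], 4: [4.0], 5: [4.0]}
```

The hub has five neighbours but aggregates only two of them, so the cost per node stays bounded no matter how large the neighbourhood is. Sampling with replacement for low-degree nodes is one simple choice among several.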

4. Graph Isomorphism Network (GIN)

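The Graph Isomorphism Network (GIN) is a GNN architecture designed to be as powerful as the Weisfeiler-Lehman (WL) graph isomorphism test at distinguishing different graph structures. At each layer, GIN sums the features of a node's neighbors, adds the node's own features scaled by (1 + ε), and passes the result through a multi-layer perceptron. The node representations are then pooled by summation into graph-level features, which are used to predict the target variable.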
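The GIN update and sum readout can be sketched as follows. The two-layer MLP uses fixed, hypothetical weights where a real model would use learned ones, and `eps` corresponds to ε. The example also shows why GIN uses a sum. Node 0 has one neighbour in the first graph and two in the second, and all features are identical. A mean over its neighbours would therefore give the same value in both cases, while the sum tells them apart.

```python
def mlp(vector, w1, w2):
    """Tiny two-layer perceptron: W2 @ ReLU(W1 @ x); weights are illustrative."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, vector))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def gin_layer(adjacency, features, w1, w2, eps=0.0):
    """GIN update: h_v' = MLP((1 + eps) * h_v + sum of neighbour features)."""
    out = {}
    for node, neighbours in adjacency.items():
        own = features[node]
        combined = [(1.0 + eps) * own[k] + sum(features[u][k] for u in neighbours)
                    for k in range(len(own))]
        out[node] = mlp(combined, w1, w2)
    return out


def sum_readout(states):
    """Graph-level vector: element-wise sum of all node representations."""
    return [sum(values) for values in zip(*states.values())]


# Node 0 has one neighbour in g1 and two in g2; every feature equals 1.0,
# so a mean over neighbours would be identical (1.0) for node 0 in both graphs.
g1 = {0: [1], 1: [0]}
g2 = {0: [1, 2], 1: [0], 2: [0]}
w1, w2 = [[1.0]], [[1.0]]
h1 = gin_layer(g1, {n: [1.0] for n in g1}, w1, w2)
h2 = gin_layer(g2, {n: [1.0] for n in g2}, w1, w2)
print(h1[0], h2[0])                      # → [2.0] [3.0]
print(sum_readout(h1), sum_readout(h2))  # → [4.0] [7.0]
```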

5. Message Passing Neural Networks (MPNNs)

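Message Passing Neural Networks (MPNNs) are a general class of GNN architectures defined by a message function, an update function, and a readout function. At each iteration, each node receives messages computed from its neighbors' states and, optionally, the features of the connecting edges. It aggregates these messages and uses the result to update its own state. This process is repeated for a fixed number of iterations, after which the readout function can combine the node states into an output for the whole graph.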
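The MPNN scheme can be sketched as a generic loop that takes the message and update functions as arguments and sums incoming messages over edges. The particular `message_fn` and `update_fn` below are hypothetical examples chosen to keep the arithmetic easy to follow. In an MPNN, both would be learned neural networks, and a readout function (omitted here) would follow the final step.

```python
def mpnn(edges, states, message_fn, update_fn, num_steps):
    """Generic message passing with sum aggregation.

    edges maps directed (sender, receiver) pairs to edge feature vectors;
    message_fn(h_receiver, h_sender, e) and update_fn(h, m) are supplied
    by the caller. Messages must have the same length as node states here.
    """
    for _ in range(num_steps):
        # Each step starts with an empty (all-zero) inbox for every node.
        inbox = {node: [0.0] * len(h) for node, h in states.items()}
        for (sender, receiver), edge_feat in edges.items():
            msg = message_fn(states[receiver], states[sender], edge_feat)
            inbox[receiver] = [a + m for a, m in zip(inbox[receiver], msg)]
        # All nodes update simultaneously from their aggregated messages.
        states = {node: update_fn(states[node], inbox[node]) for node in states}
    return states


# Hypothetical choices: the message is the sender's state scaled by a scalar
# edge weight, and the update adds the aggregated message to the old state.
def message_fn(h_receiver, h_sender, edge_feat):
    return [edge_feat[0] * x for x in h_sender]


def update_fn(h, m):
    return [x + y for x, y in zip(h, m)]


edges = {(0, 1): [0.5], (1, 0): [0.5], (1, 2): [2.0]}
states = {0: [1.0], 1: [0.0], 2: [0.0]}
print(mpnn(edges, states, message_fn, update_fn, num_steps=2))
# → {0: [1.25], 1: [1.0], 2: [1.0]}
```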

6. Neural Relational Inference (NRI)

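Neural Relational Inference (NRI) is a GNN-based model that infers the interactions between particles (for example, in a physical system) from their observed trajectories while also learning their dynamics. It is trained as a variational autoencoder. A GNN encoder predicts a latent interaction graph that specifies which particles interact and how. A GNN decoder then uses this graph to predict the state of each particle at the next time step, based on the states of the particles it interacts with.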
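The decoder half of NRI can be illustrated with a toy sketch. It assumes the encoder has already produced one logit per edge type for every directed pair of particles, and a softmax turns these logits into edge-type probabilities. Each particle's next position is its current position plus a small step driven by probability-weighted messages from the particles that affect it. The two message functions ("no interaction" and a spring-like pull) and the step size `dt` are hand-written, hypothetical stand-ins for the learned networks in NRI. The encoder itself is not shown.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hand-written stand-ins for the learned per-edge-type message networks.
# Type 0 means "no interaction"; type 1 acts like a spring pulling the
# receiver towards the sender.
def no_interaction(sender, receiver):
    return [0.0 for _ in receiver]


def spring(sender, receiver, strength=1.0):
    return [strength * (s - r) for s, r in zip(sender, receiver)]


EDGE_TYPE_FNS = [no_interaction, spring]


def nri_decoder_step(positions, edge_logits, dt=0.1):
    """Predict next positions from the current ones and soft edge types.

    edge_logits maps (sender, receiver) to one logit per edge type, standing
    in for the encoder's output; softmax turns them into probabilities.
    """
    next_positions = {}
    for node, pos in positions.items():
        delta = [0.0] * len(pos)
        for (sender, receiver), logits in edge_logits.items():
            if receiver != node:
                continue
            # Each edge-type message is weighted by its inferred probability.
            for p, fn in zip(softmax(logits), EDGE_TYPE_FNS):
                msg = fn(positions[sender], pos)
                delta = [d + p * m for d, m in zip(delta, msg)]
        next_positions[node] = [x + dt * d for x, d in zip(pos, delta)]
    return next_positions


positions = {"a": [0.0], "b": [1.0], "c": [5.0]}
edge_logits = {
    ("a", "b"): [-10.0, 10.0],  # a and b almost certainly interact
    ("b", "a"): [-10.0, 10.0],
    ("a", "c"): [10.0, -10.0],  # a and c almost certainly do not
    ("c", "a"): [10.0, -10.0],
}
print(nri_decoder_step(positions, edge_logits))
```

With these logits, a and b pull towards each other (a moves to about 0.1 and b to about 0.9). Particle c stays at about 5.0, because its edge to a is almost certainly of the "no interaction" type.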

7. Deep Graph Infomax (DGI)

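Deep Graph Infomax (DGI) is an unsupervised method for learning node representations that are useful for downstream tasks. Instead of relying on labels, it maximizes the mutual information between local (node-level) representations and a global summary of the graph. A discriminator is trained to distinguish node representations computed from the real graph from those computed from a corrupted version of it (for example, one whose node features have been shuffled), with each representation paired with the summary of the real graph. After this unsupervised training, the learned representations can be used for a downstream task, for example by training a simple classifier on top of them.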
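The following minimal sketch evaluates the DGI objective on a single small graph under several simplifying assumptions:

- The encoder is a hypothetical one-layer mean aggregator with a single scalar weight.
- The corruption function shuffles feature rows across nodes while keeping the edges.
- The summary is the sigmoid of the mean node representation.
- The discriminator is a bilinear score h^T W s.

The function only evaluates the loss. In practice, the encoder and the discriminator matrix are trained jointly by gradient descent to minimize it.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def encode(adjacency, features, weight):
    """Hypothetical one-layer encoder: ReLU(weight * mean of node + neighbours)."""
    out = []
    for i, neighbours in enumerate(adjacency):
        group = [features[i]] + [features[j] for j in neighbours]
        pooled = [sum(v[k] for v in group) / len(group) for k in range(len(features[i]))]
        out.append([max(0.0, weight * x) for x in pooled])
    return out


def corrupt(features, rng):
    """DGI-style corruption: shuffle feature rows across nodes, keep the edges."""
    shuffled = list(features)
    rng.shuffle(shuffled)
    return shuffled


def dgi_loss(adjacency, features, weight, disc_matrix, seed=0):
    """Binary cross-entropy DGI objective for one graph (no training step)."""
    rng = random.Random(seed)
    real = encode(adjacency, features, weight)
    fake = encode(adjacency, corrupt(features, rng), weight)
    # Graph summary s: sigmoid of the mean real node representation.
    dim = len(real[0])
    summary = [sigmoid(sum(h[k] for h in real) / len(real)) for k in range(dim)]
    # W s is shared by every discriminator call, so compute it once.
    ws = [sum(w * s for w, s in zip(row, summary)) for row in disc_matrix]

    def discriminator(h):
        # Bilinear score h^T W s, squashed into a probability.
        return sigmoid(sum(a * b for a, b in zip(h, ws)))

    # Real (node, summary) pairs are labelled 1, corrupted pairs 0.
    loss_real = -sum(math.log(discriminator(h)) for h in real) / len(real)
    loss_fake = -sum(math.log(1.0 - discriminator(h)) for h in fake) / len(fake)
    return loss_real + loss_fake


adjacency = [[1], [0, 2], [1]]  # chain 0 - 1 - 2
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(dgi_loss(adjacency, features, weight=1.0, disc_matrix=identity))
```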

Applications of GNNs

Graph neural networks (GNNs) have shown promising results in various applications, ranging from social networks and recommendation systems to drug discovery and materials science. Here are some of the major applications of GNNs:

- Social network analysis: GNNs can be used to analyze social networks, predict the links between users, and classify users based on their social network behavior.

- Recommender systems: GNNs can be used to build more accurate recommender systems that take into account the relationships between items and users.

- Computer vision: GNNs can be used to perform tasks such as object detection and segmentation, where the relationships between objects and their context are important.

- Natural language processing: GNNs can be used to analyze the relationships between words and their context in natural language text.

- Drug discovery: GNNs can be used to predict the properties of chemical compounds and their interactions with biological targets, accelerating the drug discovery process.

- Materials science: GNNs can be used to predict the properties of materials and their interactions with other materials, enabling the development of new materials with specific properties.

- Traffic analysis: GNNs can be used to predict traffic flows and congestion, and optimize traffic routing.

- Financial forecasting: GNNs can be used to analyze financial data, predict market trends and identify anomalies.

- Cybersecurity: GNNs can be used to detect and classify security threats in networks and systems.

- Robotics: GNNs can be used to control robotic systems, enabling more accurate and efficient navigation and manipulation.


