Machine Learning at Scale: Why PySpark MLlib Still Wins in 2025

Author(s): Yuval Mehta

Originally published on Towards AI.

Machine learning may be glamorous when you’re tuning models on Kaggle datasets or demoing GPT wrappers. But in production? It’s a grind.

You’re not just building a model. You’re building a system, one that takes in unfiltered data from real users, transforms it across distributed nodes, trains a model that doesn’t crash mid-run, and pushes predictions on a daily or even hourly basis. And that’s where PySpark MLlib shines, not as a modeling tool, but as infrastructure.

MLlib in 2025: Not Just Surviving, Still Scaling

PySpark MLlib isn’t flashy. It won’t give you every modern architecture or bleeding-edge ensemble trick. But it still handles what most enterprise machine learning actually looks like: massive datasets, repeatable training jobs, consistent pipelines, and scale without excuses.

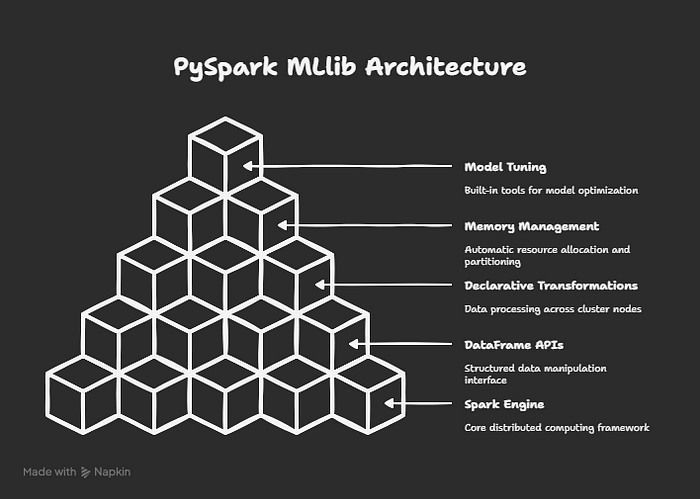

Built directly on Spark’s engine, MLlib pipelines leverage:

- DataFrame APIs (not RDDs anymore),

- Declarative transformations across nodes,

- Automatic memory and partition management, and

- Built-in model tuning and evaluation tools.

All of it runs natively on distributed clusters. No wrappers. No hacks. Just infrastructure that’s been battle-tested for a decade.

Pipelines Are the Product

Forget the model for a second.

What you really want in production is a pipeline. Something that reliably:

- Ingests 100M+ rows of raw data,

- Featurizes, encodes, scales, and joins them,

- Trains models in a distributed way, and

- Outputs predictions without breaking down over drift, scale, or time.

That’s the product. Not the model weights. Not the validation AUC. The end-to-end pipeline.

MLlib pipelines let you declare that entire flow. Each step, from StringIndexer to LogisticRegression, becomes a stage. These stages fit, transform, and evaluate at scale, without forcing you to hand-stitch every part. More importantly, they’re reusable, savable, and deployable. No copy-pasting from notebooks to job schedulers.

Why Scale Demands a Different Mindset

Here’s the thing most teams get wrong when moving from scikit-learn to MLlib:

They treat scale as a hardware problem, not a system problem.

But when you move from 100k records to 100 million, everything changes:

- In-memory joins break down.

- collect() calls crash drivers.

- You can’t track lineage across handcrafted functions.

- Feature drift, skew, and pipeline inconsistencies destroy reproducibility.

MLlib forces you to think differently. It rewards declarative pipelines, consistent schema definitions, and clean feature lineage. Not because it’s opinionated, but because it knows what happens when systems get big.

2025 Upgrades That Actually Matter

Spark has been evolving quietly, and smartly. If you haven’t touched MLlib in a few years, here’s what’s new and worth noting:

Unified DataFrame-Only API

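The old RDD-based API (spark.mllib) has been in maintenance mode since Spark 2.0, and the DataFrame-based spark.ml API is the primary one. It builds on Spark SQL and its Catalyst optimizer, so feature transformations benefit from query planning and optimized execution instead of hand-written RDD code.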

Adaptive Query Execution (AQE)

AQE, introduced in Spark 3.0 and enabled by default since Spark 3.2, re-optimizes query plans at runtime. It can switch join strategies, coalesce shuffle partitions, and split skewed partitions based on actual data statistics, not just what you assumed.

Pandas UDF Support

Yes, you can write Pandas UDFs and apply them to the DataFrames that feed your pipeline. They run on the executors over Arrow batches, so you can plug in custom logic without giving up distributed execution. A rare and welcome middle ground between ease and scale.

GPU Acceleration

With TorchDistributor (added in Spark 3.4) and DeepspeedTorchDistributor (added in Spark 3.5), you can launch distributed PyTorch training on GPU-equipped Spark clusters. This brings PyTorch into Spark workflows, without rewriting pipelines.

A Use Case That Hits Hard: Churn Prediction for 50M Customers

Let’s get concrete, no code, just context.

You work at a telco. You’ve got:

- Daily usage logs (1TB+),

- Customer support chat summaries (semi-structured),

- Subscription and billing info (relational),

- And a model to predict customer churn before it happens.

What MLlib gives you:

- Pipeline structure to encode all your categorical variables across millions of users,

- Vector assemblers to track features cleanly,

- CrossValidator to test multiple regParam and elasticNetParam values across the cluster,

- And a final .fit() that doesn’t just finish, but does so without blowing up your memory.

Your pipeline runs daily. It auto-retrains. It logs every step. And because it’s saved as a single MLlib object, it can be loaded tomorrow, retrained next week, and served via MLflow or custom batch jobs.

This isn’t science fiction. It’s what companies are doing every day, with MLlib under the hood.

What MLlib Isn’t (And That’s Okay)

To be clear, MLlib isn’t perfect.

- It doesn’t have CatBoost or LightGBM.

- You won’t find cutting-edge neural nets.

- Custom training loops are harder to write.

- Its verbosity can be annoying for prototyping.

But that’s the price you pay for scale. MLlib isn’t about shiny. It’s about stability. If you need transformers, fine-tuned embeddings, or zero-shot generalization, use Hugging Face.

But if your ML system must handle billions of rows, run without fail, and integrate directly with your data lake, MLlib is still unmatched.

Tuning Isn’t a Nice-to-Have — It’s Required

Most teams that complain MLlib is “underperforming” skipped this part.

MLlib comes with native CrossValidator and TrainValidationSplit. Use them.

Set up param grids. Let Spark parallelize and evaluate multiple models. Sure, it takes time, but it saves you from shipping a fragile model that performs great in your notebook and fails in the wild.

MLlib also supports model evaluation with:

- BinaryClassificationEvaluator

- MulticlassClassificationEvaluator

- RegressionEvaluator

These evaluators compute metrics in a distributed fashion across the cluster, without needing to pull predictions to a single node.

At scale, that’s survival.

When to Use MLlib (and When Not To)

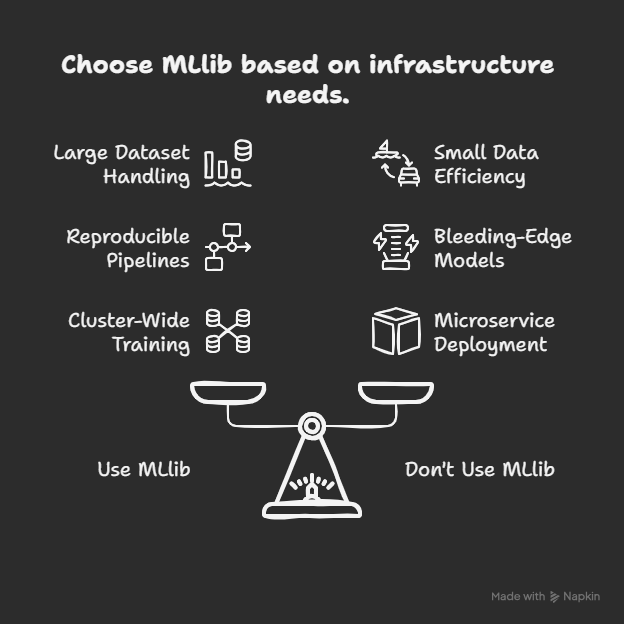

📈 Use MLlib when:

- Your dataset doesn’t fit in memory

- You want full pipeline reproducibility

- You’re working with Spark anyway (ETL + modeling)

- You need cluster-wide training and validation

- Fault-tolerance and job orchestration matter

🧪 Don’t use MLlib when:

- You need bleeding-edge architecture

- Your data is small and fast-moving

- You’re deploying microservices or fine-tuned models with custom inference logic

MLlib isn’t always the best fit. But when it fits, it fits like infrastructure.

MLlib Is Not the Future of ML — It’s the Foundation

Tools come and go. Model APIs evolve. But pipelines? Pipelines stay.

And PySpark MLlib gives you a pipeline structure built to last across versions, across team handoffs, across data drifts and production outages.

It’s not glamorous. It’s not hyped. But when you’re on call at 2 a.m. trying to figure out why your churn model broke after a schema change, MLlib’s declarative, testable, trackable flow will feel like the smartest decision you made all year.

📚 Resources for the Modern MLlib Engineer

- Spark MLlib Docs (3.5+)

- Adaptive Query Execution Deep Dive

- PySpark with GPUs via DeepspeedTorchDistributor


Note: Content contains the views of the contributing authors and not Towards AI.