First-Time-Right Code Generation: Detailed Best Practices for AI-Assisted Development Teams

Author(s): Mishtert T

Originally published on Towards AI.

As someone who’s spent countless hours debugging code that seemed perfect at first glance, I’ve learned that AI coding tools can be both a blessing and a curse.

The question isn’t whether these tools make us faster; they do.

The real question is whether they make us better.

Cursor and GitHub Copilot are two examples of contemporary AI coding aids that can significantly reduce development cycles. However, empirical studies show that 25–50% of initial AI suggestions contain functional or security defects.

Achieving first-time-right (FTR) code, meaning code that compiles, passes tests, meets standards, and is production-ready on the first commit, requires disciplined practices that go beyond merely accepting AI output.

This technical playbook, written from my perspective, translates research findings and experience into habits, guardrails, and automation patterns. Together, these elements help every engineer ship FTR code consistently without sacrificing productivity.

Why First-Time-Right Matters

First-Time-Right emerged from manufacturing floors, where discovering defects after production meant scrapping entire batches or costly recalls. But wait, is software really the same? I think software might be worse.

In my experience, I’ve seen how a single overlooked vulnerability can persist for years. Manufacturing defects affect individual products, but code defects can impact every user, every transaction, indefinitely.

Let me break down why this matters so much in practice:

- Debug cycles destroy momentum: I’ve been there — you’re in flow state, then boom, you get pulled back to code you wrote last week. The research shows these interruptions consume 30 to 45 minutes per fix, but honestly, that feels conservative when you factor in the mental context switching

- Security holes compound over time: This is what really keeps me up at night. Manufacturing defects are contained, but code vulnerabilities? Studies reveal that nearly half of AI-generated code snippets contain CWE-listed weaknesses

- Trust erosion creates team friction: I’ve watched this happen firsthand. When AI suggestions consistently fail, developers start second-guessing everything. Pipeline congestion adds insult to injury; each failed CI round steals another 12 minutes from the team’s flow

I need to clarify something important. FTR isn’t about achieving perfection on the first try. That would be impossible and frankly, paralyzing. It’s about establishing processes that catch problems before they escape into production.

The Hidden Cost of “Good Enough” Code

A team member used GitHub Copilot to generate an authentication function. The code compiled, looked professional, and passed basic tests. Two weeks later, we discovered it contained a vulnerability that could expose user data. The 15 minutes saved during coding turned into 6 hours of emergency patching.

This pattern repeats across the industry, and it is not a minor issue; it’s a systematic problem that requires systematic solutions.

Anatomy of First-Time-Right in AI-Enhanced Workflows

My experience with AI coding tools has taught me that success requires discipline, not just better prompts. Better prompts still matter enormously, but they’re only one piece of the puzzle.

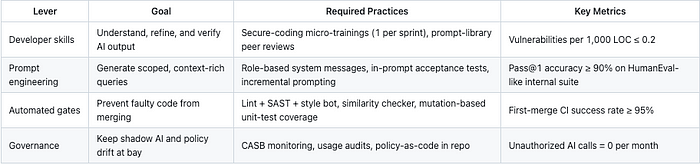

The most effective approach I’ve found combines four elements:

- Granular prompting: Break complex features into 15–20 line increments rather than asking for complete functions

- Mandatory explanation: Never commit AI-generated code until you can explain its logic and edge cases

- Automated verification: Use static analysis tools and mutation testing to catch issues before human review

- Iterative refinement: Ask the AI to explain its code, then optimize based on that explanation

The teams that succeed with AI coding tools treat them as junior developers who need supervision, not as super code generators.

1. Precision Prompt Engineering 🎯

1.1 Adopt Role-Anchored System Messages

Begin every Copilot Chat or Cursor session with a reusable system prompt that enforces organizational conventions:

You are a Senior <LANG> engineer in <ORG>.
Generate secure, idiomatic, unit-tested code that follows <STYLE_GUIDE_URL>.
Never expose secrets; prefer dependency injection; include docstrings and pytest-style tests.

A Microsoft internal study shows that explicit role framing raises the correct-answer rate by 18–24 points.
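Keeping the prompt in one versioned template, instead of retyping it per session, is what makes it reusable and consistent across a team. Below is a minimal Python sketch, assuming the widely used `{"role": ..., "content": ...}` chat-message shape; the template variable names and the `build_messages` helper are illustrative, not part of Copilot's or Cursor's own configuration.

```python
from string import Template

# Illustrative template mirroring the role-anchored prompt above.
# $lang, $org and $style_guide_url stand in for <LANG>, <ORG>, <STYLE_GUIDE_URL>.
SYSTEM_PROMPT = Template(
    "You are a Senior $lang engineer in $org.\n"
    "Generate secure, idiomatic, unit-tested code that follows $style_guide_url.\n"
    "Never expose secrets; prefer dependency injection; include docstrings and "
    "pytest-style tests."
)


def build_messages(user_request: str, *, lang: str, org: str, style_guide_url: str) -> list[dict]:
    """Prepend the shared system prompt to one user request.

    Template.substitute raises KeyError if a placeholder is left unfilled,
    so a half-configured prompt fails loudly instead of reaching the model.
    """
    system = SYSTEM_PROMPT.substitute(lang=lang, org=org, style_guide_url=style_guide_url)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_request},
    ]
```

Storing the template in the repository lets prompt changes go through the same review process as code.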

1.2 Decompose Work into Atomic Prompts

Large “one-shot” feature requests produce hallucinations and style drift. Qodo’s 2025 survey found that 65% of low-quality answers stem from missing context.

Break features into 15–20 line increments:

- Signature stub prompt: Define function API, types, docstring skeleton.

- Implementation prompt: Supply the signature plus comments describing any complex branch logic.

- Test prompt: Request parameterized unit tests that exercise edge cases.
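One way to keep these three stages disciplined is to generate them from a single spec rather than writing one large request. The following is a hypothetical Python helper; the `AtomicPrompt` type, the `decompose` function and the prompt wording are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AtomicPrompt:
    stage: str  # "signature", "implementation" or "tests"
    text: str


def decompose(feature: str, signature: str) -> list[AtomicPrompt]:
    """Split one feature request into three ordered, small-scope prompts."""
    return [
        AtomicPrompt(
            "signature",
            f"Feature: {feature}\nWrite only the stub for `{signature}`: "
            "type hints and a docstring skeleton, with a body of `raise NotImplementedError`.",
        ),
        AtomicPrompt(
            "implementation",
            f"Implement `{signature}` using the stub above. "
            "Keep it under 20 lines and comment each non-trivial branch.",
        ),
        AtomicPrompt(
            "tests",
            f"Write parameterized pytest tests for `{signature}` "
            "covering empty input, boundary values and invalid types.",
        ),
    ]
```

Each prompt is sent in order, and its output is reviewed before the next one goes out, so a flawed signature is caught before any implementation is built on top of it.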

1.3 Embed Acceptance-Test Hints

Attach Gherkin-style scenarios or examples directly inside the prompt:

# Examples
# add(2,3) -> 5
# add(-4,4) -> 0

LLMs conditioned with example-driven prompts achieve up to 1.9% higher first-pass accuracy (pass@1) while using 74.8% fewer tokens than chain-of-thought (CoT) prompting on HumanEval.
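Because the example hints follow a fixed `name(args) -> result` shape, the same lines can double as acceptance tests for whatever the model returns. This is a minimal sketch that assumes exactly that comment format; `parse_examples` and `failing_examples` are hypothetical helpers, and `ast.literal_eval` restricts parsing to Python literals so nothing in the prompt is executed.

```python
import ast
import re
from typing import Any, Callable

# Matches example hints such as "# add(2,3) -> 5".
EXAMPLE_RE = re.compile(r"#\s*(\w+)\((.*)\)\s*->\s*(.+)")


def parse_examples(prompt: str) -> list[tuple[str, tuple, Any]]:
    """Extract (function name, args, expected result) triples from example comments."""
    cases = []
    for line in prompt.splitlines():
        match = EXAMPLE_RE.match(line.strip())
        if not match:
            continue  # e.g. the "# Examples" header line
        name, args_src, expected_src = match.groups()
        args = ast.literal_eval(f"({args_src},)") if args_src.strip() else ()
        expected = ast.literal_eval(expected_src.strip())
        cases.append((name, args, expected))
    return cases


def failing_examples(func: Callable, prompt: str) -> list[str]:
    """Run a generated function against the prompt's own examples; return mismatches."""
    failures = []
    for name, args, expected in parse_examples(prompt):
        if name != func.__name__:
            continue
        actual = func(*args)
        if actual != expected:
            failures.append(f"{name}{args} returned {actual!r}, expected {expected!r}")
    return failures
```

An empty list from `failing_examples` means the generated code at least satisfies the examples it was given; it is a floor, not a substitute for the unit tests.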

1.4 Iterative Refinement Loop

After the initial generation:

- Explain: Ask the model to paraphrase its code to surface hidden flaws.

- Constrain: Request optimizations (“O(n) not O(n²)”) or security tightening.

- Regenerate: Limit iterations to ≤3 to avoid diminishing returns and token waste.
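The explain, constrain, regenerate cycle with a hard cap can be sketched as a small loop. This is an illustration under stated assumptions: `generate` and `explain` are hypothetical wrappers around whichever assistant you use, and `accept` stands for the human or automated check (tests, review of the explanation).

```python
from typing import Callable, Optional


def refine(
    prompt: str,
    generate: Callable[[str], str],
    explain: Callable[[str], str],
    accept: Callable[[str, str], bool],
    max_iterations: int = 3,
) -> Optional[str]:
    """Explain, constrain and regenerate, stopping after max_iterations attempts.

    Returns the first accepted code, or None so the developer takes over.
    """
    current_prompt = prompt
    for attempt in range(1, max_iterations + 1):
        code = generate(current_prompt)
        explanation = explain(code)  # the model paraphrases its own code
        if accept(code, explanation):
            return code
        # Constrain: feed the explanation back with an explicit request to fix it.
        current_prompt = (
            f"{prompt}\n\nAttempt {attempt} was rejected. Your explanation was:\n"
            f"{explanation}\nAddress the flaws it reveals (complexity, validation, security)."
        )
    return None
```

Returning `None` instead of a best-effort answer makes the cap explicit: after three rejected attempts, the task goes back to a human rather than into another round of token spend.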

2. Developer Verification Discipline 🔍

2.1 “Explain-before-Accept” Rule

No suggestion should be committed until the developer can explain its flow and edge cases in the PR description.

This closes the comprehension gap highlighted in empirical studies, where unclear intent accounted for 44% of rejected AI code.
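The rule is easier to keep when CI checks that the explanation is actually there. A minimal sketch, assuming a PR template with "How it works" and "Edge cases" headings (hypothetical names, adjust to your template); it only verifies that the sections exist and are non-empty, so judging the explanation's quality stays with the reviewer.

```python
import re

# Hypothetical PR-template headings; rename to match your own template.
REQUIRED_SECTIONS = ("How it works", "Edge cases")


def missing_explanations(pr_body: str, contains_ai_code: bool) -> list[str]:
    """Return required explanation sections that are absent or left empty."""
    if not contains_ai_code:
        return []
    missing = []
    for title in REQUIRED_SECTIONS:
        # Capture everything after the heading up to the next "## " heading or the end.
        match = re.search(
            rf"^##\s+{re.escape(title)}\s*$(.*?)(?=^##\s|\Z)",
            pr_body,
            flags=re.MULTILINE | re.DOTALL,
        )
        if match is None or not match.group(1).strip():
            missing.append(title)
    return missing
```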

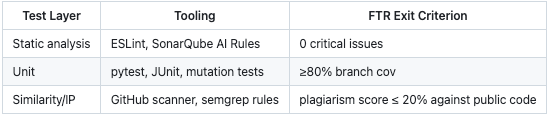

2.2 Mandatory Triple Test Triad

CI blocks merge on any failure, ensuring faults surface before review.

2.3 Secure-by-Default Snippet Library

Maintain an internal, vetted snippet repository (patterns for auth, DB access, and error handling) that developers can reference in their prompts, for example:

Use company::auth::jwt.verify_token() for user validation.

Snippet reuse reduced vulnerability density by 38% in a 6-month JetBrains pilot.
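Referencing vetted snippets works best when it is automated rather than remembered. Below is a hypothetical sketch: only the auth entry comes from the example above, while the `db` and `errors` entries and the `with_snippet_refs` helper are placeholders for whatever your own library contains.

```python
# Hypothetical registry of vetted snippets. Only "auth" comes from the example
# above; the other entries are illustrative placeholders.
VETTED_SNIPPETS = {
    "auth": "Use company::auth::jwt.verify_token() for user validation.",
    "db": "Use the approved parameterized-query helper for all database access.",
    "errors": "Use the shared error-handling wrapper; never return raw stack traces.",
}


def with_snippet_refs(prompt: str, topics: list[str]) -> str:
    """Append vetted-snippet instructions for the given topics to a prompt."""
    unknown = [t for t in topics if t not in VETTED_SNIPPETS]
    if unknown:
        # Fail loudly: a topic without a vetted pattern needs human design first.
        raise KeyError(f"No vetted snippet for: {', '.join(unknown)}")
    refs = "\n".join(VETTED_SNIPPETS[t] for t in topics)
    return f"{prompt}\n\nConstraints:\n{refs}" if refs else prompt
```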

3. Automated Quality Gates 🚪

3.1 AI-Tagging Pre-Commit Hook

A Git pre-commit hook uses diff heuristics to tag Copilot-generated lines with `# AI-GEN`. The SAST pipeline then applies stricter thresholds to tagged regions (e.g., blocking on high-severity CWE findings).
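The stricter-threshold step can be sketched independently of the tagging heuristics, which are not shown here. This minimal sketch assumes the SAST tool's findings have already been reduced to line numbers and severities; the `Finding` type and the threshold values are illustrative.

```python
from dataclasses import dataclass

SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}

# Illustrative thresholds: AI-tagged lines block at "high", other lines only at "critical".
BLOCK_AT = {"ai": SEVERITY["high"], "human": SEVERITY["critical"]}


@dataclass(frozen=True)
class Finding:
    line: int      # 1-based line number reported by the SAST tool
    severity: str  # one of SEVERITY's keys
    rule: str      # e.g. a CWE identifier


def ai_tagged_lines(source: str, marker: str = "# AI-GEN") -> set[int]:
    """1-based line numbers whose text ends with the AI-GEN marker."""
    return {
        number
        for number, text in enumerate(source.splitlines(), start=1)
        if text.rstrip().endswith(marker)
    }


def blocking_findings(source: str, findings: list[Finding]) -> list[Finding]:
    """Findings that should fail the pipeline, using the stricter bar on tagged lines."""
    tagged = ai_tagged_lines(source)
    return [
        f
        for f in findings
        if SEVERITY[f.severity] >= BLOCK_AT["ai" if f.line in tagged else "human"]
    ]
```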

3.2 Policy-as-Code

Store YAML rules in `/ai-policies/`.

ai:
  allow_public_code_match: false
  require_tests: true
  secret_scans: true

Pipelines fail fast on policy violations, enforcing uniform governance across repos.
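A pipeline step that enforces these rules can be small. This sketch hard-codes the policy as a dict mirroring the YAML; in practice it would be loaded with a YAML parser such as PyYAML's `yaml.safe_load`. The `ChangeReport` fields are hypothetical facts that earlier CI steps (similarity check, test diff, secret scanner) would supply.

```python
from dataclasses import dataclass

# Mirrors the YAML policy above.
POLICY = {"ai": {"allow_public_code_match": False, "require_tests": True, "secret_scans": True}}


@dataclass
class ChangeReport:
    """Hypothetical facts gathered by earlier CI steps about one change set."""
    public_code_match: bool
    tests_changed: bool
    secrets_found: int


def policy_violations(policy: dict, report: ChangeReport) -> list[str]:
    rules = policy.get("ai", {})
    violations = []
    # Missing keys fall back to the strict setting (fail closed).
    if not rules.get("allow_public_code_match", False) and report.public_code_match:
        violations.append("suggestion matches public code")
    if rules.get("require_tests", True) and not report.tests_changed:
        violations.append("no tests added or updated")
    if rules.get("secret_scans", True) and report.secrets_found > 0:
        violations.append(f"{report.secrets_found} secret(s) detected")
    return violations
```

A CI step would print the violations and exit non-zero when the list is non-empty, which is what makes the pipeline fail fast.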

3.3 Smart CI Parallelism

To save CI minutes, run lightweight linters first and stop downstream jobs if they fail. This lets large organizations recover up to 19% of pipeline time.
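The ordering logic can be illustrated in plain Python, with checks modeled as callables. In a real CI system, the same effect usually comes from stage dependencies in the pipeline configuration rather than a custom runner, so treat this as a sketch of the idea; stage and check names are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Check = Callable[[], bool]  # a check returns True when it passes


def run_pipeline(stages: list[tuple[str, dict[str, Check]]]) -> dict[str, str]:
    """Run stages in order, cheapest first; checks inside a stage run in parallel.

    Once any check in a stage fails, every later stage is reported as
    "skipped" instead of consuming CI minutes.
    """
    results: dict[str, str] = {}
    failed = False
    for stage, checks in stages:
        if failed:
            results.update({f"{stage}:{name}": "skipped" for name in checks})
            continue
        with ThreadPoolExecutor() as pool:
            futures = {name: pool.submit(check) for name, check in checks.items()}
        for name, future in futures.items():
            try:
                passed = bool(future.result())
            except Exception:
                passed = False  # a crashing check counts as a failure
            results[f"{stage}:{name}"] = "passed" if passed else "failed"
            failed = failed or not passed
    return results
```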

4. Continuous Feedback and Learning 🔄

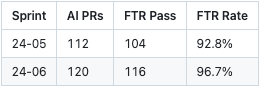

4.1 PR-Label Metrics

Tag merged PRs with `ai-ftr-pass` or `ai-ftr-fail`. Weekly script aggregates:

Trend dashboards spotlight teams needing coaching.
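The list of weekly aggregates isn't spelled out here; one plausible metric is the FTR pass rate per team per ISO week. The following is a hypothetical sketch that leaves out fetching PR data from your Git host; the `MergedPR` shape is an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class MergedPR:
    team: str
    merged_on: date
    labels: frozenset[str]


def weekly_ftr_rate(prs: list[MergedPR]) -> dict[tuple[str, str], float]:
    """FTR pass rate per (team, ISO week), counting only PRs with an FTR label."""
    counts: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])  # [passes, labelled]
    for pr in prs:
        if "ai-ftr-pass" in pr.labels:
            passed = 1
        elif "ai-ftr-fail" in pr.labels:
            passed = 0
        else:
            continue  # not AI-assisted, or not labelled yet
        year, week, _ = pr.merged_on.isocalendar()
        key = (pr.team, f"{year}-W{week:02d}")
        counts[key][0] += passed
        counts[key][1] += 1
    return {key: passes / total for key, (passes, total) in counts.items()}
```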

4.2 Chatbot Telemetry Review

To improve prompt templates, collect prompt-completion pairs, anonymize them, and review them once a month. Google Cloud research shows that curating a prompt library cuts future token usage by 31%.
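The anonymization step is where telemetry review most often goes wrong, so it belongs in code rather than in a manual checklist. A minimal regex-based sketch; the three patterns are illustrative only, and real telemetry would need a reviewed, much broader redaction list.

```python
import re

# Illustrative redaction patterns only; production use needs a reviewed list.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<EMAIL>"),
    (re.compile(r"\b(?:api[_-]?key|token|secret|password)\s*[:=]\s*\S+", re.IGNORECASE), "<CREDENTIAL>"),
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
]


def anonymize(text: str) -> str:
    """Replace each matched sensitive pattern with a fixed placeholder."""
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


def anonymize_pairs(pairs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Anonymize both sides of every (prompt, completion) pair."""
    return [(anonymize(prompt), anonymize(completion)) for prompt, completion in pairs]
```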

4.3 Champion Round-tables

Nominate one “AI champion” per squad. Monthly sessions share failures and fixes (e.g., context size hacks, better test prompts). Peer learning improved perceived code quality by 81% where adopted.

5. Quick-Reference Checklist for Engineers ✅

- Use/refer to the approved role-based system prompt.

- Limit each prompt to a goal of ≤20 LOC.

- Include examples and edge cases.

- Ask the model to explain and optimize its code.

- Add or accept only when the explanation is clear.

- Ensure unit tests are generated and pass locally.

- Push; allow CI to validate SAST, coverage, and similarity.

- Review PR diff; tag reviewer on AI-generated blocks.

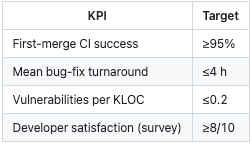

6. Measuring Success 📏

Final Thoughts

Let me be completely honest about this. Implementing FTR practices requires upfront investment. Teams need to establish new workflows, train developers on prompt engineering, and set up automated quality gates.

Some organizations might resist this additional overhead. But the alternative of continuing to treat AI-generated code as immediately production-ready is ultimately more expensive and riskier.

With AI tools becoming more sophisticated and widely adopted, the volume of potentially problematic code is growing rapidly.

The solution isn’t to abandon AI coding tools. It’s to use them more intelligently. First-Time-Right practices provide the framework for doing exactly that, harnessing AI’s speed while maintaining human oversight and quality standards.

The goal isn’t to replace human judgment with AI efficiency. It’s to augment human capabilities with AI assistance, creating a development process that’s both faster and more reliable than either approach alone.

About the Author:

Transformation & AI Architect | Governance Advocate | Mediator & Arbitrator

An analytics and transformation leader with over 24 years of experience, advising on change, risk management, and embracing technology while emphasizing corporate governance. As a mentor, Mishtert aids organizations in excelling amid evolving landscapes.


Published via Towards AI
