Beyond A/B Testing: How Contextual Bandits Revolutionize Experimentation in Machine Learning

Last Updated on December 18, 2024 by Editorial Team

Author(s): Joseph Robinson, Ph.D.

Originally published on Towards AI.


In Part 1, we introduced the concept of Contextual Bandits, a powerful technique for solving real-time decision-making problems. In Part 2, we compared Contextual Bandits to Supervised Learning, highlighting the advantages of adaptive optimization over static learning.

Now, we focus on an age-old question in experimentation: A/B Testing or Contextual Bandits?

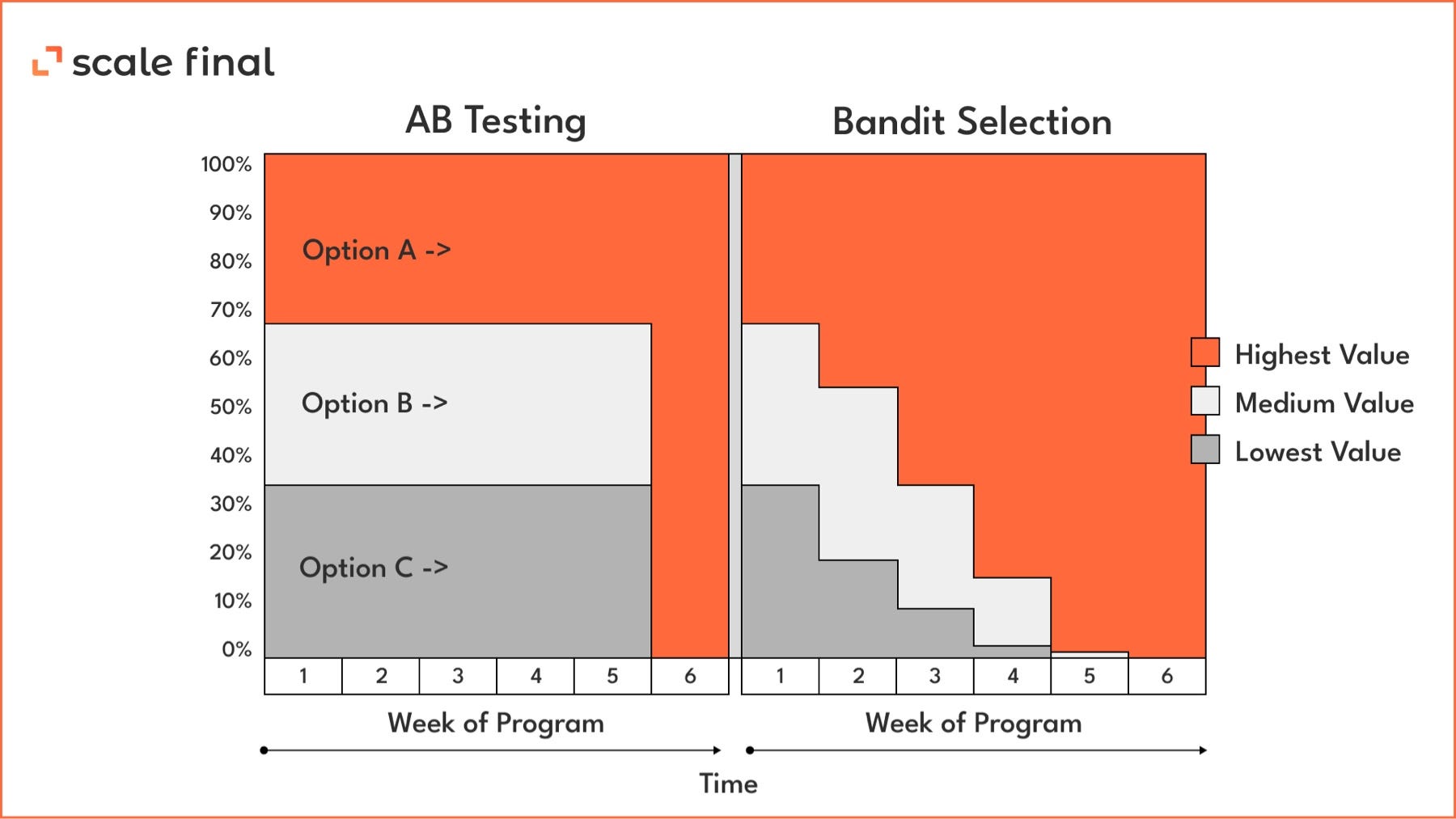

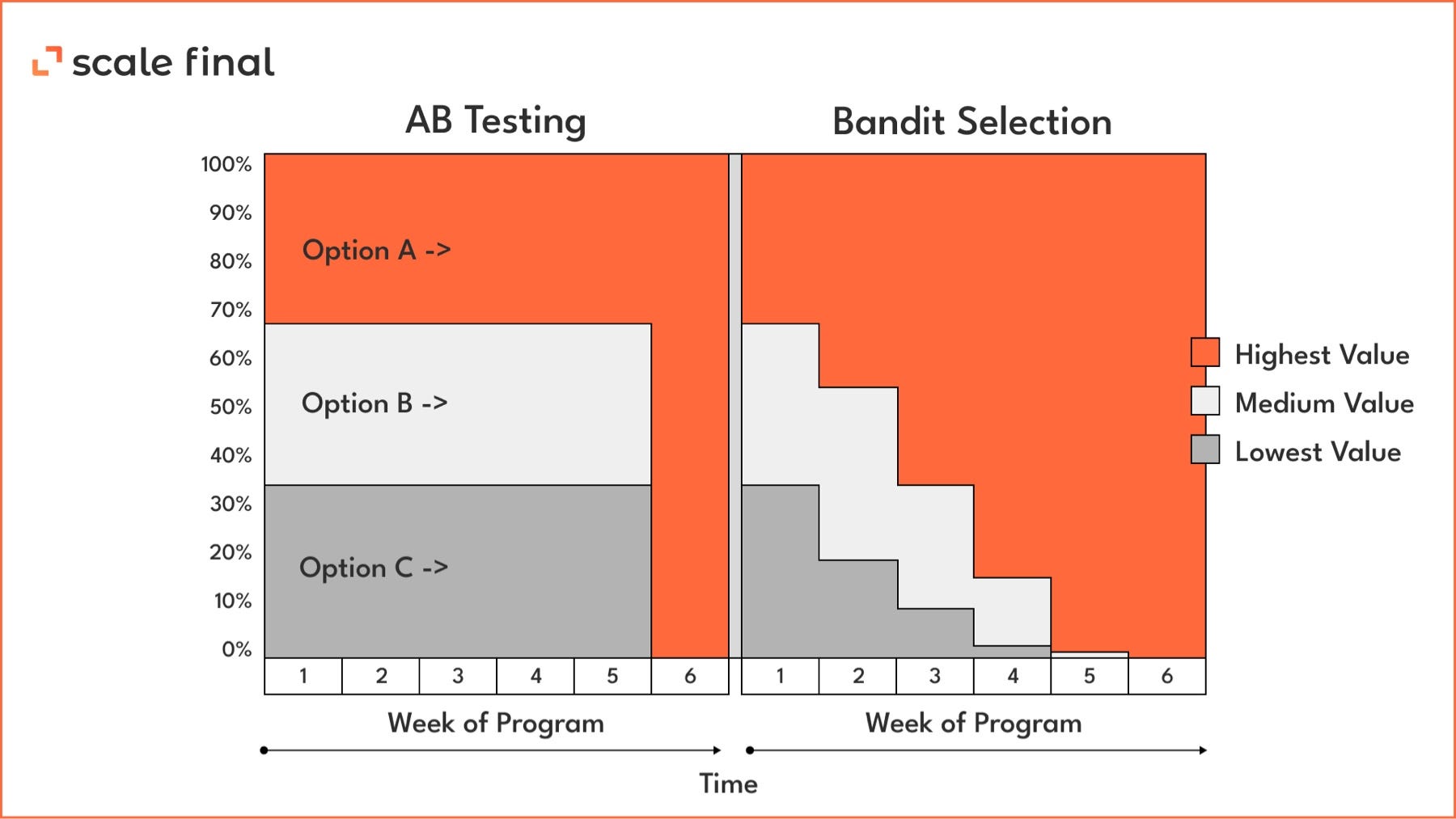

A/B Testing has been the gold standard for experimentation for years, offering simplicity and clear insights. However, it has limitations, particularly in dynamic environments where user behavior evolves and opportunity costs compound during fixed testing periods. Enter Contextual Bandits, a method that balances exploration (i.e., trying new options) with exploitation (i.e., leveraging what works) to optimize real-time decisions and personalize experiences.
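To make the exploration/exploitation trade-off concrete, here is a minimal sketch of an epsilon-greedy contextual bandit running next to a fixed 50/50 A/B split. It is an illustration under stated assumptions, not the method from this series: the class name, the `simulate` helper, the two contexts (`mobile`, `desktop`), and the conversion rates are all hypothetical, and epsilon-greedy is only one of several possible bandit strategies. The A/B side is modeled only during its test period, before any winner is rolled out.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    """Toy contextual bandit: one running mean reward per (context, arm) pair."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, arm) -> number of pulls
        self.values = defaultdict(float)  # (context, arm) -> mean observed reward

    def choose(self, context):
        # Explore: with probability epsilon, try a random arm.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        # Exploit: otherwise pick the arm with the best estimate for this context.
        return max(self.arms, key=lambda arm: self.values[(context, arm)])

    def update(self, context, arm, reward):
        key = (context, arm)
        self.counts[key] += 1
        # Incremental update of the running mean.
        self.values[key] += (reward - self.values[key]) / self.counts[key]


def simulate(rounds=10000, seed=0):
    rng = random.Random(seed)
    # Hypothetical conversion rates: B wins on mobile, A wins on desktop.
    true_rates = {
        ("mobile", "A"): 0.05, ("mobile", "B"): 0.15,
        ("desktop", "A"): 0.12, ("desktop", "B"): 0.04,
    }
    bandit = EpsilonGreedyContextualBandit(["A", "B"], epsilon=0.1, seed=seed)
    ab_total = 0
    bandit_total = 0
    for _ in range(rounds):
        context = rng.choice(["mobile", "desktop"])

        # A/B test: static 50/50 allocation that ignores context.
        ab_arm = rng.choice(["A", "B"])
        ab_total += int(rng.random() < true_rates[(context, ab_arm)])

        # Bandit: allocation adapts per context as rewards arrive.
        arm = bandit.choose(context)
        reward = int(rng.random() < true_rates[(context, arm)])
        bandit.update(context, arm, reward)
        bandit_total += reward
    return ab_total, bandit_total, bandit


if __name__ == "__main__":
    ab_total, bandit_total, bandit = simulate()
    print("A/B conversions during test:", ab_total)
    print("Bandit conversions:", bandit_total)
```

In this toy setup no single variant wins for every user, so a global A/B decision leaves conversions on the table, while the bandit gradually shifts traffic toward the better arm in each context. The cost is extra moving parts: reward logging, online updates, and a choice of exploration rate.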

This blog will explain when and why to choose each approach using a clear 5-factor decision framework. By the end, you’ll have a practical toolkit to align experimentation methods with your business goals, data constraints, and operational complexity.

Is it time to keep it simple, or should you adapt dynamically?

Let’s find out. 🚀

· A/B Testing vs. Contextual Bandits: A Quick Recap
  ∘ A/B Testing: Static Allocation and Global Decisions
  ∘ Contextual Bandits: Adaptive…

Read the full blog for free on Medium.


Note: Content contains the views of the contributing authors and not Towards AI.