Q&A Bot Using Gen-AI

Last Updated on November 4, 2024 by Editorial Team

Author(s): Dr. Ori Cohen

Originally published on Towards AI.

In this article, I’ll describe how to build a simple Q&A bot using state-of-the-art retrieval techniques and language models. The bot lets users ask specific questions about machine learning and receive precise answers derived from the Machine Learning and Deep Learning Compendium, a rich repository of knowledge that I have been curating for years.

The ML Compendium is available as an open-source book on GitHub, so feel free to give it a star!

GitHub – orico/www.mlcompendium.com: The Machine Learning & Deep Learning Compendium was a list of…

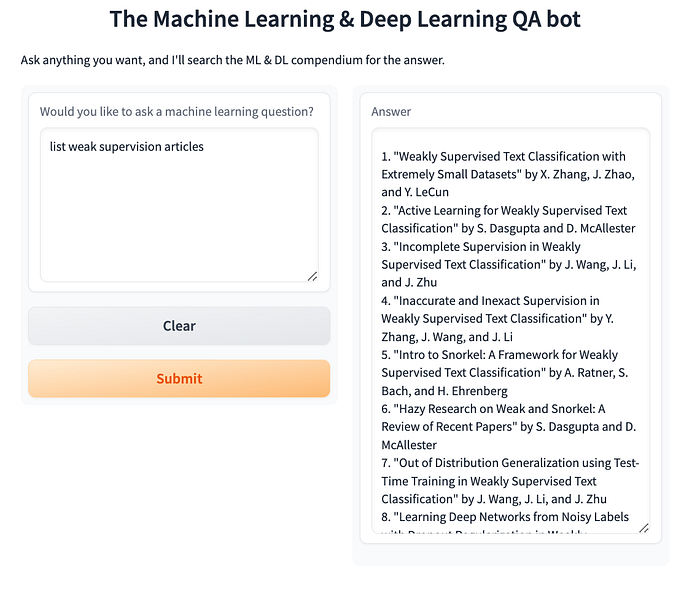

With a simple interface deployed on Hugging Face, this bot aims to make deep dives into machine learning concepts more accessible while offering a reliable resource for fast, detailed responses.

Building a Q&A Bot Using Langchain, Pinecone, and OpenAI

Building an effective and intelligent Q&A bot requires the integration of various components for document retrieval, vector storage, and natural language understanding. In this section, we will explore how to construct a Q&A bot capable of answering questions about the knowledge contained in the ML Compendium.

Key Components

1. OpenAI API: Provides API access to the GPT-4o model for generating answers and to the embedding model used in document similarity searches.

2. Langchain: A framework for building applications powered by large language models (LLMs), specifically handling document retrieval and question-answering (QA) tasks.

3. Pinecone: A vector database used to store and retrieve document embeddings efficiently.

4. Gradio Interface: The frontend interface for users to interact with the bot.

Let’s break down the core components and processes involved in this bot.

Step-by-Step Implementation

1. Imports & Environment Setup

The code first fetches essential API keys and configurations from environment variables, such as `OPENAI_API_KEY` and `PINECONE_API_KEY`. These tokens are critical for connecting to services like OpenAI and Pinecone, which power various aspects of the Q&A bot.
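
As a rough sketch of this setup step, the snippet below reads the two keys and fails fast when one is missing. The helper name `load_settings` and the fail-fast behavior are assumptions for illustration; only the two variable names come from the text above.

```python
import os
from typing import Mapping

# The two variable names mentioned in the article.
REQUIRED_KEYS = ("OPENAI_API_KEY", "PINECONE_API_KEY")


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Return the required API keys, raising if any is missing or empty.

    `load_settings` is a hypothetical helper, not part of any library.
    """
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: env[key] for key in REQUIRED_KEYS}


# Example with an explicit mapping instead of the real environment.
settings = load_settings(
    {"OPENAI_API_KEY": "sk-example", "PINECONE_API_KEY": "pc-example"}
)
```

Passing the mapping in as a parameter keeps the function easy to test without touching the real environment.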

2. Loading and Splitting Documents

The compendium’s knowledge is fetched from a GitBook using the `GitbookLoader` provided by Langchain. This loader fetches all paths of the book and splits the content into manageable chunks using the `RecursiveCharacterTextSplitter`. The chunks are typically around 1,000 characters long, allowing for efficient processing and embedding.
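
To make the idea of recursive character splitting concrete, here is a simplified, standard-library stand-in. It is not Langchain's `RecursiveCharacterTextSplitter`: it has no chunk overlap, and the function name `split_text` and the separator order are assumptions for illustration. It tries the coarsest separator first and falls back to finer ones only for pieces that are still too long.

```python
def split_text(
    text: str,
    chunk_size: int = 1000,
    separators: tuple[str, ...] = ("\n\n", "\n", " ", ""),
) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Simplified illustration only; the final separator must be "".
    """
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces together
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: retry with finer separators.
            chunks.extend(split_text(piece, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks


sample = "a" * 600 + "\n\n" + "b" * 600 + "\n\n" + "c" * 2500
chunks = split_text(sample, chunk_size=1000)
```

Here the two 600-character paragraphs stay intact as separate chunks, while the 2,500-character run with no separators is cut into fixed-size pieces.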

3. Embeddings Generation

The document chunks are transformed into vector representations (embeddings) using OpenAI’s embedding model. These embeddings are crucial for similarity-based search, allowing the bot to retrieve the most relevant documents for a given user query.
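
The toy example below illustrates why embeddings enable similarity search. The word-count "embedding" is a deliberately crude stand-in for OpenAI's embedding model (which returns dense learned vectors), and `toy_embed` and the vocabulary are hypothetical; the cosine similarity calculation is the standard one.

```python
import math
from collections import Counter


def toy_embed(text: str, vocabulary: list[str]) -> list[float]:
    """Count how often each vocabulary word occurs (toy stand-in for a real model)."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


vocabulary = ["gradient", "descent", "tree", "forest", "loss"]
query = toy_embed("gradient descent loss", vocabulary)
doc_a = toy_embed("gradient descent minimizes the loss", vocabulary)
doc_b = toy_embed("random forest builds many tree models", vocabulary)

score_a = cosine_similarity(query, doc_a)  # shares all three query words
score_b = cosine_similarity(query, doc_b)  # shares none
```

The document about gradient descent scores higher than the one about random forests, which is the ranking signal the bot relies on when retrieving chunks.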

4. Initializing the Vector DB Index

The embeddings are stored and retrieved using Pinecone’s vector database. If the index already exists, the code loads the existing embeddings; otherwise, it creates a new index and populates it with document vectors. Pinecone supports fast similarity search using cosine similarity, and the vectors are stored with the metadata necessary for retrieval.
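
The load-or-create logic can be sketched with an in-memory stand-in. `InMemoryIndex`, `INDEXES`, and `get_or_create_index` are hypothetical names and are not the Pinecone client API; the sketch only mirrors the behavior described above: reuse an index if it exists, otherwise create and populate it, then rank stored vectors by cosine similarity and return their metadata.

```python
import math


class InMemoryIndex:
    """Hypothetical stand-in for a vector index; not the Pinecone client."""

    def __init__(self):
        self.records = {}  # record id -> (unit-length vector, metadata)

    def upsert(self, record_id, vector, metadata):
        norm = math.sqrt(sum(x * x for x in vector))
        if norm == 0:
            raise ValueError("cannot index a zero vector")
        # Store unit vectors so a dot product equals cosine similarity.
        self.records[record_id] = ([x / norm for x in vector], metadata)

    def query(self, vector, top_k=3):
        norm = math.sqrt(sum(x * x for x in vector)) or 1.0
        unit = [x / norm for x in vector]
        scored = [
            (sum(a * b for a, b in zip(unit, stored)), record_id, metadata)
            for record_id, (stored, metadata) in self.records.items()
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:top_k]


INDEXES = {}  # index name -> InMemoryIndex


def get_or_create_index(name, documents):
    """Reuse an existing index; otherwise create and populate a new one."""
    if name in INDEXES:
        return INDEXES[name]
    index = InMemoryIndex()
    for record_id, (vector, metadata) in documents.items():
        index.upsert(record_id, vector, metadata)
    INDEXES[name] = index
    return index


docs = {
    "chunk-1": ([1.0, 0.0], {"text": "about gradient descent"}),
    "chunk-2": ([0.0, 1.0], {"text": "about random forests"}),
}
index = get_or_create_index("compendium", docs)
same_index = get_or_create_index("compendium", {})  # second call reuses the index
best = index.query([0.9, 0.1], top_k=1)
```

Skipping the populate step when the index already exists is what avoids re-embedding the whole book on every start.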

5. Setting Up the Q&A Chain

Langchain’s `RetrievalQA` chain is configured to perform similarity searches on the vector store, retrieving relevant documents based on user queries. The `OpenAI` language model is then used to construct answers from these retrieved documents, allowing for both direct and contextual answers.

The “stuff” chain type (set with `chain_type="stuff"`) combines all retrieved documents into the model’s input at once, so the language model processes them together in a single call. This works as long as the combined documents fit within the model’s context window.
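
Conceptually, "stuffing" amounts to concatenating the retrieved chunks into one prompt. The sketch below shows that idea in plain Python; `build_stuff_prompt` and the prompt wording are assumptions for illustration and are not Langchain's actual template.

```python
def build_stuff_prompt(question: str, documents: list[str]) -> str:
    """Place every retrieved document into a single prompt (illustrative wording)."""
    context = "\n\n".join(documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


retrieved = [
    "Dropout randomly zeroes activations during training.",
    "Dropout acts as a regularizer that reduces overfitting.",
]
prompt = build_stuff_prompt("What does dropout do?", retrieved)
```

Because everything goes into one call, the approach is simple and keeps all context visible to the model, at the cost of a prompt that grows with the number of retrieved chunks.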

6. Query Processing

The `QAbot` function handles user queries. It takes the input query, searches the Pinecone vector store for the most similar documents, and passes the documents to the `RetrievalQA` chain to generate an answer.
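
The flow of such a query function can be sketched as follows. This is not the article's `QAbot` code: `make_qa_bot`, the injected `retrieve` and `generate` callables, the default `top_k=4`, and the fallback messages are all assumptions, and the fake components exist only so the example runs without any external service.

```python
from typing import Callable


def make_qa_bot(
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str, list[str]], str],
    top_k: int = 4,
) -> Callable[[str], str]:
    """Build a query function: retrieve similar documents, then generate an answer."""

    def qa_bot(query: str) -> str:
        query = query.strip()
        if not query:
            return "Please enter a question."
        documents = retrieve(query, top_k)
        if not documents:
            return "No relevant documents were found."
        return generate(query, documents)

    return qa_bot


# Fake components standing in for the vector store and the LLM chain.
def fake_retrieve(query: str, k: int) -> list[str]:
    corpus = ["Dropout randomly zeroes activations.", "PCA reduces dimensionality."]
    words = query.lower().split()
    return [doc for doc in corpus if any(w in doc.lower() for w in words)][:k]


def fake_generate(query: str, documents: list[str]) -> str:
    return f"Based on {len(documents)} document(s): {documents[0]}"


bot = make_qa_bot(fake_retrieve, fake_generate)
answer = bot("dropout")
```

Injecting the retriever and generator keeps the orchestration logic separate from the services behind it, which makes the function easy to test.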

7. User Interface with Gradio

Gradio is used to build a simple and interactive user interface for the bot. It provides a text box for user input (queries) and displays the bot’s responses. This interface is straightforward, making it easy for users to ask questions and receive answers.
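
A minimal sketch of such an interface, assuming the `gradio` package is installed. The function `answer_question` is a placeholder for the bot's query function, and the labels and title are illustrative choices, not the deployed app's actual settings.

```python
def answer_question(query: str) -> str:
    """Placeholder for the bot's query function (hypothetical)."""
    query = query.strip()
    if not query:
        return "Please enter a question."
    return f"(answer for: {query})"


def build_demo():
    """Create a text-in, text-out Gradio interface around answer_question."""
    import gradio as gr  # imported lazily so this module loads without Gradio

    return gr.Interface(
        fn=answer_question,
        inputs=gr.Textbox(label="Question", lines=2),
        outputs=gr.Textbox(label="Answer"),
        title="ML Compendium Q&A",
    )


# To start the app locally: build_demo().launch()
```

Swapping the placeholder for the real query function is the only change needed to connect the interface to the retrieval chain.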

Conclusion

The Q&A bot uses large language models and vector-based search to generate answers. Because LLMs are non-deterministic, responses to the same or similar queries may vary between attempts, even with this RAG implementation.

When an answer misses the mark, it often helps to rephrase or slightly adjust the query. A clearer statement of the user’s intent helps the bot retrieve more relevant documents and produce a more accurate answer.

Additionally, tweaking the language model’s parameters, such as lowering the temperature setting, can produce more deterministic and consistent answers, reducing creative variation so that responses are more focused and predictable.
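
To see why a lower temperature makes output more predictable, consider this toy calculation. It is a conceptual illustration, not OpenAI's implementation: the logits are made-up scores for three candidate tokens, and temperature is applied by dividing the logits before the softmax.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after scaling them by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
warm = softmax_with_temperature(logits, 1.0)
cool = softmax_with_temperature(logits, 0.2)
```

At temperature 1.0 the top token gets a probability of about 0.63, while at 0.2 it gets about 0.99, so sampling almost always picks the same token and answers vary far less between runs.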

Finally, as you can see, this implementation illustrates how useful integrations between Langchain, Pinecone, and OpenAI can be in building an intelligent Q&A system. By leveraging document retrieval, embeddings, and LLMs, the system provides contextual answers based on the ML & DL Compendium. The bot is user-friendly, scalable, and efficient, offering a valuable resource for anyone looking to deepen their understanding of machine learning.

Dr. Ori Cohen holds a Ph.D. in Computer Science, specializing in Artificial Intelligence. He is the author of the ML & DL Compendium and founder of StateOfMLOps.com.

Published via Towards AI