Survey on Retrieval Augmented Generation (RAG)

Author(s): Kyosuke Morita

Originally published on Towards AI.

RAG development so far and the future

As Large Language Models (LLMs) improve, Gen AI is becoming more popular. A successful Gen AI tool can bring several advantages, such as reducing costs by having a chatbot handle work otherwise done by a human, and increasing productivity. Retrieval Augmented Generation (RAG) [1] is one of the most popular methods that can be applied in the real world. It takes a pre-trained LLM and injects external knowledge into it, so that the LLM can answer questions about information that was not available when it was trained.

This article reviews the existing literature to summarise RAG developments and known challenges, and explores solutions to them.

Table of Contents

- RAG overview
- RAG vs Fine-tuning
- RAG evaluation
- RAG performance improvement
- Discussion
- References

As briefly mentioned above, RAG enhances LLMs by giving them access to an external vectorised data store. The technique is especially effective for knowledge-intensive NLP tasks such as question answering.

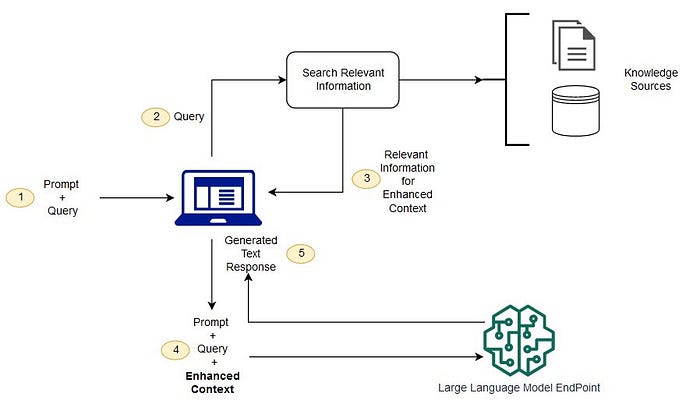

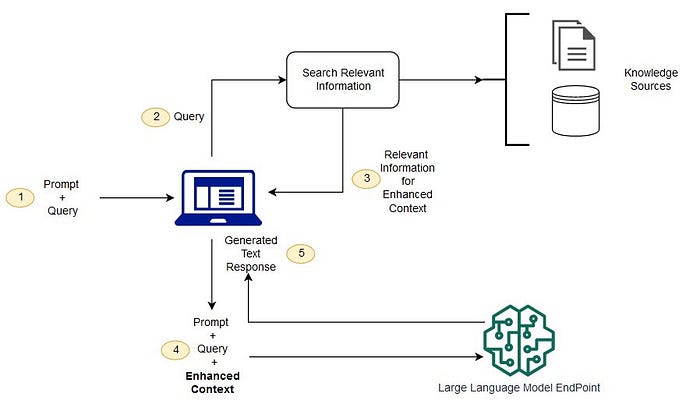

The image below gives a conceptual overview of the proposed RAG flow.

Image from https://aws.amazon.com/blogs/machine-learning/question-answering-using-retrieval-augmented-generation-with-foundation-models-in-amazon-sagemaker-jumpstart/

A RAG system has two important components: a Retriever and a Generator. Given a prompt/query from an end user, the retriever searches the vectorised knowledge store for relevant information. The pipeline queries…
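The retriever and generator roles described above can be illustrated with a minimal sketch. It is not the implementation from [1] or from any particular library: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and `generate` is a placeholder for a call to a pre-trained LLM. All names (`Retriever`, `build_prompt`, `generate`) and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector.
    A real system would use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Retriever:
    """Holds vectorised documents in memory and returns the closest ones."""

    def __init__(self, documents):
        # Vectorise every document once, up front.
        self.store = [(doc, embed(doc)) for doc in documents]

    def retrieve(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.store, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(query, passages):
    """Inject the retrieved passages into the prompt as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


def generate(prompt):
    """Placeholder generator (assumption): a real system would send
    the prompt to a pre-trained LLM and return its answer."""
    return f"[LLM answer conditioned on {len(prompt)} prompt characters]"


# Hypothetical knowledge base the LLM was never trained on.
documents = [
    "The warranty for the X100 laptop lasts two years.",
    "Our office is closed on public holidays.",
    "Refunds are processed within five business days.",
]

retriever = Retriever(documents)
query = "How long is the X100 warranty?"
passages = retriever.retrieve(query, k=1)
prompt = build_prompt(query, passages)
print(prompt)
print(generate(prompt))
```

Keeping retrieval and generation as separate steps means the knowledge base can be updated without retraining the model, since only the stored vectors change.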

