A Movie Recommendation System Using Nearest Neighbours and TensorFlow.

Last Updated on January 5, 2024 by Editorial Team

Author(s): Rakesh M K

Originally published on Towards AI.

We get recommendations from lot many websites and mobile applications like Netflix, amazon, Flipkart even in ‘medium’. There are many ways to develop a recommendation system, which depends on the data available. I am using data from IMDB, where movie names, along with their descriptions, are used to develop a Nearest Neighbor algorithm-based recommendation engine.

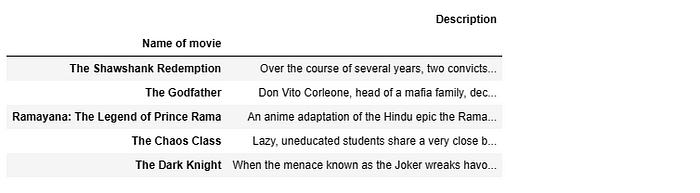

The data.

Around 10,000 movies with descriptions are scraped from IMDB and the dataframe looks like below. Step-by-step procedure is explained further with Python code.

Vectorization of text data.

The descriptions in the dataframe are in text format which needs to be converted into numerical form for further processing. This process is called vectorization, which is done with the help of the TensorFlow vectorization layer.

import tensorflow as tf

from tensorflow.keras.layers import TextVectorization

'''make a list of descriptions'''

text = desc['Description'].tolist()

max_vocab_length = 20000 #expected number of words in the whole descriptions

max_length=25 #length of vector

'''define a vectorization layer'''

text_vectorizer = TextVectorization(max_tokens = max_vocab_length, #how many words in the vocabulory

standardize="lower_and_strip_punctuation",

split="whitespace",

ngrams=None ,# create group of words

output_mode="int",

output_sequence_length=max_length) #length of sequence

text_vectorizer.adapt(text) #adapt/fit the model with text data

text_vector = text_vectorizer(text) #convert the text to vector

text_vector[:2] #check first two vectors

<tf.Tensor: shape=(2, 25), dtype=int64, numpy=

array([[ 110, 3, 623, 5, 441, 57, 29, 2290, 396,

2, 257, 896, 19626, 6, 857, 1044, 103, 6086,

5988, 0, 0, 0, 0, 0, 0],

[ 1458, 7984, 7539, 436, 5, 2, 646, 38, 199,

4, 1518, 110, 8, 958, 4, 8, 2156, 78,

696, 346, 8, 1396, 4568, 764, 3]], dtype=int64)>

Embedding of vectorized text

Once we obtain the vectorized description, the embedding of these vectors is done so that the semantic meaning and contextual relationships within the text will not be lost. Embedding converts the vectors into a dense matrix, as you can see towards the end of code the lock.

from tensorflow.keras.layers import Embedding

'''define an embedding layer'''

embedding = Embedding(input_dim = max_vocab_length,

output_dim=128, # number of embeddings for each element in the vector

input_length = max_length)

'''convert vectors to embedds'''

text_embed = embedding(text_vector)

text_embed[:1] # check embedding of first vector

<tf.Tensor: shape=(1, 25, 128), dtype=float32, numpy=

array([[[ 0.03698475, -0.01323603, 0.03809222, ..., -0.04719925,

0.02724651, -0.03825681],

[-0.0113389 , -0.03626518, 0.02645263, ..., 0.0156747 ,

-0.00186366, 0.02241227],

[-0.00682613, 0.03866451, 0.03083095, ..., -0.04306443,

-0.03722394, 0.00240369],

...,

[ 0.04011244, 0.01590748, 0.00230861, ..., -0.04234166,

-0.04710745, -0.00629392],

[ 0.04011244, 0.01590748, 0.00230861, ..., -0.04234166,

-0.04710745, -0.00629392],

[ 0.04011244, 0.01590748, 0.00230861, ..., -0.04234166,

-0.04710745, -0.00629392]]], dtype=float32)>

As you can see above, each vector will be converted to a dense matrix or tensor (A tensor is nothing but numerical representation of data) of shape ( max_length, output_dim).

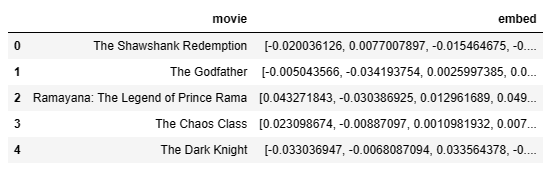

Create Dataframe of movies and corresponding embeddings.

The data frame with movie names and embeddings as columns is created for future reference and implementation of the Nearest Neighbors algorithm.

flattened_embed = [tf.reshape(embed, shape=(-1,)) for embed in text_embed] # flatten the embeddings

numeric_embed = [embed.numpy() for embed in flattened_embed] #convert to numpy

movie_df = pd.DataFrame({'movie' : df['Name of movie'].tolist() , 'embed' : numeric_embed }) # final dataframe

Nearest Neighbors Implementation

The Nearest Neighbors algorithm searches for a given number of neighbors with respect to the given movie name within the specified radius. The metric to find the neighbors can be choses accordingly (euclidean, minkowski, cosine etc.). For more details more details visit: Distance computations (scipy.spatial.distance) — SciPy v1.11.4 Manual.

The choice of distance metric will not make much difference in this case, as I have experienced. The parameter n_neighbors can be chosen as per the required recommendations. To get 5 recommendations, I am choosing it as 6 since the algorithm outputs the result with the query included. Let’s set up the recommendation engine as below.

from sklearn.neighbors import NearestNeighbors

n_neighbors = 6 #no of recommendations +1

knn = NearestNeighbors(n_neighbors=n_neighbors, metric = 'euclidean')

knn.fit(movie_df['embed'].tolist()) #fit the model

A function to return recommendations:

def recommendMovie(movieName):

query_movie_name = movieName

query_embedding = movie_df.loc[query_movie_name, 'embed']

distances, indices = knn.kneighbors([query_embedding])

nearest_movie_names = desc_df.index[indices[0]].tolist()

return nearest_movie_names

Get movie recommendations.

Choose a movie of your favor and pass it to the above function to get 5 recommendations. I am choosing the movie ‘The Chaos Class’ to get recommend top 5 similar movies.

print(f'Top 5 recommended movies for you: {recommendMovie("The Chaos Class")[1:]}')

Top 5 recommended movies for you:

['Margaret',

'The Young and the Damned',

'Resolution',

'Facing Windows',

'Creep 2']

Conclusion.

The recommendation is solely based on the description of the movie. Meta data like rating, cast, metascore are excluded since I was experimenting to get movies with similar description or storyline. Other features also can be included as per the purpose of the recommendation. To adjust the number of recommendations the parameter n_neighbors can be changed while fitting the model.

To read about recommendation system using metadata and cosine similarity, visit the page: https://ai.plainenglish.io/a-movie-recommendation-engine-by-leveraging-metadata-1722971ff507

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.