Beach Reading: a Short History of Pre-Trained Models

Author(s): Patrick Meyer. Originally published on Towards AI. Step into a world of wonder and amazement as we unravel the captivating tale of artificial intelligence’s incredible journey. From the birth of the first neural network in the 1950s to the rise of …

Modern NLP: A Detailed Overview. Part 3: BERT

Author(s): Abhijit Roy. Originally published on Towards AI. In my previous articles about transformers and GPTs, we did a systematic analysis of the timeline and development of NLP. We saw how the domain moved from sequence-to-sequence modeling to transformers and …

How To Train a Seq2Seq Summarization Model Using “BERT” as Both Encoder and Decoder!! (BERT2BERT)

Author(s): Ala Alam Falaki. Originally published on Towards AI. BERT is a well-known and powerful pre-trained “encoder” model. Let’s see how we can use it as a “decoder” to form an encoder-decoder architecture. The Transformer architecture …

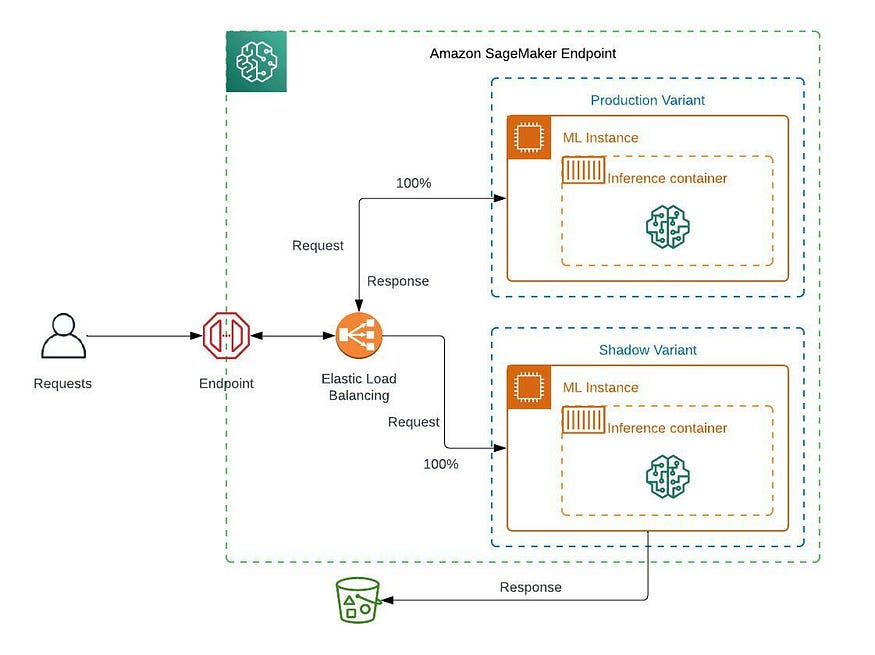

Shadow Deployment of ML Models With Amazon SageMaker

Author(s): Vinayak Shanawad. Originally published on Towards AI. Validate the performance of new ML models by comparing them to production models with Amazon SageMaker shadow testing. AWS announced support for the shadow model deployment strategy in Amazon SageMaker at AWS re:Invent 2022. …

Identify Trending Machine Learning Topics in Science With Topic Modeling

Author(s): Konstantin Rink. Originally published on Towards AI. Using BERTopic to identify the most important ML topics. Topic modeling has become a popular technique …

Ask, and You Shall Receive: Building a question-answering system with Bert

Author(s): Lan Chu. Originally published on Towards AI. Question answering (QA) is the task of extracting the answer from a given document. It needs the context, which is the …

Understanding BERT

Author(s): Shweta Baranwal. Originally published on Towards AI. BERT (Bidirectional Encoder Representations from Transformers) was introduced in a research paper published by Google AI Language. Unlike previous versions of NLP architectures, BERT is conceptually …