Modeling the Thousand Brains Theory of Intelligence

Last Updated on July 8, 2022 by Editorial Team

Author(s): Joy Lunkad

Originally published on Towards AI.

In this post –

I propose a novel convolutional architecture based on the Thousand Brains Theory of Intelligence.

I also present a soft proof of why convolutions can serve as a foundation for cortical mini-columns. This raises the question of whether the success of the convolutional neural network architecture serves as weak empirical proof for the Thousand Brains Theory.

Introduction

Instead of attempting to explain the Thousand Brains Theory here, I recommend the resources listed below, where people far brighter than I have explained it far better than I could.

The Thousand Brains Theory of Intelligence proposes that rather than learning one model of an object (or concept), the brain builds many models of each object. Each model is built using different inputs, whether from slightly different parts of the sensor (such as different fingers on your hand) or from different sensors altogether (eyes vs. skin). The models vote together to reach a consensus on what they are sensing, and the consensus vote is what we perceive. It’s as if your brain is actually thousands of brains working simultaneously.

- Machine Learning Street Talk

- Lex Fridman Podcast

- Talks at Google

- A Thousand Brains: A New Theory of Intelligence (book by Jeff Hawkins)

Inspiration

I was watching an episode of Machine Learning Street Talk in which Jeff Hawkins explained his thousand brains theory. I thought the theory was brilliant and cool, and when he said that there were no implementations of the cortical columns algorithm, I knew I had to give it a shot.

I came up with this architecture after wondering how I could create a simplified yet optimized implementation of the algorithm.

This is only a humble attempt to model the human brain by a 21-year-old. I don’t have much knowledge of neuroscience, so all criticism of the architecture is appreciated. I strongly believe this is how science advances, and for me there would be no greater honor than someone out there using my work as a stepping stone.

Architecture design

This architecture consists of many parts, which is why I’ll try to break it down piece by piece.

Input

This model is a convolutional model and its input will be an RGB image.

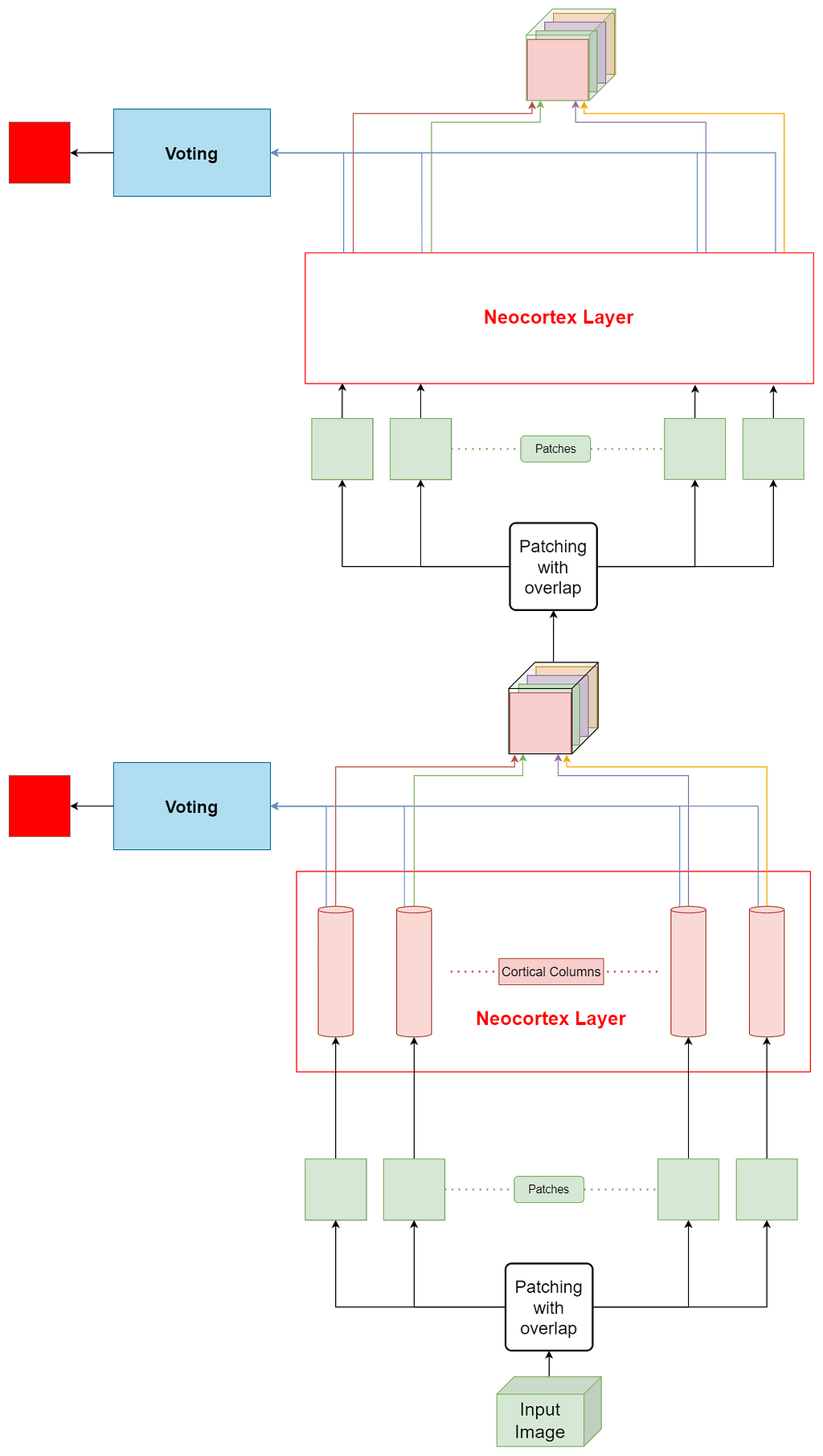

According to the thousand brains theory, each cortical column only receives input from a small part of our retina. Jeff compares this input to viewing the world through a straw. Apart from this, there is a significant overlap in the inputs received by each column.

This is why I propose using a function that divides the image into overlapping patches. This is distinct from the patching operation used by Vision Transformers, whose patches do not overlap. Let us also define a hyperparameter Overlap_ratio, which indicates how much of one patch overlaps with the next.
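As a rough illustration of the patching step, here is a minimal NumPy sketch. The function name `extract_overlapping_patches`, the `patch_size` parameter, and the interpretation of Overlap_ratio as the fraction of a patch's side shared with its neighbour are my assumptions for this example; edges that do not fit a full patch are simply dropped.

```python
import numpy as np

def extract_overlapping_patches(image, patch_size, overlap_ratio):
    """Split an (H, W, C) image into square patches that overlap their neighbours.

    overlap_ratio is assumed to be the fraction of a patch's side shared with
    the next patch, so the stride is patch_size * (1 - overlap_ratio).
    """
    if not 0.0 <= overlap_ratio < 1.0:
        raise ValueError("overlap_ratio must be in [0, 1)")
    stride = max(1, int(round(patch_size * (1.0 - overlap_ratio))))
    height, width, _ = image.shape
    patches = []
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            patches.append(image[top:top + patch_size, left:left + patch_size, :])
    return np.stack(patches)  # shape: (num_patches, patch_size, patch_size, C)

image = np.random.rand(32, 32, 3)  # stand-in for an RGB image
patches = extract_overlapping_patches(image, patch_size=8, overlap_ratio=0.5)
print(patches.shape)  # (49, 8, 8, 3)
```

With an overlap ratio of 0.5, the right half of one patch is the left half of the next one, which mimics neighbouring columns looking through overlapping "straws".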

Modeling cortical mini-columns

All cortical mini-columns inside a column receive similar input. Each mini-column computes its own output, and the mini-columns then vote to establish a consensus. This consensus is the cortical column’s output.

Soft proof for why we can use convolutions as a foundation for cortical mini-columns

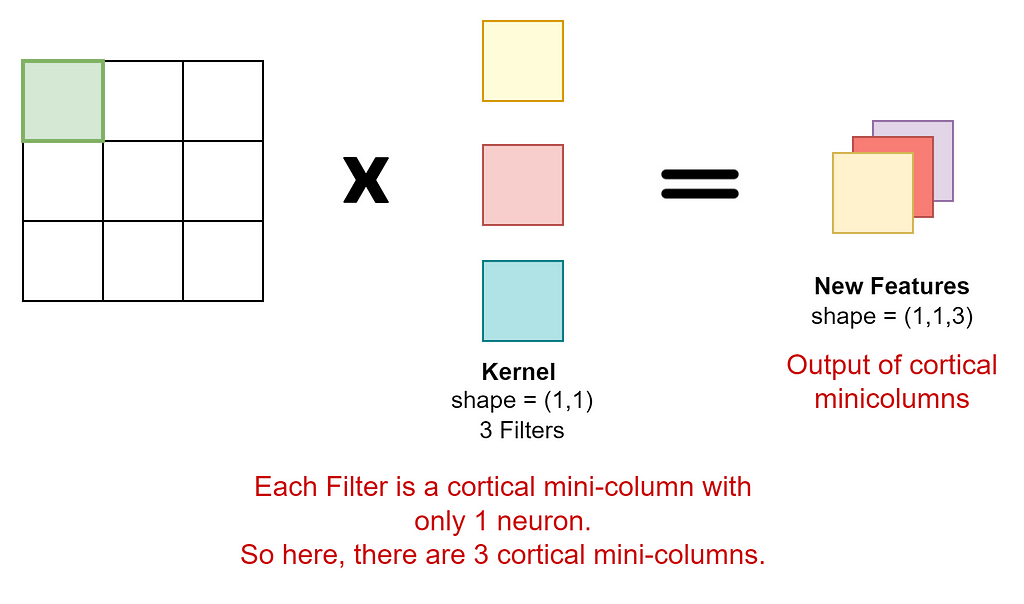

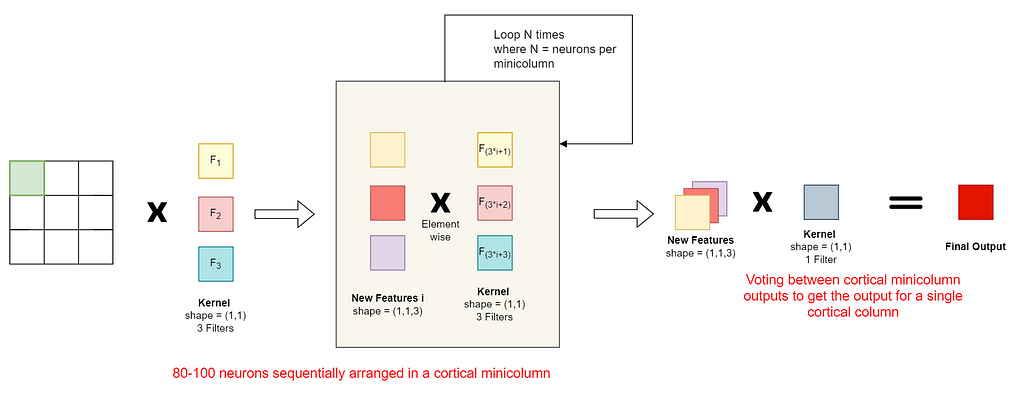

I would first like to show that each filter in a convolutional layer is roughly equivalent to a minicolumn with only one neuron. This might sound funny, but it is just the base we build upon.

I found this easiest to visualize with a (1,1) kernel, though it should extrapolate to larger kernel sizes. For simplicity, I will only show the operations on a (1,1) input, shown by the green square.

A kernel’s operations can be divided into two functions.

1. On a 2D input (without the channel dimension), only one of these operations can be seen, which is the W.X + B operation. This operation models the behavior of a neuron. The input remains exactly the same for all filters, and each filter outputs only a single feature. Thus, we can treat a kernel’s first operation as a cortical minicolumn with only 1 neuron.

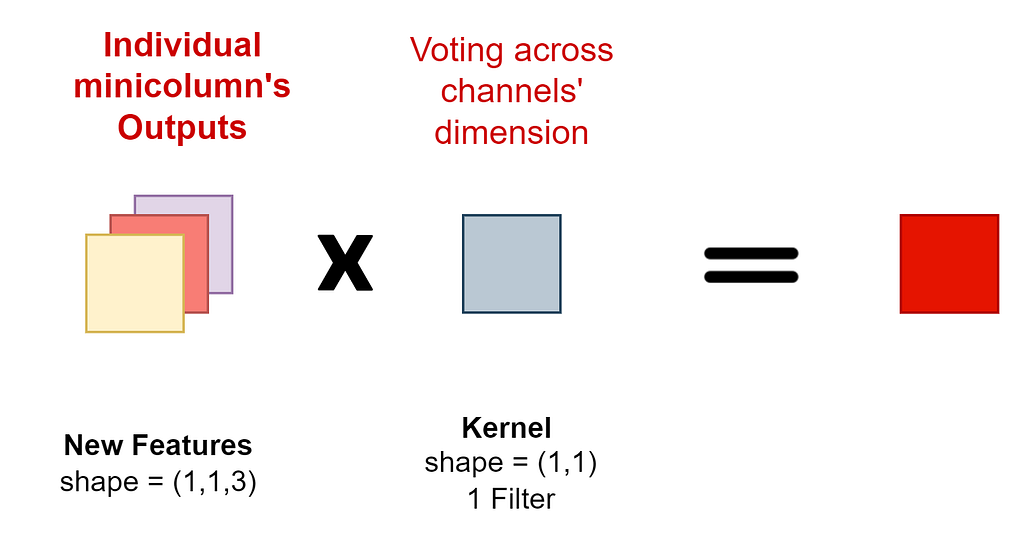

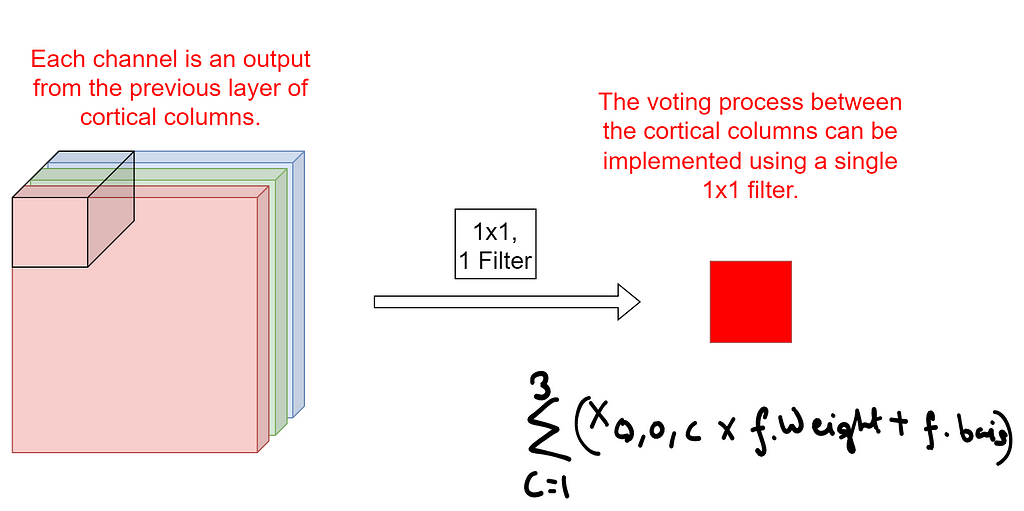

2. To visualize the second function of a kernel, let’s use the output of the previous step. The filter sums the values across the channels. This function serves as the voting mechanism because each channel represents the output of a cortical mini-column.

Similarly, the voting process between cortical columns can also be implemented using a single 1×1 filter.
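The two functions of a kernel described above can be checked numerically. The sketch below is only an illustration with made-up sizes and random values; note that in a standard convolutional layer the bias is added once per filter, so here it is added after the sum.

```python
import numpy as np

rng = np.random.default_rng(0)
in_channels, num_filters = 3, 4

x = rng.normal(size=in_channels)                  # one pixel: a (1,1) input with C channels
W = rng.normal(size=(num_filters, in_channels))   # one row of weights per 1x1 filter
b = rng.normal(size=num_filters)                  # one bias per filter

# Function 1: every weight multiplies its own input channel (the "neuron" step).
per_channel = W * x                               # shape: (num_filters, in_channels)

# Function 2: each filter sums its per-channel values (the "voting" step),
# then adds its bias.
voted = per_channel.sum(axis=1) + b               # shape: (num_filters,)

# Together, the two steps are what a 1x1 convolution computes at this pixel.
conv_1x1 = W @ x + b
print(np.allclose(voted, conv_1x1))  # True
```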

New proposed cortical convolutional layer

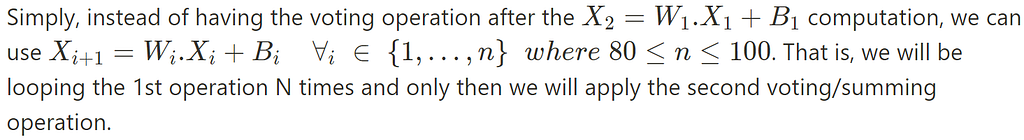

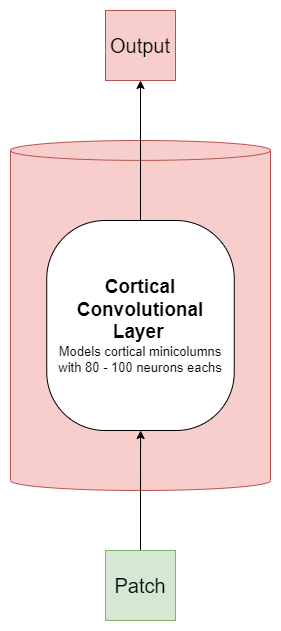

A glaring issue with this correspondence is that these minicolumns have only one neuron each, whereas an actual cortical mini-column has between 80–100 neurons. We can fix this by giving each minicolumn multiple neurons, and call the new layer the cortical convolutional layer.

This is a visualization of a cortical convolutional layer with 3 minicolumns and N neurons per minicolumn.

To model 50–100 minicolumns in a cortical column, all we need to do is to increase the number of filters to match that.

We can also easily match the 100,000 neurons in a cortical column proposed by the Thousand Brains Theory by increasing the number of filters and the number of neurons in each minicolumn.
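To make the idea of several neurons per minicolumn concrete, here is one possible reading of it as a NumPy sketch with (1,1) kernels. Everything specific in it is my assumption for illustration, not a confirmed design: the function name `cortical_conv_1x1`, the ReLU nonlinearity, and the choice of averaging the N neurons as the vote inside a minicolumn.

```python
import numpy as np

def cortical_conv_1x1(x, weights, biases):
    """Hypothetical cortical convolutional layer with 1x1 kernels.

    x:       (H, W, C) input patch
    weights: (M, N, C) for M minicolumns with N neurons each
    biases:  (M, N)
    Returns (H, W, M): one consensus value per minicolumn at every position.
    """
    # Every neuron of every minicolumn sees the same input (W.X + B).
    neurons = np.einsum("hwc,mnc->hwmn", x, weights) + biases
    # Assumed nonlinearity; without one, N linear neurons collapse into one.
    neurons = np.maximum(neurons, 0.0)
    # Assumed vote: average the N neurons inside each minicolumn.
    return neurons.mean(axis=-1)

rng = np.random.default_rng(0)
num_minicolumns, neurons_per_minicolumn, channels = 3, 100, 3
weights = rng.normal(size=(num_minicolumns, neurons_per_minicolumn, channels))
biases = rng.normal(size=(num_minicolumns, neurons_per_minicolumn))
patch = rng.random((8, 8, channels))

out = cortical_conv_1x1(patch, weights, biases)
print(out.shape)                                  # (8, 8, 3)
print(num_minicolumns * neurons_per_minicolumn)   # neurons modeled in this layer: 300
```

Scaling up is then a matter of changing `num_minicolumns` and `neurons_per_minicolumn`; their product is the number of neurons the layer models.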

New Neocortex Layer

Using the cortical convolutional layer, we can model a cortical column.

If we arrange these cortical columns in parallel, we can create the rough equivalent of a neocortex layer. This layer is visualized in the next section.

New Architecture

Putting it all together,

- We take an image and convert it into patches. These patches must overlap each other.

- Every patch is fed into a cortical column such that all patches are fed into the neocortex layer.

- Inside a column, there is a cortical convolutional layer that models 50–100 minicolumns. A minicolumn is modeled using filters.

- The output from the neocortex layer goes into the voting mechanism to generate the final output, shown by the red square.

- The output can in turn be converted into patches and sent into a second neocortex layer. This makes neocortex layers stackable, providing the network with depth.
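The steps above can be sketched end to end in NumPy. This is a simplified illustration under several assumptions of mine: the names `to_patches` and `neocortex_layer` are hypothetical, each minicolumn is a single (1,1) filter, a ReLU follows each column, and the vote across columns is a set of (1,1) filters applied to all column outputs stacked along the channel axis, so the layer's output has the spatial size of one patch.

```python
import numpy as np

def to_patches(image, patch_size, stride):
    """Overlapping (patch_size x patch_size) patches of an (H, W, C) array."""
    h, w, _ = image.shape
    return np.stack([
        image[i:i + patch_size, j:j + patch_size]
        for i in range(0, h - patch_size + 1, stride)
        for j in range(0, w - patch_size + 1, stride)
    ])

def neocortex_layer(image, column_weights, vote_weights, patch_size, stride):
    """Hypothetical neocortex layer: one cortical column per patch, then a vote.

    column_weights: (P, M, C)  1x1 filters, M minicolumns for each of P columns
    vote_weights:   (K, P * M) 1x1 filters that vote across all columns
    Returns (patch_size, patch_size, K).
    """
    patches = to_patches(image, patch_size, stride)            # (P, p, p, C)
    # Each column applies its own 1x1 filters to its own patch.
    columns = np.einsum("phwc,pmc->hwpm", patches, column_weights)
    columns = np.maximum(columns, 0.0)                         # assumed ReLU
    # Stack all column outputs along the channel axis and let 1x1 filters vote.
    stacked = columns.reshape(patch_size, patch_size, -1)      # (p, p, P * M)
    return np.einsum("hwq,kq->hwk", stacked, vote_weights)

rng = np.random.default_rng(0)
image = rng.random((16, 16, 3))
patch_size, stride, minicolumns, out_channels = 8, 4, 5, 6
num_columns = to_patches(image, patch_size, stride).shape[0]   # 9 patches

w1 = rng.normal(size=(num_columns, minicolumns, 3))
v1 = rng.normal(size=(out_channels, num_columns * minicolumns))
layer1 = neocortex_layer(image, w1, v1, patch_size, stride)

# Stacking: the output is patched again and fed to a second layer.
num_columns2 = to_patches(layer1, 4, 2).shape[0]               # 9 patches
w2 = rng.normal(size=(num_columns2, minicolumns, out_channels))
v2 = rng.normal(size=(out_channels, num_columns2 * minicolumns))
layer2 = neocortex_layer(layer1, w2, v2, patch_size=4, stride=2)
print(layer1.shape, layer2.shape)  # (8, 8, 6) (4, 4, 6)
```

In this reading, each layer shrinks the spatial size to that of a single patch, so the patch size has to decrease from layer to layer; other ways of arranging the voted outputs are equally plausible.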

Additional components

- The first 3 neocortex layers in our brain (V1, V2, V4) all receive input directly from the retina. To model that in this architecture, we can take the patches of the input image and combine them with the patches generated from the output of the previous neocortex layer. This could be a residual-style connection where we concatenate a patch of the input image with a patch of the previous neocortex layer’s output along the channel dimension.

- Since convolutions serve as our foundation, the many years of ConvNets research may be exploited to rapidly improve the architecture. For example, we can model residual connections inside the cortical convolutional layer or even the neocortex layer. Additionally, we can explore ideas like ConvBlocks, which combine a few convolutional layers with a max-pooling layer. Even normalization layers are quite simple to add in. There is a plethora of established research that we can use to improve the performance of this model.

- There is also the possibility of combining the neocortex layers recurrently and generating an output at every timestep.
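The first item in the list above, concatenating input-image patches with patches of the previous layer's output, could look like the following sketch. The shapes are hypothetical, and it assumes both sets of patches have already been brought to the same count and spatial size (for example by resizing the input image).

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical shapes: 9 patches of 4x4 pixels from each source.
input_patches = rng.random((9, 4, 4, 3))      # patches of the (resized) RGB input image
previous_patches = rng.random((9, 4, 4, 6))   # patches of the previous layer's output

# Concatenate patch i of the image with patch i of the previous output over channels.
combined = np.concatenate([input_patches, previous_patches], axis=-1)
print(combined.shape)  # (9, 4, 4, 9)
```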

Conclusions

Here, I proposed a new convolutional architecture that, in my opinion, models our brain according to the Thousand Brains Theory better than traditional convolutional networks do. It models some of the major aspects of the cortical column theory, but as it is just the first iteration, there are many components still to add. I would love to model grid cells and reference frames in the future. Also, the minicolumn here has only one type of neuron, the point neuron. Modeling the many different types of neurons that exist in our brains is also left for future work.

It was really cool how many explanations I could find on the internet about the Thousand Brains Theory and neuroscience. Thank you, everyone!

Questions

- Can CNNs serve as weak empirical proof for the thousand brains theory?

- Do you think the design for the cortical convolutions improves upon convolutional kernels according to neuroscience and the 1000 brains theory?

Modeling the Thousand Brains Theory of Intelligence was originally published in Towards AI on Medium.
