Grounding DINO: Achieving SOTA Zero-Shot Learning Object Detection

Last Updated on July 17, 2023 by Editorial Team

Author(s): Rohini Vaidya

Originally published on Towards AI.

Enabling Robust and Flexible Detection of Novel Objects without Extensive Retraining or Data Collection

The lack of flexibility is a major challenge with most object detection models as they are specifically trained to recognize only a limited set of predetermined classes. Expanding or modifying the list of identifiable objects requires collecting and labeling additional data, followed by retraining the model from scratch, which is inevitably a time-consuming and costly process.

The aim of zero-shot detectors is to disrupt the current norm by enabling the detection of novel objects without the need to retrain the model. Only by modifying the prompt, the model can effectively identify and detect any objects described to it.

Introduction

The objective of the Grounding DINO model is to create a robust framework for unspecified objects through the use of natural language inputs, referred to as open-set object detection.

The motivation behind grounding DINO stems from the impressive advancements achieved by Transformer based detectors. DINO forms the basis of a robust open-set detector that not only delivers superior object detection results but also enables us to include multi-level textual data through grounded pre-training.

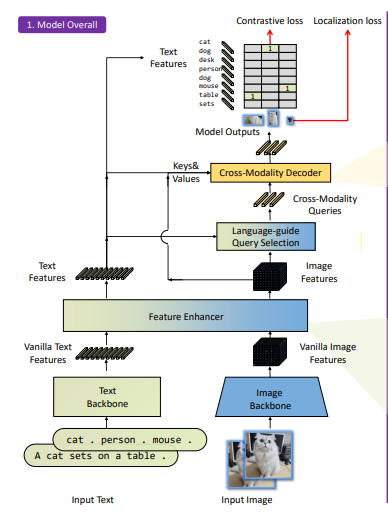

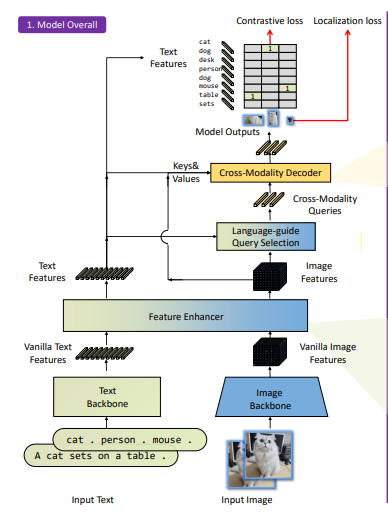

The architecture of Grounding DINO

The grounding DINO model is a single-decoder-dual-encoder architecture. It comprises an image backbone that extracts image features, a text backbone for text extraction, a feature enhancer for combining image and text features, a language-guided module for query selection, and a cross-modality decoder for refining boxes. Figure 1 displays the entire framework.

Initially, we extract plain image features and plain text features from an image backbone and a text backbone, respectively. We then combine these two features through a feature enhancer module to achieve cross-modality feature fusion. After the integration of text and image features, we select cross-modality queries from image features using a language-guided query selection module. These selected cross-modality queries are sent to a cross-modality decoder to obtain desired features from the two modalities and update them. Finally, the output queries of the last decoder layer are utilized to predict object boxes and extract corresponding phrases.

Performance of Grounding DINO

Grounding DINO attained a score of 52.5 AP in the COCO detection zero-shot transfer benchmark even without the aid of training data from COCO. Its performance further improves to 63.0 AP once it undergoes finetuning using COCO data.

By combining Grounding Dino with SAM, Stable Diffusion, and Tag2Text we are able to achieve highly accurate and visually appealing image segmentation results.

Let’s get started with the implementation.

Implementation

- Grounding DINO

The primary objective of this model is to detect everything within an image provided by a text prompt.

After cloning the git hub repository of Grounding DINO and then installing all the requirements, you can run the following command.

!wget https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth

!export CUDA_VISIBLE_DEVICES=0

!python grounding_dino_demo.py \

--config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \

--grounded_checkpoint groundingdino_swint_ogc.pth \

--input_image "Your input image here" \

--output_dir "outputs" \

--box_threshold 0.3 \

--text_threshold 0.25 \

--text_prompt "Your text prompt here" \

--device "cuda"

2. Grounding DINO + SAM(Detect and segment everything with text prompt)

The objective of this model is to create a very interesting image by combining Grounding DINO and Segment Anything which aims to detect and segment Anything with text inputs.

The SAM generates precise object masks from an input like points or boxes, trained on 11M images and 1.1B masks, with excellent zero-shot performance on diverse segmentation tasks.

!wget https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth

!wget https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth

!export CUDA_VISIBLE_DEVICES=0

!python grounded_sam_demo.py \

--config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \

--grounded_checkpoint groundingdino_swint_ogc.pth \

--sam_checkpoint sam_vit_h_4b8939.pth \

--input_image "Your input image here" \

--output_dir "outputs" \

--box_threshold 0.3 \

--text_threshold 0.25 \

--text_prompt "Your text prompt here" \

--device "cuda"

3. Grounding DINO + SAM + Stable diffusion (Detect, segment, and replace any object in an image with another using a Text prompt)

The aim of this model is to detect an object in an image using Grounding DINO, segment it using SAM, and replace an object in an image with another using stable diffusion with the text inputs.

Stable Diffusion is a latent text-to-image diffusion model.

!CUDA_VISIBLE_DEVICES=0

!python grounded_sam_inpainting_demo.py \

--config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \

--grounded_checkpoint groundingdino_swint_ogc.pth \

--sam_checkpoint sam_vit_h_4b8939.pth \

--input_image "Your input image here" \

--output_dir "outputs" \

--box_threshold 0.3 \

--text_threshold 0.25 \

--det_prompt "Text prompt for the object to be detected" \

--inpaint_prompt "Text prompt of new object" \

--device "cuda"

4. Grounded-SAM + Tag2Text (Automatically Labeling System with Superior Image Tagging)

This model will give captions for the input image along with detection and segmentation.

Tag2Text achieves superior image tag recognition ability of 3,429 commonly human-used categories. It is seamlessly linked to generating pseudo labels automatically.

!wget https://huggingface.co/spaces/xinyu1205/Tag2Text/resolve/main/tag2text_swin_14m.pth

!export CUDA_VISIBLE_DEVICES=0

!python automatic_label_tag2text_demo.py \

--config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py \

--tag2text_checkpoint ./Tag2Text/tag2text_swin_14m.pth \

--grounded_checkpoint groundingdino_swint_ogc.pth \

--sam_checkpoint sam_vit_h_4b8939.pth \

--input_image "Input image here" \

--output_dir "outputs" \

--box_threshold 0.25 \

--text_threshold 0.2 \

--iou_threshold 0.5 \

--device "cuda"

To summarize, the combination of SAM, Grounding Dino, and Stable Diffusion offers potent resources for precise and aesthetically pleasing segmentation and enhancement of images.

Advantages of Grounding DINO

- Zero-shot object detection

- Referring to Expression Comprehension (REC)

- Elimination of hand-designed components like NMS

Conclusion

Grounding DINO establishes a novel benchmark for object detection by providing a remarkably adaptable and flexible zero-shot detection framework. Its potential is to recognize objects beyond its training dataset and comprehend both visual and linguistic content.

Grounding DINO is undoubtedly a remarkable achievement that will open up avenues for more advanced implementations in object detection and related fields.

References

- Git-hub repository for Grounded-Segment-Anything model

- Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection

If you found this article insightful, follow me on LinkedIn and Medium.

Stay tuned !!!

Thank you !!!

You can also read my other blogs

The Art of Prompting: How Accurate Prompts Can Drive Positive Outcomes.

Takeaways from the newly available free course offered by DeepLearning.AI and OpenAI regarding Prompt engineering for…

pub.towardsai.net

Unraveling the Complexity of Distributions in Statistics

Navigating the Complex World of Distribution

pub.towardsai.net

A Comprehensive Guide for Handling Outliers

Part: 2

pub.towardsai.net

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.