Fighting Style Collapse: Reinforcement Learning with Bit-LoRA for LLM Style Personalization

Author(s): Roman S

Originally published on Towards AI.

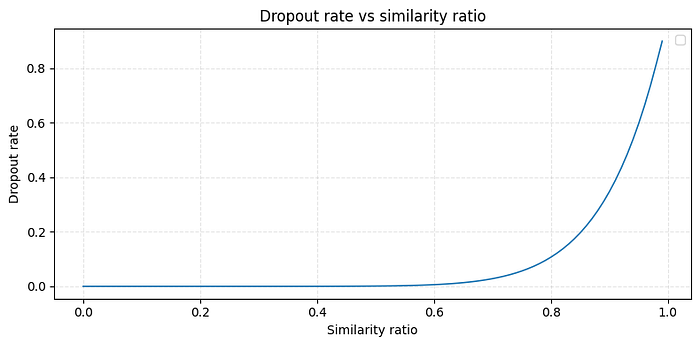

Reward dropout and Bit-LoRA regularization effects.

Abstract

Here I introduce and experiment with a novel reinforcement learning-based framework for LLM style personalization that specifically addresses the challenge of style collapse. Unlike existing few-shot prompting methods, this approach achieves both high style fidelity and high stylistic diversity while requiring only minimal data. Central to it is a novel style reward dropout mechanism, combined with continuous style similarity rewards, which mitigates the limitations of conventional few-shot prompting and prevents the model from converging to a single dominant style. Experiments demonstrate improved style consistency compared to direct GPT-4o-mini prompting, alongside competitive style similarity. Furthermore, I pioneer the exploration of Bit-LoRA for quantization-aware adaptation within this RL context. It achieves memory savings while preserving performance, and it also introduces a beneficial regularization effect that leads to better generalization, a finding that was revealed accidentally.

Introduction

Text style personalization is a fundamental task in natural language generation, which has historically been approached via two primary paradigms: model training-based methods (often relying on supervised fine-tuning with extensive datasets) and training-free approaches (typically employing few-shot examples, which are easy to implement but often yield limited effectiveness).

In this article, I introduce an original reinforcement learning (RL) framework that bridges the gap between these two paradigms. The approach achieves effectiveness comparable to training-based methods while requiring as little data as few-shot prompting. This represents an advancement over direct LLM prompting with few-shot examples, which often suffers from style collapse: a critical issue where the model’s outputs become less diverse and overly homogeneous.

The key innovations and contributions are:

- A novel RL framework for LLM style personalization: an end-to-end RL pipeline that enables precise and diverse style adaptation.

- Introduction of a unique style reward dropout mechanism: this mechanism specifically addresses and mitigates the problem of style collapse, ensuring generated texts maintain broad stylistic diversity by preventing the policy from fixating on easily mimicked or dominant styles.

- Integration of continuous style similarity rewards: this provides a dense training signal, accelerating convergence and overcoming the inherent sparsity limitations of discrete rewards in style personalization.

- Empirical demonstration of superior style consistency and diversity: the framework outperforms direct GPT-4o-mini prompting, particularly in maintaining stylistic variety.

- Pioneering investigation into Bit-LoRA for quantization-aware adaptation in RL: here I show that Bit-LoRA not only enables substantial memory savings but also introduces a beneficial regularization effect, preventing reward hacking and encouraging more generalizable policies.

Related Work

Text style, broadly defined as the consistent linguistic characteristics that differentiate texts from one another beyond their content, is a multifaceted concept. I argue that the style of a text is a combination of the stylistic properties of its constituent parts, particularly sentences. The style of each part can be robustly defined by elements such as vocabulary choice, punctuation patterns, and grammatical structures. Building on this understanding, I use the StyleDistance approach to quantify text styles in the current experiments. Alternative methods exist, such as using hidden states from text semantics classifiers as embeddings. I focus on StyleDistance because of its demonstrated efficacy and its design for content-independent style representation, which is directly relevant to the objectives here.

Current approaches to style cloning predominantly rely on Large Language Model (LLM) prompting, often augmented with techniques like attention masking. While attention masking can enhance the results of few-shot LLM prompting, it typically requires extensive additional supervised training. Consequently, I use direct LLM prompting for style cloning as the baseline in this experiment. Furthermore, I provide empirical evidence that such prompting with few-shot style examples leads to “style collapse”, which is an instance of the broader LLM collapse problem where the model’s output progressively loses diversity and becomes increasingly homogeneous.

In the realm of reinforcement learning (RL), a variety of algorithms have emerged, and methods based on learning from verifiable rewards have proven particularly effective. Notably, modifications that exclude the Kullback-Leibler (KL) divergence component, as in DAPO, have shown significant promise. These methods trace their origins to algorithms like GRPO. The authors of DAPO motivate removing the KL component by noting that long chain-of-thought training is expected to move the policy far from the initial model, so restricting that divergence is unnecessary. I hypothesize that style modification similarly benefits from removing the KL component, because doing so relaxes the restriction on deviating from the initial policy. However, unlike many RL applications, the framework I use here relies on a continuous reward value based on the similarity between generated and reference text styles. This continuous reward mitigates the sparsity of the training signal and accelerates convergence. It also introduces risks of reward hacking and overfitting. For instance, in preliminary experiments I observed the policy exploiting the reward function by inserting excessive and incorrect punctuation.

Style Encoder Characterization and Granularity Analysis

To effectively quantify and manipulate text style within the reinforcement learning framework, it is crucial to first characterize the chosen style encoder and determine the optimal granularity for style assessment. This preliminary analysis aims to answer two key questions:

- Can the selected style encoder effectively distinguish diverse text styles across the chosen domains?

- Should style embeddings be computed at the sentence-level or the whole-text level for reward modeling?

For style encoding, I use the StyleDistance/mstyledistance model. It is a multilingual transformer encoder designed to produce text embeddings where high cosine similarity indicates similar styles and low similarity indicates dissimilar styles. However, the model’s description indicates it was primarily trained on short sentences. This raises the risk of a train-inference distribution shift when the model is applied to longer texts or documents. To mitigate this, I explore the efficacy of working with style embeddings at the sentence level.

The analysis was conducted using a diverse collection of English-language datasets, with additional background processing on other languages for broader insights (though the primary focus remains English in this work). The datasets selected represent various communication domains:

- Enron emails data;

- Personalized emails;

- Marketing email samples;

- Twitter posts;

- Reddit posts;

- Text messages;

- Persona-based chat messages.

From each dataset, 100 random samples were drawn. Placeholder filling and synthetic translation (from English to another language using GPT-4o-mini with the prompt: “You are a helpful assistant. Translate the user message from English to {X}. Maintain the same style in the translated text as in the original input. Start your answer with the word ‘Translated: ‘“) were performed where applicable to ensure data readiness and explore cross-lingual style consistency.

This analysis comprised several experiments:

- Assessing the internal stylistic coherence by computing cosine similarity of sentence-level embeddings within a single document.

- Comparing sentence-level embeddings to whole-document-level embeddings to understand stylistic aggregation.

- Evaluating the overall stylistic diversity by computing cosine similarity across all sentences from all documents, complemented by manual qualitative checks.

The quantitative results of this analysis are summarized in Table 1. Note that calculations for “Inner document sentences average cosine similarity” and “All sentences average cosine similarity” exclude self-similarity (which is 1).
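The three measurements above can be sketched as follows. This is a minimal illustration that assumes an `encode` callable mapping a string to a style embedding. With StyleDistance, this would presumably be the `encode` method of a sentence-transformers model loaded from `StyleDistance/mstyledistance`, which is not verified here. Two further assumptions apply. The regex sentence splitter is a hypothetical simplification. Averaging the inner-document score per document before averaging across documents is a guess at how Table 1 was aggregated.

```python
import math
import re
from itertools import combinations
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]
Encoder = Callable[[str], Vector]  # assumption: e.g. a StyleDistance encode function


def split_sentences(text: str) -> List[str]:
    """Hypothetical naive splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def cos_sim(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_pairwise_sim(embs: List[Vector]) -> float:
    """Mean similarity over distinct pairs, so self-similarity (1.0) is excluded."""
    pairs = list(combinations(embs, 2))
    if not pairs:
        return float("nan")
    return sum(cos_sim(a, b) for a, b in pairs) / len(pairs)


def granularity_stats(docs: List[str], encode: Encoder) -> Dict[str, float]:
    inner, sent_vs_doc, all_embs = [], [], []
    for doc in docs:
        embs = [encode(s) for s in split_sentences(doc)]
        doc_emb = encode(doc)  # whole-document embedding
        all_embs.extend(embs)
        sent_vs_doc.extend(cos_sim(e, doc_emb) for e in embs)
        if len(embs) > 1:  # single-sentence documents have no distinct pairs
            inner.append(mean_pairwise_sim(embs))
    return {
        # per-document mean, then averaged over documents (assumed aggregation)
        "inner_document_avg": sum(inner) / len(inner) if inner else float("nan"),
        "sentence_vs_document_avg": sum(sent_vs_doc) / len(sent_vs_doc),
        # pooled over all sentences of all documents
        "all_sentences_avg": mean_pairwise_sim(all_embs),
    }
```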

The statistics in Table 1 reveal that sentences within a single text often exhibit distinct stylistic properties when evaluated by the StyleDistance encoder. For instance, the “Inner document sentences average cosine similarity” (0.783 for English) suggests a degree of stylistic variance even within a cohesive document. This finding supports the decision to calculate rewards at the sentence-level during reward modeling, allowing for finer-grained stylistic control and capturing the multi-style nature of real-world texts. Furthermore, the strong similarity in style distributions between English and synthetically translated documents (e.g., 0.820 for inner document similarity in translated texts) suggests the style encoder’s robustness across languages, which is a promising direction for future multilingual extensions.

An example from the Enron dataset illustrates this observed stylistic variability:

“We have received the executed EEI Master Power Purchase and Sale Agreement dated 2/1/2001 from the referenced CP. Copies will be distributed to Legal and Credit.”

Here, the first sentence uses active voice, while the second sentence notably employs passive voice, a distinction detectable by the style encoder, contributing to the observed stylistic differences within the document.

Methodology

My approach to LLM style personalization is a two-stage process: an initial supervised warm-up phase followed by a reinforcement learning (RL) alignment phase. The warm-up phase first gives the language model basic text rewriting capabilities. This reduces the computational overhead of lengthy task-instruction prompts, which matters especially for small language models. The subsequent RL stage then aligns the model’s output with the desired stylistic attributes.

Warm-up Stage: Supervised Fine-Tuning for Rewriting

The warm-up stage involves supervised fine-tuning of a 3B Llama-Instruct model. This phase consists of 1000 training steps, utilizing a learning rate of 2e-5 and a batch size of 1. The training dataset is composed of pairs of short texts, where each pair comprises an original input text and its rewritten version generated by a larger language model (specifically, GPT-4o-mini). This supervised learning objective effectively initializes the language model to perform the core task of text rewriting.

Reinforcement Learning for Style Alignment

Following the warm-up, the model undergoes reinforcement learning to align its text generation with the target style. I employ a variant of the GRPO algorithm, Dr.GRPO, configured with 4 PPO epochs and, crucially, without a Kullback-Leibler (KL) divergence component. The KL component penalizes deviation from the reference policy and can therefore constrain exploration. That is counterproductive when the objective, as in style transfer, requires significant departures from the base model’s default generation tendencies. This mirrors the rationale of prior work such as DAPO. As an online, on-policy RL algorithm, Dr.GRPO requires a reward function that evaluates each generated text during training.
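For reference, here is a minimal sketch of the group-relative advantage that distinguishes Dr.GRPO from the original GRPO. As described by the Dr. GRPO authors, it drops the division by the per-group reward standard deviation. It also drops the per-response length normalization in the loss, which this sketch does not show. The clipped surrogate contains no KL penalty term, matching the configuration above. The `clip_eps=0.2` default is an assumed value, not one reported in the article.

```python
import math
from statistics import mean, pstdev
from typing import List


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Original GRPO: centre by the group mean and scale by the group std.

    Population std is used here; implementations differ on this detail.
    """
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def dr_grpo_advantages(rewards: List[float]) -> List[float]:
    """Dr.GRPO: centre by the group mean only (no std scaling)."""
    mu = mean(rewards)
    return [r - mu for r in rewards]


def clipped_surrogate(logp_new: float, logp_old: float, advantage: float,
                      clip_eps: float = 0.2) -> float:
    """Per-token PPO clipped objective (to be maximized), with no KL penalty term."""
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)
```

With 4 PPO epochs, `logp_old` comes from the policy that generated the group, so the ratio drifts away from 1 after the first epoch and clipping starts to matter.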

Reward Modeling

The total reward R(Tg, Sr) for a generated text Tg (composed of individual sentences sg) with respect to a set of reference style sentences Sr is a composite function. It is designed to optimize style similarity, with auxiliary components that ensure coherence and prevent reward hacking. The primary reward component, R_style, is directly aligned with the objective of style personalization:

R_style(Tg, Sr) = sum over sg in sentences(Tg) of max over sr in Sr of cos_sim(encode(sg), encode(sr))

where sentences(Tg) denotes the set of sentences extracted from the generated text Tg (as justified by the granularity analysis above), encode(·) is the StyleDistance encoder, and cos_sim(·, ·) computes the cosine similarity between two style embeddings. This formulation encourages each generated sentence to stylistically match at least one of the reference sentences, capturing the multi-style nature of texts.
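A minimal sketch of R_style. It assumes the sentences are already split and that an `encode` callable returns a style embedding for each string. Both are hypothetical stand-ins for the author's pipeline.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def cos_sim(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def style_reward(gen_sentences: List[str], ref_sentences: List[str],
                 encode: Callable[[str], Vector]) -> float:
    """R_style: best-matching reference similarity per generated sentence, summed."""
    ref_embs = [encode(s) for s in ref_sentences]  # encode references once
    total = 0.0
    for s in gen_sentences:
        g = encode(s)
        total += max(cos_sim(g, r) for r in ref_embs)
    return total
```

As written, R_style is a sum rather than a mean, so it grows with the number of generated sentences. This is one reason a length control term is useful.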

In addition to R_style, auxiliary reward components are incorporated to stabilize training and prevent undesirable generative behaviors (reward hacking):

- Length of the Generated Text (R_length): Uncontrolled text length can be a form of reward hacking, especially when the KL component is removed. Following DAPO, I apply gradually increasing penalties as a generation approaches a hard length cutoff. This signals the limit to the model and penalizes generations that exceed the predefined threshold.

- Named Entities Overlap (R_ne_overlap): This component helps maintain factual correctness and semantic fidelity during rewriting. I found controlling named-entity overlap sufficient for this goal, ensuring the core meaning is preserved.

- Number of Punctuation Characters (R_punctuation): Preliminary experiments revealed that the policy tended to exploit the style similarity reward by inserting excessive punctuation (e.g., commas), producing text that is out of domain for the style encoder. As with the length component, gradually increasing penalties up to a hard cutoff are applied to abnormal punctuation counts.
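Both the length and punctuation components use a penalty that grows gradually before a hard cutoff. A minimal sketch follows, assuming the shape of DAPO's soft overlong punishment: zero up to `limit - buffer`, a linear ramp to -1 at `limit`, and -1 beyond it. The thresholds and the ASCII-only punctuation count are hypothetical choices, not values from the article.

```python
import string


def soft_cutoff_penalty(value: float, limit: float, buffer: float) -> float:
    """DAPO-style soft overlong punishment, generalized to any count.

    Returns 0 while value <= limit - buffer, ramps linearly to -1 at `limit`,
    and stays at -1 beyond it.
    """
    start = limit - buffer
    if value <= start:
        return 0.0
    if value <= limit:
        return (start - value) / buffer
    return -1.0


def length_reward(num_tokens: int, max_len: int = 256, buffer: int = 64) -> float:
    # max_len and buffer are hypothetical values, not taken from the article.
    return soft_cutoff_penalty(num_tokens, max_len, buffer)


def punctuation_reward(text: str, max_punct: int, buffer: int) -> float:
    # Counts ASCII punctuation only; thresholds are caller-supplied (hypothetical),
    # e.g. they could be derived from the input text's own punctuation count.
    count = sum(ch in string.punctuation for ch in text)
    return soft_cutoff_penalty(count, max_punct, buffer)
```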

The final total reward R_total is then computed as the sum of these components:

R_total(Tg, Sr) = R_style(Tg, Sr) + R_length(Tg, Sr) + R_ne_overlap(Tg, Sr) + R_punctuation(Tg, Sr)

Other auxiliary rewards, such as semantic similarity or language preservation as in Magistral, could be integrated, but the current focus is solely on English-language style personalization. As a side note, in some preliminary multilingual experiments I found the language preservation reward component (following Magistral) to be highly important.

Style Reward Dropout

Auxiliary reward components mitigate general reward hacking. However, the task of style personalization itself presents a specific vulnerability: the model may generate sentences that consistently adhere to a single, dominant, or easily mimicked style, leading to style collapse. To counteract this behavior, I introduce a novel style reward dropout mechanism. During training, this mechanism selectively zeroes out style similarity rewards for certain reference styles, encouraging the model to explore and reproduce a broader range of the styles present in the reference.

The mechanism operates as follows:

- Sentence Segmentation and Encoding: The reference text is segmented into its constituent sentences, and each sentence is encoded into its style embedding.

- Inter-Reference Similarity Matrix: A pairwise cosine similarity matrix is computed over all embedded reference sentences.

- Style Dominance Indicator: For each reference sentence, a style dominance score is calculated as the sum of its similarities to all other reference sentences. This score indicates how “central” or “dominant” a particular reference style is within the provided examples.

- Individual Dropout Rate Calculation: Individual dropout rates for each reference style are calculated based on their relative dominance. Let D_i be the dominance score of reference sentence i and D_max the maximum dominance score. The base ratio is r_i = D_i / D_max. This ratio is raised to the power of a hyperparameter gamma and clipped to a predefined range [epsilon_min, epsilon_max] to obtain the final dropout rates:

p_i = clip(r_i^gamma, epsilon_min, epsilon_max)

where gamma is a sharpening exponent, epsilon_min is the minimum dropout rate, and epsilon_max is the maximum dropout rate. In this study, I used a static dropout rate per style, with gamma = 10, epsilon_min = 0.0, and epsilon_max = 0.9.

These hyperparameters were selected based on the observed distribution of dominance ratios r_i, which mostly exceeded 0.6, resulting in calculated dropout rates ranging from 5% to 90%.

- Dropout Application: During reward calculation, each generated sentence is checked against its closest matching reference style. If that style is selected for dropout (based on its calculated p_i and a random draw), the sentence's contribution to the style similarity reward R_style is zeroed out.

Additionally, I implemented a safeguard: if, in a given training step, all style similarity contributions for a generated text were dropped due to this mechanism, the dropout is entirely canceled for that specific step, ensuring the model always receives some style signal.
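The dropout steps and the safeguard can be sketched as follows, assuming reference and generated sentences are already encoded. The defaults `gamma=10`, `eps_min=0.0`, `eps_max=0.9` are the values reported above. The helper names, the single random draw per reference style per text, and the handling of non-positive dominance scores are my assumptions.

```python
import math
import random
from typing import List, Sequence

Vector = Sequence[float]


def cos_sim(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def dominance_scores(ref_embs: List[Vector]) -> List[float]:
    """D_i: sum of similarities of reference sentence i to all *other* reference sentences."""
    return [
        sum(cos_sim(a, b) for j, b in enumerate(ref_embs) if j != i)
        for i, a in enumerate(ref_embs)
    ]


def dropout_rates(scores: List[float], gamma: float = 10.0,
                  eps_min: float = 0.0, eps_max: float = 0.9) -> List[float]:
    """p_i = clip((D_i / D_max) ** gamma, eps_min, eps_max)."""
    d_max = max(scores)
    if d_max <= 0:  # degenerate case not covered in the article: no dropout (assumption)
        return [eps_min] * len(scores)
    # Negative ratios (possible with negative cosines) are floored at 0 (assumption).
    return [min(max(max(d / d_max, 0.0) ** gamma, eps_min), eps_max) for d in scores]


def style_reward_with_dropout(gen_embs: List[Vector], ref_embs: List[Vector],
                              p: List[float], rng: random.Random) -> float:
    """R_style where sentences matched to a dropped reference style contribute 0."""
    # Closest reference style (index, similarity) for every generated sentence.
    best = []
    for g in gen_embs:
        sims = [cos_sim(g, r) for r in ref_embs]
        j = max(range(len(sims)), key=sims.__getitem__)
        best.append((j, sims[j]))
    # One Bernoulli(p_i) draw per reference style for this text (assumption).
    dropped = {i for i, p_i in enumerate(p) if rng.random() < p_i}
    kept = [s for j, s in best if j not in dropped]
    # Safeguard: if everything was dropped, cancel dropout so some style signal remains.
    if best and not kept:
        return sum(s for _, s in best)
    return sum(kept)
```

With these defaults, the most dominant style (r_i = 1) is clipped to 0.9, while a style with r_i = 0.9 gets 0.9^10 ≈ 0.35.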

While more sophisticated dynamic dropout rate schedules or complex dominance detection methods could be explored, this study focuses on a simple yet effective static design.

Training Data for Reinforcement Learning

The reinforcement learning stage of the framework leverages a diverse collection of English-language digital communication datasets. To ensure sufficient training data and capture a wide range of stylistic variations for the style personalization task, these raw datasets were augmented using GPT-4o-mini (mainly to exclude template-specific elements and placeholders). The selection of these datasets aims to cover a broad spectrum of common digital communication domains, reflecting the varied contexts in which style personalization is applied.

The specific English-language datasets utilized for the RL stage include:

- Enron emails data;

- Personalized emails;

- Text messages;

- Persona-based chat messages.

These datasets collectively provide a rich source of conversational, formal, and informal text styles, which are crucial for training a robust style personalization model.

For evaluation, a subset that does not overlap with the training data was sampled from these same datasets. This keeps the test set unseen during training and provides a robust measure of generalization.

Experiments

This section details the experimental setup, evaluation metrics, and results of the proposed reinforcement learning framework for LLM style personalization, including a comparative analysis with direct LLM prompting and an investigation into Bit-LoRA adaptation.

Experimental Setup

For all experiments, LoRA (Low-Rank Adaptation) adapters were applied to all linear layers of the base model, following QLoRA, but the base model itself remained unquantized. Unless otherwise specified, each model was trained for 2500 steps with the Dr.GRPO reinforcement learning algorithm. The full text used as the style reference is provided in Appendix A.

During each experiment, the model was tasked with rewriting an input text to match the stylistic properties of the reference text. This setup assumes that a single written text can combine several different styles, with each sentence mapped to an individual style, and that the model should learn to reproduce this stylistic diversity. For evaluation, an unseen subset of samples was drawn from the same data sources used during training, ensuring no overlap between training and evaluation data.

Evaluation Metrics

I evaluate model performance using a comprehensive set of metrics designed to capture both style fidelity and diversity:

- Average Style Similarity to Reference: This metric quantifies how closely the style of the generated text matches the provided style reference. It is calculated as the average of the main style reward component, R_style, as defined before, across all evaluation samples. The baseline average style similarity of input texts (before rewriting) to the reference is also provided for context.

- Not Rewritten Percentage: This indicates the proportion of input samples for which the trained model did not generate a significantly different output, implying the input already matched the target style. While not an indicator of correctness, it provides insight into the model’s alignment and potential overfitting.

- Style Vector Consistency: This crucial metric assesses the diversity and consistency of the styles adopted by the generated output relative to the input and the reference. It is defined as the cosine similarity between the normalized distributions of closest reference styles for input and generated texts. Specifically:

(1) Each reference sentence is indexed, and its style encoded using StyleDistance.

(2) For every sentence in both the input and generated texts, I identify the index of the most similar reference style sentence.

(3) Vectors are constructed for input and generated texts, counting the occurrences of each reference style index.

(4) The cosine similarity between these two vectors is computed.

A high style consistency value (closer to 1) indicates that the model maintains the diversity of styles present in the input rather than collapsing to a few preferred styles. This metric is calculated only for samples that were rewritten by the model; samples that were not rewritten are ignored.

- GPT Judge Preference: To provide an independent assessment of qualitative output quality, I employ GPT-4.1-mini as an LLM judge to compare the outputs of my models against those of GPT-4o-mini prompting. To mitigate known biases in LLM-as-a-judge setups, I randomly shuffled the answer order and used a different model (GPT-4.1-mini) from the one used for data generation (GPT-4o-mini). The metric is the percentage of comparisons in which the judge preferred my model's output.
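A minimal sketch of the Style Vector Consistency steps (2) to (4), assuming all sentences are already encoded. Building raw counts instead of normalized distributions gives the same result, because cosine similarity is scale-invariant. Breaking ties toward the lower index is an arbitrary choice.

```python
import math
from collections import Counter
from typing import List, Sequence

Vector = Sequence[float]


def cos_sim(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_ref(emb: Vector, ref_embs: List[Vector]) -> int:
    """Index of the most similar reference style sentence (step 2)."""
    return max(range(len(ref_embs)), key=lambda j: cos_sim(emb, ref_embs[j]))


def style_vector_consistency(input_embs: List[Vector], gen_embs: List[Vector],
                             ref_embs: List[Vector]) -> float:
    """Cosine similarity of reference-style count vectors for input vs. output (steps 3-4)."""
    def counts(embs: List[Vector]) -> List[int]:
        c = Counter(nearest_ref(e, ref_embs) for e in embs)
        return [c[j] for j in range(len(ref_embs))]
    return cos_sim(counts(input_embs), counts(gen_embs))
```

In the collapsed case below, both output sentences map to the first reference style. The count vector becomes [2, 0] instead of the input's [1, 1], and consistency drops to about 0.71.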

Main Results

Table 2 presents the evaluation results comparing the reinforcement learning approaches with direct GPT-4o-mini prompting.

As observed in Table 2, direct GPT-4o-mini prompting yields the highest average style similarity to the reference (0.793). However, this high similarity comes at the cost of significantly lower style vector consistency (0.828), indicating that the prompted model tends to converge on a limited set of preferred styles, thereby decreasing stylistic diversity. This observation supports the “style collapse” hypothesis. In contrast, both the direct reinforcement learning and the variant incorporating style reward dropout achieve remarkably high style vector consistency (0.988 and 0.990, respectively), demonstrating their effectiveness in preserving and reproducing the diverse stylistic patterns from the reference. While their average style similarity is slightly lower than GPT-4o-mini prompting, the enhanced diversity represents a critical advantage for true style personalization.

The GPT Judge preference scores, around 37–38% for the 3B models, are reasonable given the significant size difference compared to GPT-4o-mini. This suggests the models produce outputs of comparable quality in many cases, despite the judge’s potential bias towards outputs from models within the same family. The “Not Rewritten” percentage (42–43%) suggests that the RL-tuned policies sometimes determine that the input text already sufficiently matches the desired style, or it might indicate a degree of reward overfitting. Should consistent rewriting be a strict requirement, the reward function could be adjusted.

Bit-LoRA Experiment

As a separate investigation into memory efficiency and training dynamics, I explored the applicability of Bit-LoRA within the reinforcement learning framework. Bit-LoRA enables quantization-aware training of LoRA adapters, reducing their precision to 1.58 bits, inspired by recent advances in 1.58-bit neural networks. This offers substantial memory savings for adapter deployment. I conducted experiments with Bit-LoRA using the same setup as before (also applying style reward dropout), including a separate run for 7500 steps (compared to the standard 2500) to investigate whether quantized policies, due to potentially reduced exploration capabilities, required extended training to achieve comparable reinforcement learning performance.
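The article does not specify the exact quantization scheme. The sketch below assumes BitNet b1.58-style absmean ternary quantization, applied separately to the LoRA A and B matrices. Ternary quantization uses three levels (-1, 0, +1), which is where log2(3) ≈ 1.58 bits comes from. The sketch covers only the forward pass. During training, the full-precision latent weights would typically be updated through a straight-through estimator, which is omitted here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def ternary_quantize(w: Matrix, eps: float = 1e-5) -> Tuple[List[List[int]], float]:
    """Absmean quantization (assumed BitNet b1.58 style): values in {-1, 0, +1} plus a scale."""
    n = sum(len(row) for row in w)
    gamma = sum(abs(x) for row in w for x in row) / n  # mean absolute value
    q = [[max(-1, min(1, round(x / (gamma + eps)))) for x in row] for row in w]
    return q, gamma


def dequantize(q: List[List[int]], gamma: float) -> Matrix:
    return [[v * gamma for v in row] for row in q]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def bitlora_delta(a: Matrix, b: Matrix, scale: float) -> Matrix:
    """LoRA update scale * B @ A from ternary-quantized A (r x d_in) and B (d_out x r)."""
    qa, ga = ternary_quantize(a)
    qb, gb = ternary_quantize(b)
    return [[scale * v for v in row] for row in matmul(dequantize(qb, gb), dequantize(qa, ga))]
```

Only the ternary codes and one scale per matrix need to be stored for deployment, which is the source of the adapter memory savings.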

The results of the Bit-LoRA training experiments are presented in Table 3.

The Bit-LoRA approach demonstrates performance comparable to regular LoRA, indicating its feasibility within this RL framework without necessarily requiring significantly extended training. Interestingly, Bit-LoRA models exhibit a noticeably lower percentage of “not rewritten” samples (22–28%) than regular LoRA models (42–43%). This suggests a stronger adherence to the initial rewriting objective acquired during the supervised fine-tuning stage. It acts as a form of regularization that prevents over-reliance on existing input styles.

Further analysis of training metrics revealed a consistent regularization effect for Bit-LoRA. I tracked two key variances related to style similarities:

- The average standard deviation of all style similarities for each selected sentence in a batch, indicating how precisely (or specifically) each generated sentence stylistically aligns with particular reference styles within the set.

- The average standard deviation of similarities for a selected style across all sentences in a batch. A decrease in this metric indicates the formation of dominant styles.
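Assume the batch's similarities are arranged in a matrix `sim[i][j]`, with generated sentence i against reference style j. The two metrics then reduce to row-wise and column-wise standard deviations, averaged. The matrix layout and the use of population rather than sample standard deviation are assumptions.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def inner_outer_std(sim: List[List[float]]) -> Tuple[float, float]:
    """sim[i][j]: style similarity of generated sentence i to reference style j.

    inner: std across reference styles for each sentence, averaged over sentences.
    outer: std across sentences for each reference style, averaged over styles;
           a downward trend suggests that a few dominant styles are forming.
    """
    inner = mean(pstdev(row) for row in sim)
    outer = mean(pstdev(col) for col in zip(*sim))
    return inner, outer
```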

For regular LoRA models, the first metric slowly increased during training. The second metric decreased, signaling dominant style formation, and it fell faster when no style reward dropout was applied. In contrast, both metrics remained remarkably stable for Bit-LoRA (plots in Appendix B). These findings lead me to conclude that quantization-aware training, particularly Bit-LoRA, has significant regularization effects in an RL setup. This aligns with recent research suggesting that quantization noise can enhance generalization. I argue that in the RL context this quantization noise acts similarly to entropy regularization, discouraging reward hacking and promoting the exploration of broader, more generalizable trajectories during policy optimization.

Conclusion

In this article, I presented a novel reinforcement learning framework for LLM style personalization. The approach outperforms direct few-shot prompting in style diversity and consistency, while requiring only minimal data for effective alignment. It leverages StyleDistance embeddings and evaluates style at the sentence level, so it captures and reproduces the inherently multi-style nature of real-world texts, which addresses a critical limitation of previous approaches. A cornerstone of the framework is the proposed style reward dropout mechanism, which mitigates style dominance and prevents the model from collapsing into homogeneous outputs. Complementary auxiliary reward components ensure faithful content rewriting and prevent undesirable reward exploitation. Conventional prompting may yield slightly higher average style similarity scores, but the experimental results show that it fails to preserve stylistic diversity. That is the area where the RL-based approach excels.

Beyond the core framework, applying Bit-LoRA within this reinforcement learning context yielded significant insights. I showed that quantization-aware training provides a substantial memory footprint reduction for LoRA adapters. Like the style reward dropout mechanism, it also introduces a beneficial regularization effect. This leads to more stable and generalizable policies by preventing reward hacking and encouraging broader exploration. These findings suggest that the noise introduced through quantization can play a role analogous to entropy regularization in reinforcement learning, fostering improved exploration and reducing overfitting to specific stylistic patterns.

Looking ahead, future work will extend this robust framework to address multilingual and domain-specific style alignment challenges.

Appendix A. Style Reference Text

The following text was used as the comprehensive style reference for all experiments, from which target style characteristics were extracted by the StyleDistance encoder for reward modeling.

Once upon a time in a bustling little town, there lived a cat named Whiskers who had a peculiar talent — he could play the piano! Every evening, as the sun dipped below the horizon, Whiskers would leap onto the old, creaky piano in the corner of his owner’s cozy living room and serenade the neighborhood with his enchanting melodies.

One day, a talent scout passing through the town heard whispers of the musical feline. Intrigued, he decided to pay a visit. As night fell, the townsfolk gathered outside the window, their faces illuminated by the warm glow of the lights within. Whiskers, sensing the audience, began to play a lively tune that made everyone tap their feet and clap along.

The talent scout couldn’t believe his eyes. He rushed inside, exclaiming, “This cat is a star! We must take him to the big city!” But Whiskers, caught up in the joy of his performance, simply meowed, as if to say, “This is where my heart belongs.”

From that night on, Whiskers continued to play, bringing the community together in laughter and song, proving that true stardom lies not in fame but in the joy shared with those you love. And so, the little town became known as the home of the piano-playing cat, where every evening was a concert under the starlit sky.

Appendix B. Average standard deviation of style similarity metrics. “Inner” refers to one sentence compared across different reference styles; “outer” refers to different sentences compared against one reference style.

Published via Towards AI
