Exploring the Power of Small Language Models

Author(s): Sunil Rao

Originally published on Towards AI.

In the rapidly evolving landscape of Artificial Intelligence, Large Language Models (LLMs) have captivated the public imagination with their remarkable capabilities in understanding and generating human-like text. From crafting creative content to answering complex queries, LLMs have pushed the boundaries of what’s possible. However, their immense size and computational demands present significant challenges, paving the way for the emergence of a new paradigm: Small Language Models (SLMs).

Why Do We Need SLMs?

The rise of LLMs, while impressive, has inadvertently created a new set of hurdles. These models, often comprising billions or even trillions of parameters, require vast amounts of data for training, enormous computational resources, and specialized hardware for deployment:

- Training and running LLMs consume great amounts of energy and necessitate access to powerful GPU clusters, making them expensive to develop and operate.

- The energy consumption associated with LLMs contributes to a significant carbon footprint, raising concerns about sustainability.

- The financial and technical barriers to entry limit LLM development and deployment to a handful of well-resourced organizations, hindering broader innovation and the democratization of AI.

- The sheer size of LLMs can lead to considerable latency, making them less suitable for applications requiring real-time responses, such as on-device AI or low-power edge computing.

- Deploying LLMs in sensitive environments can raise data privacy issues, especially when data needs to be sent to remote servers for processing.

- The black-box nature of massive models makes them difficult to fully understand and control, which is crucial for applications requiring high reliability and accountability.

These challenges form the core motivation behind the development of SLMs. The background of SLMs is rooted in the pursuit of more efficient, accessible, and sustainable AI solutions that can bridge the gap between cutting-edge research and practical, real-world applications.

What is an SLM?

At its core, a Small Language Model (SLM) is a language model designed to achieve high performance on specific tasks or domains with significantly fewer parameters and lower computational requirements than its larger counterparts (LLMs). While there isn’t a universally agreed-upon threshold for what constitutes “small,” SLMs typically range from a few million to a few billion parameters, in contrast to LLMs that often exceed tens or even hundreds of billions.

The “small” in SLM doesn’t necessarily imply limited capability. Instead, it signifies a strategic approach to model design and deployment. SLMs leverage various techniques to achieve efficiency, including:

- Efficient Architectures: Utilizing more compact neural network designs that are optimized for specific tasks.

- Knowledge Distillation: Training a smaller model (the “student”) to mimic the behavior of a larger, more powerful model (the “teacher”).

- Quantization: Reducing the precision of the numerical representations within the model, leading to smaller memory footprints and faster computations.

- Pruning: Removing less important connections or neurons from the model to reduce its size without significant performance degradation.

- Specialized Training Data: Focusing training on smaller, high-quality, task-specific datasets rather than vast, general corpora.

- Domain-Specific Optimization: Tailoring the model and its training to excel in a particular domain or application, sacrificing broad generality for targeted expertise.

Essentially, SLMs represent a pragmatic shift towards “right-sizing” AI models for their intended purpose, moving away from a one-size-fits-all approach.
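To make one of the techniques above concrete, here is a minimal sketch of quantization. It assumes the simplest variant, symmetric per-tensor int8 quantization with a single scale factor; the function names and the random weight matrix are illustrative only, and production toolkits use more refined schemes (per-channel scales, calibration data, 4-bit formats).

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: one float scale maps floats to int8."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return q.astype(np.float32) * scale

# Illustrative stand-in for one weight matrix of a model.
rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

bytes_fp32 = weights.nbytes      # 4 bytes per weight
bytes_int8 = q_weights.nbytes    # 1 byte per weight
max_error = float(np.max(np.abs(weights - restored)))
print(f"fp32: {bytes_fp32} bytes, int8: {bytes_int8} bytes, max error: {max_error:.2e}")
```

The storage drops by a factor of four, and the rounding error per weight is bounded by half the scale, which is why moderate quantization often costs little accuracy.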

Advantages of SLMs

The benefits of embracing Small Language Models are multifaceted and hold the potential to democratize AI and expand its reach into previously inaccessible domains:

- Lower training and inference costs due to fewer parameters and simpler architectures, making AI development and deployment more affordable.

- Significantly lower energy consumption, contributing to a reduced carbon footprint and more environmentally friendly AI solutions.

- Lower resource requirements open the door for smaller companies, startups, and individual developers to build and deploy sophisticated AI applications.

- Smaller models process information more quickly, enabling real-time applications and responsiveness crucial for interactive systems.

- SLMs can run directly on resource-constrained devices like smartphones, IoT devices, and embedded systems, enabling offline capabilities and enhanced privacy.

- By processing data locally on devices, the need to transmit sensitive information to cloud servers is reduced, significantly improving privacy and security.

- Their smaller size makes SLMs more amenable to fine-tuning on specific datasets, allowing for easier customization to unique business needs or niche applications.

- While still complex, smaller models can be somewhat easier to analyze and understand, aiding in debugging, bias detection, and ensuring responsible AI deployment.

- The reduced training times and computational overhead can accelerate the iterative development process for AI solutions.

- SLMs can be highly specialized for particular tasks, achieving expert-level performance in their niche without the overhead of general-purpose models.

Small Language Models (SLMs) vs. Large Language Models (LLMs)

The core difference between SLMs and LLMs boils down to scale, and the implications that scale has on their capabilities, resource requirements, and ideal use cases. While both are designed to understand and generate human language, they operate at different ends of the spectrum.

Here’s a detailed breakdown of their key differences:

The decision of whether to use an SLM or an LLM is a critical one and depends heavily on your specific needs, resources, and the nature of the task at hand. It’s not about one being inherently “better” than the other, but rather about choosing the right tool for the job. Here’s a guide to help you decide:

When to Use Large Language Models (LLMs)

LLMs are the powerhouses of generative AI, capable of broad, general-purpose tasks and complex reasoning. An LLM is usually the better fit in cases such as:

- Complex brainstorming and idea generation.

- Answering questions across a vast array of subjects, including those requiring common sense reasoning or up-to-date information (if connected to real-time data).

- Summarizing articles, books, research papers from various domains.

- Writing code snippets, refactoring, and identifying bugs across different programming languages.

- Chatbots that need to maintain context and engage in long, free-flowing dialogues.

- For tasks where subtle linguistic cues, deep contextual understanding, and highly accurate, human-like responses are crucial, LLMs generally outperform SLMs.

- LLMs, due to their vast pre-training, are excellent at few-shot learning, meaning they can perform new tasks with only a few examples, without extensive fine-tuning. This is valuable for rapidly prototyping or handling novel tasks.

- If you’re building a tool that needs to handle a wide, unpredictable range of requests without being limited to a specific domain, an LLM is the appropriate choice.

When to Use Small Language Models (SLMs)

SLMs offer efficiency, cost-effectiveness, and specialization. They are increasingly viable alternatives to LLMs for many practical applications. An SLM is usually the better fit in cases such as:

- When training and inference costs need to be minimized. SLMs dramatically reduce the financial burden of deploying AI.

- For applications where a small cost per inference multiplied by millions of inferences becomes prohibitive with LLMs.

- Running models directly on smartphones, smart home devices, IoT sensors, or edge computing devices where internet connectivity might be limited or latency is unacceptable.

- Chatbots for customer service that need instant responses, or applications where a user expects immediate feedback.

- When sensitive data cannot leave the user’s device or an on-premise server. SLMs enable local inference, mitigating privacy risks associated with sending data to cloud-based LLMs.

- In sectors like healthcare, finance, or legal, where strict data governance is required.

- A chatbot trained specifically on a company’s product documentation, internal policies, or a legal database.

- An SLM fine-tuned to understand sentiment related to a particular product or brand.

- For well-defined classification tasks where the categories are known.

- Extracting specific entities (names, dates, addresses) from documents in a particular format.

- Summarizing medical reports, financial statements, or legal contracts.

- An SLM trained to generate code for a limited set of functions or frameworks.

Hybrid Approaches

It’s also increasingly common to see hybrid architectures that combine the strengths of both:

- An SLM can be used to query a knowledge base (RAG) for factual information, and then generate responses based on that retrieved information. This allows the SLM to answer questions outside its initial training data by “looking up” answers, effectively augmenting its knowledge.

- An SLM handles the majority of routine, high-volume requests, and only “escalates” more complex, ambiguous, or general queries to a larger, more expensive LLM in the cloud.

- SLMs run on local devices for immediate responses and privacy, while occasionally syncing with a cloud LLM for model updates, more complex tasks, or when a fallback is needed.
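The escalation pattern in the second bullet can be sketched as a small router. This is only an illustration under stated assumptions: the `slm` callable is assumed to return an answer together with a confidence score, the threshold of 0.8 is arbitrary, and `toy_slm` and `toy_llm` are hypothetical stand-ins rather than real model calls.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class RoutedAnswer:
    text: str
    served_by: str  # "slm" or "llm"

def route(query: str,
          slm: Callable[[str], Tuple[str, float]],
          llm: Callable[[str], str],
          min_confidence: float = 0.8) -> RoutedAnswer:
    """Try the local SLM first; escalate to the larger model when it is unsure."""
    answer, confidence = slm(query)
    if confidence >= min_confidence:
        return RoutedAnswer(answer, "slm")
    return RoutedAnswer(llm(query), "llm")

# Hypothetical stand-ins so the sketch runs without any model.
def toy_slm(query: str) -> Tuple[str, float]:
    known = {"reset password": "Use the 'Forgot password' link on the login page."}
    if query in known:
        return known[query], 0.95
    return "", 0.10

def toy_llm(query: str) -> str:
    return f"[cloud LLM answer for: {query}]"

routine = route("reset password", toy_slm, toy_llm)
unusual = route("compare our refund policy with EU law", toy_slm, toy_llm)
print(routine.served_by, unusual.served_by)
```

How the confidence score is obtained is the hard part in practice; options include token-level probabilities, a separate classifier, or simple rules on the query type.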

Prominent Small Language Models:

The field of SLMs is rapidly expanding, with new models being released frequently, often focusing on efficiency and specific use cases. Here’s a list of prominent Small Language Models:

- Phi Series (Microsoft) (e.g., Phi-3-mini, Phi-3-small, Phi-3-medium): Microsoft’s Phi models are a family of highly capable and cost-effective SLMs. Phi-3-mini, for instance, has only 3.8 billion parameters but is designed to perform on par with models twice its size, excelling in reasoning and coding tasks while being optimized for on-device deployment.

- Mistral 7B and Mixtral 8x7B (Mistral AI): Mistral 7B is a powerful 7.3 billion parameter model known for its strong performance across various tasks while being highly efficient. Mixtral 8x7B is a “Mixture of Experts” (MoE) model with 46.7 billion parameters in total, but it only activates 12.9 billion parameters per token, offering LLM-level performance with SLM-like efficiency.

- Llama 3 (Meta) (specifically the smaller variants like 8B): While Llama 3 includes very large models, its smaller variants, like the 8 billion parameter version, are considered highly capable SLMs. These models are part of Meta’s open-source initiative, offering strong performance on reasoning and various NLP benchmarks, often designed for broad accessibility.

- Gemma Series (Google) (e.g., Gemma 2B, Gemma 7B, Gemma 2): Gemma models are a family of lightweight, open-source models from Google DeepMind, built from the same research and technology used to create the Gemini models. They come in various sizes, with the 2B and 7B variants being excellent examples of SLMs focused on responsible AI and efficient deployment. Gemma 2 offers even larger SLM sizes like 9B and 27B, pushing the boundaries of what’s considered “small” while maintaining efficiency.

- DistilBERT (Hugging Face): DistilBERT is a distilled version of Google’s BERT model. It’s 40% smaller and 60% faster than BERT, while retaining about 97% of BERT’s performance in language understanding tasks, making it highly efficient for tasks like text classification and sentiment analysis.

- TinyBERT (Huawei): Specifically designed for resource-constrained environments like mobile and edge devices, TinyBERT significantly reduces the size of the BERT model while maintaining competitive performance on language understanding benchmarks through advanced distillation techniques.

- Qwen Series (Alibaba Cloud) (e.g., Qwen1.5-0.5B, Qwen1.5-1.8B, Qwen2): The Qwen series offers a range of open-source models, including several highly compact SLMs (e.g., 0.5 billion to 7 billion parameters for Qwen1.5, with Qwen2 extending this). These models are known for their strong performance and multilingual capabilities, making them versatile for various applications, including mobile and browser-based scenarios.

- Pythia (EleutherAI): Pythia is a suite of openly accessible LLMs, with many variants falling into the SLM category (e.g., from 160 million to 2.8 billion parameters). It’s notable for being trained on publicly available data, making it a valuable resource for research and development in language models.

- MobileLLaMA / TinyLlama: These models represent efforts to create extremely lightweight language models optimized specifically for mobile devices and other low-power environments. They focus on achieving decent performance for on-device inference without heavy computational demands.

- OpenELM (Apple): OpenELM (Open-source Efficient Language Models) from Apple provides a family of open-source language models ranging from 270 million to 3 billion parameters. They are designed for efficient on-device inference and offer layer-wise scaling, making them adaptable for various hardware constraints.
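To put the parameter counts above in perspective, the following back-of-the-envelope sketch converts them into approximate weight storage. It counts weights only (activations and the attention cache are ignored), uses the parameter counts quoted in this list, and assumes plain bytes-per-parameter figures for each precision; real quantized formats carry some extra overhead.

```python
# Assumed storage cost per parameter for common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(n_params: float, precision: str) -> float:
    """Approximate memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9

# Parameter counts as quoted in the list above.
models = {
    "Phi-3-mini": 3.8e9,
    "Mistral 7B": 7.3e9,
    "Llama 3 8B": 8.0e9,
    "Mixtral 8x7B (total)": 46.7e9,
}

for name, n_params in models.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(n_params, precision):.1f} GB"
        for precision in ("fp16", "int8", "int4")
    )
    print(f"{name:22s} {sizes}")
```

Note that for a Mixture-of-Experts model such as Mixtral 8x7B, the 12.9 billion active parameters per token reduce compute, not storage: all 46.7 billion parameters still have to be held in memory.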

Limitations of Small Language Models:

- SLMs are often trained on smaller, more domain-specific datasets. This means they excel within their trained niche but typically lack the broad general knowledge, common sense reasoning, and understanding of diverse topics that LLMs possess.

- They may struggle to generate accurate or coherent responses to queries that fall significantly outside their training distribution or require knowledge across multiple, disparate domains.

- Due to fewer parameters and less extensive training data, SLMs might produce less nuanced, less creative, or more generic text compared to LLMs. They may struggle with tasks requiring abstract thinking, subtle humor, or highly creative writing.

- While some SLMs are being developed with improved reasoning capabilities (e.g., through specific training methods like “recipes” used for Phi models), they generally do not match the advanced reasoning, problem-solving, and multi-step inference abilities of the largest LLMs.

- SLMs can be more prone to errors or less robust in ambiguous scenarios or when faced with highly complex, multi-faceted prompts where deep contextual understanding is paramount.

- While an SLM can be fine-tuned on specific facts, it doesn’t inherently possess the vast “world knowledge” embedded in LLMs through their massive pre-training on internet-scale corpora. To overcome this, SLMs often rely on Retrieval-Augmented Generation (RAG), where they query an external knowledge base to get up-to-date or specific information before generating a response. Without RAG, their knowledge is strictly limited to their training data.

- While easier to fine-tune in terms of computational resources, SLMs often require more focused and high-quality domain-specific data to achieve peak performance for a given task. This means more effort in data collection and curation might be needed to make them truly effective in a niche.

- LLMs, by contrast, can often perform well on new tasks with very few examples (few-shot learning) due to their vast pre-training, whereas SLMs might require more dedicated fine-tuning data to reach similar levels of task proficiency.

- Like all AI models, SLMs can inherit and even amplify biases present in their training data. While their smaller datasets might make it easier to identify and potentially mitigate these biases compared to the opaque nature of massive LLMs, the risk still exists, especially if the curated training data itself is skewed.

Model Architectures of SLM

Small Language Models (SLMs) differentiate themselves from their larger counterparts by employing specific architectural designs and optimization techniques to achieve high performance with significantly fewer parameters and reduced computational demands. These architectural choices are crucial for enabling SLMs to run efficiently on resource-constrained devices and for specialized applications.

Here’s a breakdown of the key model architectures and design considerations for developing SLMs:

Lightweight Architectures

Lightweight language model architectures are intrinsically designed for efficiency, minimizing parameters and computational overhead. They are ideal for deployment on devices with limited resources, such as mobile phones, edge devices, and embedded systems. These architectures often build upon the fundamental Transformer structure, optimizing its components.

- Lightweight Encoder-Only Architectures: These models are primarily optimized versions of the BERT architecture. Encoder-only models are best suited for tasks requiring a deep understanding of the input text, such as:

Text Classification: Categorizing documents or messages (e.g., spam detection, sentiment analysis).

Named Entity Recognition (NER): Identifying specific entities like names, dates, and locations.

Extractive Question Answering: Finding the answer directly within a given text.

- Ex: MobileBERT: Introduces an inverted-bottleneck structure within the Transformer blocks. This design helps maintain a balance between the self-attention mechanism and the feed-forward networks, leading to a substantial 4.3x size reduction and a 5.5x speedup compared to the base BERT model while preserving much of its performance.

DistilBERT and TinyBERT: These models achieve significant size reductions (about 40% for DistilBERT, even more for TinyBERT) by using knowledge distillation techniques. They are trained to mimic the behavior of a larger BERT “teacher” model, retaining roughly 96 to 97% of the original BERT’s performance on language understanding benchmarks.

- Lightweight Decoder-Only Architectures: These models follow the autoregressive structure of language models like the GPT and LLaMA series, focusing on generating coherent text sequences. They are optimized for generative tasks such as:

Content Generation: Creative writing, generating marketing copy, email drafts.

Dialogue Systems: Powering chatbots and virtual assistants that engage in conversational exchanges.

Generative Question Answering: Generating natural language answers to questions.

- Ex: Microsoft Phi Series (e.g., Phi-3-mini): These models are designed to be highly capable despite their small size. They often emphasize “data recipes” and targeted training on high-quality, curated data to achieve strong reasoning and coding abilities with a minimal parameter count.

Google Gemma Series (e.g., Gemma 2B, 7B): Derived from the same research as Google’s larger Gemini models, Gemma leverages a decoder-only Transformer architecture with optimizations like interleaved attention and efficient positional embeddings (RoPE) for better memory management and performance on mobile-optimized variants.

Mistral 7B: A highly efficient 7.3 billion parameter model that demonstrates strong generative capabilities for its size, often performing comparably to larger LLMs. It utilizes techniques like Grouped-Query Attention (GQA) and Sliding Window Attention (SWA) to enhance efficiency.

Smaller Llama 3 Variants (e.g., 8B): While the Llama series also includes very large models, its smaller versions are designed with efficiency in mind, often benefiting from extensive pre-training on vast datasets while being optimized for various deployment scenarios.

Techniques commonly employed in these decoder-only SLMs include:

Knowledge Distillation: Transferring knowledge from a larger “teacher” model to a smaller “student” model.

Memory Overhead Optimization: Reducing the memory footprint during inference, crucial for edge devices.

Parameter Sharing: Reusing parameters across different layers or components of the model to reduce the total parameter count.

Embedding Sharing: Sharing embedding layers for different modalities or tasks to reduce redundancy.
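The first technique in this list, knowledge distillation, is usually implemented as an extra loss term that pulls the student’s output distribution toward the teacher’s. The sketch below assumes the common formulation with temperature-softened softmax outputs and a KL divergence scaled by the squared temperature; the logits are made-up numbers, and real training would combine this term with the ordinary task loss.

```python
import numpy as np

def log_softmax(logits, temperature):
    """Numerically stable log-softmax of temperature-scaled logits."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    log_p = log_softmax(teacher_logits, temperature)   # teacher
    log_q = log_softmax(student_logits, temperature)   # student
    p = np.exp(log_p)
    kl_per_example = np.sum(p * (log_p - log_q), axis=-1)
    return float(np.mean(kl_per_example) * temperature ** 2)

# Made-up logits for a batch of two examples over four classes.
teacher = np.array([[4.0, 1.0, 0.5, 0.1], [0.2, 3.5, 0.3, 0.1]])
student = np.array([[2.0, 1.5, 0.5, 0.2], [0.5, 1.0, 0.8, 0.4]])

loss_mismatch = distillation_loss(student, teacher)
loss_match = distillation_loss(teacher, teacher)
print(f"student vs teacher: {loss_mismatch:.4f}, teacher vs itself: {loss_match:.4f}")
```

A higher temperature flattens both distributions, so the student also learns from the relative probabilities the teacher assigns to the wrong classes.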

Efficient Self-Attention Approximations

The self-attention mechanism, a core component of the Transformer architecture, has a computational complexity that scales quadratically (O(N²)) with the sequence length (N). This becomes a significant bottleneck for processing long sequences, both in terms of computation and memory. SLMs and efficient Transformer variants employ approximation strategies to reduce this cost:

- Reformer: Improves the complexity of self-attention from O(N²) to O(N log N) by replacing the standard dot-product attention with one that uses Locality-Sensitive Hashing (LSH). LSH groups similar queries and keys into “buckets,” allowing attention to be computed only within or between these buckets, rather than across all possible pairs.

- Sparse Attention Mechanisms: Many approaches aim to reduce the quadratic complexity to linear (O(N)) by restricting the attention pattern. Instead of every token attending to every other token, these methods introduce sparsity, allowing each token to attend only to a relevant subset of others.

- Linear Attention Mechanisms: Several works propose techniques to achieve O(N) complexity. These often involve rethinking the attention operation to avoid the explicit N×N attention matrix computation, for instance, by reordering operations or using kernel methods.

- Sparse Routing Modules: Some models, like those proposed by Roy et al. (2021), use techniques such as online k-means clustering to select a sparse set of keys for each query, effectively reducing the computational burden of attention.

These approximations allow SLMs to process longer contexts more efficiently, which is crucial for maintaining performance on complex language tasks without incurring the prohibitive costs of full self-attention.
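The “reordering operations” idea behind linear attention can be illustrated in a few lines. The sketch below assumes a kernelized, non-causal attention with the feature map elu(x) + 1, which is one choice from the literature and not the same function as softmax attention; it shows that computing K-transpose times V first gives the same result as building the full N×N matrix, while never materializing that matrix.

```python
import numpy as np

def feature_map(x):
    """elu(x) + 1: a positive feature map used in place of the softmax kernel."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def quadratic_attention(Q, K, V):
    """Builds the explicit (N, N) score matrix: O(N^2) time and memory."""
    scores = feature_map(Q) @ feature_map(K).T
    return (scores @ V) / scores.sum(axis=1, keepdims=True)

def linear_attention(Q, K, V):
    """Same result via associativity: (d, d_v) summaries instead of (N, N) scores."""
    phi_q, phi_k = feature_map(Q), feature_map(K)
    kv_summary = phi_k.T @ V            # shape (d, d_v), independent of N
    k_sum = phi_k.sum(axis=0)           # shape (d,)
    return (phi_q @ kv_summary) / (phi_q @ k_sum)[:, None]

rng = np.random.default_rng(0)
N, d, d_v = 128, 16, 16
Q, K, V = rng.normal(size=(N, d)), rng.normal(size=(N, d)), rng.normal(size=(N, d_v))

out_quadratic = quadratic_attention(Q, K, V)
out_linear = linear_attention(Q, K, V)
print(np.allclose(out_quadratic, out_linear))
```

The cost of the second form grows linearly with N because the only N-sized operations are matrix products against fixed-size summaries.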

Neural Architecture Search (NAS) Techniques

Neural Architecture Search (NAS) involves automating the process of designing optimal neural network architectures. For SLMs, NAS is used to discover the most efficient model architectures tailored for specific tasks and hardware constraints.

Historically, NAS has primarily focused on vision tasks and smaller NLP models like BERT because their relatively fewer parameters made the search process computationally feasible.

Applying NAS directly to LLMs (with billions or trillions of parameters) is extremely computationally intensive and costly due to their massive scale. This presents a significant challenge in automatically finding smaller, more efficient LLM variants.

Strategies for SLMs in NAS:

- Defining a more constrained search space that focuses on known efficient patterns or variations suitable for SLMs.

- Using techniques like weight sharing (training a “super-network” that contains all possible architectures), learning curve extrapolation (predicting final performance from early training), or proxy tasks (evaluating on smaller datasets) to reduce the cost of evaluating candidate architectures.

- Evolutionary Algorithms or Reinforcement Learning: Using these methods to explore the architecture search space efficiently, often guided by cost constraints (e.g., FLOPs, parameter count) alongside performance metrics.

NAS aims to move beyond manual architectural design, leveraging computational power to discover novel and highly optimized SLM structures that are perfectly adapted to their deployment environment.
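A heavily simplified sketch of the first two strategies follows: a constrained search space filtered by a parameter budget, with candidates ranked by a cheap proxy. Everything here is an assumption for illustration: the parameter formula is the usual rough estimate of 12·d² per Transformer block plus embeddings, the search is plain random sampling rather than an evolutionary or reinforcement learning method, and `proxy_score` is a hypothetical placeholder for a short training run on a proxy task.

```python
import itertools
import random

def approx_params(n_layers: int, d_model: int, vocab_size: int = 32000) -> int:
    """Rough count: ~12 * d^2 per block (attention + 4x MLP) plus the embedding table."""
    return 12 * n_layers * d_model ** 2 + vocab_size * d_model

def search(space, budget, proxy_score, n_trials=20, seed=0):
    """Sample architectures that fit the budget and keep the best by proxy score."""
    rng = random.Random(seed)
    candidates = [
        config
        for config in itertools.product(space["n_layers"], space["d_model"])
        if approx_params(*config) <= budget
    ]
    sampled = rng.sample(candidates, min(n_trials, len(candidates)))
    return max(sampled, key=proxy_score)

search_space = {"n_layers": [4, 8, 12, 16], "d_model": [256, 512, 768, 1024]}
param_budget = 100_000_000

# Placeholder proxy: prefers larger models. A real proxy would measure
# validation loss after a short training run, which is not shown here.
def toy_proxy_score(config):
    return approx_params(*config)

best = search(search_space, param_budget, toy_proxy_score)
print(best, approx_params(*best))
```

The budget check is what ties the search to a deployment target; latency or FLOPs constraints could be added to the same filter.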

Small Multi-modal Models (SMMs)

The principles of SLM development are extending to the realm of multi-modal AI, leading to Small Multi-modal Models (SMMs). These models combine language understanding with other modalities, primarily vision, while retaining the efficiency characteristics of SLMs.

- Recent Large Multi-modal Models (LMMs) have demonstrated that significant parameter reduction is possible while maintaining performance, often by optimizing how different modalities (e.g., text and images) are integrated. This progress is directly influencing SMM development.

- SMMs often use the efficient language models discussed above (like Gemma and Phi-3-mini) as their core text processing components.

- A key strategy for creating SMMs is to reduce the size and computational load of the vision encoder (the part of the model that processes images).

- Models like InternVL2 leverage outputs from intermediate layers of large visual encoders, discarding later, more computationally intensive blocks, thereby capturing essential visual features with fewer operations.

- Smaller multi-modal models such as PaliGemma and Mini-Gemini adopt inherently lightweight vision encoders that are specifically designed for efficiency without sacrificing crucial visual understanding for the task.

- Some SMMs go even further by completely eliminating a separate visual encoder. Instead, they use lightweight architectures to directly generate “visual tokens” from image inputs that can then be processed by the language model, creating a more unified and efficient architecture.
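The encoder-free idea in the last bullet can be sketched as cutting an image into patches and mapping each patch to the language model’s embedding width with a single linear projection. This is a minimal illustration, not the design of any specific model: the patch size, the embedding width of 512, and the random projection matrix (which would be learned in practice) are all assumptions.

```python
import numpy as np

def image_to_visual_tokens(image, patch_size, projection):
    """Split an (H, W, C) image into non-overlapping patches and project each to d_model."""
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    grid_h, grid_w = h // patch_size, w // patch_size
    patches = (
        image.reshape(grid_h, patch_size, grid_w, patch_size, c)
        .transpose(0, 2, 1, 3, 4)                      # (grid_h, grid_w, p, p, c)
        .reshape(grid_h * grid_w, patch_size * patch_size * c)
    )
    return patches @ projection                         # (num_patches, d_model)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))
patch_size, d_model = 16, 512

# Stand-in for a learned projection matrix.
projection = rng.normal(0.0, 0.02, size=(patch_size * patch_size * 3, d_model))

visual_tokens = image_to_visual_tokens(image, patch_size, projection)
print(visual_tokens.shape)
```

The resulting rows can be placed in the same sequence as text token embeddings, which is what lets the language model process them without a separate vision encoder.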

Training Techniques

Training Small Language Models (SLMs) involves a blend of established techniques from the broader field of deep learning and specialized strategies designed to maximize efficiency and performance despite their reduced size. While the fundamental concepts of pre-training and fine-tuning are similar to those for Large Language Models (LLMs), SLM training places a stronger emphasis on resource-efficient approaches.

Here’s an explanation of key training techniques for SLMs:

Pre-training Techniques

Pre-training is the initial, unsupervised phase where a language model learns general language patterns, grammar, semantics, and some world knowledge from a vast amount of unlabeled text data. For SLMs, the focus during pre-training is on making this process as efficient as possible.

Mixed Precision Training

Mixed precision training is a cornerstone technique for enhancing the efficiency of pre-training for both SLMs and LLMs. It leverages lower-precision numerical representations (e.g., 16-bit or 8-bit floating-point numbers) for most computations during forward and backward propagation, while typically maintaining higher-precision weights (e.g., 32-bit floating-point) for parameter updates.

How it Works:

- Reduced Memory Footprint: Using lower precision (e.g., FP16 or BFLOAT16) for intermediate calculations significantly reduces the memory required to store activations and gradients. This allows for training larger models or larger batch sizes on the same hardware.

- Faster Computation: Modern GPU architectures (like NVIDIA’s Tensor Cores) are specifically designed to accelerate operations with lower-precision formats, leading to substantial speedups in training time.

- Maintaining Accuracy: The “mixed” aspect ensures that critical operations, such as weight updates (which can be sensitive to small changes), are still performed in higher precision (typically on an FP32 master copy of the weights) to prevent loss of information or convergence issues.

Evolution of Precision Formats:

- Automatic Mixed Precision (AMP): Initially, AMP kept a “master copy” of weights in FP32 while performing calculations in FP16. This was a significant step, but FP16’s limited numerical range could sometimes lead to accuracy losses (e.g., underflow of small gradients).

- Brain Floating Point (BFLOAT16): Developed to address FP16’s limitations, BFLOAT16 offers a greater dynamic range by allocating more bits to the exponent (similar to FP32) while sacrificing some precision in the mantissa. This often provides a better balance for deep learning, leading to superior training stability and representation accuracy compared to FP16.

- 8-bit Floating-Point (FP8): The latest advancements, particularly with NVIDIA’s Hopper architecture, introduce support for FP8 precision. This enables even greater computational efficiency and memory savings for large-scale models, pushing the boundaries of what’s possible with reduced precision.

By smartly managing numerical precision, mixed precision training makes the demanding pre-training phase of SLMs (and LLMs) more feasible and energy-efficient.
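Two of the numerical issues discussed above can be reproduced directly with NumPy’s `float16` type. The sketch is illustrative only: the gradient value, the loss scale of 65536, and the update size are chosen to make the effects visible, and real frameworks handle scaling and master weights automatically.

```python
import numpy as np

# 1) Gradient underflow: a very small gradient becomes zero in FP16.
tiny_grad = np.float32(1e-8)
underflowed = np.float16(tiny_grad)

# Loss scaling: multiply before casting to FP16, divide again in FP32.
loss_scale = np.float32(65536.0)
scaled_fp16 = np.float16(tiny_grad * loss_scale)
recovered = np.float32(scaled_fp16) / loss_scale

# 2) Lost weight updates: near 1.0, FP16 cannot represent a change of 1e-4.
update = np.float32(1e-4)
weight_fp16 = np.float16(1.0)
weight_fp16_updated = np.float16(weight_fp16 - np.float16(update))

# An FP32 master copy of the same weight does register the update.
master_fp32 = np.float32(1.0)
master_updated = master_fp32 - update

print("fp16 gradient:", underflowed, "| recovered after scaling:", recovered)
print("fp16 weight after update:", weight_fp16_updated, "| fp32 master:", master_updated)
```

This is why FP16 training pairs low-precision arithmetic with loss scaling and FP32 master weights, and why BFLOAT16, with its wider exponent range, often needs no loss scaling at all.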

Fine-tuning Techniques

Fine-tuning adapts a pre-trained language model to a specific downstream task or domain using a smaller, task-specific dataset. For SLMs, efficient fine-tuning is paramount given that resources are often limited, and the goal is to specialize the model without extensive retraining.

a. Parameter-Efficient Fine-Tuning (PEFT)

PEFT methods are a crucial set of techniques that allow for fine-tuning large pre-trained models (including SLMs derived from larger models or SLMs themselves) by updating only a small subset of parameters or by adding a few lightweight, trainable modules, while keeping the majority of the original model’s parameters fixed (“frozen”). This approach offers several benefits for SLMs:

- Only a fraction of the parameters are updated, drastically cutting down on computation, memory, and training time.

- By keeping most of the base model frozen, PEFT helps prevent “catastrophic forgetting,” where the model loses its general knowledge learned during pre-training when fine-tuned on a new task.

- With fewer trainable parameters, the model is less prone to overfitting on smaller, task-specific datasets.

- Enables quick adaptation to new tasks without the need to store multiple full copies of the fine-tuned model.

Widely used PEFT methods include:

- LoRA (Low-Rank Adaptation): LoRA introduces small, trainable low-rank matrices into the Transformer’s attention layers. Instead of directly modifying the large weight matrices of the pre-trained model, LoRA learns small “adaptation” matrices whose product, when added to the original weights, effectively fine-tunes the model. This significantly reduces the number of trainable parameters.

- Prompt Tuning: This method keeps the entire pre-trained model frozen and instead inserts a small sequence of learnable “soft prompts” directly into the model’s input. The model then learns to perform a specific task by adjusting these prompts, which guide its behavior, rather than modifying its internal weights.

- Llama-Adapter: Specifically designed for the LLaMA series, Llama-Adapter adds learnable “prompts” or adapter modules to the attention blocks of the LLaMA architecture. This allows the model to be efficiently adapted for instruction following tasks.

- Dynamic Adapters: These are more advanced PEFT methods that go a step further. They can automatically combine multiple adapters (e.g., as a Mixture-of-Experts) to enable multi-tasking, dynamically activate relevant adapters based on the input, and prevent forgetting across different fine-tuning tasks (Han et al., 2024; Yang et al., 2024).
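The LoRA idea described above fits in a short NumPy sketch. The layer size, rank of 8, and scaling factor `alpha` are illustrative assumptions, and no training loop is shown; the point is the shape of the computation and the parameter count.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank, alpha = 512, 512, 8, 16

W = rng.normal(0.0, 0.02, size=(d_out, d_in))   # pre-trained weight, kept frozen
A = rng.normal(0.0, 0.01, size=(rank, d_in))    # trainable, small random init
B = np.zeros((d_out, rank))                     # trainable, zero init

def lora_forward(x, W, A, B, alpha, rank):
    """Frozen path plus the scaled low-rank update: x W^T + (alpha / r) x A^T B^T."""
    return x @ W.T + (alpha / rank) * (x @ A.T) @ B.T

x = rng.normal(size=(4, d_in))

# With B initialized to zero, the adapted layer starts out identical to the base layer.
starts_identical = np.allclose(lora_forward(x, W, A, B, alpha, rank), x @ W.T)

# Pretend training has changed B, then fold the update into W for inference.
B_trained = rng.normal(0.0, 0.01, size=(d_out, rank))
W_merged = W + (alpha / rank) * B_trained @ A
merge_matches = np.allclose(lora_forward(x, W, A, B_trained, alpha, rank), x @ W_merged.T)

trainable = A.size + B.size
fraction = trainable / W.size
print(starts_identical, merge_matches, f"{trainable} trainable vs {W.size} frozen ({fraction:.2%})")
```

Because the update can be merged into the original weight matrix, a LoRA-adapted model adds no inference latency once merged, and only the small `A` and `B` matrices need to be stored per task.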

b. Data Augmentation

Data augmentation is a powerful strategy to increase the size, diversity, and quality of training data, leading to improved generalization and performance on downstream tasks, especially crucial when training data is limited (a common scenario for SLMs). For language models, this involves generating new text samples that are variations of existing ones.

- AugGPT: This technique leverages large, powerful LLMs (like ChatGPT) to rephrase existing training samples into multiple conceptually similar but semantically diverse versions. This effectively expands the training dataset with high-quality, human-like variations.

- Evol-Instruct: This method uses LLMs to generate diverse and increasingly complex open-domain instructions through a multi-step “evolutionary” process. It aims to create a richer instruction-following dataset by iteratively revising instructions based on predefined criteria (e.g., adding constraints, deepening, increasing reasoning steps).

- Reflection-tuning: This technique focuses on enhancing data quality and instruction-response consistency. It uses a “reflector” LLM (e.g., GPT-4) to refine both instructions and their corresponding responses based on predefined quality criteria, ensuring the fine-tuning data is of the highest caliber.

- FANNO: FANNO augments instructions and generates responses by incorporating external knowledge sources through Retrieval-Augmented Generation (RAG). This allows the creation of more factually grounded and diverse data by retrieving relevant information before generating augmented examples.

- LLM2LLM: This is an iterative data augmentation strategy. It fine-tunes a “student” SLM on an initial dataset, identifies samples where the student performs poorly, and then uses a more capable “teacher” LLM to generate more “hard” or challenging samples specifically related to those difficult cases. These newly generated hard samples are added back to the training data, allowing the SLM to focus on areas where it needs more learning.
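The LLM2LLM loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: `fine_tune`, `teacher_augment`, and the toy stand-ins below are hypothetical callables, not part of any published LLM2LLM code.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (prompt, expected answer)


def llm2llm_round(
    train_set: List[Example],
    fine_tune: Callable[[List[Example]], Callable[[str], str]],
    teacher_augment: Callable[[Example], List[Example]],
) -> List[Example]:
    """Run one augmentation round and return the enlarged training set."""
    student = fine_tune(train_set)  # 1. fine-tune the student SLM
    # 2. keep the examples the student still gets wrong
    hard = [ex for ex in train_set if student(ex[0]) != ex[1]]
    # 3. ask the teacher for new samples related to each hard case
    new_examples = [aug for ex in hard for aug in teacher_augment(ex)]
    # 4. add the new hard samples back to the training data
    return train_set + new_examples


# Toy stand-ins (hypothetical): the "student" only memorises short prompts,
# and the "teacher" writes one variant of each failed example.
def toy_fine_tune(data: List[Example]) -> Callable[[str], str]:
    known = {p: a for p, a in data if len(p) <= 5}
    return lambda prompt: known.get(prompt, "")


def toy_teacher(example: Example) -> List[Example]:
    prompt, answer = example
    return [(prompt + " (variant)", answer)]


seed = [("2+2", "4"), ("17*3+4", "55")]
augmented = llm2llm_round(seed, toy_fine_tune, toy_teacher)
```

In practice the round would be repeated several times, with a real fine-tuning job and a real teacher model behind the two callables.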

Model Compression Techniques

Model compression techniques are fundamental to the development and deployment of Small Language Models (SLMs). These methods aim to reduce the size, memory footprint, and computational complexity of large pre-trained language models (LLMs) or even existing SLMs, while striving to maintain their performance. Effectively, they allow us to distill the “knowledge” of a larger, more resource-intensive model into a more compact form suitable for resource-constrained environments or specific applications.

Here’s a breakdown of the primary model compression techniques, categorized into pruning, quantization, and knowledge distillation:

Pruning Techniques

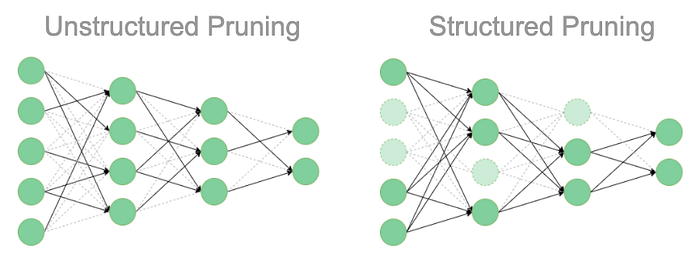

Pruning is a model optimization technique that reduces the number of parameters in a neural network by removing “less significant” weights, neurons, or connections. The goal is to achieve computational efficiency and lower memory usage without a substantial drop in performance.

Unstructured Pruning: This approach involves removing individual weights within the model, regardless of their position in the network structure. It offers fine-grained control and can lead to very high sparsity (a large percentage of zero weights).

Benefits: Can achieve the highest compression ratios and potentially greater accuracy retention for a given sparsity level because it can remove exactly the least important connections.

Challenges: The resulting sparse matrices are often “irregular” (meaning the zero values are scattered randomly), which can be challenging for standard hardware (like GPUs) to accelerate efficiently. Specialized hardware or software (e.g., NVIDIA’s Tensor Cores with specific sparse patterns) is often needed to fully leverage the computational benefits.

Ex: SparseGPT: Formulates the pruning task as a sparse regression problem. It prunes weights in a layer-wise fashion, optimizing both the remaining and pruned weights to minimize reconstruction error. It’s efficient enough to handle very large models like OPT-175B and BLOOM-176B.
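To make unstructured pruning concrete, here is a minimal sketch of magnitude pruning, the simplest criterion: zero out the weights with the smallest absolute value. Note that this is a deliberately simpler technique than SparseGPT (it does not solve a regression problem or update the remaining weights); the function name and the toy matrix are assumptions for illustration.

```python
from typing import List


def magnitude_prune(weights: List[List[float]], sparsity: float) -> List[List[float]]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    flat = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(flat) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = flat[n_prune - 1]  # largest magnitude that still gets pruned
    remaining = n_prune            # guards against over-pruning on ties
    pruned = []
    for row in weights:
        new_row = []
        for w in row:
            if remaining > 0 and abs(w) <= threshold:
                new_row.append(0.0)
                remaining -= 1
            else:
                new_row.append(w)
        pruned.append(new_row)
    return pruned


W = [[0.9, -0.05, 0.3], [0.01, -0.7, 0.2]]
W_sparse = magnitude_prune(W, sparsity=0.5)
# W_sparse == [[0.9, 0.0, 0.3], [0.0, -0.7, 0.0]]
```

The zeros end up scattered across the matrix, which is exactly the "irregular" pattern that standard dense hardware struggles to exploit.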

Structured Pruning: Unlike unstructured pruning, structured pruning removes entire groups of parameters, such as entire neurons, channels, or even layers. This results in a “denser” (but smaller) remaining network that is much easier for standard hardware to accelerate because it avoids irregular sparsity.

Benefits: Leads to more predictable and often greater real-world speedups on general-purpose hardware, as it creates smaller, dense sub-networks.

Challenges: Can sometimes lead to a greater drop in performance for a given compression ratio compared to unstructured pruning, as it’s less granular in what it removes.

Ex: Neuron Sparsity: Many structured pruning approaches focus on identifying and removing less important neurons. For instance, Li et al. (2023b) observed that feed-forward networks in LLMs often exhibit prevalent sparsity in neurons, suggesting they can be pruned.

Contextual Sparsity: Proposes using small neural networks to dynamically prune parts of the model based on the specific input, allowing for adaptive compression.

Activation Function Modification: Changes the activation functions in pre-trained models to ReLU and then fine-tunes them. This encourages more activation sparsity, which can then be exploited by structured pruning methods.
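By contrast, structured pruning removes whole rows (neurons) and leaves a smaller dense matrix. The sketch below ranks neurons by the L2 norm of their weight row; that importance criterion is an assumption chosen for simplicity, not the method of any work cited above.

```python
import math
from typing import List, Tuple


def prune_neurons(weights: List[List[float]], keep: int) -> Tuple[List[List[float]], List[int]]:
    """Keep the `keep` rows (neurons) with the largest L2 norm; drop the rest."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    # In a real network, the matching input columns of the next layer
    # would have to be removed as well.
    return [weights[i][:] for i in kept], kept


W = [
    [0.9, -0.05, 0.3],
    [0.01, 0.02, -0.01],
    [0.4, -0.7, 0.2],
    [0.0, 0.1, 0.0],
]
W_small, kept_rows = prune_neurons(W, keep=2)
# kept_rows == [0, 2]; W_small is a dense 2x3 matrix
```

Because the result is simply a smaller dense matrix, no sparse kernels are needed to benefit from it.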

Quantization

Quantization is a technique that reduces the numerical precision of a model’s weights and/or activations, typically converting them from high-precision floating-point numbers (e.g., 32-bit FP32 or 16-bit FP16/BFLOAT16) to lower-precision integers (e.g., 8-bit INT8 or even 4-bit INT4).

Benefits:

- Lower precision numbers require less memory to store, making models smaller and enabling deployment on memory-constrained devices.

- Integer arithmetic operations are generally much faster and more energy-efficient than floating-point operations on modern hardware.

- Reduced data size means less data transfer, which can speed up overall inference.
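As a rough illustration of the idea, the following sketch applies symmetric per-tensor INT8 quantization to a handful of weights and then restores them. Real toolchains usually quantize per channel or per group; the helper names here are assumptions.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer codes."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)   # q == [50, -127, 0, 100]
restored = dequantize(q, scale)     # each value is off by at most scale / 2
```

The small weight 0.003 is rounded to zero, which shows where the accuracy loss of low-precision formats comes from.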

Key Approaches:

- Weight-Only Quantization: Focuses on quantizing only the model’s weights.

- GPTQ: A widely adopted post-training quantization method. It focuses on layer-wise, weight-only quantization and uses an approximate inverse Hessian matrix to minimize the reconstruction error when quantizing weights to low bitwidths (e.g., INT4). This allows for very aggressive compression with minimal accuracy loss.

- Weight and Activation Quantization: To fully leverage the benefits of fast integer matrix multiplication units (e.g., on GPUs), quantizing both weights and activations is increasingly important.

- AWQ (Activation-aware Weight Quantization): Recognizes that not all weights are equally important and that “salient” weights (those critical for performance) should be protected. AWQ takes activation distributions into account to assess weight importance and scales salient weight channels to minimize quantization error, enabling effective low-bit weight-only quantization.

- ZeroQuant: Another method that considers activations when determining weight importance for quantization, leading to better optimization for aggressive weight quantization.

- K/V Cache Quantization: Specifically quantizes the Key and Value (K/V) cache, which stores intermediate attention states. This is crucial for efficient long-sequence length inference in generative models, as the K/V cache can consume significant memory.

- Addressing Activation Outliers: A common challenge in activation quantization is the presence of “outliers” — a few activation values that are much larger than the rest of the distribution. These outliers can severely degrade the quality of low-bit quantization.

- SmoothQuant: Addresses activation outliers by “smoothing” them. It mathematically transforms the problem by migrating the quantization difficulty from activations to weights. This means it scales down the activation outliers by scaling up the corresponding weights, making both activations and weights more amenable to low-bit (e.g., 8-bit) quantization without significant accuracy loss.
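The SmoothQuant rescaling can be sketched as follows. Each input channel j gets a factor s_j = max|X_j|^alpha / max|W_j|^(1 - alpha); activations are divided by it and the matching weight row is multiplied by it, so the matrix product is unchanged. The toy matrices and helper names are assumptions, and the sketch assumes no channel is entirely zero.

```python
from typing import List

Matrix = List[List[float]]


def smooth(X: Matrix, W: Matrix, alpha: float = 0.5):
    """Migrate outlier magnitude from activations X (tokens x in) to weights W (in x out)."""
    n_in = len(W)
    scales = []
    for j in range(n_in):
        act_max = max(abs(row[j]) for row in X)  # largest activation in channel j
        w_max = max(abs(w) for w in W[j])        # largest weight fed by channel j
        scales.append(act_max ** alpha / w_max ** (1 - alpha))
    X_s = [[row[j] / scales[j] for j in range(n_in)] for row in X]
    W_s = [[w * scales[j] for w in W[j]] for j in range(n_in)]
    return X_s, W_s, scales


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


X = [[1.0, 64.0], [-2.0, 48.0]]   # channel 1 carries the outliers
W = [[0.5, -0.25], [0.25, 0.5]]
X_s, W_s, s = smooth(X, W)
# matmul(X_s, W_s) matches matmul(X, W) up to floating-point error,
# but the largest activation shrinks from 64 to roughly 5.7.
```

With alpha = 0.5 the activation and weight ranges of each channel end up balanced, which is what makes both sides easier to quantize.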

Knowledge Distillation Techniques

Knowledge distillation is a technique where a smaller, more efficient model (the “student”) is trained to replicate the behavior and knowledge of a larger, more complex model (the “teacher”). The student learns not just from the “hard labels” (e.g., the correct class in a classification task), but also from the “soft targets” (the probability distributions or logits) produced by the teacher model. This allows the student to learn a more nuanced and generalizable representation.

- Classical Distillation: The student model is trained with a loss function that combines the standard cross-entropy loss with a distillation loss that measures the similarity between the student’s output probabilities and the teacher’s “soft” probabilities (often with a temperature scaling to soften them further).

- Deriving SLMs from LLMs: Knowledge distillation is a powerful approach for creating SLMs from LLMs. The LLM acts as the teacher, guiding the training of a much smaller student model.

Babyllama: Was one of the first works to develop a compact 58M parameter language model by distilling knowledge from a larger Llama model (the teacher). A key finding was that distillation from a robust teacher can outperform traditional pre-training of a similarly sized model on the same dataset, suggesting that the teacher effectively guides the student’s learning process.

Other work has introduced modifications to the distillation loss itself to enable student models to generate higher-quality responses, with improved calibration and reduced exposure bias (where a model’s own generation errors compound over time).

- Addressing Practical Issues in Distillation:

Tokenizer Mismatch/No Teacher Data: Traditional distillation works best when the teacher and student share the same tokenizer and the teacher’s pre-training data is available. Boizard et al. (2024) addressed this by proposing a universal logit distillation loss inspired by optimal transport theory, making distillation more robust to such mismatches.

- Combining Distillation with Other Techniques:

Distillation + Pruning: Researchers have shown that an iterative approach of pruning a large language model and then retraining it with distillation losses can lead to very strong smaller models. This leverages the benefits of both compression techniques.

- Beyond Logit Distillation (Additional Supervision): Recent advancements have explored incorporating more granular or semantic supervision during distillation to enhance the student’s learning.

“Rationales” as Supervision: Prior work found that providing “rationales” (explanations or intermediate steps of reasoning) as an additional source of supervision during distillation makes the process more sample-efficient. The distilled model, in this case, even outperformed larger models on certain reasoning benchmarks (NLI, Commonsense QA, arithmetic reasoning), indicating that the student can learn not just “what” to output, but “why.”

Other Forms of Supervision: Similar efforts are exploring distilling knowledge related to attention mechanisms, hidden states, or specific intermediate representations of the teacher model to guide the student’s internal learning process.
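To ground the classical distillation objective described earlier in this section, here is a minimal sketch of the combined loss for a single example: cross-entropy on the hard label plus a temperature-softened KL term against the teacher. The weighting `alpha`, the temperature, and the T² factor follow the commonly used formulation; the logits are made-up values for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """alpha * CE(hard label) + (1 - alpha) * T^2 * KL(teacher || student)."""
    ce = -math.log(softmax(student_logits)[label])
    p_t = softmax(teacher_logits, temperature)   # teacher "soft targets"
    p_s = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(p_t, p_s) if t > 0)
    return alpha * ce + (1 - alpha) * temperature ** 2 * kl


teacher = [2.0, 1.0, 0.1]
loss_match = distillation_loss([2.0, 1.0, 0.1], teacher, label=0)  # KL term is zero
loss_off = distillation_loss([0.1, 1.0, 2.0], teacher, label=0)    # student disagrees
```

A student that disagrees with the teacher is penalized by both terms, so `loss_off` is larger than `loss_match`.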

Evaluation

Evaluating Small Language Models (SLMs) is a nuanced process that goes beyond just traditional accuracy metrics. Because SLMs are often designed for specific constraints — like limited computational resources, privacy concerns, or on-device deployment — their evaluation must consider these factors alongside their linguistic performance. The process involves selecting appropriate datasets and metrics that directly assess how well an SLM meets these practical requirements.

Datasets for SLM Evaluation

The choice of datasets for evaluating SLMs is critical as they need to reflect the real-world conditions and tasks for which the SLM is intended. These datasets are often categorized based on the specific constraints they help address:

Efficient Inference: This setting prioritizes speed and responsiveness. Datasets used here are designed to measure how quickly an SLM can process input and generate output, with minimal latency and high throughput. Tasks typically include:

- Question Answering (QA): Such as SQuAD (Stanford Question Answering Dataset), which involves answering questions based on provided text passages.

- Conversational QA: Like CoQA (Conversational Question Answering), where models must answer a series of interconnected questions within a conversation, requiring context tracking.

- Knowledge-intensive QA: Including Natural Questions (NQ) and TriviaQA which test a model’s ability to answer questions that require broad knowledge, often by retrieving information from large corpora.

- General Language Understanding Benchmarks: SuperGLUE is a more rigorous benchmark than its predecessor, GLUE, designed to test a model’s general language understanding across a diverse set of challenging tasks, providing a comprehensive assessment for models needing fast response times.

- Other datasets also contribute to evaluating the speed of SLMs across various NLP tasks.

Privacy-Preserving: These datasets are crucial for developing SLMs that can operate on sensitive information while upholding privacy standards. They often incorporate techniques like differential privacy or are curated from de-identified data.

- PrivacyGLUE: A benchmark dataset specifically designed to evaluate NLP models’ language understanding capabilities within the domain of privacy policies, often applying differential privacy techniques to common tasks like sentiment analysis.

- Anonymized Clinical Datasets: Examples like MIMIC (Medical Information Mart for Intensive Care) and n2c2 datasets contain de-identified clinical notes. These datasets are vital for training medical SLMs that can process patient data without compromising personal health information.

- Federated Datasets (e.g., LEAF): These datasets are structured to support federated learning frameworks, where data remains distributed across local devices (e.g., smartphones) rather than being centralized. This inherent “privacy by design” approach allows SLMs to be trained on sensitive user data without the data ever leaving the user’s device.

TinyML and On-device Deployment: This setting focuses on deploying SLMs in highly resource-constrained environments. Evaluation datasets and frameworks here are tailored to assess performance under severe memory, computation, and power limitations.

- TinyBERT Framework: TinyBERT, a distilled version of BERT, is not just a model but also a framework optimized for training and evaluating SLMs for on-device applications. It ensures models are optimized for both size and speed, meeting minimal latency requirements typical of embedded systems.

- OpenOrca Subsets: These subsets provide carefully curated datasets that balance performance needs with resource constraints. They are designed to help train and evaluate SLMs that can run effectively on devices with limited capabilities.

Metrics for SLM Evaluation

Beyond traditional NLP metrics like F1-score, accuracy, or BLEU/ROUGE for generation, SLMs are evaluated using a specialized set of metrics that directly quantify their efficiency and adherence to resource or privacy constraints.

Latency: Measures the speed of model operation.

- Inference Time: This metric quantifies the time taken for a model to process an input and generate an output. It’s crucial for user-facing applications where immediate feedback is necessary (e.g., real-time chatbots, voice assistants). Often measured in milliseconds per token or per query.

- Throughput: Evaluates the number of tokens or samples a model can process within a given period (e.g., tokens per second, samples per hour). High throughput is vital for large-scale tasks, batch processing, or applications requiring a high volume of responses.
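Both latency metrics can be measured with a simple timing harness. The sketch below assumes a hypothetical `generate` callable that returns a list of tokens; `fake_generate` is only a stand-in so the example runs without a model.

```python
import time
from typing import Callable, Dict, List


def benchmark(generate: Callable[[str], List[str]], prompts: List[str]) -> Dict[str, float]:
    """Measure mean per-query latency and overall token throughput."""
    latencies, total_tokens = [], 0
    start = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        tokens = generate(prompt)
        latencies.append(time.perf_counter() - t0)
        total_tokens += len(tokens)
    elapsed = time.perf_counter() - start
    return {
        "mean_latency_ms": 1000 * sum(latencies) / len(latencies),
        "tokens_per_second": total_tokens / elapsed,
    }


def fake_generate(prompt: str) -> List[str]:
    """Hypothetical stand-in for a model call."""
    time.sleep(0.001)
    return prompt.split()


stats = benchmark(fake_generate, ["hello small model", "how fast are you today"])
```

For real measurements it is worth adding warm-up runs and reporting percentiles (for example p95) rather than only the mean.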

Memory: Assesses the model’s memory efficiency.

- Peak Memory Usage: Captures the maximum amount of memory (RAM or VRAM) consumed by the model during inference. This is a critical metric for deployment on devices with strict memory limits.

- Memory Footprint: Measures the total size of the model (parameters, architecture, etc.) when loaded into memory. A smaller memory footprint directly translates to easier deployment on resource-constrained devices.

- Compression Ratio: Quantifies the efficiency of compression techniques applied to the model (e.g., original size / compressed size). A higher ratio indicates more effective compression, allowing the model to be more compact without (ideally) sacrificing performance.
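A back-of-the-envelope calculation shows how these memory metrics relate. The sketch counts weights only (no K/V cache, activations, or quantization metadata), and the 7-billion-parameter size is just an example.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to store the weights, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


fp16_gb = weight_memory_gb(7e9, 16)       # 14.0 GB at 16 bits per weight
int4_gb = weight_memory_gb(7e9, 4)        # 3.5 GB at 4 bits per weight
compression_ratio = fp16_gb / int4_gb     # 4.0 (original size / compressed size)
```

Peak memory usage at inference time will be higher than these figures, since it also includes the runtime state mentioned above.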

Privacy: Metrics specifically designed to quantify how well an SLM protects sensitive information.

- Privacy Budget: Rooted in differential privacy, this metric quantifies the maximum amount of information that could potentially be leaked about any single data point used during training or inference. A lower privacy budget indicates stronger privacy guarantees.

- Noise Level: Measures the amount of random noise added to a model’s outputs or gradients to ensure differential privacy. It highlights the trade-off between privacy (more noise for stronger privacy) and accuracy (more noise can reduce accuracy).
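The link between privacy budget and noise level can be illustrated with the classic Gaussian mechanism, where the noise standard deviation is sqrt(2 ln(1.25/delta)) * sensitivity / epsilon for 0 < epsilon < 1. This is a sketch of that textbook bound only; production systems typically use tighter accounting, and the function names are assumptions.

```python
import math
import random


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float = 1.0) -> float:
    """Noise standard deviation for the classic (epsilon, delta) Gaussian mechanism."""
    if not 0 < epsilon < 1:
        raise ValueError("this bound is stated for 0 < epsilon < 1")
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


def privatize(value: float, epsilon: float, delta: float, rng: random.Random) -> float:
    """Add calibrated Gaussian noise to a single released value."""
    return value + rng.gauss(0.0, gaussian_sigma(epsilon, delta))


sigma_strict = gaussian_sigma(epsilon=0.1, delta=1e-5)   # small budget, more noise
sigma_loose = gaussian_sigma(epsilon=0.5, delta=1e-5)    # larger budget, less noise
noisy = privatize(10.0, epsilon=0.5, delta=1e-5, rng=random.Random(0))
```

Cutting epsilon by a factor of five multiplies the required noise by five, which is the privacy versus accuracy trade-off in numeric form.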

Energy Optimization: Metrics that evaluate the energy consumption of SLMs, crucial for embedded systems and mobile devices.

- Energy Efficiency Ratio: Evaluates the energy consumed by the model relative to its overall performance (e.g., Joules per inference or Joules per accurately processed token). This provides insights into the operational energy intensity of an SLM.

- Thermal Efficiency: Measures how effectively the model’s computations convert electrical energy into useful work, rather than waste heat. High thermal efficiency is important to prevent devices from overheating.

- Idle Power Consumption: Measures the energy consumed by the model or the device when the model is loaded but not actively processing tasks. This is crucial for applications that are always “on” or need to respond quickly from a low-power state.

Small Language Models are the Future of Agentic AI

The case for Small Language Models (SLMs) being the future of Agentic AI is robust and multifaceted, rooted in their evolving capabilities, economic advantages, operational flexibility, and how agents inherently constrain the functionality of even the largest language models. The provided text, along with the diagram, provides a clear framework for understanding this shift.

SLMs Are Already Sufficiently Powerful for Use in Agents

The core premise here is that recent advancements have brought the capabilities of SLMs much closer to those of previous LLMs, making them perfectly suitable for many agentic tasks.

- Steeper Scaling Curve: While the general “scaling laws” (where larger models perform better) still hold, the rate at which capabilities improve with size is becoming steeper for SLMs. This means a relatively small increase in SLM size or architectural refinement can lead to significant gains in performance, often rivaling older, much larger models.

- Meeting or Exceeding Prior LLM Performance: Well-designed SLMs are demonstrating an ability to match or surpass the task performance previously achievable only by models significantly larger. This is a critical development, as it removes the performance bottleneck that once necessitated LLMs for complex tasks.

- Key Capabilities for Agentic Context: The text highlights specific capabilities where SLMs are proving their aptitude:

Commonsense Reasoning: An indicator of basic understanding and the ability to navigate real-world situations, crucial for agents.

Tool Calling and Code Generation: As shown in the provided Figure 1, agents heavily rely on the LM’s ability to “call” external tools or generate code to interact with the environment. SLMs’ proficiency in this area (correctly communicating across the model → tool/code interface) is vital for agentic workflows.

Instruction Following: The agent’s ability to correctly interpret and act upon directives (responding back across the code ← model interface) is fundamental. SLMs’ improved instruction following means they can reliably execute the steps planned by an agentic system.

SLMs Are More Economical in Agentic Systems

Economic efficiency is a major driver behind the shift towards SLMs in agentic workflows. Agents, by their nature, involve multiple sequential steps and interactions, making cumulative costs a significant factor.

Inference Efficiency:

- 10–30x Cheaper: Serving a 7 billion parameter SLM is dramatically more cost-effective (10–30 times cheaper) in terms of latency, energy consumption, and Floating Point Operations (FLOPs) compared to a 70–175 billion parameter LLM. This is a game-changer for deploying agents at scale, where hundreds or thousands of LM inferences might occur for a single complex task.

- Real-time Responses: Lower latency enables real-time agentic responses, which is crucial for interactive applications and seamless user experiences.

- Reduced Infrastructure Costs: SLMs require less or no parallelization across multiple GPUs and nodes, leading to lower maintenance and operational expenses for the serving infrastructure.

- Support from Inference OS: Tools like NVIDIA Dynamo are emerging to explicitly support high-throughput, low-latency SLM inference in both cloud and edge deployments, further solidifying their economic viability.
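A quick sanity check on the compute side of that cost gap uses the common rule of thumb that a dense transformer's forward pass costs roughly 2 FLOPs per parameter per generated token. The rule ignores attention over long contexts, batching, and hardware utilization, so treat the numbers as an order-of-magnitude sketch rather than a cost model.

```python
def forward_flops_per_token(n_params: float) -> float:
    """Rule-of-thumb forward-pass cost: about 2 FLOPs per parameter per token."""
    return 2 * n_params


slm_flops = forward_flops_per_token(7e9)
flops_ratio = {
    size_b: forward_flops_per_token(size_b * 1e9) / slm_flops
    for size_b in (70, 175)
}
# flops_ratio == {70: 10.0, 175: 25.0}
```

On compute alone, a 70B to 175B model is about 10 to 25 times more expensive per token than a 7B model, which is in line with the range quoted above.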

Fine-tuning Agility:

- GPU-Hours, Not Weeks: Parameter-efficient fine-tuning techniques (e.g., LoRA, DoRA) for SLMs require only a few GPU-hours. This means new behaviors can be added, bugs fixed, or the model specialized overnight, enabling rapid iteration and adaptation of agent capabilities. This agility is unachievable with the weeks-long fine-tuning cycles often needed for LLMs.

Edge Deployment:

- Local Execution: Advances in on-device inference systems (like ChatRTX) allow SLMs to execute locally on consumer-grade GPUs. This provides real-time, offline agentic inference with lower latency and significantly stronger data control, as sensitive information doesn’t need to leave the device.

Parameter Utilization:

- More Efficient Activation: LLMs, despite their massive parameter count, often only engage a fraction of their parameters for any single input (sparse activation). SLMs, conversely, show more subdued sparse behavior, suggesting that a larger proportion of their parameters are actively contributing to the inference cost. This implies SLMs are fundamentally more efficient for the computation they do, with less “wasted” overhead from inactive parameters.

SLMs Are More Flexible

Flexibility is paramount for agentic systems that need to adapt to evolving user needs, integrate new tools, or handle diverse routines. SLMs offer superior operational flexibility due to their lower associated costs.

- Affordable Specialization: The reduced pre-training and fine-tuning costs make it much more affordable and practical to train, adapt, and deploy multiple specialized expert SLMs for different agentic routines. Instead of one giant, generalized model, you can have a fleet of highly efficient specialists.

- Rapid Iteration and Adaptation: This efficiency enables rapid iteration and adaptation. New behaviors can be quickly added, new output formats supported, or specific agentic routines refined without the prohibitive costs and time associated with adapting a massive LLM. This allows agentic systems to evolve quickly with business demands.

Agents Expose Only Very Narrow LM Functionality

This argument fundamentally challenges the necessity of LLMs for most agentic tasks by highlighting how agents, by design, constrain the underlying language model’s broad capabilities.

- LLM as a “Restricted Gateway”: An AI agent effectively acts as a heavily instructed and externally choreographed gateway to a language model. Through meticulously written prompts and orchestrated context management, even a powerful generalist LLM is restricted to operate within a very small subset of its vast skill set.

- SLM Sufficiency: The argument posits that if an LLM is being intentionally constrained to perform a narrow function (e.g., extracting specific entities, generating a particular API call, following a precise instruction), then an appropriately fine-tuned SLM can achieve the same result. The SLM, having been optimized for that specific narrow function, will perform it with the added benefits of increased efficiency and greater flexibility.

LLM-to-SLM Agent Conversion Algorithm

The LLM-to-SLM Agent Conversion Algorithm outlines a strategic and practical pathway for transitioning an agentic AI system from relying on a large, general-purpose Large Language Model (LLM) to a more efficient and specialized architecture leveraging Small Language Models (SLMs). This algorithm is designed to make the transition “painless” by systematically capturing, processing, and leveraging real-world usage data.

The core idea is to observe how a generalist LLM is used within an agent (which, as discussed, often means it’s being “constrained” to narrow tasks), then identify these narrow tasks, and finally train specialized SLMs to handle them more efficiently.

Here’s a step-by-step explanation of the algorithm:

Secure Usage Data Collection

The initial and crucial step involves setting up robust logging to observe how the existing agentic system (powered by an LLM) operates in the real world.

- Instrumentation: Implement logging mechanisms to capture all non-Human-Computer Interaction (HCI) agent calls. This means logging everything the agent does internally, such as:

- Input prompts: The specific queries or instructions the agent receives or passes to its internal LM.

- Output responses: The language model’s generated replies or actions.

- Contents of individual tool calls: What data or instructions the agent sends to external tools (e.g., API calls, database queries).

- Latency metrics (optional): Recording how long each step takes can be valuable for later optimization and identifying bottlenecks.

- Security and Privacy: It’s paramount to implement secure logging practices:

- Encrypted logging pipelines: To protect data in transit and at rest.

- Role-based access controls: To restrict who can access the sensitive usage data.

- Anonymization: Crucially, all data should be anonymized with respect to its origins (e.g., user IDs) before storage to prevent data leaks and protect privacy, especially when this data will later be used to train SLMs that might serve different user accounts.

- Illustration: The “logger” component in Figure 1 (from the previous context) visually represents this step, capturing interactions between the LM and various tools.
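A minimal sketch of such instrumentation is shown below. It records the fields listed above and replaces the user ID with a keyed hash before storage. Everything here is an assumption for illustration: the field names, the in-memory `sink`, and the hard-coded key (a real deployment would load the key from a secrets manager and add encryption and access control, which this sketch does not cover). Keyed hashing is pseudonymization, a weaker guarantee than full anonymization.

```python
import hashlib
import hmac
import json
import time
from typing import Any, Dict, List, Optional

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical placeholder


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed hash so raw IDs never reach the log."""
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def log_agent_call(
    sink: List[str],
    user_id: str,
    prompt: str,
    response: str,
    tool_calls: List[Dict[str, Any]],
    latency_ms: Optional[float] = None,
) -> Dict[str, Any]:
    """Append one agent call to the log as a JSON line."""
    record = {
        "ts": time.time(),
        "user": pseudonymize(user_id),
        "prompt": prompt,
        "response": response,
        "tool_calls": tool_calls,
        "latency_ms": latency_ms,
    }
    sink.append(json.dumps(record))
    return record


log: List[str] = []
rec = log_agent_call(
    log,
    user_id="alice@example.com",
    prompt="Find the order status for the latest order.",
    response='{"tool": "get_order_status"}',
    tool_calls=[{"name": "get_order_status", "args": {"which": "latest"}}],
    latency_ms=182.0,
)
```

The prompt and response fields may still contain sensitive text at this stage; removing it is the job of the curation step that follows.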

Data Curation and Filtering

Once a substantial amount of usage data has been collected, the next step is to clean and prepare it for training.

- Collection Threshold: Continue collecting data until a sufficient volume is accumulated. A rule of thumb suggested is 10k-100k examples, which is generally enough for fine-tuning SLMs.

- Sensitive Data Removal/Masking: This is a critical privacy and security step. All Personally Identifiable Information (PII), Protected Health Information (PHI), or any other application-specific sensitive data must be removed or masked. If this data were to be included in the SLM’s training, it could lead to data leaks or unintended exposure across different user accounts.

- Automated Tools: Utilize popular automated tools for dataset preparation to detect and mask/remove common sensitive data types.

- Paraphrasing/Obfuscation: For application-specific inputs (e.g., legal documents, internal company memos), automated paraphrasing techniques can be used to obfuscate named entities and numerical details. The goal is to retain the general information content relevant for the task while eliminating sensitive specifics.
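As a small illustration of automated masking, the sketch below replaces e-mail addresses and phone-like numbers with placeholders using regular expressions. The two patterns are simplified assumptions and will miss many real-world formats; dedicated PII detection tools cover far more entity types.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Phone-like: optional "+", then at least nine characters of digits and separators.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


masked = mask_pii("Contact jane.doe@example.com or +1 (555) 010-9999 about order 4821.")
# masked == "Contact [EMAIL] or [PHONE] about order 4821."
```

Short numbers such as the order ID are left intact, which keeps the task-relevant content while removing the contact details.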

Task Clustering

This step involves analyzing the collected and cleaned data to identify distinct tasks or operational patterns that can be offloaded to specialized SLMs.

- Unsupervised Clustering: Apply unsupervised clustering techniques to the collected prompts and agent actions. The aim is to group similar requests or internal operations together.

- Identify Recurring Patterns: Look for clusters that represent common agent behaviors.

- Define Candidate Tasks: These clusters will naturally define candidate tasks suitable for SLM specialization. The granularity of these tasks can vary depending on the diversity of the agent’s operations.

Common Examples of Specialized Tasks:

- Intent Recognition: Identifying the user’s underlying goal (e.g., “book flight,” “check order status”).

- Data Extraction: Pulling specific information (e.g., dates, names, product IDs) from unstructured text.

- Summarization of Specific Document Types: Generating summaries for specific kinds of documents (e.g., legal contracts, customer reviews).

- Code Generation (with respect to tools): Generating specific API calls or code snippets for pre-defined tools used by the agent.
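To show what the clustering step does, here is a dependency-free sketch that groups prompts by word overlap (Jaccard similarity) with a greedy threshold rule. This is a stand-in chosen so the example runs with the standard library only; a real pipeline would more likely cluster sentence embeddings with an algorithm such as k-means or HDBSCAN. The prompts and the 0.5 threshold are made up.

```python
from typing import List, Set


def tokens(text: str) -> Set[str]:
    return set(text.lower().split())


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_prompts(prompts: List[str], threshold: float = 0.5) -> List[List[str]]:
    """Assign each prompt to the most similar existing cluster, or start a new one."""
    clusters = []  # each cluster: {"tokens": token set of its first member, "members": [...]}
    for prompt in prompts:
        toks = tokens(prompt)
        best = max(clusters, key=lambda c: jaccard(toks, c["tokens"]), default=None)
        if best is not None and jaccard(toks, best["tokens"]) >= threshold:
            best["members"].append(prompt)
        else:
            clusters.append({"tokens": toks, "members": [prompt]})
    return [c["members"] for c in clusters]


groups = cluster_prompts([
    "extract the date from this email",
    "extract the date from this invoice",
    "summarize this customer review",
    "summarize this customer complaint",
])
# Two groups emerge: a data-extraction task and a summarization task.
```

Each resulting group is a candidate task that a specialized SLM could take over.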

SLM Selection

For each identified specialized task, the next step is to choose suitable SLM candidates from the available pool.

Criteria for Selection: This involves a multi-criteria evaluation:

- Inherent Capabilities: Does the SLM possess the core abilities needed for the task (e.g., strong instruction following, good reasoning for its size, sufficient context window size)?

- Benchmark Performance: How does the SLM perform on public benchmarks relevant to the task type?

- Licensing: Is the SLM’s license compatible with your deployment and usage plans (e.g., open source, commercial)?

- Deployment Footprint: Crucially, assess the SLM’s memory consumption and computational requirements during inference to ensure it fits the target environment (e.g., edge device, specific server configuration).

Candidate Models: Referencing a list of known efficient SLMs (like those discussed in Section 3.2 of the original text, such as Phi, Gemma, Mistral 7B, etc.) provides a good starting point for selection.

Specialized SLM Fine-Tuning

This is the core training phase, where the selected SLMs are adapted to their specific tasks using the curated data.

- Task-Specific Dataset Preparation: For each selected SLM candidate and its corresponding task, create a specific training dataset from the curated data gathered in the Data Curation and Filtering (S2) and Task Clustering (S3) steps.

- Fine-tuning: Train the chosen SLMs on these specialized datasets.

- Parameter-Efficient Fine-Tuning (PEFT): Techniques like LoRA or QLoRA are highly recommended. They significantly reduce computational costs and memory requirements for fine-tuning, making the process much more accessible and faster. This involves only updating a small fraction of the model’s parameters.

- Full Fine-tuning (Optional): If resources permit and maximal adaptation to the new task is absolutely critical (e.g., for very subtle linguistic nuances), full fine-tuning (updating all parameters) can also be considered, but it’s more resource-intensive.

- Knowledge Distillation (Optional but Recommended): In some cases, it’s highly beneficial to use knowledge distillation. Here, the specialized SLM (student) is trained to mimic the outputs and behaviors of the more powerful generalist LLM (teacher) on the task-specific dataset. This allows the SLM to “inherit” some of the more nuanced capabilities and reasoning patterns of the larger LLM, even for a specific task.
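The claim that PEFT updates only a small fraction of the parameters is easy to check for LoRA. For a frozen weight matrix of shape d_out x d_in, LoRA trains two low-rank factors B (d_out x r) and A (r x d_in) and computes y = Wx + (alpha / r) * B(Ax). The sizes below are illustrative, not taken from a specific model.

```python
from typing import List

Matrix = List[List[float]]


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


def matvec(M: Matrix, v: List[float]) -> List[float]:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float], alpha: float, rank: int) -> List[float]:
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]


full = 4096 * 4096                                 # 16,777,216 weights in one projection
lora = lora_trainable_params(4096, 4096, rank=8)   # 65,536 trainable weights
fraction = lora / full                             # 1/256, about 0.39%

# B starts at zero, so the adapted layer initially matches the frozen one.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
A = [[0.1, 0.2, 0.3]]
B = [[0.0], [0.0]]
y = lora_forward(W, A, B, [1.0, 2.0, 3.0], alpha=16, rank=1)   # [7.0, 2.0]
```

Training well under 1% of a layer's weights is what brings the cost down to a few GPU-hours for small models.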

Iteration and Refinement

The conversion process is not a one-time event but an ongoing cycle of improvement.

- Continuous Improvement Loop: Periodically retrain the specialized SLMs and, importantly, the “router model” (the component that decides which model or tool to use for a given input) with new usage data.

- Adaptation to Evolving Patterns: This continuous improvement loop ensures that the SLMs remain performant and adapt to evolving user needs, new tools, or changes in usage patterns.

- Returning to Earlier Steps: The cycle can return to step S2 (collecting more data for re-curation) or step S4 (re-evaluating and selecting new SLMs if current ones are no longer optimal for a task) as needed, creating a robust and adaptive agentic system.

As we’ve explored the fascinating world of Small Language Models, from their fundamental basics and unique architectures to their pivotal role in shaping agentic AI, it’s clear that these compact powerhouses are not just a trend — they’re the future of efficient and accessible artificial intelligence.

Thank you for taking the time to delve into this exciting topic. If you found this article insightful, please consider clapping to show your support and leaving a comment with your thoughts or questions.

Until next time.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.