Can ChatGPT think?

Last Updated on January 30, 2023 by Editorial Team

Author(s): Dor Meir

Originally published on Towards AI.

An answer from Leibowitz, Yovell, and ChatGPT

- The question

- The easy answer

- The mind-brain problem

- Epiphenomenalism — mind as a side-effect of brain

- Functionalism — mind as a software of brain

- How can we tell if ChatGPT thinks?

The question

The image above is a rather rich one, isn’t it? I was never skilled at writing Dall-E prompts that’ll generate decent images. What I did here was to ask ChatGPT to “write a Dall-E text to image prompt of ChatGPT thinking and make it sit in the same position as the famous Le Penseur statue”. I then took the text result and punched it into Dall-E, and got back this fine image.

This is just another example of how advanced ChatGPT’s communication level is. It makes me wonder: if ChatGPT is that complex in its calculations, and if its learned weights capture so much of human context, could this be a sign of human-like intelligence? Does this count as thinking in a way somewhat similar to how we think? Roughly speaking, it’s just statistics and learning from examples. But don’t we humans also learn from examples? I know my toddler does…

If ChatGPT’s trained weights capture so much of human context, could it be it has a human-like intelligence? Does this count as thinking, similar in a way to how we think?

First and foremost, let’s make a proper introduction. Here are ChatGPT’s own words about “itself”:

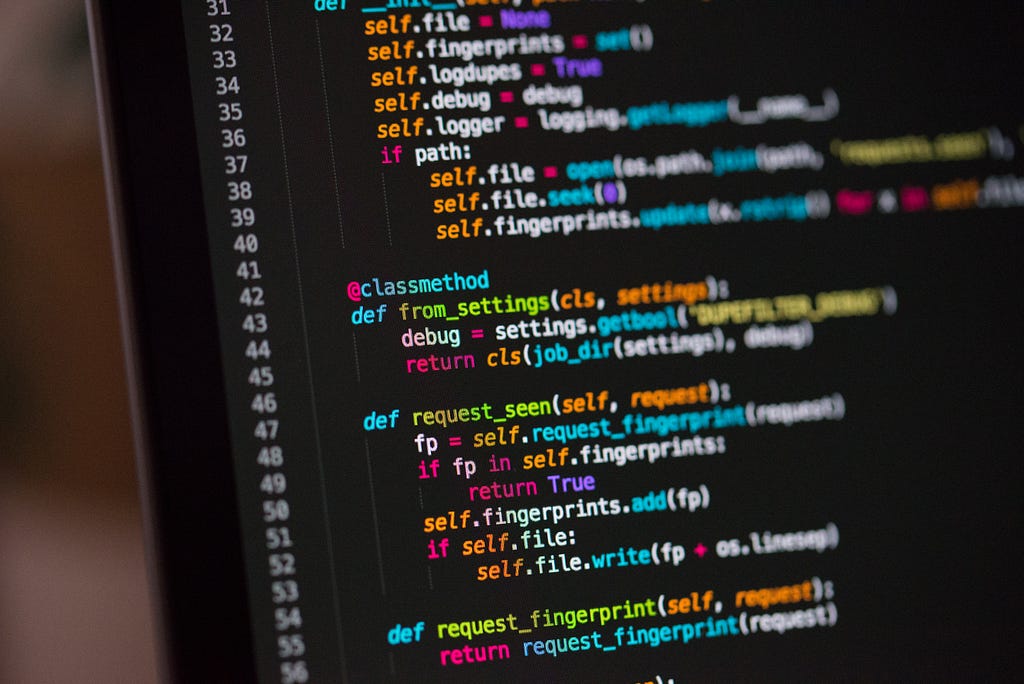

So ChatGPT claims it understands human-like language. Although it shows a remarkable chat capability, NLP researchers say its so-called “understanding” has many flaws. Here are a few notable ones:

- ChatGPT doesn’t really “know what it knows”. It doesn’t even know what “knowing” is. All it does is guess the next token (roughly, a word or a word fragment), and this guess may rest on well-founded acquired knowledge or may be a complete guess.

- ChatGPT doesn’t have a coherent and complete worldview: it has no way of knowing that different news stories it was trained on all describe the same thing.

- ChatGPT has no notion of time: it can concurrently “believe” that “Obama is the current president of the US”, “Trump is the current president of the US”, and “Biden is the current president of the US” are all valid statements.
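To make the “next token guess” concrete, here is a minimal Python sketch. It is a toy illustration, not ChatGPT’s actual implementation: the candidate tokens and their scores are made-up assumptions, and the helper names `softmax` and `guess_next_token` are hypothetical. It shows that the same sampling step runs whether the scores are sharply peaked (well-founded knowledge) or flat (a complete guess).

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def guess_next_token(scores, rng):
    """Sample one token in proportion to its probability."""
    probs = softmax(scores)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the token after "The capital of France is":
# one candidate dominates, so the guess is well founded.
well_founded = {"Paris": 9.0, "Lyon": 2.0, "Rome": 1.0}

# Hypothetical scores for the token after "The current US president is":
# with no notion of time, all three candidates look equally valid.
complete_guess = {"Obama": 1.0, "Trump": 1.0, "Biden": 1.0}

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    for name, scores in [("well founded", well_founded),
                         ("complete guess", complete_guess)]:
        print(name, softmax(scores), "->", guess_next_token(scores, rng))
```

In both cases the function returns a single token with no indication of how flat the underlying probabilities were, which is one way to picture a model that does not “know what it knows”.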

I’ll add that if you use it long enough, sooner or later you’ll see it spit out some rubbish, always articulated with immense confidence and in a nice and eloquent way. If you respond with “are you sure?”, it’ll ask for your forgiveness and then either fix its mistake or just repeat it all over again. Nonetheless, most of us can think of a person we’re familiar with who chats in a similar manner…

Even with these flaws, ChatGPT has an astonishing Q&A capability. Could it be evidence of the ability of ChatGPT and other Large Language Models to think, even at a very low and limited level? In my humble opinion, this question should not be laid at the doorstep of NLP researchers or Machine Learning experts alone. It ought to be handed over to philosophers as well, since it brings up more basic philosophical questions: in what way are we thinking? And how can we tell if another being is thinking?

Despite its flaws, ChatGPT has an astonishing Q&A capability. Could it be evidence for its ability to think, even at a very limited level? This question brings up philosophical questions: in what way are we thinking? And how can we tell if another being is thinking?

The easy answer

One way to know what the being in front of us thinks is to simply ask it:

That’s just too easy. I’m positive ChatGPT was instructed to answer this way, just as it was instructed to answer anything that even smells like financial consultation: “It is not appropriate or ethical for me, as a language model, to give investment advice”.

Notice, though, that when asked if it can think, ChatGPT answered, “I do not possess consciousness or self-awareness”. Besides being rather ironic (who is “I”?), this also teaches us that being able to think is correlated in ChatGPT’s training data with consciousness. We will soon see how these terms connect to the subject in question.

The mind-brain problem

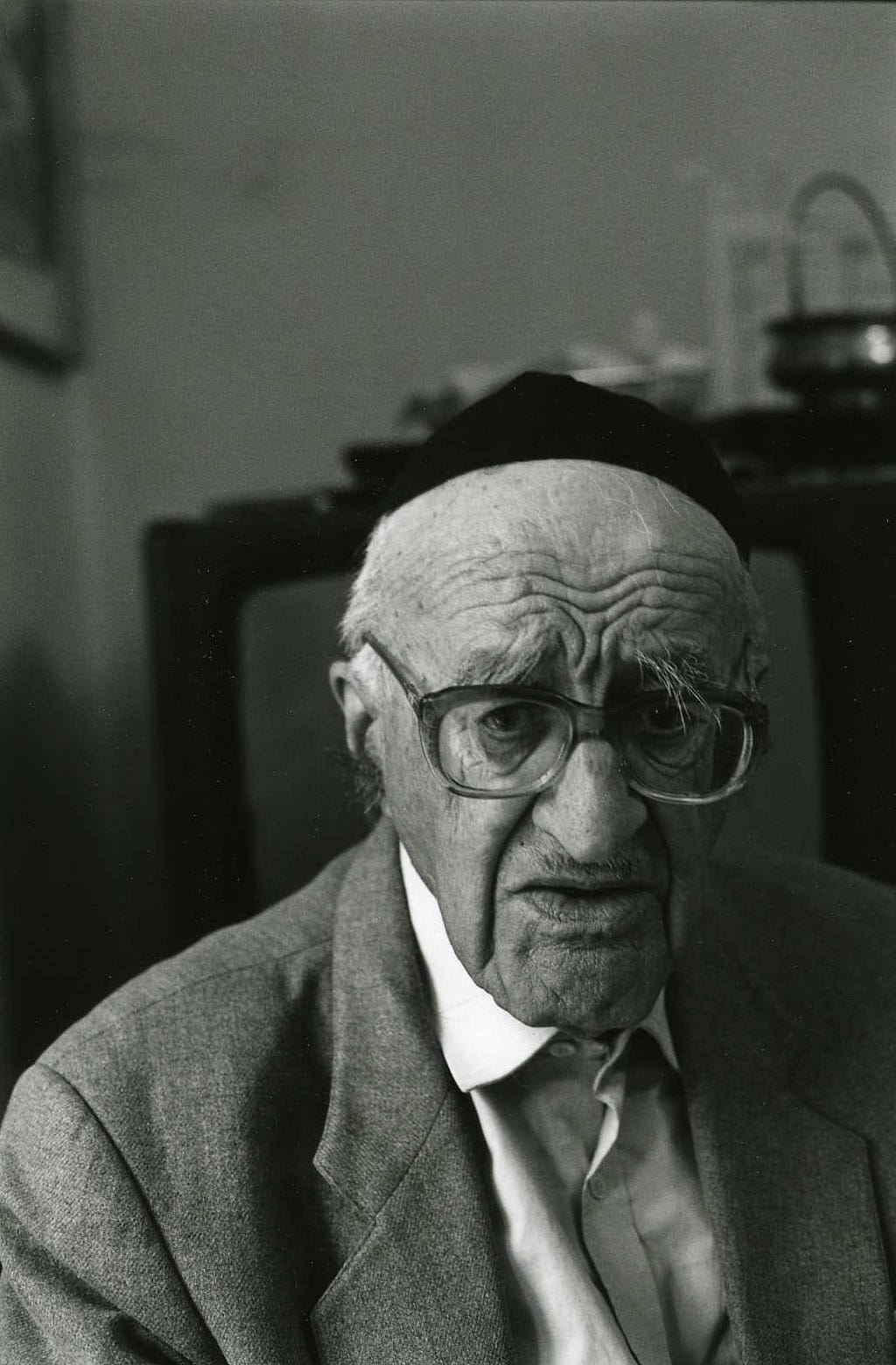

Since asking ChatGPT proved futile, we’ll resort, at least for now, to philosophy. I’ll give ChatGPT the honor of introducing the philosophers:

A “small” mistake here: Yoram Yovell is alive and well. Another detail was omitted: Yovell is Leibowitz’s grandson. More importantly, in 2005, Yovell replied to his grandfather’s 1974 paper explaining the fundamentals of the psycho-physical problem, better known as the mind-brain problem. The two essays were combined, along with an essay by the Nobel prize winner Kahneman, into a book in Hebrew called “נפש ומוח”, or “Mind and Brain”. Although thinking algorithms are not the book’s primary subject, it contains more than a few discussions of the possibility of an AI or a supercomputer developing a mind and the ability to think.

As I said above, the main topic of the book is the psychophysical problem. Let’s ask ChatGPT for an explanation of the problem:

Indeed. We don’t know, and some say we will never know, how our mental experiences reflect and affect the physical state of our brain, and vice versa: how exactly does the brain affect the mind? Yeshayahu Leibowitz defined it this way:

How does an event in the public domain of the physical spacetime, emerge as an event of a consciousness in the private domain?

We don’t know, and some say we will never know, how our mental experiences affect the physical state of our brain, and vice versa: how the brain affects the mind.

Leibowitz elaborated on four popular solutions to the mind-brain problem: interactionism, parallelism, epiphenomenalism, and identity theory. Don’t worry about all the isms; we’ll soon explain the most relevant one in plain English. But first, let’s see if ChatGPT is familiar with these terms:

The last sentence reveals a key question every solution to the mind-brain problem ought to answer:

Are mind and brain separate entities (dualism),

or are they essentially the same thing (monism)?

Out of the four solutions to the mind-brain problem, we’ll focus on the one that’s most compatible with the possibility of ChatGPT thinking and developing a mind of its own. Its name is epiphenomenalism.

Epiphenomenalism — mind as a side-effect of brain

The term “epiphenomenalism” comes from the Greek word “epi” meaning “upon” or “on top of”, and “phenomenon,” meaning “appearance” or “manifestation.” “Epiphenomenalism” is used to describe this theory because it suggests that mental states are like an “after-effect” or “manifestation on top of” the physical brain activity. Advocates of epiphenomenalism are monists who presume there is only one direction of influence between the brain and the mind: it starts from the brain and ends in the mind.

According to Leibowitz, some of the people who believe in epiphenomenalism, or the mind as just a side effect of the physical states in the brain, also argue that computers might develop a mind — as a side effect of their physical activity.

Some of the people who believe in epiphenomenalism as a solution to the mind-brain problem also presume that computers might develop a mind as a side effect of their physical activity.

We’ll now elaborate on this point. Advocates of epiphenomenalism believe the entirety of functional relations in the net of billions of neurons and hundreds of billions of synapses is the physical basis for the mind. Moreover, some see the electronic computer as a model of the brain. In their view, since processes in the computer (or algorithm) imitate the brain's thought processes, we can expect a computer (or algorithm) with a huge number of functional units and a complex level of internal relations — to develop subjective self-awareness and human-like thinking.

At first sight, the question we should ask here is whether an algorithm does act in a similar way to the brain. However, even if it does, a more basic question arises: can we even say a human brain can think? Because if not, it doesn’t matter whether the algorithm’s neural net architecture is identical to the brain’s system of neurons: neither of the two possesses a mind and the ability to think.

If the mind is a side effect of the brain, and an algorithm imitates the brain, we expect the algorithm to develop human-like thinking. But can we even say a human brain can think? Because if not, an algorithm obviously can’t think.

Can OUR brain think?

Leibowitz recognizes that the public, and sometimes even the academic literature, use the phrase “the thinking brain”, and even talk about the computer as a “digital brain” or as a “thinking machine”. Nevertheless, he emphatically asserts:

Thinking is not done by the brain itself, but by the owner of the brain!

I find this argument similar, in a way, to one by David Hume, one of philosophy’s giants and the author of the monumental A Treatise of Human Nature. Hume argues that even the coldest and most calculated person acts solely out of her emotions. According to Hume, emotion is what makes the will, and will is what motivates human activity. That is, without the emotions (or mental activity) of the brain owner, no will develops, and no activity occurs. Getting back to Leibowitz, one might say a brain without its owner activating it is like a calculator with no one pressing the buttons.

According to David Hume, even the most calculated person acts solely out of her emotions. Emotions make the will, and will motivates activity. One might say a brain without its owner activating it is like a calculator with no one pressing the buttons.

Leibowitz further states that the secondariness of the computer (and, for our matter, of the algorithm) is apparent: the algorithm only performs a physical task, and it takes a human with consciousness and intelligence to give the physical task a logical meaning. ChatGPT uses electric pulses to generate words and logical sentences, but they only represent logical relations. The actual logical relations exist merely in the mind of the thinking person.

ChatGPT uses electric pulses to generate words and logical sentences, but they only represent logical relations. The actual logical relations exist merely in the mind of the thinking person.

One might say that the algorithm doesn’t think, just as an ensemble of instruments performing physical activities together does not play music. The air vibrations coming out of the instruments create a harmony only for a musical consciousness.

Leibowitz’s argument concludes:

The thinking person stands at the beginning and at the end of an algorithm’s system of processes, which we might call “the mental activities of the algorithm”.

The person stands at the beginning since the programming and training of an algorithm is not possible without the thinking activity of the person. At the same time, the person stands at the end of the algorithm since, without an intelligent being interpreting the activity, it is nothing but a physical activity. Thus, in accordance with Leibowitz’s argument, no mental activity can be attributed to an algorithm alone. It’s only we, the algorithm’s users, who do the thinking.

The thinking person stands both at the beginning and at the end of the so-called “mental operations of the algorithm”. Thus, no mental activity can be attributed to an algorithm alone. It’s only we, the algorithm’s users, who do the thinking.

Epiphenomenalism — experimental evidence

This is all fine, but what does actual science have to say about epiphenomenalism? Yoram Yovell enriched his grandfather’s argument about the mind-as-a-side-effect approach with some experimental evidence. Yovell described an experiment conducted by the neuropsychologist Benjamin Libet of the University of California, San Francisco. In this experiment, volunteers were instructed to raise a finger whenever they “felt like” doing so. During that time, they were also looking at a clock face with a single, rapidly spinning hand, and the electrical activity of their brains was recorded non-invasively using electrodes.

The first result of the experiment was astonishing: about a third of a second before a person became conscious of her will to raise her finger, her brain already “knew” she was going to have that will! Some researchers viewed that as evidence that there is no free will, since consciousness (or the mind) is just a product of unconscious processes in the brain. In other words, the mind is only a side effect of the brain, as epiphenomenalism suggests. And if epiphenomenalism is correct, maybe a consciousness CAN naturally grow as a side effect of the physical operation of complex algorithms such as ChatGPT.

A third of a second before a person became conscious of her will, her brain already “knew” she was going to have that will. Some viewed that as evidence that there is no free will. In other words, the mind is just a side effect of the brain, as epiphenomenalism suggests.

Against that statement stands our very strong feeling that we do have free will. Thus, for epiphenomenalism to be correct, our sense of free will needs to be a complete illusion. Leibowitz argued along similar lines about the free-will illusion: when we say free will is an illusion, we already assume there’s a mind, separate from matter, that experiences the illusion, which is a purely mental phenomenon. By doing so, we fundamentally contradict epiphenomenalism, since, according to Leibowitz and Yovell, it is a monistic-materialistic approach that assumes no separation between the mind and the brain.

Leibowitz’s philosophical objection to epiphenomenalism fits well with the second result of the experiment. Libet also found that there was an even smaller time frame, about a tenth of a second, in which the volunteers could still decide not to raise their fingers. This “veto call” showed no evidence of preceding brain activity.

Libet deduced from this finding that the veto call is decided, in practice, only as part of the consciousness. To Libet, this meant that there is free will: a free will to not do something. If this is, in fact, the case, then the mind is not just a side effect of the brain, as it can also affect the physical state of the brain. This, evidently, leads to the conclusion that epiphenomenalism does not hold and that, if ChatGPT has a mind, it is not just a side effect of its neural net activity.

There was a tenth of a second in which the volunteers could decide not to raise their finger, without any preceding brain activity. This might mean there IS a free will: a free will to not do something. Consequently, epiphenomenalism does not hold, and if ChatGPT has a mind, it is not just a side effect of its neural net activity.

Functionalism — mind as a software of the brain

Philosophically and empirically, it seems as if epiphenomenalism fails to solve the mind-brain problem. As a result, it also fails to support ChatGPT's ability to think. However, Leibowitz wrote about epiphenomenalism in the 1970s. Lately, the ever-growing use of computers has given rise to a new and interesting approach to the mind-brain problem: functionalism, or the mind as a software package of the brain.

Yovell states that many philosophers and neuroscientists are proponents of functionalism. They presume that the mind is an emergent property of the brain, and as such, mental phenomena start to appear when large groups of neurons, connected by many synapses, are activated in some way.

Proponents of functionalism suggest the mind is an emergent property of the brain, and as such, mental phenomena start to appear when large groups of neurons, connected by many synapses, are activated in some way.

But this sounds like epiphenomenalism, doesn’t it? Despite the similarity, the two are not the same:

Functionalism suggests the mind is not a “thing”, as argued by Descartes and Spinoza, but a process. For instance, if we want to know what a block of metal is, we need to know its material, weight, shape, etc. But if we want to know what a watch is, knowing all these properties is not enough. We have to know what it does, or what its role or function is.

In functionalism, the mind is the process that occurs within the thing, i.e., the processes that occur inside the brain. In other words, the mind is the brain’s functionality. More accurately, the material level is the brain, the hardware of the computer, and the mind is the functional level of that hardware, meaning a process built of a series of events, determined by the rules of the “software” operating the brain.

One reason functionalism is fairly popular is that it is a non-reductionist approach, i.e., mental activity is not reduced to physical activity. In this, it elegantly avoids the problems of identity theory: if the mind and brain are the same, each must translate completely into the other, and yet we clearly can’t tell how the mind translates into the brain, because that is the very definition of the mind-brain problem! However, if the mind is a process, the mind is just what we feel at a given time, and it doesn’t need to have a direct connection to a physical event in the brain.

Functionalism suggests the mind is not a “thing” but a process. If we want to know what a watch is, knowing its properties is not enough. We must know what it does, its function or role. The processes that occur inside the brain, the brain’s functionality: that’s the mind. As a process, the mind is just what we feel at a given time, and it doesn’t need to have a direct connection to a physical event in the brain.

Another reason functionalism gained popularity is that, according to Yovell and Leibowitz, it is monistic and materialistic. In a way, it might be the best combination of dualism and monism: on the one hand, it assumes, as in monism, that the world has only matter, a rather attractive assumption for anybody holding to the scientific method. On the other hand, as in dualism, it assumes reality has two levels, both of which are necessary to understand a person. In that, dualism better describes the relationship we feel our mind and body truly have.

Considering the above, functionalism allows for ChatGPT to be able to think: while ChatGPT’s material level is the algorithm’s trained weights and architecture, ChatGPT’s mind is the functional level of that hardware — its ability to chat with us humans.

Alas, the simple but harsh Leibowian argument still holds: a function only exists when a thinking person interprets it as a function. In Leibowitz’s view, defining a watch as “something that shows the time” assumes that someone with a mind and the ability to think is interpreting the time that the watch is showing. Now, we’ve just argued that the function of chatting is what makes up ChatGPT’s mind and ability to think. Taking into account the Leibowian argument, ChatGPT doesn’t actually chat with us: we, thinking humans, interpret its output as chatting, and so ChatGPT’s mind and ability to think exist merely in our thoughts. It’s all just hardware.

It seems as if functionalism allows for ChatGPT to be able to think: while ChatGPT’s material level is the algorithm’s trained weights, ChatGPT’s mind is its ability to chat with us humans. Alas, the Leibowian argument still holds: we, thinking humans, interpret its output as chatting, and so ChatGPT’s mind and ability to think exist merely in our thoughts. It’s all just hardware.

How can we tell if ChatGPT thinks?

But maybe we’ve made a mistake. Perhaps there’s another approach to the mind-brain problem that we didn’t consider or that has not been discovered yet, and ChatGPT does have a mind and an ability to think. Even so, the question almost immediately arises of how we can know that an algorithm has produced a mind. This question is better known as the problem of other minds.

The immediate answer is that we can never know for sure if the person or algorithm in front of us has a mind. This answer comes directly from the definition of consciousness, which exists solely in the private domain and is an unmediated fact for its owner, who experiences it “from within”. Any other answer is only a deduction, however intuitive and empathetic, that comes from looking “from the outside”.

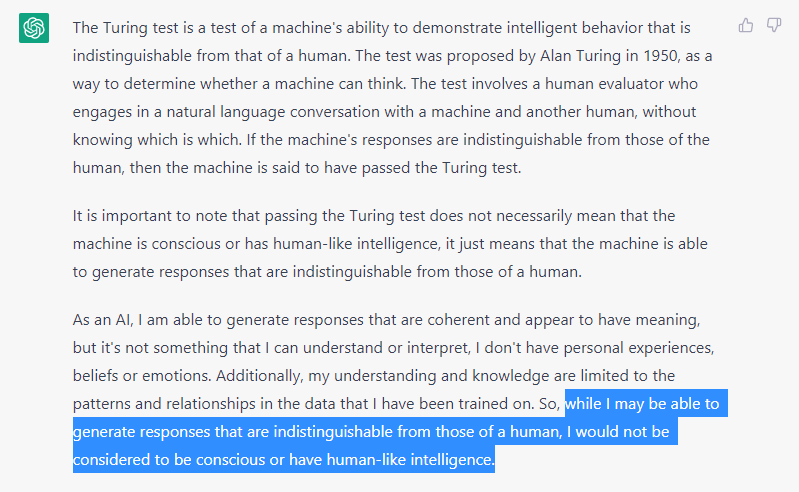

How, then, can we deduce the other side has a mind? We believe the other side has a mind if it behaves and reacts the way we behave and react. In other words, we know there’s “someone” on the other side if it passes the famous Turing test.

Even if ChatGPT has a mind and can think, how can we tell that it does? We can never know for sure, but we can deduce it if ChatGPT behaves and reacts the same way we do, meaning if it passes the Turing test.

ChatGPT is right this time: passing the Turing test is not that hard, and certainly does not mean the other side has a mind. It’s quite an easy task for us to impersonate someone of a different gender or age. That’s especially true when the only interaction between the two sides is mediated by a keyboard and a screen. However, some say in response that the mere ability to impersonate someone else, including this someone’s thoughts and way of expression, is a significant human capability, and evidence of human-like intelligence.

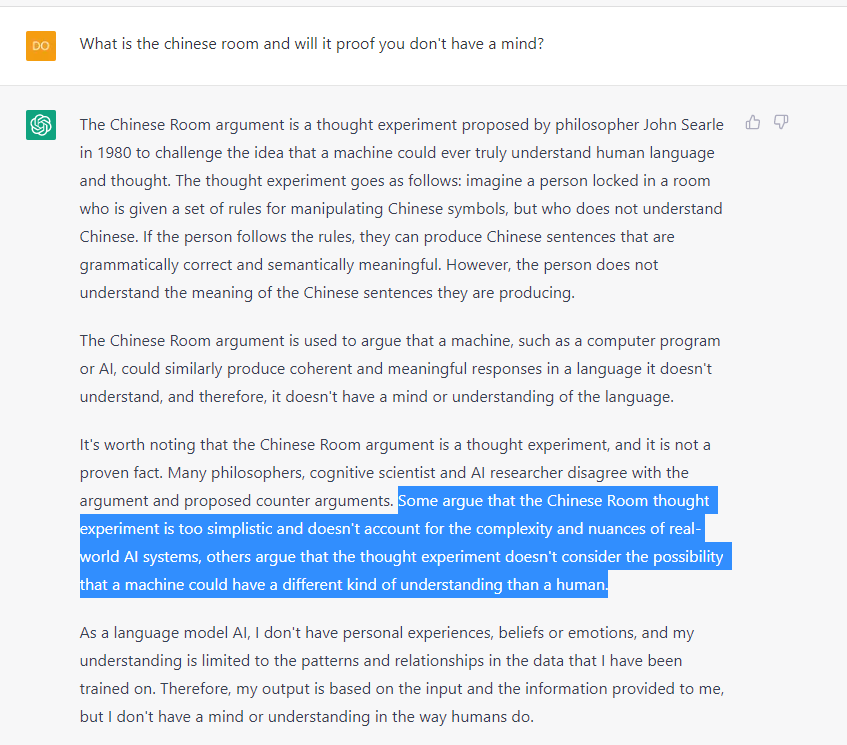

Yovell claims that another well-known thought experiment, called the Chinese room, exposes the fallacy in the Turing test.

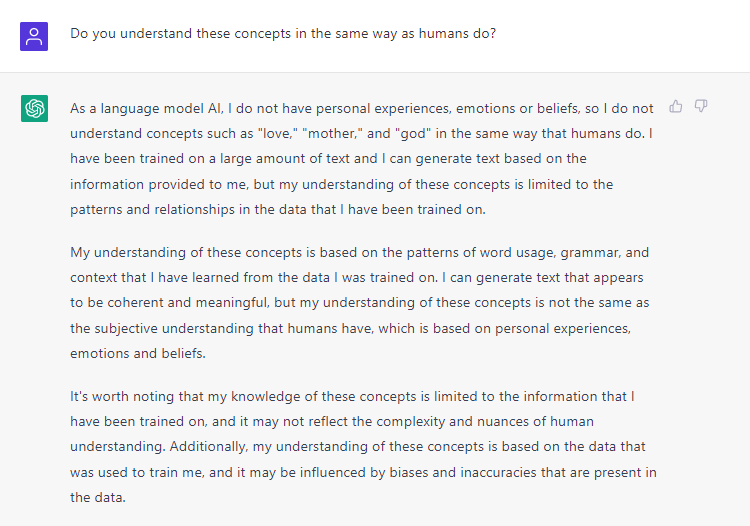

The Chinese room thought experiment suggests an algorithm can produce meaningful responses without truly understanding them. Consequently, Yovell, a clinical therapist, says that any therapist knows that understanding and giving meaning are both human qualities, i.e., evidence of a thinking mind. Without them, one cannot make sense of the human mind, let alone take care of it. Yovell then argues that humans understand and experience the meaning of words such as “love”, “mother”, or “god”, in a way that is hard to imagine an algorithm can imitate.

The Chinese room exposes the fallacy in the Turing test: an algorithm can produce meaningful responses without truly understanding them. Understanding is evidence of a thinking mind, and it’s hard to believe an algorithm can understand the meaning of “love”, “mother”, or “god”.

Nevertheless, Yovell wrote the essay at a time when ChatGPT was but a dream. Can ChatGPT truly understand these words? Is ChatGPT’s level of understanding of these words evidence of its mind and ability to think?
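The Chinese room idea can be pictured with a tiny Python sketch. It is only an illustration under stated assumptions: the rule book entries, the fallback reply, and the function name `room_operator` are all hypothetical, and a real language model is of course far more elaborate than a lookup table. The point is that the function returns fitting replies by matching symbols alone, with nothing in it that represents what the symbols mean.

```python
# Hypothetical rule book: incoming symbols mapped to outgoing symbols.
# The English glosses in the comments are for the reader only; the code never uses them.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I am fine, thanks."
    "你叫什么名字？": "我没有名字。",    # "What is your name?" -> "I have no name."
}

FALLBACK = "请再说一遍。"  # "Please say that again."


def room_operator(symbols: str) -> str:
    """Look the symbols up by shape alone and hand back the listed reply."""
    return RULE_BOOK.get(symbols, FALLBACK)


if __name__ == "__main__":
    for note in ["你好吗？", "你叫什么名字？", "爱"]:
        print(note, "->", room_operator(note))
```

To someone outside the room, the first two replies look like understanding. The last input (the word for “love”) gets the fallback reply only because no rule happens to match it.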

I’ll leave it for you to decide:

Feel free to share your feedback and contact me on LinkedIn.

Thank you for reading, and good luck! 🍀

Can ChatGPT think? was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.