“AutoML, NAS and Hyperparameter Tuning: Navigating the Landscape of Machine Learning Automation”

Last Updated on July 17, 2023 by Editorial Team

Author(s): Arjun Ghosh

Originally published on Towards AI.

“AutoML is one of the hottest topics in the field of artificial intelligence, with the potential to democratize machine learning and make it more accessible to non-experts.” — Andrew Ng, Co-founder of Google Brain and Coursera.

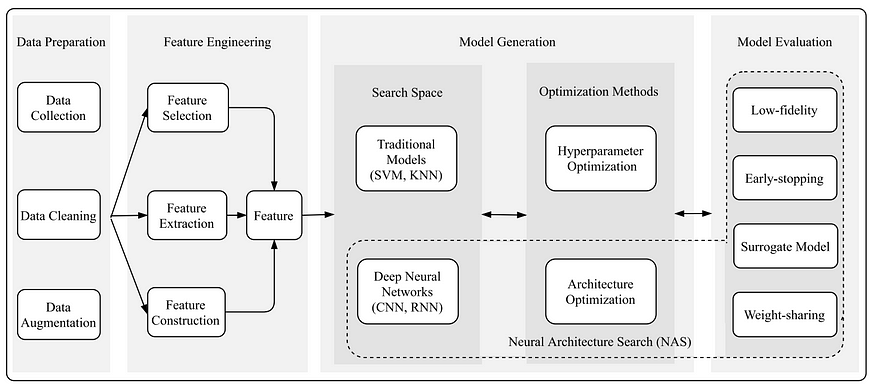

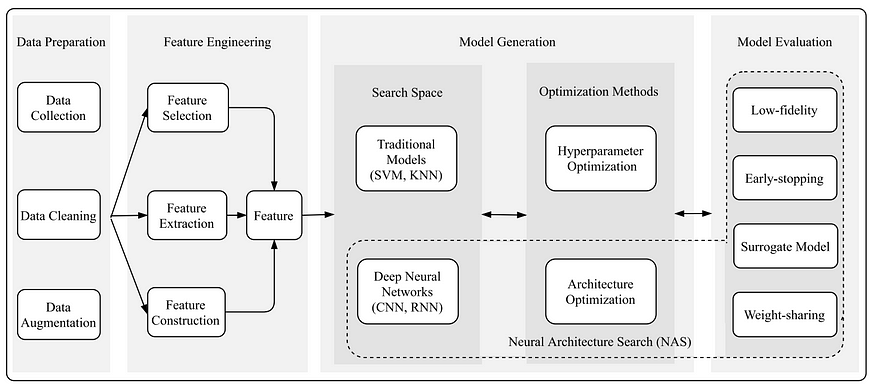

AutoML, neural architecture search (NAS), and hyperparameter tuning are three closely related approaches to automating machine learning. In this story, we explore and compare them with the help of several research papers.

AutoML

The term “Automated Machine Learning,” or “AutoML,” is often associated with work published around 2015 by researchers at the University of Freiburg in Germany. The researchers defined AutoML as “the use of machine learning to automate the entire process of selecting and training a machine learning model, including feature engineering, model selection, and hyperparameter optimization.”

Since then, AutoML has gained popularity due to its potential to significantly reduce the time and effort required for developing machine learning models. AutoML techniques have been applied to various domains, including computer vision, natural language processing, and healthcare.

AutoML is considered an important area of research because it can democratize machine learning by making it accessible to a wider audience, including people with limited machine learning expertise. This has significant implications for fields such as healthcare, where machine learning can lead to improved patient outcomes and reduced costs.

One of the main advantages of AutoML is its ability to automatically search a large space of models and hyperparameters to find the best-performing model for a given dataset. In a study by Feurer et al. (2019), AutoML outperformed human experts in a large-scale empirical study of hyperparameter optimization on 29 datasets.
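To make "searching over models and their hyperparameters" concrete, here is a minimal sketch of that joint search. It is sometimes called combined algorithm selection and hyperparameter optimization (CASH), and this version uses plain random search. Everything in it is an illustrative assumption rather than how any particular AutoML system works. That includes the toy two-blob dataset, the two hand-written model families (k-nearest neighbours and nearest centroid), and the names `SEARCH_SPACE`, `fit_knn`, `fit_centroid` and `random_cash_search`.

```python
import random

def make_data(n, seed):
    """Toy 2-D binary classification data: two overlapping Gaussian blobs."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        center = 1.0 if label else -1.0
        point = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        data.append((point, label))
    return data

def minkowski(a, b, p):
    """Minkowski distance: p=1 is Manhattan, p=2 is Euclidean."""
    return sum(abs(u - v) ** p for u, v in zip(a, b)) ** (1 / p)

def fit_knn(train, k, p):
    """k-nearest-neighbour classifier; k is kept odd so majority votes cannot tie."""
    def predict(x):
        nearest = sorted(train, key=lambda item: minkowski(item[0], x, p))[:k]
        return int(2 * sum(label for _, label in nearest) > k)
    return predict

def fit_centroid(train, p):
    """Nearest-centroid classifier: predict the class whose mean point is closest."""
    centroids = {}
    for label in (0, 1):
        points = [x for x, y in train if y == label]
        centroids[label] = tuple(sum(coord) / len(points) for coord in zip(*points))
    def predict(x):
        return min(centroids, key=lambda lab: minkowski(centroids[lab], x, p))
    return predict

# Hypothetical, conditional search space: each model family has its own hyperparameters.
SEARCH_SPACE = {
    "knn": {"k": [1, 3, 5, 9, 15], "p": [1, 2]},
    "nearest_centroid": {"p": [1, 2]},
}
FITTERS = {"knn": fit_knn, "nearest_centroid": fit_centroid}

def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)

def random_cash_search(train, valid, n_trials, seed=0):
    """Sample (model family, hyperparameters) pairs and keep the best on validation data."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        family = rng.choice(sorted(SEARCH_SPACE))
        params = {name: rng.choice(vals) for name, vals in SEARCH_SPACE[family].items()}
        score = accuracy(FITTERS[family](train, **params), valid)
        if best is None or score > best[0]:
            best = (score, family, params)
    return best

train, valid = make_data(200, seed=1), make_data(100, seed=2)
score, family, params = random_cash_search(train, valid, n_trials=20)
print(family, params, round(score, 3))
```

Because the search space is just data, adding a model family or replacing random search with a smarter optimizer only touches `SEARCH_SPACE`, `FITTERS`, or `random_cash_search`.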

However, AutoML also has some limitations. It can be computationally expensive, especially when searching through large and complex model spaces. AutoML is also limited by the set of models and hyperparameters that are pre-defined in the search space, which may not include the best-performing models for a given problem. In addition, AutoML may not be able to incorporate domain-specific knowledge or constraints into the search process.

Neural Architecture Search (NAS)

The term "Neural Architecture Search" (NAS) was first introduced in a research paper titled “Neural Architecture Search with Reinforcement Learning” by Barret Zoph and Quoc Le, published in 2017. The paper introduced a novel approach to automatically search for optimal neural network architectures using reinforcement learning.

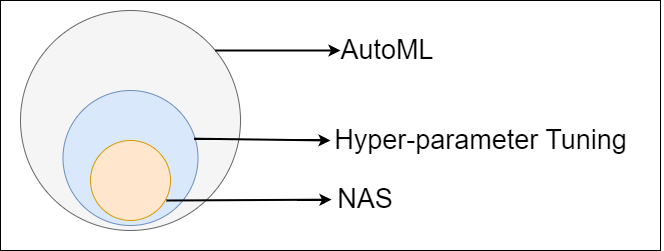

NAS is a technique for automating the design of neural network architectures, which can be considered a subset of AutoML. NAS aims to find an architecture that is tailored to a specific task and can achieve better performance than manually designed architectures. NAS has been successfully applied to various computer vision and natural language processing tasks and has achieved state-of-the-art performance in many cases.

One of the main advantages of NAS is its ability to discover novel and efficient architectures that may not have been considered by human experts. NAS can also incorporate domain-specific knowledge or constraints into the search process, which can improve the performance and efficiency of the discovered architectures. In a recent study by Zoph et al. (2018), NAS was used to discover a new architecture for image classification that outperformed previous state-of-the-art models on the CIFAR-10 and ImageNet datasets.
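As a rough illustration of searching over architectures under a constraint, the sketch below samples fully connected networks by depth and per-layer width. It rejects any candidate that exceeds a parameter budget before spending effort on evaluating it. Zoph and Le used a reinforcement-learning controller to propose architectures, and this sketch swaps that for plain random search. The search space, the budget, and the names `sample_architecture`, `count_params`, `constrained_random_nas` and `toy_evaluate` are all hypothetical. In particular, `toy_evaluate` is a placeholder for actually training each network and measuring validation accuracy.

```python
import random

# Hypothetical architecture search space for a fully connected network.
NUM_LAYERS = [1, 2, 3, 4]
UNITS = [16, 32, 64, 128]

def sample_architecture(rng):
    """Pick a depth, then a width for every hidden layer independently."""
    depth = rng.choice(NUM_LAYERS)
    return [rng.choice(UNITS) for _ in range(depth)]

def count_params(hidden, n_inputs, n_outputs):
    """Weights plus biases of a dense network with the given hidden-layer widths."""
    widths = [n_inputs] + hidden + [n_outputs]
    return sum(a * b + b for a, b in zip(widths, widths[1:]))

def constrained_random_nas(evaluate, n_inputs, n_outputs, max_params, n_trials, seed=0):
    """Random-search NAS that skips architectures over a parameter budget."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        hidden = sample_architecture(rng)
        if count_params(hidden, n_inputs, n_outputs) > max_params:
            continue  # the constraint is checked before paying for any training
        score = evaluate(hidden)  # in practice: train the network, return validation accuracy
        if best is None or score > best[0]:
            best = (score, hidden)
    return best

def toy_evaluate(hidden):
    """Hypothetical stand-in for training: simply rewards more hidden units."""
    return sum(hidden)

best_score, best_hidden = constrained_random_nas(
    toy_evaluate, n_inputs=10, n_outputs=2, max_params=5000, n_trials=50
)
print(best_hidden, count_params(best_hidden, 10, 2))
```

Because `toy_evaluate` always prefers bigger networks, the parameter budget is what decides the winner here. This is a simple picture of how a domain constraint can shape the architectures a search returns.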

However, NAS also has limitations. One of its main challenges is the search space itself, which can be very large and complex, especially for demanding tasks such as video recognition. NAS can also be computationally expensive, which limits its practical applicability. In addition, NAS can suffer from poor reproducibility, since different search methods or hyperparameters can lead to different architectures and results.

Hyperparameter Tuning (HPT)

The term “hyperparameter tuning” has been around for several decades, and tuning is a fundamental part of machine learning practice. Within automated machine learning, hyperparameter tuning aims to find the best hyperparameters for a given model architecture and dataset. It is often performed using grid search, random search, or Bayesian optimization. One of its main advantages is that it can improve a model's performance without changing the model's architecture. In a recent study by Yang et al. (2020), hyperparameter tuning was found to be the most effective technique for improving the performance of deep neural networks on various computer vision tasks.
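Grid search and random search differ mainly in how candidate configurations are generated. Here is a minimal side-by-side sketch with the same budget of 25 evaluations. The objective `toy_objective` is a hypothetical stand-in for "train the model and return validation accuracy", chosen so that it peaks at a learning rate of 1e-3 and a batch size of 64. The grid values and sampling ranges are likewise assumptions. Bayesian optimization is not shown.

```python
import itertools
import math
import random

def toy_objective(lr, batch_size):
    """Hypothetical validation score: maximal (0) at lr=1e-3, batch_size=64."""
    return -(math.log10(lr) + 3) ** 2 - 0.1 * (math.log2(batch_size) - 6) ** 2

def grid_search(objective, grid):
    """Evaluate every combination in the Cartesian product of the grid."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

def random_search(objective, n_trials, seed=0):
    """Sample each hyperparameter independently for a fixed number of trials."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-5, -1),       # log-uniform learning rate
            "batch_size": 2 ** rng.randint(4, 8),  # powers of two from 16 to 256
        }
        score = objective(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

GRID = {"lr": [1e-5, 1e-4, 1e-3, 1e-2, 1e-1], "batch_size": [16, 32, 64, 128, 256]}
print(grid_search(toy_objective, GRID))           # 5 x 5 = 25 configurations
print(random_search(toy_objective, n_trials=25))  # same budget, sampled configurations
```

The grid only ever tries the five listed learning rates. Random search tries 25 distinct learning rates, which helps when one hyperparameter matters much more than the others.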

However, hyperparameter tuning also has limitations. It can be computationally expensive, especially when searching a large space of hyperparameters. It also suffers from the curse of dimensionality: the number of candidate configurations grows exponentially with the number of hyperparameters being tuned, making the search space very large and difficult to explore. In addition, some tuning strategies may fail to capture complex interactions between hyperparameters, which limits their ability to find the best-performing combination.
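The exponential growth is easy to quantify for a full grid. With k candidate values for each of d hyperparameters, a grid search needs k^d training runs. The small sketch below (the helper name `grid_size` is our own) shows that five values each for six hyperparameters already means 5^6 = 15,625 runs.

```python
import math

def grid_size(values_per_hyperparameter):
    """Number of configurations a full grid search must train and evaluate."""
    return math.prod(values_per_hyperparameter)

# Five candidate values per hyperparameter, for 1 to 6 hyperparameters.
for d in range(1, 7):
    print(d, grid_size([5] * d))
```

Random search avoids enumerating this product by fixing the number of trials up front. The trade-off is that the same budget must then cover a much larger volume of the search space.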

How does NAS differ from AutoML or hyperparameter tuning?

AutoML is a rapidly growing field that aims to automate every aspect of the machine learning (ML) workflow, making machine learning more accessible to non-experts. Although AutoML covers a broad range of problems, from data extraction and preparation (ETL) through model training to deployment, hyperparameter optimization is a primary focus. This involves configuring the settings that govern an ML model's behaviour during training so that it produces a high-quality predictive model.

Hyperparameter tuning (HPT), sometimes called hyperparameter optimization, is closely related to neural architecture search (NAS), even though NAS is often treated as a newer, separate problem. NAS searches larger spaces and controls different aspects of the network architecture, but ultimately it too seeks a configuration that performs well on a target task. For this reason, we consider NAS a subset of the hyperparameter optimization problem.

Neural Architecture Search (NAS) is a subfield of AutoML that seeks to automate the laborious task of designing architectures. It is often conflated with hyperparameter tuning (HPT), another subfield of AutoML. HPT assumes a given architecture and optimizes its hyperparameters, such as the learning rate, activation function, and batch size. NAS, on the other hand, optimizes architecture-specific parameters such as the number of layers, the number of units per layer, and the connections between these units.
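One way to state the distinction precisely is as a bilevel optimization problem. The notation here is our own, following a common formulation: a is the architecture, λ the remaining hyperparameters, w the network weights, and L_train and L_val the training and validation losses. HPT searches over λ with a fixed. NAS adds a to the search, which is why it can be viewed as hyperparameter optimization over a larger space.

```latex
% Hyperparameter tuning: architecture a is fixed, search over hyperparameters \lambda
\lambda^{*} = \arg\min_{\lambda \in \Lambda} \mathcal{L}_{\mathrm{val}}\big(w^{*}(a, \lambda);\, a, \lambda\big),
\qquad
w^{*}(a, \lambda) = \arg\min_{w} \mathcal{L}_{\mathrm{train}}(w;\, a, \lambda)

% NAS as hyperparameter optimization: the architecture joins the searched configuration
(a^{*}, \lambda^{*}) = \arg\min_{(a, \lambda) \in \mathcal{A} \times \Lambda} \mathcal{L}_{\mathrm{val}}\big(w^{*}(a, \lambda);\, a, \lambda\big)
```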

While neural network architecture exploration and tuning are essential for high-quality deep learning applications, specialized NAS methods are not yet ready for widespread use. They introduce algorithmic and computational complexities without demonstrating significant improvements over high-quality hyperparameter optimization algorithms.
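The text does not name specific hyperparameter optimization algorithms. As one illustrative example of a widely used, simple method, here is a minimal sketch of successive halving, the building block of Hyperband. The method trains every configuration on a small budget and keeps the best 1/eta of them. It then multiplies the budget by eta and repeats until one configuration remains. The learning-curve function `toy_learning_curve` is a hypothetical stand-in for "train for this many epochs and return validation accuracy", and the 16 sampled learning rates are arbitrary.

```python
import math
import random

def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the top 1/eta of configurations at each rung, multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0]

def toy_learning_curve(config, epochs):
    """Hypothetical accuracy curve whose ceiling is highest near lr = 1e-3."""
    ceiling = 0.9 - 0.1 * (math.log10(config["lr"]) + 3) ** 2
    return ceiling * (1 - math.exp(-epochs / 4))

rng = random.Random(0)
configs = [{"lr": 10 ** rng.uniform(-5, -1)} for _ in range(16)]
winner = successive_halving(configs, toy_learning_curve)
print(winner)
```

With 16 configurations and eta=2, the four rungs evaluate 16 configurations at 1 epoch, then 8 at 2, then 4 at 4, and finally 2 at 8. Counting each evaluation as a fresh run, that is 64 epochs in total, versus 128 to train all 16 for 8 epochs. In this toy curve the ranking never changes with the budget. Real learning curves can cross, and that is the risk of discarding configurations early.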

My Previous Stories:

[Neural Architecture Search (NAS) and Reinforcement Learning (RL)]

[The Fundamentals of Neural Architecture Search (NAS)]

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.