At the crossroads of Neuroscience, Data Science and Machine Learning: edition #1

Last Updated on September 10, 2022 by Editorial Team

Author(s): Kevin Berlemont, PhD

Originally published on Towards AI.

Welcome to this bi-weekly/monthly newsletter, where I review and summarize a few recently published research papers. All the papers covered across the editions will be linked, in one way or another, to Machine Learning, Data Science, and Neuroscience.

This first edition will focus on three papers covering the following themes:

- Are words encoded within brain activity? A new deep learning architecture to decode speech from M/EEG recordings [1].

- How does the brain recall information? An RNN architecture mimics some properties of neural recordings made during an information-recall task [2].

- How do correlations impact the information contained in a neural network? A study of noise and correlations in brain recordings using support vector machine decoders [4].

Decoding speech from non-invasive brain recordings — A. Defossez et al. [1]

Are individual words encoded within the brain activity? The authors use M/EEG recordings (non-invasive) coupled with a deep learning architecture to perform a zero-shot classification of neural data. The model is composed of two blocks:

- A pretrained self-supervised model that extracts contextual representation of speech signals

- A convolutional network that gives representations of the brain activity

The full architecture is trained with a contrastive loss. This type of learning, called CLIP, tries to match latent representations in two different modalities, in this case a speech representation and a brain activity representation. For every positive sample, N - 1 negative samples are drawn so that the network learns to assign low probabilities to them during training. As a result, instead of only increasing the probability of the correct sample, the network also learns to decrease the probability of the wrong ones.
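To make the contrastive idea concrete, here is a minimal NumPy sketch of a CLIP-style loss over a batch of N paired latents. It is an illustration under simplifying assumptions (plain dot-product similarity, a single matching direction from brain to speech, no temperature), not the exact loss used in [1]; the function name and the toy data are hypothetical.

```python
import numpy as np

def clip_contrastive_loss(brain_latents, speech_latents):
    """Cross-entropy over similarity scores for a batch of N paired samples.

    brain_latents, speech_latents: arrays of shape (N, D); row i of each
    array comes from the same segment (the positive pair).
    """
    # Similarity of every brain latent with every speech latent: shape (N, N).
    logits = brain_latents @ speech_latents.T
    # Numerically stable log-softmax over the N candidates of each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # For row i the correct candidate is column i; the other N - 1 are negatives.
    return -np.mean(np.diag(log_probs))

# Toy data (assumption): "aligned" brain latents are noisy copies of the speech latents.
rng = np.random.default_rng(0)
speech = rng.normal(size=(8, 16))
aligned_brain = speech + 0.1 * rng.normal(size=(8, 16))
random_brain = rng.normal(size=(8, 16))

loss_aligned = clip_contrastive_loss(aligned_brain, speech)
loss_random = clip_contrastive_loss(random_brain, speech)
print(loss_aligned, loss_random)
```

The loss is low only when each brain latent is more similar to its own speech latent than to the other candidates in the batch, which is why the aligned toy latents score better than the random ones.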

It is worth noting that the authors tried to use a regression cost function but mention that [1]:

We reasoned that regression might be an ineffective loss as it departs from … decoding speech from brain activity

I would like to read more research papers that, like this one, mention the training steps that went wrong and how the authors overcame them!

After training, the network achieves an impressive top-10 accuracy of up to 72.5% (and 44% top-1 accuracy) on 3-second MEG signals when identifying the speech segment that most probably corresponds to the brain activity. This is an exciting development in the field of AI in neuroscience, as it opens a path toward real-time decoding of language using non-invasive brain recordings.
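Accuracy figures of this kind, such as the top-1 number quoted above, are retrieval metrics: a prediction counts as correct when the true candidate is among the k highest-scoring ones. The sketch below shows how such a top-k accuracy can be computed; the scores and labels are made-up toy values, not data from [1].

```python
import numpy as np

def top_k_accuracy(scores, true_index, k):
    """Fraction of rows whose true candidate is among the k highest scores.

    scores: (n_samples, n_candidates) similarity scores.
    true_index: (n_samples,) index of the correct candidate for each row.
    """
    # Indices of the k largest scores per row (order inside the top k is irrelevant).
    top_k = np.argpartition(-scores, k - 1, axis=1)[:, :k]
    hits = (top_k == true_index[:, None]).any(axis=1)
    return hits.mean()

toy_scores = np.array([
    [0.9, 0.1, 0.3, 0.2],  # correct candidate (0) is ranked first
    [0.2, 0.4, 0.9, 0.8],  # correct candidate (1) is ranked third
    [0.5, 0.1, 0.6, 0.2],  # correct candidate (0) is ranked second
])
toy_truth = np.array([0, 1, 0])

top1 = top_k_accuracy(toy_scores, toy_truth, k=1)
top2 = top_k_accuracy(toy_scores, toy_truth, k=2)
print(top1, top2)
```

Top-k accuracy can only grow with k, which is why a top-1 figure is always the stricter of the two.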

A recurrent neural network model of prefrontal brain activity during a working memory task — E. Piwek et al. [2]

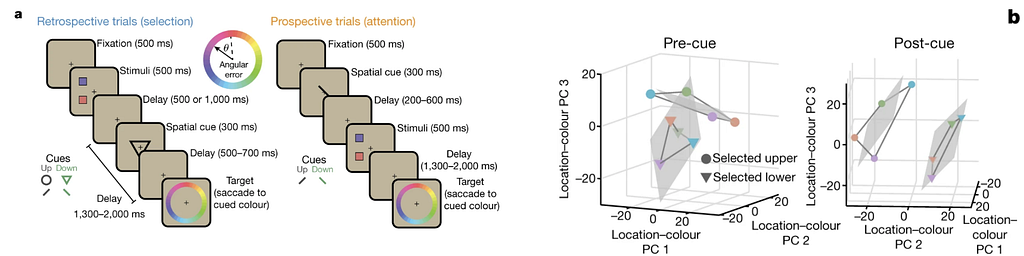

How is brain activity organized during the recall of information? Recent recordings from the lateral prefrontal cortex of macaques [3] have shown that the stimuli are represented in perpendicular neural subspaces during the delay. However, after the presentation of the cue indicating which stimulus should be considered, this representation shifts toward a common subspace in which the stimuli are represented in parallel planes.

In this paper, the authors investigate whether a recurrent neural network (RNN) can exhibit this shift of representation during the recall task. The network is trained with stochastic gradient descent on a cost function that penalizes both the difference between neural and network activity and errors on the task. The task term is necessary to make sure that the network is not just reproducing the dynamics but is actually solving the task too.

After training, the RNN shows the orthogonal (independent) geometry before the presentation of the cue (panel b, figure above). This tends to minimize interference between the different items kept in memory. However, during the post-cue interval, the representations in the RNN shift toward parallel subspaces, in a similar way to the experimental results. This is useful because the same mechanism can then be used to read out the information.
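One common way to quantify whether two representations are orthogonal or parallel is to fit a plane to each set of population activity patterns and compute the principal angles between the two planes. The sketch below does this with NumPy on synthetic data; it is an illustration of the geometry, not the analysis pipeline of [2] or [3], and the helper names and the six-unit toy population are assumptions.

```python
import numpy as np

def plane_basis(points):
    """Orthonormal basis (n_units, 2) of the best-fit plane through the points."""
    centered = points - points.mean(axis=0)
    # Rows of vt are principal axes; the first two span the best-fit plane.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:2].T

def principal_angles_degrees(points_a, points_b):
    """Principal angles between the two best-fit planes (0 = parallel, 90 = orthogonal)."""
    qa, qb = plane_basis(points_a), plane_basis(points_b)
    # Singular values of qa.T @ qb are the cosines of the principal angles.
    cosines = np.linalg.svd(qa.T @ qb, compute_uv=False)
    return np.degrees(np.arccos(np.clip(cosines, -1.0, 1.0)))

# Toy population of 6 units; 4 stimulus values arranged on a circle.
angles = np.linspace(0, 2 * np.pi, 4, endpoint=False)
circle = np.stack([np.cos(angles), np.sin(angles)], axis=1)

def embed(axis_x, axis_y, offset_axis=None, n_units=6):
    """Place the circle in the plane spanned by two units, optionally shifted."""
    pts = np.zeros((4, n_units))
    pts[:, axis_x] = circle[:, 0]
    pts[:, axis_y] = circle[:, 1]
    if offset_axis is not None:
        pts[:, offset_axis] = 1.0  # constant shift: same orientation, different plane
    return pts

item1 = embed(0, 1)
item2_pre = embed(2, 3)                  # pre-cue: a separate pair of units
item2_post = embed(0, 1, offset_axis=4)  # post-cue: same orientation, shifted

pre_cue = principal_angles_degrees(item1, item2_pre)
post_cue = principal_angles_degrees(item1, item2_post)
print(pre_cue, post_cue)
```

In this toy example both principal angles are 90 degrees before the cue and 0 degrees after it, which mirrors the orthogonal-to-parallel shift described above.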

This research sheds light on how different representational properties could be learned by the brain, as the RNN develops a geometry similar to that of the neural activity. Looking for this transition in geometry in neural recordings made during training would help clarify whether biological learning follows principles similar to those of RNN training.

Noise correlations in neural ensemble activity limit the accuracy of hippocampal spatial representations — O. Hazon et al. [4]

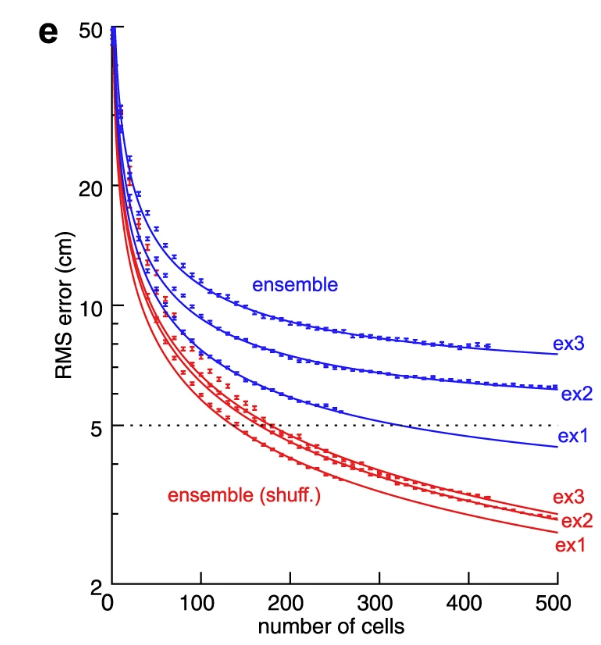

Hippocampal neurons are known to encode the position of an animal or subject in an environment. However, an inherent feature of biological neural networks is the presence of noise and variability in the activity. This leads to an immediate question about the network's encoding capacity: do noise correlations between neurons impact the decoding of the position?

Indeed, if the noise is not correlated across neurons, averaging the activity of all the neurons averages the noise out, leading to accurate decoding of the position. In contrast, when the noise is correlated, part of it survives the averaging, which leads to inaccurate decoding. Noise correlations between units must therefore be characterized to assess how efficiently a neural network can convey information.
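The averaging argument can be checked on a deliberately simplified case: n neurons with the same noise variance and the same pairwise correlation c. The variance of their average is then var / n + (n - 1) / n * c * var, which goes to zero as n grows only if c = 0, and otherwise saturates at c * var. The sketch below evaluates this formula and verifies it with a small simulation; the uniform-correlation model and all parameter values are assumptions for illustration, not the analysis of [4].

```python
import numpy as np

def variance_of_population_mean(n_neurons, noise_var, correlation):
    """Variance of the average of n equally noisy, equally correlated neurons.

    Var(mean) = noise_var / n + (n - 1) / n * correlation * noise_var
    """
    return noise_var / n_neurons + (n_neurons - 1) / n_neurons * correlation * noise_var

sizes = [1, 10, 100, 1000]
independent = [variance_of_population_mean(n, 1.0, 0.0) for n in sizes]
correlated = [variance_of_population_mean(n, 1.0, 0.1) for n in sizes]
print(independent)  # keeps shrinking as 1 / n
print(correlated)   # saturates near correlation * noise_var = 0.1

# Simulation check: unit-variance noise with pairwise correlation c,
# built from one shared source plus a private source per neuron.
rng = np.random.default_rng(1)
n_trials, n, c = 20000, 100, 0.1
shared = rng.normal(size=(n_trials, 1))
private = rng.normal(size=(n_trials, n))
noise = np.sqrt(c) * shared + np.sqrt(1 - c) * private
empirical = noise.mean(axis=1).var()
print(empirical, variance_of_population_mean(n, 1.0, c))
```

Adding neurons keeps helping in the independent case, while in the correlated case the benefit flattens out once the shared noise dominates.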

The authors analyzed the accuracy of the spatial representation of up to 500 simultaneously recorded neurons in mice. They trained support vector machines (SVMs) to estimate the position from the neural activity. To measure the effect of noise correlations on position decoding, they compared the decoding accuracy of SVMs on the original neural activity (with noise correlations) and on the activity shuffled across trials (without noise correlations).
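The shuffling control can be sketched as follows: for each neuron separately, trials are permuted among trials that share the same position label, which keeps each neuron's tuning but breaks the trial-by-trial co-fluctuations between neurons. This is a minimal NumPy illustration on synthetic data; shuffling within position bins, the function names, and the toy activity are assumptions, and the SVM decoder itself is left out.

```python
import numpy as np

def shuffle_trials_within_condition(activity, labels, rng):
    """Independently permute trials for each neuron within each condition.

    activity: (n_trials, n_neurons); labels: (n_trials,) position bin per trial.
    """
    shuffled = activity.copy()
    for label in np.unique(labels):
        rows = np.flatnonzero(labels == label)
        for neuron in range(activity.shape[1]):
            # A fresh permutation per neuron decouples it from the others.
            shuffled[rows, neuron] = activity[rng.permutation(rows), neuron]
    return shuffled

def mean_noise_correlation(activity, labels):
    """Average pairwise correlation of the residuals around each condition mean."""
    residuals = activity.copy()
    for label in np.unique(labels):
        rows = labels == label
        residuals[rows] -= activity[rows].mean(axis=0)
    corr = np.corrcoef(residuals, rowvar=False)
    off_diagonal = corr[~np.eye(corr.shape[0], dtype=bool)]
    return off_diagonal.mean()

# Synthetic data (assumption): 2 positions, 5 neurons, noise with a shared component.
rng = np.random.default_rng(2)
labels = np.repeat([0, 1], 200)
tuning = np.array([[1.0, 2.0, 0.5, 1.5, 1.0],
                   [2.0, 1.0, 1.5, 0.5, 1.0]])
shared = rng.normal(size=(400, 1))
activity = tuning[labels] + 0.7 * shared + 0.7 * rng.normal(size=(400, 5))

shuffled = shuffle_trials_within_condition(activity, labels, rng)
corr_before = mean_noise_correlation(activity, labels)
corr_after = mean_noise_correlation(shuffled, labels)
print(corr_before, corr_after)
```

A decoder trained on `shuffled` sees the same single-neuron statistics as one trained on `activity`, so any difference in accuracy between the two can be attributed to the noise correlations.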

They show that, with noise correlations, the decoding error plateaus at an asymptotic value (around 5 cm, figure above) as more neurons are included, whereas no such plateau appears without correlations. Thus hippocampal neurons exhibit information-limiting noise correlations, a feature that must be taken into account when quantifying the increase in information during the learning of a task.

References

[1] Défossez, Alexandre, et al. “Decoding speech from non-invasive brain recordings.” arXiv preprint arXiv:2208.12266 (2022).

[2] Piwek, Emilia P., Mark G. Stokes, and Christopher P. Summerfield. “A recurrent neural network model of prefrontal brain activity during a working memory task.” bioRxiv (2022).

[3] Panichello, Matthew F., and Timothy J. Buschman. “Shared mechanisms underlie the control of working memory and attention.” Nature 592.7855 (2021): 601–605.

[4] Hazon, Omer, et al. “Noise correlations in neural ensemble activity limit the accuracy of hippocampal spatial representations.” Nature Communications 13.1 (2022): 1–13.

If you would like me to review a specific research paper, don’t hesitate to send it to me at kevin.berlemont@gmail.com so I can see if it’s suitable for this newsletter.
