AI & Ethics — Where Do We Go From Here?

Last Updated on July 25, 2023 by Editorial Team

Author(s): Ryan Lynch

Originally published on Towards AI.

The topic of ethics comes up a lot when we talk about Artificial Intelligence. “How do we teach an AI to make ethical decisions?”, “Who decides what’s ethical for an AI to do?” and a big one: “Who is responsible if an AI does something considered unethical?”

Surely we can’t hold the AI accountable? It’s only a machine. Is it the programmer? They were only creating something to the specification their manager gave them. So, the manager then? But they were just creating the product ordered by the client. Is it the client? But they didn’t fully understand how the AI would make decisions.

So… no one? That doesn’t seem quite right. Does that mean that we just have to trust that AI won’t be used unethically?

And on that point, what counts as unethical? A lot of companies that work with artificial intelligence have their own “ethics guidelines” to assure us that they’re doing the right thing and so far we all seem to be fine with it. But really, we’re just taking their word for it.

But… Aren’t There Laws For That?

No. There aren’t.

As it stands, there are no laws in place that specifically govern artificial intelligence or other similar technologies. You’re probably thinking, “Well, why not? They should really get working on that.” But hold your horses, they’re trying!

Laws take months or even years to create whereas technology advances nearly daily. So you can see why lawmakers are struggling to keep up. How do you make a law to govern something that hasn’t even been thought up yet?

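There are some regulations, such as GDPR, that protect the data rights of people inside the EU. Article 22 of GDPR covers automated decision-making:

“1. The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her.

2. Paragraph 1 shall not apply if the decision:

(a) is necessary for entering into, or performance of, a contract between the data subject and a data controller;

(b) is authorised by Union or Member State law to which the controller is subject and which also lays down suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests; or

(c) is based on the data subject’s explicit consent.”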

OK, so I know how boring that big chunk of legalese is, but it’s quite important. What the first paragraph of Article 22 says is that no person can be subjected to a decision with legal or similarly significant effects on them when it is made by a computer working on its own (e.g. by artificial intelligence).

See that last part though?

Article 22, Paragraph 2, Part c.

It says that if a person consents to it, the protection I just talked about doesn’t apply. Sounds reasonable, right? But think about how often you give away that consent. Every online service now has its own long and complicated terms of service, and most of us have never read a single one. Using an app or website on your phone or computer is often treated as “explicit consent” to that app or website’s terms. Either you sign your rights away, or you just don’t use the service at all.

It’s why we really should have been reading the Terms of Service every time. Once you click agree, you tell the company you’re fine with them taking away any and all rights given to you in Paragraph 1 of Article 22.
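If it helps to see that rule as logic rather than legalese, here is a minimal sketch of how Paragraph 1 and the Paragraph 2 exceptions interact. It is a deliberate simplification for illustration, not a legal test: the class and field names are hypothetical, and it leaves out everything else in the Article.

```python
from dataclasses import dataclass


@dataclass
class AutomatedDecision:
    """A decision made about a person with no human involved (hypothetical model)."""
    has_significant_effect: bool          # legal or similarly significant effect on the person
    necessary_for_contract: bool = False  # Paragraph 2(a)
    authorised_by_law: bool = False       # Paragraph 2(b)
    explicit_consent: bool = False        # Paragraph 2(c)


def protection_applies(decision: AutomatedDecision) -> bool:
    """Return True if the Paragraph 1 protection still covers this decision."""
    if not decision.has_significant_effect:
        # Paragraph 1 only covers decisions with legal or similarly significant effects.
        return False
    # Any one of the Paragraph 2 exceptions switches the protection off.
    exception = (
        decision.necessary_for_contract
        or decision.authorised_by_law
        or decision.explicit_consent
    )
    return not exception


before_agree = AutomatedDecision(has_significant_effect=True)
after_agree = AutomatedDecision(has_significant_effect=True, explicit_consent=True)

print(protection_applies(before_agree))  # True
print(protection_applies(after_agree))   # False
```

The only thing that changes between the two cases is a single flag, which is the whole point: one click on “agree” is enough to flip the outcome.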

For an example of this, you only have to look at the huge trend that hit in the last couple of weeks: FaceApp. Have a look at this small bit of fine print in the terms and conditions you agree to by using the app.

“You grant FaceApp a perpetual, irrevocable, nonexclusive, royalty-free, worldwide, fully-paid, transferable sub-licensable license to use, reproduce, modify, adapt, publish, translate, create derivative works from, distribute, publicly perform and display your User Content and any name, username or likeness provided in connection with your User Content in all media formats and channels now known or later developed, without compensation to you.”

Basically, for the sake of seeing what you might look like when you get older, you gave this random Russian company the right to your own face… forever.

This seems like a really bad situation to be in, and, to a lot of people, it is. But to a lot more, it’s just the norm.

Another Take On Artificial Intelligence

In most western countries, we value individual privacy and transparency above pretty much everything else when it comes to companies and AI. And this is reflected in the ethics guidelines that companies write for themselves.

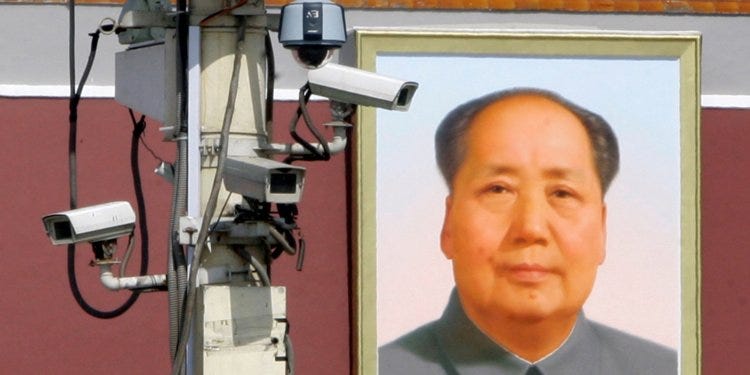

In China, it’s a different story. They value the collective good as highly as the individual good. For the most part, the belief is that what’s best for the many is what’s best.

Because of this approach, you see advancements in the way China uses technology in people’s day-to-day lives. They not only have facial recognition built into a lot of their street surveillance systems but now in some cities, they also have gait analysis up and running despite it still being a relatively new technology (at least a relatively new working technology).

A lot of shops in China now let you pay with your face instead of a card, and even the subway is trying it out. Convenient, right? I can imagine it’s a lot harder to forget your face in the morning than it is to forget your wallet.

So… Which Is Better?

I’ll answer that question with a question (and then I’ll give a vague, open-ended answer to that question). Better for what?

The “Western Approach” is arguably better for the individual in terms of privacy and control over one’s own data, whereas the approach that China and multiple other Eastern countries are taking is better purely for the development and advancement of the technology itself.

This leads to a lack of privacy, though. With this level of surveillance, the government in China can know where each of its citizens is, what they are doing and who they’re with. To many, it would seem that technology used like this is incompatible with an open and free democracy.

A trade-off has to be made: individual privacy versus the convenience that these technologies can bring. For the most part, we in the West value individual privacy. Eastern countries make a different choice in that same trade-off.

Personally, I value my privacy as an individual. Of course, that’s because I’ve grown up with western ideals. I can’t really say it’s better, especially when I can see the appeal of being able to implement artificial intelligence into everyday life to make things easier, for the government, companies and the people using the technology.

What Do We Do Then?

That’s a good question, dear reader, thank you for asking.

The problem is, I don’t know. We, as one global community, need to decide (and fast) what rules and laws need to be in place to make sure someone is accountable, and to draw the line that marks what goes too far. Are we going to build laws from the Western or Eastern views on AI ethics?

As I said, I personally value my privacy. But I believe that, to move forward, we need to accept that we’ll eventually have to merge our ideals, work together and find a better way to govern AI. The two fundamentally different approaches to ethics cannot exist side by side as technology keeps advancing and the world becomes a smaller and smaller place.

Note: Content contains the views of the contributing authors and not Towards AI.