Pause for Performance: The Guide to Using Early Stopping in ML and DL Model Training

Last Updated on January 25, 2024 by Editorial Team

Author(s): Shivamshinde

Originally published on Towards AI.

Table of Content

- Introduction

– What are Bias and Variance?

– What is Overfitting, Underfitting, and Right Fit in Machine Learning?

– What is Regularization? - What is Early Stopping?

- Pros and Cons of Early Stopping

- Using Early Stopping in Machine Learning Models

– Using Custom Code

– Using Scikit-Learn Library in GradientBoostingRegressor

– Using Scikit-Learn Library in SGDRegressor - Using Early Stopping in Deep Learning Models

- Outro

- Codes and References

Introduction

Before diving directly into early stopping, let’s take a detour and revise some basic terminologies required to understand early stopping.

What are Bias and Variance?

Let’s understand two different kinds of errors that are necessary to understand underfitting and overfitting.

- Bias error: A bias error is an error that we find using the training data and a trained model. In other words, here we are finding the error using the same data that is used for training the model. An error can be any kind of error such as mean squared error, mean absolute error, etc.

- Variance error: A variance error is an error that we find using the test data and a trained model. Again here, the error can be any type of error. Even though we can use any type of error to find the variance, we use the same error that we used for bias finding because that way we can compare the bias and variance values.

Note that the ideal condition of our trained model is having low bias and low variance.

What is Overfitting, Underfitting, and Right Fit in Machine Learning?

The machine learning model is said to be overfitted when it performs very well on the training data but poorly on the test data (low bias and high variance).

On the other hand, when a machine learning model performs poorly on both training and testing data (high bias and high variance), it is said to be underfitted to the data.

The right fit machine learning model gives good performance on both training and test data (low bias and low variance).

The following two diagrams might help understand the concepts of underfitting, overfitting, and right fit.

Regression Example:

Classification Example:

I have written a whole article on the concepts of overfitting, underfitting, and the right-fit models in machine learning. You can go through that article if you want to learn more about these concepts. You can find the link to said article below.

Striking the Right Balance: Understanding Underfitting and Overfitting in Machine Learning Models

This article will explain the basic concept of overfitting and underfitting from the machine learning and deep learning…

pub.towardsai.net

What is Regularization?

The gas stove that we use for everyday cooking in the kitchen has a regulator attached to it. Such a regulator is used to control the flame produced by the gas stove.

Similarly, Regularization, as the name suggests, is used to regularize or control. In the context of machine learning, regularization techniques are used to control the power or learning ability of an algorithm. This is done to handle underfitting and overfitting situations and lead our model towards the right fit.

In the context of machine learning,

When we say ‘increase the regularization’, we mean ‘decrease the learning ability of the algorithm’ or ‘use simpler algorithm’

When we say ‘decrease the regularization’, we mean ‘increase the learning ability of algorithm’ or ‘use more complex algorithm’

For example, To increase the regularization in the random forest classification algorithm, we can decrease the value of the maximum depth of trees.

What is Early Stopping?

Early stopping is one of the most famous regularization techniques. It is used to control the overfitting of the algorithm to the data.

In early stopping, we stop the training of the machine learning model at the point where the loss or error in the validation data is at its minimum value.

Let’s understand this using the loss vs number of iterations diagram.

The above diagram shows the process of training an algorithm on training data and validating it on the validation data. The X-axis indicates the number of iterations of the algorithm training. The Y-axis indicates the loss or error that the machine learning model gives on the training and validation data set.

In the graph, Initially, both the training set error and the validation set error (or development set error) keep decreasing.

But at one point, the validation set error (or development set error) hits its smallest value, and then it starts increasing. After this point, the model will start overfitting to the training data. So, to avoid overfitting, we stop the training at the smallest value of the validation set error (or development set error).

Pros and Cons of Early Stopping

Pros:

- Early Stopping helps to avoid overfitting and thereby increases the accuracy of trained models on the test or new data.

- Since we are stopping the training early, training time decreases when we use early stopping.

- It is quite easy to implement using the code

Cons:

- Since the working of early stopping depends on the validation data set, if we don’t choose the validation set properly, early stopping won’t give the desired results

- If we stop too early, then we might run into the situation of underfitting. This could happen when our model training is stuck at some plateau. To avoid this issue, we could use learning rate schedulers to change the learning rate while training is going on.

Using Early Stopping in Machine Learning Models

We can easily implement the early stopping using the Scikit-Learn library in the algorithms such as Stochastic Gradient Descent or Gradient Boosting. Also, we can use our custom code to implement the early stopping.

Let’s use Kaggle’s advertising dataset for the demonstration.

Let’s complete the data preprocessing first.

## Importing required libraries

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.pipeline import Pipeline

from sklearn.base import clone

from sklearn.preprocessing import PolynomialFeatures, StandardScaler

from sklearn.linear_model import SGDRegressor

from sklearn.metrics import mean_squared_error, r2_score

## Reading the data

df = pd.read_csv('/kaggle/input/advertising-dataset/Advertising.csv')

## Removing ID column

df = df.iloc[:,1:]

## Splitting the data

X, y = df.iloc[:,:-1] , df.iloc[:,-1]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2,random_state=234)

## Preprocessing

poly_scalar = Pipeline([

('poly_feat',PolynomialFeatures(degree=3,include_bias=False)),

('std_scalar',StandardScaler())

])

X_train_transformed = poly_scalar.fit_transform(X_train)

X_test_transformed = poly_scalar.transform(X_test)

Early Stopping using Custom Code

## Creating a model

reg = SGDRegressor(tol=1e-4,warm_start=True,penalty=None,learning_rate='constant',eta0=0.0005)

## Implementing early stopping

minimum_value_error = float('inf')

best_epoch = None

best_model = None

for epoch in range(1000):

reg.fit(X_train_transformed,y_train)

y_pred = reg.predict(X_test_transformed)

val_error = mean_squared_error(y_test,y_pred)

if val_error < minimum_value_error:

minimum_value_error = val_error

best_epoch = epoch

best_model = clone(reg)

## Making predictions using the best model

for epoch in range(best_epoch):

best_model.fit(X_train_transformed,y_train)

y_pred = best_model.predict(X_test_transformed)

## creating a function to find the r2 adjusted score

def r2_adj(r):

n = df.shape[0]

m = df.shape[1]

result = 1 - ((1-r**2)*((n-1)/(n-m-1)))

return result

## Printing the results

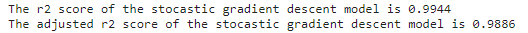

print(f"The r2 score of the stocastic gradient descent model is {np.round(r2_score(y_test,y_pred),5)}")

print(f"The adjusted r2 score of the stocastic gradient descent model is {np.round(r2_adj(r2_score(y_test,y_pred)),5)}")

Using Early Stopping in Gradient Boosting Regressor:

We need to provide the values for the following three parameters of the GradientBoostingClassifier class to implement the early stopping.

validation_fraction: This parameter represents the fraction of training data that needs to be set aside as a validation data set. The value of this parameter is a fraction between 0 and 1.

n_iter_no_change: This parameter is used to decide if early stopping will be used to terminate training when the validation score is not improving. By default, it is set to None to disable early stopping. If set to a number, it will set aside the validation_fraction size of the training data as validation and terminate training when the validation score is not improving in all of the previous n_iter_no_change numbers of iterations. The value of this parameter is an integer greater than 1.

tol: When the loss is not improving by at least tol for n_iter_no_change iterations, the training stops. The value of this parameter is a fraction greater than or equal to zero.

## Creating a model

reg2 = SGDRegressor(random_state=2374,learning_rate='constant',early_stopping=True,penalty=None, tol=1e-4,validation_fraction=0.20,n_iter_no_change=10,max_iter=2000)

## Training the model

reg2.fit(X_train_transformed,y_train)

## Testing the model

y_pred = reg2.predict(X_test_transformed)

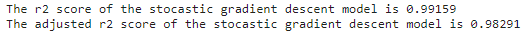

print(f"The r2 score of the stocastic gradient descent model is {np.round(r2_score(y_test,y_pred),5)}")

print(f"The adjusted r2 score of the stocastic gradient descent model is {np.round(r2_adj(r2_score(y_test,y_pred)),5)}")

Using Early Stopping in SGDRegressor:

We need to provide parameters similar to those of GradientBoostingClassifier to implement the early stopping in SGDRegressor, too.

## Creating a model

from sklearn.ensemble import GradientBoostingRegressor

gbc = GradientBoostingRegressor(n_estimators=100, learning_rate=1,max_depth=1, random_state=0,

validation_fraction=0.2, n_iter_no_change=15, tol=1e-4)

## Training the model

gbc.fit(X_train_transformed,y_train)

## Testing the model

y_pred = gbc.predict(X_test_transformed)

print(f"The r2 score of the gradient boosting regressor model is {np.round(r2_score(y_test,y_pred),5)}")

print(f"The adjusted r2 score of the gradient boosting regressor model is {np.round(r2_adj(r2_score(y_test,y_pred)),5)}")

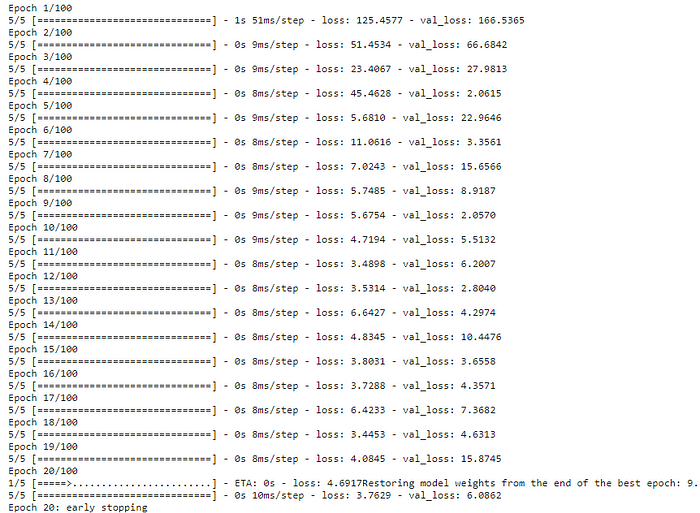

Using Early Stopping in Deep Learning Models

To implement the early stopping along with the deep learning models, we use something known as callbacks.

While creating a callback, we need to give values to the following parameters:

monitor: This parameter is a quantity that we monitor during early stopping.

patience: This parameter indicates the number of epochs for which we will overlook no change in the monitored quantity.

min_delta: The value of the monitored quantity is considered as changed from its previous value if it is changed by at least min_delta.

mode: In ‘min’ mode, the training will stop when the monitored quantity stops decreasing.

verbose: When the verbose value is equal to 1, the model will show the message when early stopping kicks in.

restore_best_weights: If True, the model weights from the epoch with the best value of the monitored quantity will be restored.

Check out the following example for the implementation of early stopping.

## Importing required libraries

import tensorflow as tf

# Preprocessing

scaler = StandardScaler()

X_train_transformed = scaler.fit_transform(X_train)

X_test_transformed = scaler.fit_transform(X_test)

## Creating a model

model = tf.keras.Sequential()

model.add(tf.keras.Input(shape=(3,)))

model.add(tf.keras.layers.Dense(8))

model.add(tf.keras.layers.Dense(8))

model.add(tf.keras.layers.Dense(8))

model.add(tf.keras.layers.Dense(1))

## Creating a callback

es_callback = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20, min_delta=1e-4, mode='min', verbose=1, baseline=0.75, restore_best_weights=True)

## Compiling a model and fitting it on training data

model.compile(optimizer='sgd', loss='mse')

history = model.fit(X_train_transformed, y_train, validation_data=(X_test_transformed,y_test), callbacks=[es_callback],epochs=100)

## Checking the results

df = pd.DataFrame()

df['loss'] = history.history['loss']

df['val_loss'] = history.history['val_loss']

df.plot(xlabel='epochs', ylabel='loss and val_loss', title='Early Stopping')

Outro

Thank you so much for reading. If you liked this article don’t forget to press that clap icon as many times as you want. Keep following for more such articles!

Code and References

Code

Early Stopping

Explore and run machine learning code with Kaggle Notebooks U+007C Using data from Advertising dataset

www.kaggle.com

References

Regularization by Early Stopping – GeeksforGeeks

A Computer Science portal for geeks. It contains well written, well thought and well explained computer science and…

www.geeksforgeeks.org

tf.keras.callbacks.EarlyStopping U+007C TensorFlow v2.15.0.post1

Stop training when a monitored metric has stopped improving.

www.tensorflow.org

sklearn.linear_model.SGDRegressor

Examples using sklearn.linear_model.SGDRegressor: Prediction Latency SGD: Penalties

scikit-learn.org

sklearn.ensemble.GradientBoostingRegressor

Examples using sklearn.ensemble.GradientBoostingRegressor: Gradient Boosting regression Plot individual and voting…

scikit-learn.org

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.