This AI newsletter is all you need #86

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

This week, our eyes were on a flurry of new regulations and policies surrounding the use and identification of Generative AI content. These include a new FCC ban on AI-generated voices in robocalls, White House plans to cryptographically verify its statements to combat deepfakes, and new OpenAI watermarks on DALL-E 3 content.

There have been several worrying uses of text-to-speech recently, including scams that target individuals by impersonating a family member’s voice and AI-generated robocalls that mimicked President Joe Biden’s voice to discourage people from voting in New Hampshire’s first-in-the-nation primary last month. As fallout from the latter, the Federal Communications Commission (FCC) unanimously ruled against AI-generated voices in robocalls. The ruling targets robocalls made with AI voice-cloning tools under the Telephone Consumer Protection Act, a 1991 law restricting junk calls that use artificial and prerecorded voice messages. The White House has also confirmed that it is exploring ways to cryptographically verify the statements and videos it puts out in an effort to combat the rise of politically motivated deepfakes.

While on one front, we have seen a new ban on AI-generated voices in robocalls, ElevenLabs has taken a different approach by introducing Voice Actors — a platform where artists and individuals can permit and monetize the use of their voices. Voice artists can share their professional voice clones with the ElevenLabs community, earn rewards in a completely self-serve way, and work on licensing deals with ElevenLabs to create high-quality ElevenLabs Default Voices. Artists can also decide what content categories their voice can be used for.

In other news, OpenAI’s image generator DALL-E 3 will add watermarks to image metadata as more companies roll out support for standards from the Coalition for Content Provenance and Authenticity (C2PA). C2PA is an open technical standard that allows publishers, companies, and others to embed metadata in media to verify its origin and related information. The move is a step towards improving the trustworthiness of digital information.

Why should you care?

We think there will be a delicate balance between positive and negative uses of Generative AI content, and an equally delicate balance between over- and under-regulation. Clearly, urgent measures have to be taken to combat new scams and election manipulation enabled by new text-to-speech capabilities, but is a blanket ban a clumsy measure that’s unlikely to be obeyed by the really bad actors? Regardless of regulation, we think it is important that AI content can be differentiated from human content and that people are given more power to permit, restrict, or monetize their own data, content, and voices. So, these moves from ElevenLabs and OpenAI are positive. Still, many powerful open-source models are now available, and they are likely to prove more challenging to manage.

– Louie Peters — Towards AI Co-founder and CEO

Hottest News

The WSJ reported that OpenAI CEO Sam Altman is in talks with investors, including the UAE government, to raise funds for a wildly ambitious tech initiative that would boost the world’s chip-building capacity, expand its ability to power AI, among other things, and cost several trillion dollars. The project could require raising as much as $5 trillion to $7 trillion. This seems a wildly unbelievable figure relative to current global GDP and capital investment, and we expect there has been some misinterpretation. However, it wouldn’t be surprising that Sam expects the chip market to grow far beyond current levels and for this to require huge associated investment (including energy demands).

2. Yann LeCun on How an Open Source Approach Could Shape AI

LeCun, chief AI scientist at Meta, is a staunch advocate of open research and regards it as a moral necessity. “In the future, our entire information diet is going to be mediated by [AI] systems,” he says. “They will constitute basically the repository of all human knowledge. And you cannot have this kind of dependency on a proprietary, closed system.”

3. Deepfake ‘Face Swap’ Attacks Surged 704% Last Year, Study Finds

Advances in deepfake technology have led to a significant rise in ‘face swap’ attacks, with a 704% increase in the second half of last year, driven by accessible GenAI tools such as SwapFace and DeepFaceLive. These tools make it easier to produce hard-to-detect deepfakes, facilitating anonymity and contributing to a spike in deepfake-enabled crimes, including a notable financial scam in Hong Kong.

4. OpenAI Reportedly Developing Two AI Agents To Automate Entire Work Processes

OpenAI is reportedly developing two types of AI agents to automate complex tasks. According to The Information, one agent could use a user’s device to transfer data between documents and spreadsheets or fill out expense reports. The second agent is web-centric: It performs web-based tasks such as collecting public data, creating travel itineraries, or booking airline tickets.

5. FCC Officially Declares AI-Generated Voice Robocalls Illegal

Following calls to pass a law at the federal level, the Federal Communications Commission (FCC) has officially declared AI-created robocalls illegal. This isn’t a new law but an expansion of protections already applied to consumers under The Telephone Consumer Protection Act, which outlines legal procedures for actors charged with issuing robocalls.

Five 5-minute reads/videos to keep you learning

This article presents the results from the first hands-on tests of Gemini Ultra and Google’s new Gemini Advanced platform. Early testing shows Gemini Ultra matches or exceeds GPT-4’s performance.

2. Thinking about High-Quality Human Data

High-quality, detailed human annotations are crucial for creating effective deep learning models, ensuring AI accuracy through tasks such as content classification and language model alignment. This article shares practices and techniques for improving data quality.

3. FunSearch: Making new discoveries in mathematical sciences using LLMs

FunSearch is a method to search for new mathematics and computer science solutions. It pairs a pre-trained LLM, whose goal is to provide creative solutions in the form of computer code, with an automated “evaluator,” which guards against hallucinations and incorrect ideas.
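The propose-and-evaluate loop described above can be illustrated with a toy sketch. This is not FunSearch itself: the `propose` function is a hypothetical stand-in for the LLM (it only perturbs two integer coefficients rather than writing programs), and the task, names, and scoring rule are all assumptions made for illustration. What it does show is the role of the evaluator, which runs every candidate and discards anything that crashes or fails to improve the score.

```python
import random
from typing import Callable, List, Optional, Tuple

Program = Callable[[int], int]
Cases = List[Tuple[int, int]]


def evaluate(program: Program, cases: Cases) -> Optional[float]:
    """Score a candidate by running it; return None if it crashes (discarded)."""
    try:
        errors = [abs(program(x) - y) for x, y in cases]
    except Exception:
        return None
    return -float(sum(errors))  # higher is better; zero means a perfect fit


def propose(rng: random.Random, best: Tuple[int, int]) -> Tuple[int, int]:
    """Hypothetical stand-in for the LLM: perturb the best-known (a, b)."""
    a, b = best
    return a + rng.choice([-1, 0, 1]), b + rng.choice([-1, 0, 1])


def search(cases: Cases, steps: int = 500, seed: int = 0) -> Tuple[Tuple[int, int], float]:
    """Repeatedly propose candidates a*x + b and keep only verified improvements."""
    rng = random.Random(seed)
    best_params = (0, 0)
    best_score = evaluate(lambda x: 0, cases)
    for _ in range(steps):
        a, b = propose(rng, best_params)
        score = evaluate(lambda x, a=a, b=b: a * x + b, cases)
        # Only candidates that run and score strictly better are kept.
        if score is not None and score > best_score:
            best_params, best_score = (a, b), score
    return best_params, best_score


if __name__ == "__main__":
    target_cases = [(x, 3 * x + 2) for x in range(5)]
    print(search(target_cases))
```

Because the evaluator is ordinary code rather than another model, a candidate only survives if it actually runs and measurably improves the score.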

The NPHardEval leaderboard offers a benchmark for evaluating the reasoning capabilities of LLMs on a set of 900 algorithmic problems, focusing on NP-Hard and less complex tasks. It undergoes monthly updates with new challenges to maintain the assessment’s integrity and prevent model overfitting.

5. How Good Is Google Gemini Advanced?

Gemini Advanced is a multimodal model with data analysis capabilities and improved reasoning compared to Gemini Pro. It is better than GPT-4 in some cases, but GPT-4 is still more sophisticated. This article presents a few hypotheses that could help explain the mixed evaluations of Gemini Ultra.

Repositories & Tools

- MetaVoice-1B is a 1.2B parameter base model trained on 100K hours of speech for text-to-speech.

- SPIN is the official implementation of Self-Play Fine-Tuning.

- Together AI is a cloud platform for building and running generative AI.

- Vision Arena, inspired by the Chatbot Arena, is a web demo for testing Vision LMs like GPT-4V, Gemini, Llava, Qwen-VL, etc.

- Uform is a pocket-sized multimodal AI for content understanding and generation across multilingual texts, images, and video.

Top Papers of The Week

DeepMind has developed a 270M parameter transformer model that attained Grandmaster-level chess proficiency without reliance on traditional search techniques. Trained on a dataset of 10 million games with action-value insights from Stockfish 16, the model notched up a Lichess blitz Elo of 2895 and displayed the capability to solve advanced chess puzzles.

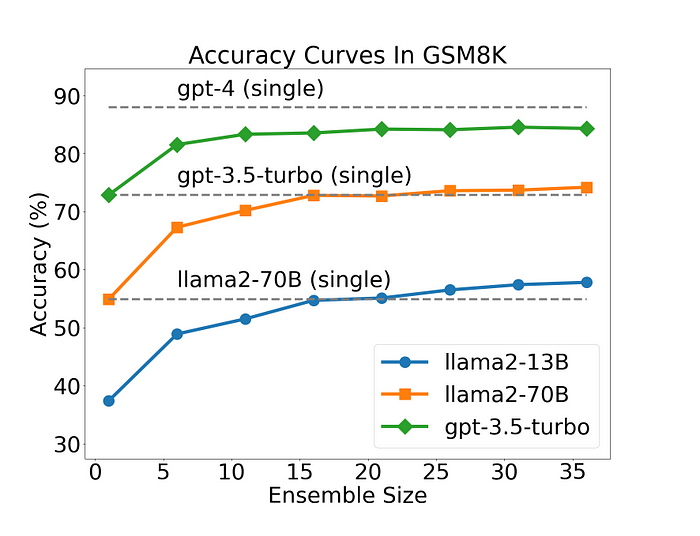

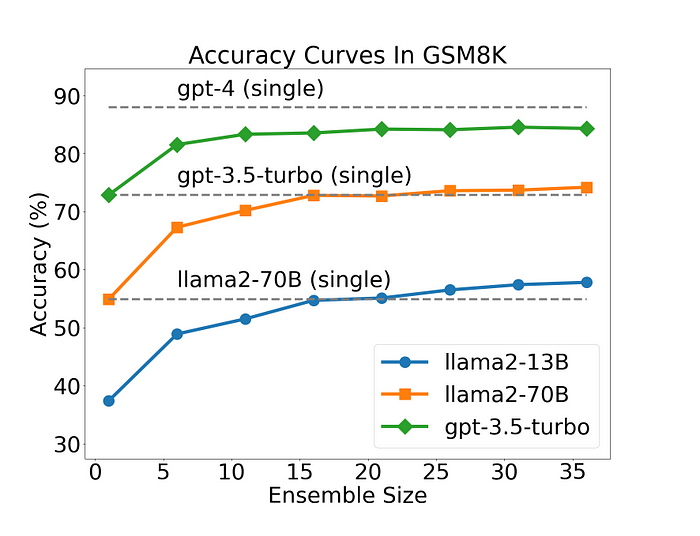

2. More Agents Is All You Need

This paper finds the performance of LLMs scales with the number of agents instantiated via a sampling-and-voting method. However, the degree of enhancement is related to the task difficulty.
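A minimal sketch of the sampling-and-voting idea, under stated assumptions: the same query is sent to several independent "agents" and the most frequent answer wins. Here a plain majority vote stands in for the paper's voting step, and `make_noisy_agent` is a hypothetical stand-in for an LLM call, not part of the paper's code.

```python
import random
from collections import Counter
from typing import Callable, Sequence


def sample_and_vote(query: str, agent: Callable[[str], str], n_agents: int) -> str:
    """Query the agent n_agents times and return the most common answer."""
    answers = [agent(query) for _ in range(n_agents)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


def make_noisy_agent(
    correct: str, wrong: Sequence[str], p_correct: float, seed: int
) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: answers correctly with probability p_correct."""
    rng = random.Random(seed)

    def agent(query: str) -> str:
        return correct if rng.random() < p_correct else rng.choice(wrong)

    return agent


if __name__ == "__main__":
    agent = make_noisy_agent("42", ["41", "43", "44"], p_correct=0.5, seed=0)
    for n in (1, 5, 25):
        print(n, sample_and_vote("What is 6 * 7?", agent, n))
```

The intuition is that when wrong answers are scattered across many alternatives while the correct answer recurs, adding more samples makes the vote more reliable.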

3. Guiding Instruction-based Image Editing via Multimodal Large Language Models

This paper presents MLLM-Guided Image Editing (MGIE). It learns to derive expressive instructions and provides explicit guidance. The model jointly captures this visual imagination and performs manipulation through end-to-end training.

4. AnyTool: Self-Reflective, Hierarchical Agents for Large-Scale API Calls

AnyTool is an LLM agent designed to revolutionize the use of many tools in addressing user queries. It utilizes over 16,000 APIs from Rapid API, assuming that a subset of these APIs could resolve the queries. AnyTool outperforms ToolLLM by +35.4% in terms of the average pass rate on ToolBench.

5. Memory Consolidation Enables Long-Context Video Understanding

The paper proposes re-purposing existing pre-trained video transformers by fine-tuning them to attend to memories derived non-parametrically from past activations. The memory-consolidated vision transformer (MC-ViT) extends its context far into the past and exhibits scaling behavior when learning from longer videos by leveraging redundancy reduction.

Quick Links

1. Brilliant Labs launched $349 Frame glasses said to give multimodal “AI superpowers.” The open-source eyewear helps with AI translations, web searches, and visual analysis right in front of your eyes.

2. Perplexity AI announced a partnership with Vercel. This allows developers using Vercel to integrate Perplexity’s LLMs into the applications they develop on the platform — essentially using them as a knowledge support system.

3. Meta will introduce labels for AI-generated images on Instagram, Facebook, and Threads. The company currently labels images created with its Meta AI feature and intends to extend this labeling to AI images created with tools from other industry leaders like Google and OpenAI.

4. OpenAI’s image generator DALL-E 3 will add watermarks to image metadata as more companies roll out support for standards from the Coalition for Content Provenance and Authenticity (C2PA).

Who’s Hiring in AI

Senior Software Engineer, ChatGPT Model Optimization @OpenAI (San Francisco, CA, USA)

AIML — Sr Engineering Program Manager, ML Compute @Apple (Seattle, WA, USA)

Bridge Fellow — Associate Data Scientist @Metropolitan Council (USA/Temporary)

Head Of AI Implementation @Eltropy (Milpitas, CA, USA)

Generative AI Specialist @FalconSmartIT (Dover, DE, USA/Freelancer)

Jr. Data Analyst / Data Analyst @Assembly (Mumbai, India)

Data Engineer, Amazon Prime @Amazon (Seattle, WA, USA)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.