Inside XGen-Image-1: How Salesforce Research Built, Trained, and Evaluated a Massive Text-to-Image Model

Last Updated on August 15, 2023 by Editorial Team

Author(s): Jesus Rodriguez

Originally published on Towards AI.

One of the most efficient training processes for text-to-image models ever implemented.

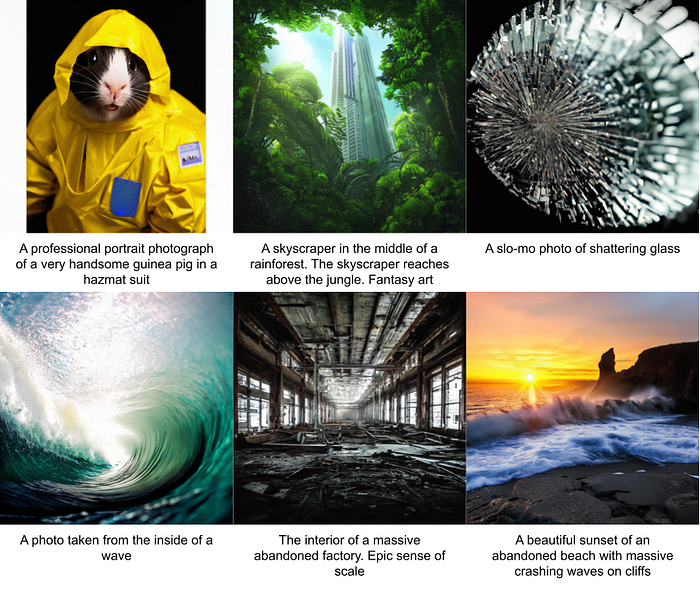

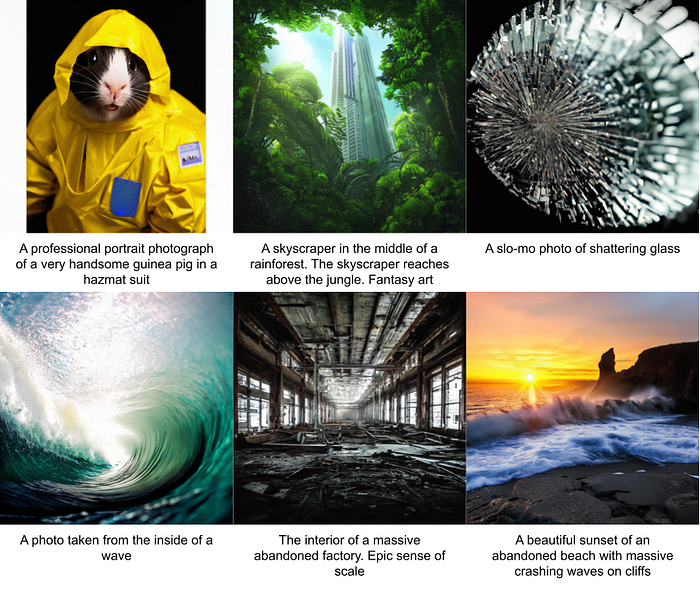

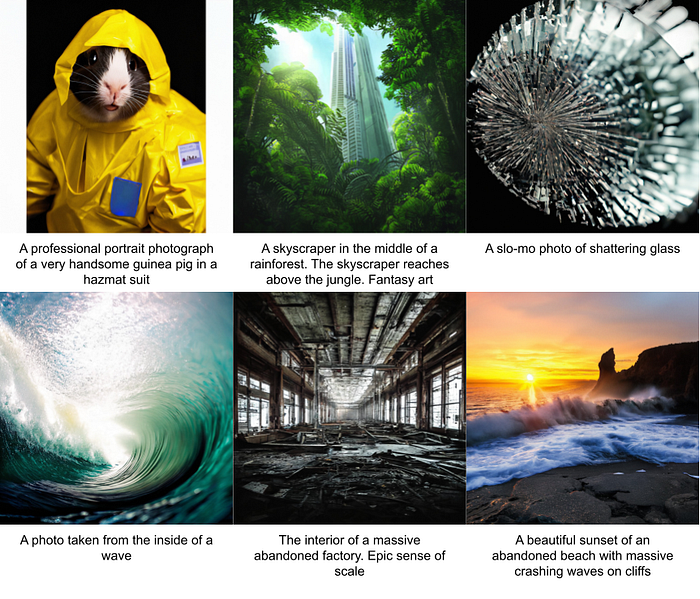

Salesforce has been one of the most active research labs in the new wave of foundation models. In recent months, Salesforce Research has released a variety of models across domains such as language, coding, and computer vision. Most recently, they unveiled XGen-Image-1, a massive text-to-image model that performs on par with popular state-of-the-art models such as Stable Diffusion. Together with the release, Salesforce Research shared extensive details about the training methodology and best practices used in XGen-Image-1. Today, I would like to dive into some of those details.

Building XGen-Image-1, Salesforce Research's first image generation model, involved a series of tricky decisions, ranging from strategic design choices and training methodology to evaluation metrics. The key takeaways are:

• Model Training: Architecturally, XGen-Image-1 is a latent diffusion model with 860 million parameters. Salesforce Research trained it on the publicly available LAION dataset of 1.1 billion text-image pairs.

• Resolution Management: XGen-Image-1 combines a pretrained latent Variational Autoencoder (VAE) with readily available pixel upsamplers, which made it possible to train at notably low resolutions and substantially reduce computational costs.

• Cost-Efficiency: Cost is always a concern when training text-to-image models. With XGen-Image-1, Salesforce Research showed that a competitive image generation model can be trained on Google's TPU stack for a modest investment of approximately $75k.

• Performance Parity: XGen-Image-1 showed prompt-alignment performance comparable to Stable Diffusion 1.5 and 2.1, two of the leading open image generation models.

• Automated Refinement: Specific regions of a generated image, such as faces, can be automatically touched up, which noticeably improves the final result.

• Inference Enhancement: Adding rejection sampling at inference time significantly improved the quality of the results.

Training

Most text-to-image projects develop their own approaches to conditioning, encoding, and upsampling. XGen-Image-1 charted a different course: rather than tackling those components from scratch, the team focused on how far existing pretrained components could be reused. This perspective led them to probe the limits of efficient training, in particular how low the training resolution could go when a pretrained autoencoder is combined with pixel-based upsampling models.

As the pipeline illustrates, a pretrained autoencoder combined with optional pixel-based upsamplers enables low-resolution generation while still producing large (1024×1024) images. The team is also interested in further exploring the practical lower bound on resolution. Quantitative evaluation is performed at 256×256, directly on the VAE output with no upsampling. Qualitative evaluation adds a “re-upsampler” that goes from 256 down to 64 and back up to 256, similar to SDXL's “Refiner,” followed by a 256 to 1024 upsampler.
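
To make the resolution arithmetic concrete, here is a minimal Python sketch. It assumes a Stable Diffusion-style VAE that downsamples by a factor of 8 into 4 latent channels; the article does not state these values, so treat them as illustrative assumptions. The sketch computes the latent grid the diffusion model would denoise at each stage and how many fewer latent positions 256×256 generation involves compared with generating directly at 1024×1024.

```python
# Hypothetical constants: a Stable Diffusion-style VAE (assumed, not from the article)
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(pixel_size: int) -> tuple[int, int, int]:
    """Return the (channels, height, width) latent for a square image."""
    if pixel_size % VAE_DOWNSAMPLE != 0:
        raise ValueError("image size must be divisible by the VAE downsampling factor")
    side = pixel_size // VAE_DOWNSAMPLE
    return (LATENT_CHANNELS, side, side)


def positions(pixel_size: int) -> int:
    """Number of spatial latent positions the diffusion model denoises."""
    _, height, width = latent_shape(pixel_size)
    return height * width


# Pipeline stages described in the article (resolutions in pixels)
train_res = 256               # generation and quantitative evaluation resolution
reupsample = (256, 64, 256)   # "re-upsampler": down to 64, back up to 256
final_res = 1024              # output of the pixel-based upsampler

print("latent at 256:", latent_shape(train_res))
print("latent at 1024:", latent_shape(final_res))
print("re-upsampler path:", " -> ".join(map(str, reupsample)))
print("upsampler factor:", final_res // train_res)
print("fewer latent positions:", positions(final_res) // positions(train_res))
```

Under these assumptions the model denoises a 32×32 latent instead of a 128×128 one, a 16× reduction in spatial positions. That ratio is only a rough proxy for the actual compute savings, which also depend on the network architecture.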

Training Infrastructure

Salesforce Research trained the model on TPU v4s, using Google Cloud Storage (GCS) to store model checkpoints and cloud-mounted drives to store the large training datasets. The main training run took place on a v4-512 TPU slice and lasted 1.1 million steps over approximately 9 days, at a hardware cost of roughly $73k, which is very cheap compared to the alternatives!
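
A quick back-of-the-envelope check, using only the approximate figures above, shows what that budget implies per step and per hour. Because the inputs are rounded, the outputs are rough estimates rather than numbers reported by Salesforce Research.

```python
# Approximate figures reported in the article
steps = 1_100_000   # training steps
days = 9            # "approximately 9 days"
cost_usd = 73_000   # "approximately $73k" in hardware costs

hours = days * 24
seconds = hours * 3600

sec_per_step = seconds / steps          # wall-clock time per optimizer step
cost_per_hour = cost_usd / hours        # cost of the whole v4-512 slice per hour
cost_per_1k_steps = cost_usd / steps * 1000

print(f"~{sec_per_step:.2f} s per step")
print(f"~${cost_per_hour:,.0f} per hour")
print(f"~${cost_per_1k_steps:.0f} per 1,000 steps")
```

In other words, the run averaged roughly 0.7 seconds per step at a few hundred dollars per hour for the entire slice.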

The process was not without challenges. The training loss fluctuated erratically from step to step, despite large batch sizes. The root cause was that every worker used the same random seed, so all workers sampled the same noise timesteps at each step. Seeding each worker based on its rank spread the noise timesteps evenly across the global batch and produced much smoother loss curves.
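
The effect of this seeding bug is easy to reproduce in a toy simulation. The sketch below uses only Python's standard library; the worker count, per-worker batch size, timestep range, and the "base seed plus rank" scheme are hypothetical choices, and real TPU training code would derive per-worker random keys in its own framework. It counts how many distinct noise timesteps end up in one global batch under a shared seed versus a rank-based seed.

```python
import random

NUM_WORKERS = 8          # hypothetical number of data-parallel workers
PER_WORKER_BATCH = 4     # hypothetical per-worker batch size
NUM_TIMESTEPS = 1000     # hypothetical number of diffusion timesteps
BASE_SEED = 42


def sample_timesteps(rng: random.Random, n: int) -> list[int]:
    """Draw n diffusion timesteps uniformly from [0, NUM_TIMESTEPS)."""
    return [rng.randrange(NUM_TIMESTEPS) for _ in range(n)]


def global_batch(seed_for_rank) -> list[int]:
    """Collect the timesteps that every worker would use in one training step."""
    timesteps = []
    for rank in range(NUM_WORKERS):
        rng = random.Random(seed_for_rank(rank))
        timesteps.extend(sample_timesteps(rng, PER_WORKER_BATCH))
    return timesteps


# Buggy setup: every worker uses the same seed, so all draw identical timesteps
shared = global_batch(lambda rank: BASE_SEED)
# Fixed setup: each worker's seed depends on its rank
per_rank = global_batch(lambda rank: BASE_SEED + rank)

print("distinct timesteps, shared seed:", len(set(shared)))
print("distinct timesteps, rank-based seed:", len(set(per_rank)))
```

With a shared seed, the global batch contains no more distinct timesteps than a single worker draws, so each step's loss is likely dominated by whichever few timesteps happened to be sampled. Rank-based seeds average over many more timesteps per step, which is consistent with the smoother loss curves described above.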

Evaluation

To evaluate XGen-Image-1, Salesforce Research plotted two metrics against each other: CLIP Score on the x-axis, which measures how well images align with their prompts, and FID (Fréchet Inception Distance) on the y-axis, which measures how closely the appearance of the generated images matches the reference dataset. Each evaluation sweeps 15 guidance scales. The first figure uses 30,000 image-prompt pairs, while comparisons between checkpoints use a smaller set of 1,000 pairs. The pairs are sampled from the COCO Captions dataset, and each caption is prefixed with “A photograph of” to avoid FID penalties caused by images rendered in different graphic styles.
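
The sketch below illustrates how such an evaluation grid might be prepared. It assumes that "guidance scale" refers to classifier-free guidance, the standard scheme for latent diffusion models; the 15 scale values, the caption formatting, and all function names are hypothetical, since the article does not specify them. Each guidance scale would yield one (CLIP Score, FID) point, and the points together trace one curve per model or checkpoint.

```python
import random

# Hypothetical sweep: 15 evenly spaced guidance scales (actual values not given)
GUIDANCE_SCALES = [1.0 + 0.5 * i for i in range(15)]  # 1.0, 1.5, ..., 8.0


def add_style_prefix(caption: str) -> str:
    """Prefix a COCO caption with "A photograph of" (formatting is an assumption)."""
    caption = caption.strip()
    return f"A photograph of {caption[0].lower()}{caption[1:]}"


def cfg_combine(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance, element-wise: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def sample_pairs(pairs: list, k: int, seed: int = 0) -> list:
    """Draw a fixed random subset, e.g. 30,000 pairs, or 1,000 for checkpoints."""
    return random.Random(seed).sample(pairs, k)


print(add_style_prefix("A man riding a wave on a surfboard."))
print(len(GUIDANCE_SCALES), GUIDANCE_SCALES[0], GUIDANCE_SCALES[-1])
print(cfg_combine([0.0, 1.0], [1.0, 3.0], scale=2.0))
```

Higher guidance scales push samples toward the prompt, which tends to raise CLIP Score at some cost in FID, which is why sweeping the scale gives a trade-off curve rather than a single number.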

Salesforce Research also benchmarked XGen-Image-1 with human evaluation. In this study, conducted on Amazon Mechanical Turk, participants judged how well images aligned with their prompts. Every comparison covers all 1,632 prompts across six separate trials, for a total of ~10k responses per comparison.
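
The ~10k figure follows directly from the numbers above. The sketch also shows one hypothetical way to tally pairwise votes into preference rates; the article does not describe how responses were aggregated, so the vote labels and the simple tally are assumptions.

```python
from collections import Counter

NUM_PROMPTS = 1_632  # prompts reported in the article
NUM_TRIALS = 6       # trials reported in the article

responses_per_comparison = NUM_PROMPTS * NUM_TRIALS
print(responses_per_comparison)  # 9792, i.e. roughly 10k


def preference_rates(votes: list[str]) -> dict[str, float]:
    """Fraction of votes for each option, e.g. "model_a" vs. "model_b" (hypothetical labels)."""
    counts = Counter(votes)
    total = len(votes)
    return {option: n / total for option, n in counts.items()}


print(preference_rates(["model_a", "model_b", "model_a", "model_a"]))
```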

Generating High-Quality Images

An important part of building XGen-Image-1 was ensuring that it generates high-quality images. This relied on two well-known tricks.

The first trick is to generate many images and cherry-pick the best one. To preserve a one-prompt, one-output interface, Salesforce Research automated this step using rejection sampling: the model generates multiple images, and an automated metric selects the best candidate. The team initially considered several metrics, including aesthetic score and CLIP score, but ultimately found PickScore to be the most robust overall metric; prior work has shown that it aligns closely with human preferences, which is the key property here. With this approach, XGen-Image-1 generates 32 images (in a 4×8 grid) in approximately 5 seconds on an A100 GPU and returns the one with the highest score.
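
In code, this selection step reduces to best-of-N: generate N candidates for one prompt, score each one, and return the top scorer. In this minimal sketch, `generate` and `score` are hypothetical callables standing in for the diffusion model and a preference scorer such as PickScore; no real model API is implied. The default of 32 candidates matches the article.

```python
import random
from typing import Callable, TypeVar

Image = TypeVar("Image")  # whatever image type the generator returns


def rejection_sample(
    prompt: str,
    generate: Callable[[str, int], list[Image]],
    score: Callable[[str, Image], float],
    num_candidates: int = 32,
) -> Image:
    """Best-of-N: generate candidates for one prompt and return the top-scoring one."""
    candidates = generate(prompt, num_candidates)
    if not candidates:
        raise ValueError("generator returned no candidates")
    return max(candidates, key=lambda image: score(prompt, image))


# Toy stand-ins so the sketch runs: "images" are floats, and the
# hypothetical scorer simply prefers values close to 0.5.
def toy_generate(prompt: str, n: int) -> list[float]:
    rng = random.Random(0)
    return [rng.random() for _ in range(n)]


def toy_score(prompt: str, image: float) -> float:
    return -abs(image - 0.5)


best = rejection_sample("a photograph of a cat", toy_generate, toy_score)
print(f"selected candidate: {best:.3f}")
```

Keeping the generator and scorer as plain callables makes it easy to swap PickScore for another metric, such as CLIP score, without touching the selection logic.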

The second technique improves images by inpainting regions with poor aesthetics, in five steps:

1. A segmentation mask covering the target object is predicted from a text prompt.

2. The object is cropped based on the segmentation mask.

3. The crop is expanded.

4. img2img is run on the crop with a caption matching the segmentation prompt (e.g., “a photograph of a face”), refining that region.

5. The segmentation mask is used to blend the refined crop seamlessly back into the original image.
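
The steps above can be sketched in plain Python on single-channel images stored as nested lists. This is a simplified illustration under several assumptions: the mask from step 1 is given as input, "expanding" the crop is interpreted as padding its bounding box by a margin, `refine` is a hypothetical stand-in for img2img that returns a crop of the same size, and blending uses the hard mask rather than the soft, feathered masks real pipelines often use.

```python
from typing import Callable

Grid = list[list[float]]         # single-channel image as rows of pixel values
Mask = list[list[bool]]          # True where the segmented object is
Box = tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def mask_bbox(mask: Mask) -> Box:
    """Tight bounding box around the True pixels of the mask (step 2)."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not rows:
        raise ValueError("mask is empty")
    return min(rows), min(cols), max(rows) + 1, max(cols) + 1


def expand_box(box: Box, margin: int, height: int, width: int) -> Box:
    """Grow the box by a margin on every side, clamped to the image (step 3)."""
    top, left, bottom, right = box
    return (max(0, top - margin), max(0, left - margin),
            min(height, bottom + margin), min(width, right + margin))


def crop(image: Grid, box: Box) -> Grid:
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def touch_up(image: Grid, mask: Mask, refine: Callable[[Grid], Grid], margin: int = 1) -> Grid:
    """Crop around the mask, refine the crop, and paste back only masked pixels."""
    box = expand_box(mask_bbox(mask), margin, len(image), len(image[0]))
    refined = refine(crop(image, box))  # hypothetical stand-in for img2img (step 4)
    top, left, bottom, right = box
    out = [row[:] for row in image]     # leave the original image untouched
    for r in range(top, bottom):
        for c in range(left, right):
            if mask[r][c]:              # step 5: the mask selects which pixels change
                out[r][c] = refined[r - top][c - left]
    return out
```

Passing the expanded crop to `refine` gives the model some surrounding context, while pasting back only the masked pixels keeps the rest of the image exactly as generated.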

Combining both methods allows XGen-Image-1 to produce high-quality images.

XGen-Image-1 is not a breakthrough in text-to-image modeling, but it still makes some very useful contributions. The biggest is showing that many pretrained components can be reused to achieve state-of-the-art performance in this type of model. XGen-Image-1 achieved performance similar to Stable Diffusion while using a fraction of the training compute. Very impressive indeed.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.