How Neuroscience Inspires AI

Last Updated on September 13, 2020 by Editorial Team

Author(s): Andreea Bodnari

Artificial Intelligence, Neuroscience

Can we invent artificial intelligence ex nihilo, without peeking at human intelligence for inspiration?

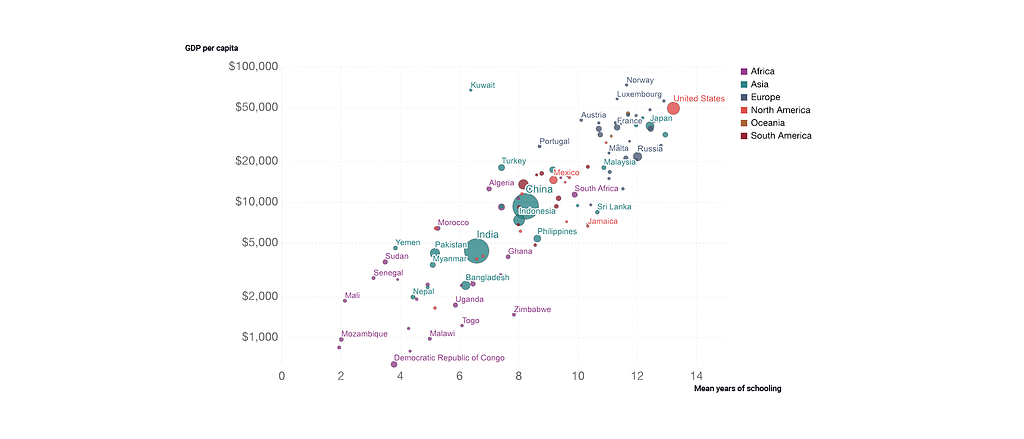

Intelligence is a determining factor for success and prosperity. Children rarely attend school out of a burning desire, but because their parents know that education is a passport to the future. The economic output says it all: there is a strong correlation between how much time we spend in school and the gross domestic product per capita. Through education, we harvest a more innovative and productive society.

Every person inherits some stock of intelligence. But without an investment strategy, intelligence will not pay dividends. Financial gurus ranging from Warren Buffett to Ray Dalio will tell you that the secret to investing is diversification. As a civilization, we take this advice to heart and to a whole other level: we diversify by inventing artificial intelligence that can assist, augment, or automate.

A Starting Point

Can we invent artificial intelligence ex nihilo, without peeking at human intelligence for inspiration? It’s not for lack of trying that AI scientists landed on the human brain as a starter blueprint. The brain is a stunning device capable of computation, prediction, storage, and more. As the old cliche has it, imitation is the sincerest form of flattery.

The brain is complex machinery. Elaborate networks of neurons power our ability to navigate dynamic environments and memorize information. Neuron networks are like a chatty crowd — they constantly “fire” news and instructions through electrical signals. If you wanted to download the neuron updates, how would you interrogate the chatter? Novices might try inserting a wire into the brain to capture the electrical signal of a single neuron. But the isolated electrical readout from a single neuron is the equivalent of feeling the bass and thinking you’re experiencing a full song. Studying the brain as a conglomerate of neurons will not solve the problem of intelligence, because in the big picture neurons stop being distinct entities.

Recent breakthroughs in computational techniques for studying the brain enable us to visualize the neural connections in the brain more holistically. Such virtual mappings of the brain circuitry help us imagine what it takes to design general intelligence. We can already point to two brain regions that hold the key to the AI future: the neocortex and the hippocampus.

Neocortex: Prediction, Inference, and Behavior

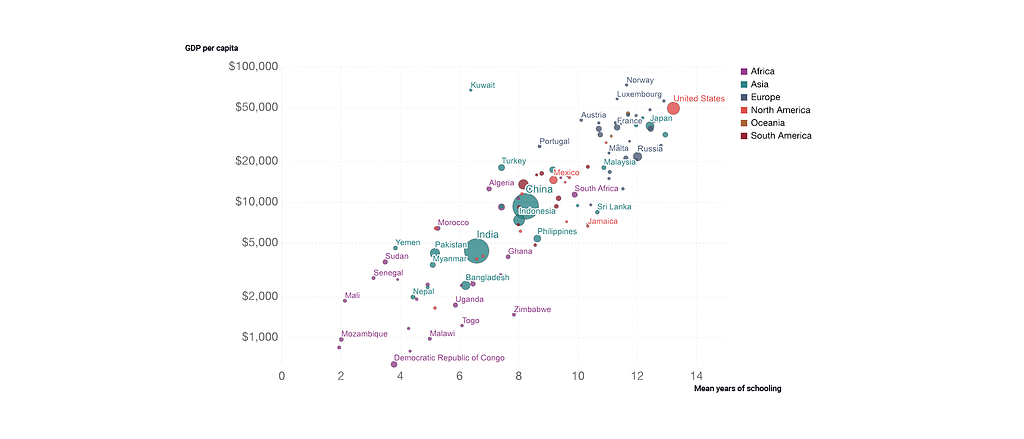

Similar to digital computers, the brain processes information using electrical signals exchanged by neurons. Neural computations can happen faster than the blink of an eye (pun intended) so we can react quickly in a rapidly changing world. These computations take place in the outer layer of the brain: the cerebral cortex. The cerebral cortex is home to our conscious thoughts and actions. In the human brain, the neocortex is the largest part of the cerebral cortex, with the allocortex making up the rest. The neocortex houses the primary areas that receive inputs for hearing, seeing, and bodily sensations, while the allocortex handles functions such as smell and memory.

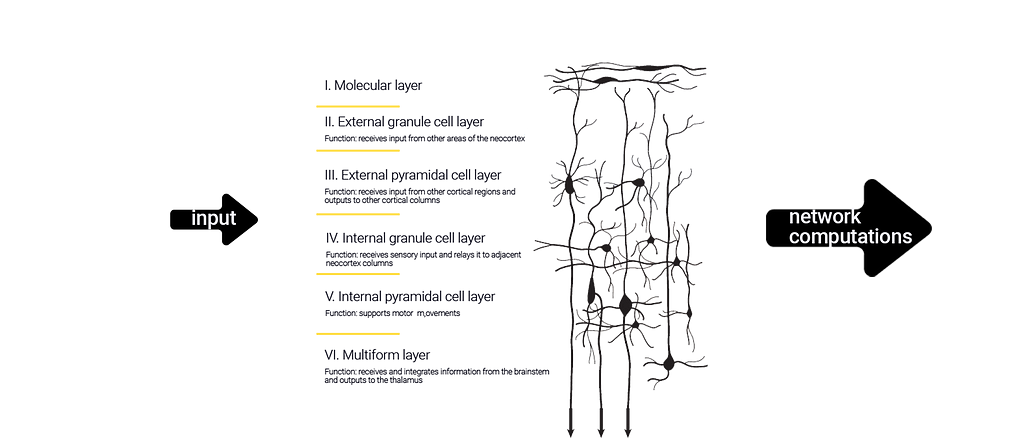

The neocortex is a multi-functional computation device. Different parts of the neocortex specialize in specific cognitive functions. Despite the apparent specialization of neocortex regions, they are all interconnected and highly dependent on each other.

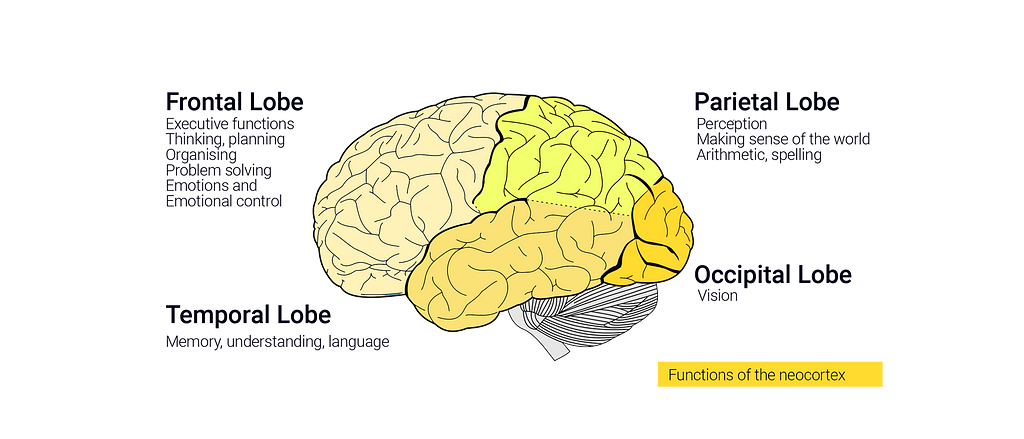

As the center of cognition, the neocortex consists of six layers with assorted neurons specialized in different types of information processing. The neurons in the neocortex take as input information coded as signals. These input signals activate entire networks of neurons. Once a neuron network processes the input, it returns a transformed version of the original input that can be recognized and consumed by neighboring neuron networks. The output of a network computation can contain activation signals, inhibitory signals, or just information encoded for processing by a different neuron network.
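The idea of one neuron network handing a transformed signal to its neighbor can be sketched in a few lines of Python. This is a loose illustration, not a model of real cortical circuitry: the function names, the sigmoid squashing function, and all numbers are assumptions made for the example. Positive weights stand in for activation signals and negative weights for inhibitory ones.

```python
import math

def sigmoid(x: float) -> float:
    """Squash a summed input into a firing strength between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def network_output(inputs, weights, biases):
    """Transform input signals with one small network of units.

    Each row of `weights` belongs to one unit. Positive weights play the
    role of excitatory connections, negative weights of inhibitory ones.
    """
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs

# Hypothetical values chosen only for illustration.
signal = [1.0, 0.0, 1.0]
first_network = ([[2.0, -1.0, 0.5], [-1.5, 1.0, -0.5]], [0.0, 0.0])
second_network = ([[1.0, -2.0]], [0.0])

# The first network's output becomes the second network's input.
hidden = network_output(signal, *first_network)
final = network_output(hidden, *second_network)
print(hidden, final)
```

The second network never sees the raw signal, only the re-encoded version produced by the first, which mirrors the hand-off between neighboring networks described above.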

Hippocampus: Memory Hub

The brain processes data, but it also accumulates information as we learn and interact with the world around us. Memories allow our brains to construct a model of the world, which influences quick, millisecond processing. Memories can last a long time because neurons process information on timescales that range from milliseconds to years.

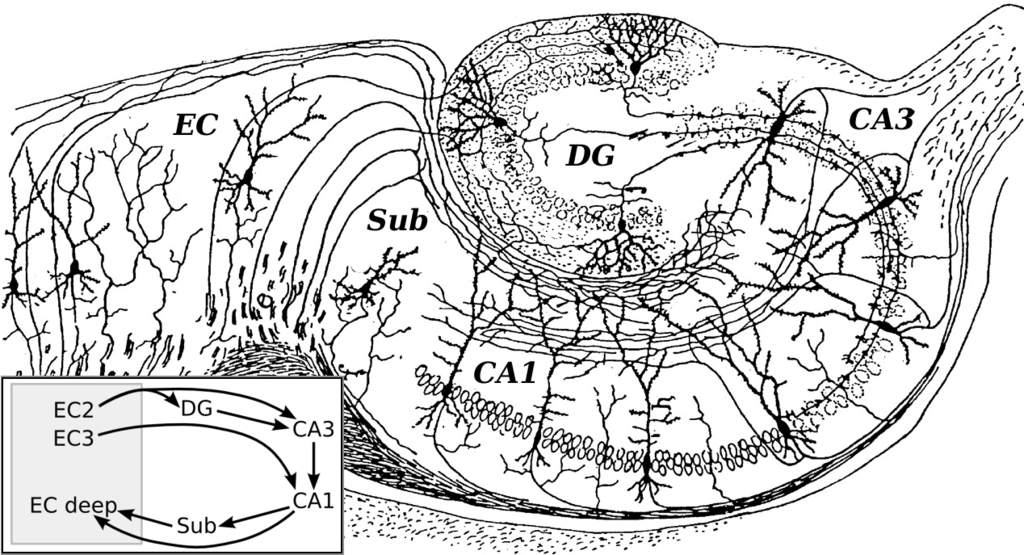

The hippocampus assists with the formation of new memories by consolidating information from short-term memory to long-term memory. It underpins spatial memory that enables navigation. As a jack of all trades, the hippocampus also supports computations affiliated with learning and emotions. The hippocampus contains two main interlocking parts: the hippocampus proper (regions CA1–4) and the dentate gyrus. Keep reading for a glimpse into the critical role these regions play in the greater computation machinery.

Neurons: Hall of Fame

Scientists cannot precisely count the number of neurons in the brain: imagine trying to count the blinking lights on your Christmas tree. Based on techniques used to estimate the number of stars in the Milky Way galaxy, we estimate that the human brain consists of a staggering 86 billion neurons. That is a significant number of neurons squeezed into a relatively small space. Now, remember the “wrinkles” on your brain? Having many folds increases the surface area of the brain while keeping the volume relatively constant. The brain folds allow more neurons to fit into the same space.

Neurons fall into multiple classes, a dozen or more clustered in each of the approximately 1,000 regions of the brain. In the neuron hall of fame, several neurons (cells) have superstar status:

- Martinotti cells sit in the neocortex where they act as a safety device. With all the brain chatter that goes on between neurons, you might wonder how the brain avoids ending up in a state of chaos. Martinotti cells are part of the answer. When a Martinotti cell receives signals above a certain electrical frequency, it responds by sending back inhibitory signals that moderate the activity of surrounding cells. I like to think of Martinotti cells as the yoga instructor teaching the brain the latest breathing techniques.

- Layer 5–6 pyramidal cells of the neocortex are among the most extensively studied neurons and a benchmark for information processing in excitatory neurons. This neuron type is the talk of the town because of the critical role it plays in cognition. Specifically, it integrates input across all neuron layers in the neocortex and is the principal gatekeeper funneling information to regions of the brain below the cortex.

- The CA1 pyramidal cell sits in the hippocampus and plays a critical role in parallel processing of information, autobiographical memory, mental time travel, and autonoetic consciousness. I’ll zero in on the last of these functions since it drives a fascinating property of the human brain. Autonoetic consciousness is the ability to mentally place yourself in the past, future, or hypothetical situations, and to examine your thoughts within such abstract circumstances. Without autonoetic consciousness, we could not reason in the abstract.

- CA3 pyramidal cells are the part of the hippocampus responsible for the encoding, storage, and retrieval of memories. The CA3 neurons enable an optimal filing system with ample storage space.

- Dentate gyrus granule cells are foundational cells in the hippocampus. They preprocess incoming information using classification and pattern recognition techniques. The dentate gyrus distinguishes new memories from old ones and prepares content for efficient storage in the CA3 hippocampus region.
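The safety-device role attributed to Martinotti cells above can be illustrated with a toy feedback rule. The sketch below is only an analogy under stated assumptions: the function name `feedback_inhibition`, the 50 Hz threshold, and the inhibition strength are hypothetical values picked for the example, not measured properties of real cells.

```python
def feedback_inhibition(rates, threshold=50.0, strength=0.5):
    """Dampen activity when the average firing rate exceeds a threshold.

    `rates` are firing rates in Hz for a group of cells. The threshold
    and strength values are hypothetical, not measured quantities.
    """
    mean_rate = sum(rates) / len(rates)
    if mean_rate <= threshold:
        return list(rates)  # quiet enough: no inhibition is sent back
    excess = mean_rate - threshold
    # Subtract a shared inhibitory signal, never dropping below zero.
    return [max(0.0, r - strength * excess) for r in rates]

calm = feedback_inhibition([10.0, 20.0, 30.0])
busy = feedback_inhibition([80.0, 90.0, 100.0])
print(calm)  # [10.0, 20.0, 30.0]
print(busy)  # [60.0, 70.0, 80.0]
```

The quiet group passes through unchanged, while the busy group is pulled back toward the threshold, which is the moderating effect the yoga-instructor analogy points at.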

Artificial Intelligence vs Biology

In their quest for artificial intelligence, researchers started by building minimalistic renditions of the neural networks found in living brains. These artificial neural networks (ANNs) made academic headlines in the 1940s but had a slow takeoff, primarily due to computational limitations. However, artificial neural networks enjoyed a renaissance in the 2010s when graphics processing units (GPUs) joined central processing units (CPUs) in accelerating general-purpose scientific computing. GPUs made new ANN learning techniques such as deep learning practical.
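To give a feel for how minimalistic those early renditions were, here is a sketch of a threshold unit in the spirit of the neuron model McCulloch and Pitts described in 1943. The function names and the choice of weights and thresholds are assumptions made for illustration; the article itself does not specify a particular model.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted input sum reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)

def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)

and_table = [logical_and(a, b) for a in (0, 1) for b in (0, 1)]
or_table = [logical_or(a, b) for a in (0, 1) for b in (0, 1)]
print(and_table)  # [0, 0, 0, 1]
print(or_table)   # [0, 1, 1, 1]
```

A single unit like this has no learning rule at all; modern deep learning stacks many such units, replaces the hard threshold with smooth functions, and tunes the weights from data.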

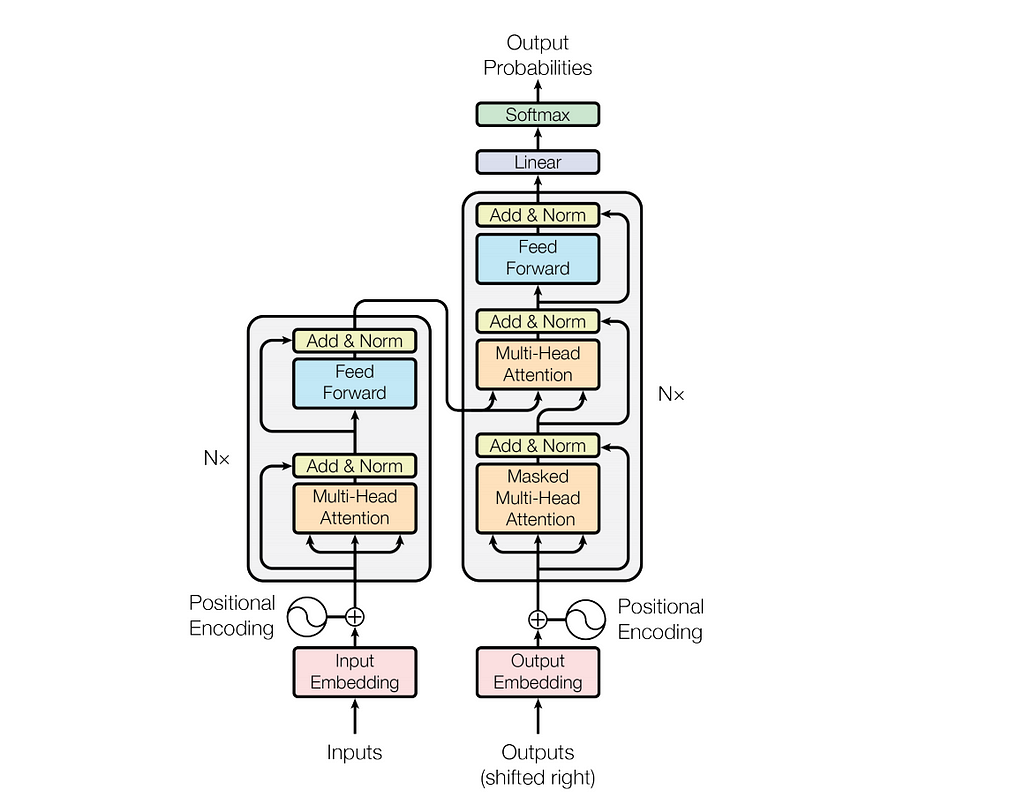

GPT-3 is an exciting example of deep learning applied to language understanding. Unlike prior language understanding models that focus on just identifying information from language, GPT-3 can also generate information. Essentially an AI prodigy, GPT-3 caused heated controversy in the news because it can generate text that reads so well you’d think a human wrote it.

Judging by the architecture of an artificial neural network like GPT-3, you’d think scientists are taking all the right steps in the right direction. The GPT-3 information circuitry looks intricate, with different input gates and multiple protocols for data transformation. Yet, the complexity of GPT-3 information circuits pales in comparison to the sophistication of the brain.

The time is now for AI scientists and engineers to take an integrated approach to their practice. For brain scientists and AI scientists alike, the goal is the same: diverse intelligence.

How Neuroscience Inspires AI was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.