Policy Learning — Deep Reinforcement Learning

Last Updated on January 21, 2025 by Editorial Team

Author(s): Sarvesh Khetan

Originally published on Towards AI.

A. Problem Statement

We have already seen the problem statement here and saw how to solve it using Q learning approach but since Q learning has its own drawbacks, researchers innovated this approach called policy learning

B. Solution Algorithms for Discrete Action Space — Policy Gradient / Gradient Policy Optimization (GPO)

When the action space is discrete i.e. our game has actions like move left / move right / …. (Ping Pong falls into this category)

Now to train the above model we need to know the actual probabilities so that we can calculate cross entropy loss

There are many methods to find the actual probabilities for a given state, but we will see the most prominent ones :

- Method 1 : Increase the Probability of Good Trajectories

- Method 2 : Increase the Probability of Good Trajectories iff it is above baseline model

- Method 3 : Increase the probability of good actions in good trajectories and decrease the probability of bad actions in bad trajectories

C. Solution Algorithms for Continuous Action Space

Above ping pong game had a discrete action space and we saw techniques to solve such games which have discrete action space but what if the game has continuous action space like move left with a speed of 23mph / jump at an angle of 23 degree / … In such a case instead of learning a discrete probability distribution we will learn a continuous probability distribution i.e. Gaussian Distribution defined by its mean and variance. Hence the architecture will look as follows

Now to find optimal weights in this architecture you can use all the methods that we have done for discrete action space !!

[Method 1] : Increase the Probability of Good Trajectories

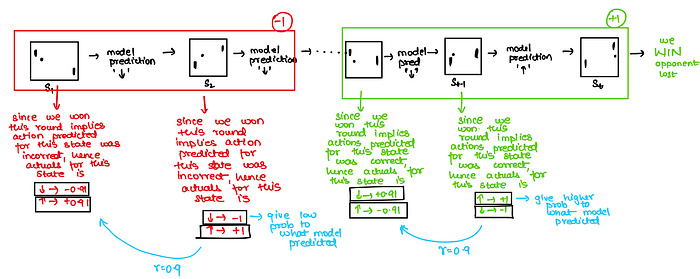

The idea here to calculate the actuals for a state is simple and can be understood by below example

Hence to summarize the intuition to finding actuals here is :

- If we won the round, we’d like our network to generate more of the such trajectories/rollouts/episodes. That is to say that we would like our network to take more such actions which leads to more such winning trajectories. Hence increase the probability of such actions.

- Alternatively, if we lose, we’re going to try and generate less of these trajectories/rollouts/episodes (consequently actions) by decreasing it’s probability.

General Formulation

Now to solve this optimization problem we will use gradient ascent algorithm

Now in GPO, we approximate this expectation with a sample mean by collecting a set of N trajectories

Issues — Computationally inefficient

Therefore we need to somehow reduce these calculations to make our algorithm work faster !!

Solutions to reduce calculations !!

[Method 2] : Increase the Probability of Good Trajectories iff it is above baseline model

Issue with Method 1 : High Variance Issue

Above we saw that in GPO we approximate the expectation using mean i.e. instead of calculating the expected reward over all the possible trajectories we consider a sample of trajectories and take mean of all the rewards associated with trajectories in this sample.

This expectation approximation with sample mean leads to low bias high variance problem (overfitting problem) if sample size is small!! And due to computation constrains most of the time the sample size has to be kept low…

Idea

Hence to solve this issue researchers thought of

- Increasing the probability of the paths that are better than some baseline average

- And decreasing the probability of the paths that are worse than some baseline average

Generalize Formulation

[Method 3] : Increase the probability of good actions in good trajectories and decrease the probability of bad actions in bad trajectories

The idea of reward discounting is simple : —

- In rounds that we win, we know something worked but dont know exactly what worked. Hence in method 1 we associated this win to all the actions taken to get to this win but in this method we will say that the end actions caused the win (hence increase their prob) while the early actions did not led to the win (hence decrease their prob). Think about it this way — if you moved up at the first frame of the episode, it probably had very little impact on whether or not you win. However, closer to the end of the episode, your actions probably have a much larger effect as they determine whether or not your paddle reaches the ball and how your paddle hits the ball.

- In rounds that we lose, we know something did not work but dont know exactly what did not work. Hence in previous method we associated this loss to all the actions taken to get to this loss but in this method we will say that the end actions caused the loss (hence decrease their prob) while the starting actions worked (hence increase their prob)

Code Implementation

CV/reinforcement_learning/ping_pong_proximal_gradient.ipynb at main · khetansarvesh/CV

Implementation of algorithms like CNN, Vision Transformers, VAE, GAN, Diffusion …. for image data …

github.com

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Take our 90+ lesson From Beginner to Advanced LLM Developer Certification: From choosing a project to deploying a working product this is the most comprehensive and practical LLM course out there!

Towards AI has published Building LLMs for Production—our 470+ page guide to mastering LLMs with practical projects and expert insights!

Discover Your Dream AI Career at Towards AI Jobs

Towards AI has built a jobs board tailored specifically to Machine Learning and Data Science Jobs and Skills. Our software searches for live AI jobs each hour, labels and categorises them and makes them easily searchable. Explore over 40,000 live jobs today with Towards AI Jobs!

Note: Content contains the views of the contributing authors and not Towards AI.