Multilingual Emotion Recognition — Full Experimental Report

Last Updated on November 2, 2024 by Editorial Team

Author(s): Lorenzo Pozzi

Originally published on Towards AI.

Humans are quite good at recognizing each other’s emotions. We evolved to do the best we can to maximize our social bonds and increase our chances of survival in the wild. Nowadays, this component is not as prominent, but we still want to know the feelings of the people around us.

In some cases, the amount of information that we have to process exceeds our cognitive abilities, and that’s when we turn to machines for assistance. Emotion recognition has many practical applications, several of which are already widely used in the market. Here are some examples.

One is surely marketing and brand monitoring. Following the idea that a product that does not evolve is sooner or later going to perish, businesses can analyze social media or customer reviews to track public sentiment, understand brand perception, and adjust their marketing strategies in response to audience emotions. In mental health, emotion recognition is also being used to monitor users’ emotional states through journaling apps or therapy chatbots, providing personalized support and even detecting early signs of depression or anxiety.

Recognizing how valuable and practical it is to understand and analyze emotions, I decided to write this article to share some knowledge I gained while developing an emotion recognition system.

Moreover, in my case, I had to work with Italian data, which made many out-of-the-box solutions unusable since they work strictly in English. Being in the long tail of language distribution can sometimes be hard, but there are solutions available. In this article, I will discuss the one I found.

Human Emotions

Classifying emotions is not an easy task, starting from their formalization. In emotion recognition, one widespread framework is Plutchik’s wheel of eight emotions: joy, trust, fear, surprise, sadness, disgust, anger and anticipation. For my project, however, I wanted to explore a broader range of emotions. After conducting some research, I discovered the GoEmotions dataset, developed by Google, which offers a more nuanced classification of emotions.

This is the largest manually annotated dataset of emotions, containing 58k English Reddit comments, labeled for 27 emotion categories or Neutral.

The taxonomy proposed in GoEmotions is broader than the standard classification, and more detail means finer-grained results when we need to create a report.

To the best of my knowledge, there are two possible technical solutions to this task, which I refer to as the specialized solution and the zero-shot solution. For the specialized approach, I picked models that were trained on the GoEmotions dataset, specifically SamLowe/roberta-base-go_emotions and SamLowe/roberta-base-go_emotions-onnx, both available on Hugging Face. The problem with these is that they only work in English. As a workaround, I use a local translator from the target language to English. The second solution uses multilingual zero-shot classifiers (the Zeroshot Classifiers collection by MoritzLaurer on Hugging Face), which do not require this extra step but have not been trained specifically for this kind of task.

I will leave the full notebook I used for this analysis. You can open it in Google Colab and replicate all the results. You can find it at the end of the article.

Dataset Overview

First, we need to load the dataset using the datasets library from Hugging Face 🤗. For all the analysis in this article, I used the first 100 sentences of the test split.

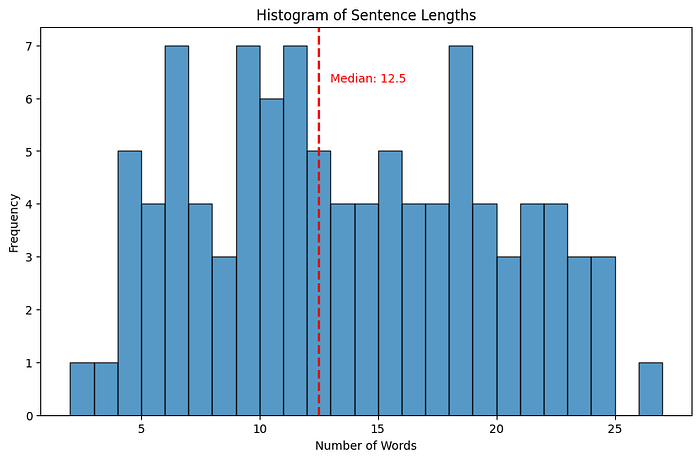

It is always useful to first analyse the data we are working with. Given that I am planning to use the same system in different scenarios, I need to have a clearer idea of the distribution of the data I’m working with. One important factor to consider is the length of the sentences. Recognizing emotions in short social media comments can be quite a different task from detecting emotions in longer documents such as newspapers or blog posts. Although machine learning models are used for their generalization capabilities, they are still bound by their training data. Hence the importance of knowing the starting distribution.

With that said, here is the code to plot a histogram of the sample:

import datasets
import matplotlib.pyplot as plt
import numpy as np

# Load the "simplified" GoEmotions configuration from the Hugging Face Hub
dataset_name, dataset_config_name = "go_emotions", "simplified"
emotion_dataset_dict = datasets.load_dataset(dataset_name, dataset_config_name)

# First 100 sentences of the test split
split_name = "test"
sentences = emotion_dataset_dict[split_name]["text"][:100]

# Step 1: Calculate the length of each sentence in words
sentence_lengths = [len(sentence.split()) for sentence in sentences]

# Step 2: Plot the histogram of sentence lengths (one bin per word count)
bins = range(min(sentence_lengths), max(sentence_lengths) + 2)
plt.figure(figsize=(10, 6))
counts, _, _ = plt.hist(sentence_lengths, bins=bins, alpha=0.75, edgecolor='black')

# Step 3: Highlight the median length
median_length = np.median(sentence_lengths)
plt.axvline(median_length, color='red', linestyle='dashed', linewidth=2)
plt.text(median_length + 0.5, counts.max() * 0.9, f'Median: {median_length}', color='red')

plt.title('Histogram of Sentence Lengths')
plt.xlabel('Number of Words')
plt.ylabel('Frequency')
plt.show()
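When a plot is not convenient (for example in a script or a log file), the same distribution can be summarized numerically. The following is a minimal sketch that uses only the standard library; the `summarize_lengths` helper and the toy `sample_sentences` list are illustrative assumptions and are not part of the original notebook.

```python
from statistics import median


def summarize_lengths(sentences):
    """Return min, median and max sentence length in whitespace-separated words."""
    lengths = [len(s.split()) for s in sentences]
    return {"min": min(lengths), "median": median(lengths), "max": max(lengths)}


# Toy stand-in for the 100 test sentences (hypothetical examples)
sample_sentences = [
    "I love this!",
    "That was a really disappointing ending to the season.",
    "Thanks for the help",
]

summary = summarize_lengths(sample_sentences)
print(summary)
```

On the real data, `sample_sentences` would simply be replaced by the list of sentences taken from the test split.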

Experiment and Results

Hardware specifics

Hardware requirements always represent a major bottleneck in any machine learning pipeline. To have a more complete view of the possibilities of the system I was developing, I ran the benchmarks locally on both CPU and GPU. I leave the specifics for reproducibility:

- CPU: Intel(R) Core(TM) i7-8750H

- GPU: Quadro P1000

Time results

As a Machine Learning engineer, it is important to consider not only how good a model is, but also how fast it will run at inference time once the final system is deployed. In my particular use case, I need to process hundreds of thousands of sentences, so the choice of the final model is heavily influenced by how fast it can process the input text.

To measure this, we can use these few lines of code:

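from transformers import pipeline

# `labels` is assumed to be the list of GoEmotions label names defined earlier in the notebook
hypothesis_template = "The emotion expressed is {}"
classes_verbalized = labels
zeroshot_classifier = pipeline("zero-shot-classification", model="MoritzLaurer/deberta-v3-base-zeroshot-v2.0", device='cuda')

# Next notebook cell (the %%time magic must be its first line)
%%time
model_outputs = zeroshot_classifier(emotion_dataset_dict[split_name]['text'][:100], classes_verbalized, hypothesis_template=hypothesis_template, multi_label=True)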
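To see why the number of labels matters for speed, it helps to look at how an NLI-based zero-shot classifier frames the task: each sentence is paired with one hypothesis per candidate label, and each pair needs its own forward pass. The sketch below only builds those pairs and does not run any model; `build_nli_pairs` and the shortened label list are illustrative assumptions.

```python
def build_nli_pairs(sentences, candidate_labels, hypothesis_template):
    """Pair every sentence (premise) with one filled-in hypothesis per label."""
    return [
        (sentence, hypothesis_template.format(label))
        for sentence in sentences
        for label in candidate_labels
    ]


hypothesis_template = "The emotion expressed is {}"
toy_sentences = ["I can't believe we won!", "Leave me alone."]
toy_labels = ["joy", "anger", "surprise"]  # shortened label set, for illustration only

pairs = build_nli_pairs(toy_sentences, toy_labels, hypothesis_template)
for premise, hypothesis in pairs:
    print(f"{premise!r} -> {hypothesis!r}")

# The number of forward passes grows as len(sentences) * len(labels)
print(len(pairs))
```

By the same arithmetic, 100 sentences with 28 candidate labels correspond to 2,800 premise-hypothesis pairs, while a 7-label set needs only 700.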

In the notebook, I wrote some more functions to compute times when considering only the 7 main emotions: joy, trust, fear, surprise, sadness, disgust, anger. The authors of GoEmotions provide a mapping of their 27 classes to the standard categories. The results obtained:

The full test split of the go-emotions dataset is approximately 5k sentences.

The roberta-base-go_emotions-onnx model is a quantized version that was made available by the author in the same repository. Quantized models typically have slightly lower performance but are often used in real-world applications due to their high inference speed. Given the importance of processing time in this project, I gave it a try.

Let’s first consider the scenario with the full set of emotions. Using the deberta-v3-base and deberta-v3-large models on a GPU would require ≈1h 30min and ≈5h, respectively. For practical reasons only the wall time of deberta-v3-base is reported; on CPU it would require ≈4h 30min. By comparison, the non-quantized roberta-base model would require only ≈30min on CPU. The quantized version needs even less: ≈2min on CPU!
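Estimates of this kind can be obtained by scaling the wall time measured on the 100-sentence sample up to the ≈5k sentences of the test split. Here is a minimal sketch of that arithmetic. The 108-second sample time is a hypothetical value chosen only to be consistent with the ≈1h 30min figure, not a measurement from the notebook, and the calculation assumes that throughput stays constant as the input grows.

```python
def extrapolate_seconds(sample_seconds, sample_size, total_size):
    """Scale a measured wall time linearly to a larger number of sentences."""
    return sample_seconds / sample_size * total_size


def format_duration(seconds):
    """Render a duration in seconds as 'Xh YYmin'."""
    minutes = round(seconds / 60)
    return f"{minutes // 60}h {minutes % 60:02d}min"


# Hypothetical wall time for 100 sentences (not a value from the notebook)
sample_seconds = 108.0
estimate = extrapolate_seconds(sample_seconds, sample_size=100, total_size=5000)
print(format_duration(estimate))
```

A linear estimate ignores fixed costs such as model loading and any effect of batching, so it is best read as an order of magnitude.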

Decreasing the number of labels also reduces the time needed to run over the test set. We can estimate that it would take ≈30min (on GPU) and ≈1h 10min (on CPU) for deberta-v3-base, while the larger version would complete the task in ≈1h 15min (on GPU) and ≈4h 30min (on CPU).
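For the specialized models, which always output the fine-grained labels, one way to report on the smaller label set is to aggregate the scores of the labels that belong to the same group. The sketch below takes the maximum score within each group. Both the partial `fine_to_coarse` mapping and the max rule are assumptions made for illustration; the complete mapping should be taken from the GoEmotions authors.

```python
# Partial, illustrative mapping from fine-grained labels to coarse groups (assumption)
fine_to_coarse = {
    "anger": "anger", "annoyance": "anger",
    "fear": "fear", "nervousness": "fear",
    "joy": "joy", "amusement": "joy",
    "sadness": "sadness", "grief": "sadness",
}


def aggregate_scores(fine_scores, mapping):
    """Collapse fine-grained scores into coarse groups, keeping the max per group."""
    coarse = {}
    for label, score in fine_scores.items():
        group = mapping.get(label)
        if group is None:
            continue  # label not covered by the illustrative mapping
        coarse[group] = max(coarse.get(group, 0.0), score)
    return coarse


# Hypothetical model scores for one sentence
fine_scores = {"annoyance": 0.62, "anger": 0.31, "amusement": 0.05, "grief": 0.02}
coarse_scores = aggregate_scores(fine_scores, fine_to_coarse)
print(coarse_scores)
```

Taking the maximum keeps a group active whenever any of its members is confidently predicted; summing or averaging are alternative choices with different trade-offs.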

It wouldn’t be a fair comparison if we didn’t account for the time required for translation in the specialized solution. Remember that the fine-tuned models only work with English. The EasyNMT library (https://github.com/UKPLab/EasyNMT) was used with its best models according to the author (https://github.com/UKPLab/EasyNMT#translation-quality), i.e. opus-mt, m2m_100_1.2B and m2m_100_418M (second best of this class).

from easynmt import EasyNMT

# Pick one of the three translation models benchmarked here
model = EasyNMT('opus-mt', device='cpu')
# model = EasyNMT('m2m_100_418M', device='cpu')
# model = EasyNMT('m2m_100_1.2B', device='cpu')

# Next notebook cell (the %%time magic must be its first line)
%%time
print(model.translate(emotion_dataset_dict[split_name]["text"][:100], target_lang='it'))

Translations were made on the same dataset sample as in the previous experiment (N=100), from English to Italian. Reasonably, the same estimates can be considered valid for other languages.

Time-wise, we now have all the information needed to draw some conclusions. I’ll leave the final comments until after presenting all the results.

Performance results

The multi-label output (28 dimensions, binarized with a threshold of 0.5) was evaluated on the full test split of the dataset (≈5k sentences). Here is the code:

import numpy as np
from sklearn.metrics import precision_recall_fscore_support

# num_items, num_labels, emotion2idx and y_targets_all are assumed to be defined earlier in the notebook
threshold = .5

# Binarize the predicted scores into a (num_items, num_labels) multi-hot matrix
y_pred_all = np.zeros((num_items, num_labels), dtype=int)
for i, pred in enumerate(model_outputs):
    for label, score in zip(pred["labels"], pred["scores"]):
        label_index = emotion2idx[label]
        y_pred_all[i, label_index] = int(score > threshold)

# Macro-averaged metrics over all labels
p, r, f1, _ = precision_recall_fscore_support(y_targets_all, y_pred_all, average='macro')
print(f'Precision: {p}')
print(f'Recall: {r}')
print(f'F1: {f1}\n')

# Per-label metrics and support
p, r, f1, s = precision_recall_fscore_support(y_targets_all, y_pred_all, average=None)
print(f'Precision: {p}')
print(f'Recall: {r}')
print(f'F1: {f1}')
print(f'Support: {s}')

and the results obtained:

NB: I didn’t calculate performance for deberta-large after seeing the processing time that this model required, an early exclusion that simplified the analysis.
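To make the macro-averaged metrics used in the evaluation concrete, here is a small worked example in plain Python. It is only a sketch on toy data: the `scores` and `y_true` matrices are hypothetical, and the helper functions are illustrative re-implementations rather than the scikit-learn code used for the actual results.

```python
def per_class_prf(y_true, y_pred, class_index):
    """Precision, recall and F1 for a single class of a multi-label problem."""
    column = [(t[class_index], p[class_index]) for t, p in zip(y_true, y_pred)]
    tp = sum(1 for t, p in column if t == 1 and p == 1)
    fp = sum(1 for t, p in column if t == 0 and p == 1)
    fn = sum(1 for t, p in column if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_prf(y_true, y_pred):
    """Unweighted mean of the per-class precision, recall and F1."""
    num_classes = len(y_true[0])
    per_class = [per_class_prf(y_true, y_pred, c) for c in range(num_classes)]
    return tuple(sum(m[k] for m in per_class) / num_classes for k in range(3))


threshold = 0.5
# Hypothetical scores for 4 sentences and 3 labels
scores = [[0.9, 0.2, 0.6], [0.1, 0.7, 0.4], [0.8, 0.6, 0.1], [0.3, 0.2, 0.9]]
y_true = [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]

# Binarize the scores with the threshold, as in the evaluation code
y_pred = [[int(s > threshold) for s in row] for row in scores]
precision, recall, f1 = macro_prf(y_true, y_pred)
print(f"Precision: {precision:.3f}, Recall: {recall:.3f}, F1: {f1:.3f}")
```

Because the macro average weights every class equally, rare emotions influence the final score as much as frequent ones.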

Conclusions

Best model: roberta-base-go_emotions

The zero-shot models are not available in a quantized version and have much higher inference times. For the case study I was working on, I had the hard constraint of working on CPU, which made them unsuitable. Moreover, the results, even in terms of raw performance, did not match my expected KPI. These data were already enough to pick the optimal model for my project. However, it is also worth mentioning that these models’ performance may vary depending on the prompt used.

In conclusion, given the results from the analysis of the processing time and the performance report, I would suggest using the following combination of models: m2m_100_418M + roberta-base-go_emotions-onnx.

The translations of the first model seem to work quite well, while the emotion model performs well (almost the same as its non-quantized version) and is 2x faster than its non-quantized version.

The estimate on CPU for 5k sentences with this configuration is ≈1h 30min.

This is close to the estimate for the zero-shot classification model (≈1h 15min), but the performance of the latter is worse in terms of precision (which in this scenario is more important than recall) and it does not reach the same level of detail.

Future Improvements

In my opinion, there are some improvements that can be made. First of all, the time needed to process bigger corpora might be too long (especially if we consider thousands of documents, which can result in hundreds of thousands of sentences). In this case, the time required on CPU can probably be reduced with parallelization.
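As a rough illustration of the parallelization idea, the sketch below splits the sentences into batches and processes them concurrently. `classify_batch` is a hypothetical placeholder for the real model call, and a thread pool is used only to keep the example portable: whether threads actually help depends on the inference runtime releasing the GIL, otherwise a process pool would be the alternative to evaluate.

```python
from concurrent.futures import ThreadPoolExecutor


def make_batches(items, batch_size):
    """Split a list into consecutive chunks of at most batch_size elements."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def classify_batch(batch):
    """Hypothetical placeholder for the real model call: one label per sentence."""
    return ["neutral" for _ in batch]


def classify_parallel(sentences, batch_size=32, max_workers=4):
    """Run classify_batch over all batches concurrently, preserving input order."""
    batches = make_batches(sentences, batch_size)
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # executor.map yields results in the same order as the input batches
        results = executor.map(classify_batch, batches)
        return [label for batch_result in results for label in batch_result]


sentences = [f"sentence {i}" for i in range(100)]
predictions = classify_parallel(sentences)
print(len(predictions))
```

The batch size and number of workers are arbitrary here and would need tuning against the available cores and memory.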

I was not completely satisfied with the performance of the models for emotion detection. I believe the model would require further training. An optimal solution would be having 60% of the classes with an F1-score above 75%. Moreover, the GoEmotions dataset only focuses on Reddit comments, so performance could drop further on other domains.
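The target just described can be checked mechanically from a per-class report. This is a minimal sketch under stated assumptions: the `per_class_f1` values are hypothetical and the `meets_f1_target` helper is illustrative, with the 75% and 60% thresholds taken from the goal above.

```python
def meets_f1_target(per_class_f1, f1_threshold=0.75, min_fraction=0.60):
    """Return the fraction of classes with F1 above the threshold and whether the target is met."""
    above = sum(1 for f1 in per_class_f1.values() if f1 > f1_threshold)
    fraction = above / len(per_class_f1)
    return fraction, fraction >= min_fraction


# Hypothetical per-class F1 scores (not results from the notebook)
per_class_f1 = {"joy": 0.81, "anger": 0.77, "fear": 0.64, "sadness": 0.79, "surprise": 0.52}
fraction, target_met = meets_f1_target(per_class_f1)
print(f"{fraction:.0%} of classes above threshold, target met: {target_met}")
```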

The full notebook is available on my GitHub profile:

GitHub: lopozz/go-emotion-multilingual-analysis (analysis of specialized and zero-shot models under a multilingual setting)

If you have any feedback, comments or would like more clarifications about this article, you’re welcome to reach out to me✌️
