Maximizing Machine Learning: How Calibration Can Enhance Performance

Author(s): Cornellius Yudha Wijaya

Originally published on Towards AI.

A rarely discussed method for improving our machine learning models.


Many machine learning classifiers output a probability for each class. For example, a churn classifier might assign a probability to both the ‘churn’ and ‘not churn’ classes, say 90% for ‘churn’ and 10% for ‘not churn’.

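Generally, the probability is converted into a class prediction, typically by applying a threshold such as 0.5. We then evaluate the model with the standard metrics taught in class, such as accuracy, precision, recall, and log loss. Accuracy, precision, and recall are computed from discrete outputs such as 0 or 1; log loss is the exception, since it uses the predicted probabilities directly.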
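To make the difference between thresholded metrics and probability-based ones concrete, here is a minimal sketch in plain Python. The six labels and probabilities are made-up illustration data, and the 0.5 threshold and the helper names (`to_labels`, `accuracy`, `precision`, `recall`, `log_loss`) are hypothetical, not taken from the original article.

```python
import math

# Hypothetical illustration data: true churn labels (1 = churned) and the
# model's predicted probability of churn for six customers.
y_true = [1, 0, 1, 1, 0, 0]
y_prob = [0.90, 0.40, 0.65, 0.30, 0.20, 0.55]


def to_labels(probs, threshold=0.5):
    """Turn probabilities into hard 0/1 predictions with a cutoff."""
    return [1 if p >= threshold else 0 for p in probs]


def accuracy(y, y_hat):
    return sum(a == b for a, b in zip(y, y_hat)) / len(y)


def precision(y, y_hat):
    tp = sum(1 for a, b in zip(y, y_hat) if a == 1 and b == 1)
    return tp / sum(y_hat) if sum(y_hat) else 0.0


def recall(y, y_hat):
    tp = sum(1 for a, b in zip(y, y_hat) if a == 1 and b == 1)
    return tp / sum(y) if sum(y) else 0.0


def log_loss(y, probs, eps=1e-15):
    """Binary cross-entropy: computed from the probabilities themselves."""
    total = 0.0
    for a, p in zip(y, probs):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += a * math.log(p) + (1 - a) * math.log(1 - p)
    return -total / len(y)


y_pred = to_labels(y_prob)
print(y_pred)  # [1, 0, 1, 0, 0, 1]
print(accuracy(y_true, y_pred), precision(y_true, y_pred), recall(y_true, y_pred))
print(round(log_loss(y_true, y_prob), 3))  # 0.545
```

Because accuracy, precision, and recall only see the thresholded labels, changing the first probability from 0.90 to 0.51 leaves all three unchanged, while the log loss goes up. None of these numbers directly tells us whether a predicted 65% really means a 65% chance, which is the question calibration addresses.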

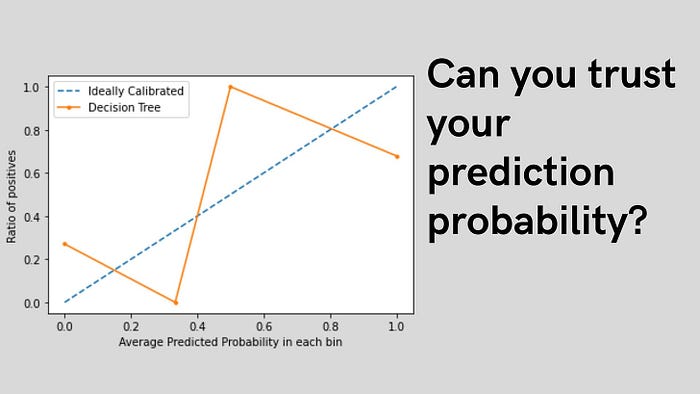

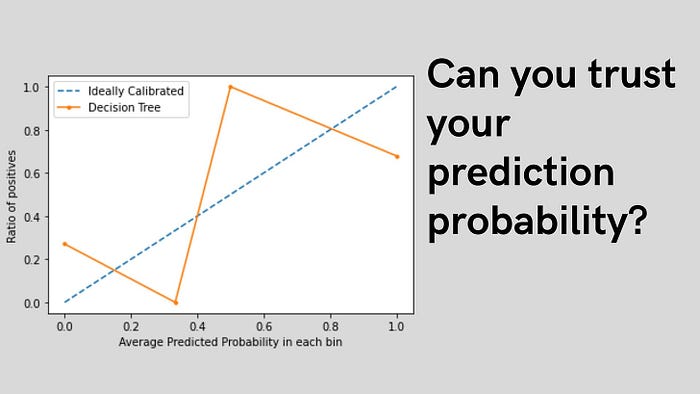

But how do we evaluate the predicted probabilities themselves? And how trustworthy are they? To answer these questions, we can use calibration techniques, which tell us how much we can trust the probabilities our model predicts.

Calibration in machine learning refers to adjusting a model’s predicted probabilities so that they match the actual observed outcomes. What does it mean to match the actual outcomes?
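One simple way to make this adjustment is histogram binning: split the [0, 1] range into bins and replace every prediction that falls in a bin with the fraction of positives actually observed in that bin on held-out data. The sketch below assumes that method purely for illustration (the excerpt does not say which technique the author uses); the function names, the five equal-width bins, and the midpoint fallback for empty bins are hypothetical choices.

```python
def fit_histogram_binning(probs, labels, n_bins=5):
    """Learn the observed positive rate for each equal-width bin of [0, 1]."""
    counts = [0] * n_bins
    positives = [0] * n_bins
    for p, y in zip(probs, labels):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        counts[b] += 1
        positives[b] += y
    # Empty bins fall back to the bin midpoint (an assumption of this sketch).
    return [
        positives[b] / counts[b] if counts[b] else (b + 0.5) / n_bins
        for b in range(n_bins)
    ]


def apply_histogram_binning(probs, bin_rates):
    """Replace each raw probability with its bin's observed positive rate."""
    n_bins = len(bin_rates)
    return [bin_rates[min(int(p * n_bins), n_bins - 1)] for p in probs]


# Hypothetical held-out calibration data: the model says 0.9 for five
# customers, but only three of them churned; it says 0.1 for five others,
# and one of them churned.
cal_probs = [0.9] * 5 + [0.1] * 5
cal_labels = [1, 1, 1, 0, 0] + [0, 0, 0, 0, 1]

rates = fit_histogram_binning(cal_probs, cal_labels)
print(apply_histogram_binning([0.95, 0.05], rates))  # [0.6, 0.2]
```

If you use scikit-learn, `CalibratedClassifierCV` provides `method="sigmoid"` (Platt scaling) and `method="isotonic"` as ready-made alternatives. Whatever the method, the calibration data should be kept separate from the data used to train the model.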

Let’s say we have a classification model that predicts churn with a 70% probability for each customer in a group; this means it ‘should’ be correct about 7 out of 10 times. The model is well calibrated if, when we take 10 such customers, we find that about 7 of them actually churned.
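This check can be sketched as a reliability table: group predictions into probability bins and compare the average predicted probability in each bin with the fraction of customers who actually churned. The sketch also computes a count-weighted average of those gaps, one common binary variant of the expected calibration error (ECE). The function names and the ten equal-width bins are assumptions for illustration, and the data mirrors the 70% churn example in the text.

```python
def reliability_table(probs, labels, n_bins=10):
    """Per non-empty bin: (mean predicted probability, observed rate, count)."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))
    table = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            rate = sum(y for _, y in members) / len(members)
            table.append((mean_p, rate, len(members)))
    return table


def expected_calibration_error(probs, labels, n_bins=10):
    """Count-weighted average gap between predicted and observed churn rate."""
    n = len(probs)
    return sum(
        cnt / n * abs(mean_p - rate)
        for mean_p, rate, cnt in reliability_table(probs, labels, n_bins)
    )


# Ten customers, each given a 70% churn probability; seven actually churned.
labels = [1] * 7 + [0] * 3
print(round(expected_calibration_error([0.7] * 10, labels), 6))  # 0.0

# Same outcomes, but the model claimed 90%: overconfident by 0.2.
print(round(expected_calibration_error([0.9] * 10, labels), 6))  # 0.2
```

scikit-learn's `sklearn.calibration.calibration_curve` returns the same per-bin observed and mean predicted probabilities if you prefer a library function.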

However, in many classification models, the probability output is not well calibrated. Often,… Read the full blog for free on Medium.




Note: Content contains the views of the contributing authors and not Towards AI.