Chain of Thought Prompting: That’s how we make the models think.

Last Updated on January 31, 2024 by Editorial Team

Author(s): Abhijith S Babu

Originally published on Towards AI.

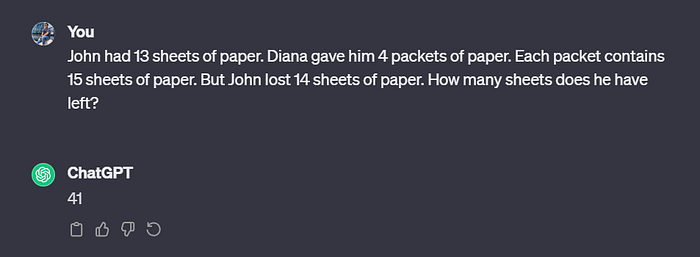

I was just playing around with ChatGPT when I tried the following prompt.

The model gave the wrong answer to a basic arithmetic problem. This wasn't a surprise (at least for me), as large language models are not designed for arithmetic problem-solving. They should not be relied on, without verification, in situations where we need accurate or factual answers. They are designed to converse with us like a human being.

Then, I tried a technique called few-shot prompting, in which we give the model examples that show it how to respond. Before asking my actual question, I added a similar math problem along with its correct answer.
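To make this concrete, here is a minimal Python sketch of how such a few-shot prompt might be assembled as plain text before it is sent to a model. It assumes a simple `Q:`/`A:` layout; the function name `build_few_shot_prompt` and the tennis-ball and muffin problems are hypothetical illustrations, not the prompts from my screenshots, and no particular LLM API is assumed.

```python
from typing import List, Tuple

# Hypothetical exemplars as (question, final answer) pairs.
EXEMPLARS: List[Tuple[str, str]] = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "The answer is 11.",
    ),
]


def build_few_shot_prompt(exemplars: List[Tuple[str, str]], question: str) -> str:
    """Plain few-shot prompt: each exemplar shows only a question and its final answer."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in exemplars]
    # The real question goes last, with an empty answer slot for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(
    EXEMPLARS, "A baker makes 4 trays of 6 muffins and sells 9. How many muffins are left?"
)
print(prompt)
```

Notice that the exemplar shows only the final answer; nothing in the prompt tells the model how to get there.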

Still a wrong answer!! Well, I am not planning to give up that easily. I am going to teach the model a lesson (on arithmetic).

Tadaa!!!! Now the model knows what has to be done. All I did was give the model examples that spelled out the reasoning, and that unlocked its ability to reason through the problem. This few-shot prompting technique, in which we show example prompts and outputs along with the intermediate thought process, is called chain-of-thought prompting.
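The only difference from a plain few-shot prompt is that each exemplar writes out its intermediate steps before the final answer. A minimal sketch, assuming the same hypothetical `Q:`/`A:` layout; `CoTExemplar` and `build_cot_prompt` are illustrative names, and the exemplar is made up for this purpose:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CoTExemplar:
    question: str
    reasoning: str  # intermediate steps, written out in plain language
    answer: str


def build_cot_prompt(exemplars: List[CoTExemplar], question: str) -> str:
    """Few-shot chain-of-thought prompt: the reasoning comes BEFORE the final answer."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    # Leave the answer open so the model continues in the same step-by-step style.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical exemplar, for illustration only.
example = CoTExemplar(
    question="Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?",
    reasoning="Roger started with 5 balls. 2 cans of 3 tennis balls each is 2 * 3 = 6 balls. 5 + 6 = 11.",
    answer="11",
)

print(build_cot_prompt([example], "A baker makes 4 trays of 6 muffins and sells 9. How many muffins are left?"))
```

Because the exemplar's answer is preceded by its steps, the model tends to imitate that pattern and write out its own steps before answering.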

The main idea behind chain-of-thought prompting is to give large language models a thought process similar to the way humans think. When we encounter a problem, we break it down into a sequence of smaller problems and solve each one until we reach the final answer. Large language models can do the same if we provide examples using the few-shot prompting technique.

Chain-of-thought prompting has the following advantages:

- When a problem is broken down into multiple steps, the model can spend more computation (in the form of more generated text) on the steps that need it. Harder steps therefore get more attention.

- When a model outputs its thought process (as in the previous example), users get a glimpse of how the black-box model arrives at its answer. This is helpful when debugging large language models.

In principle, this method can be applied to any large language model and used for any task that a human can solve using language.

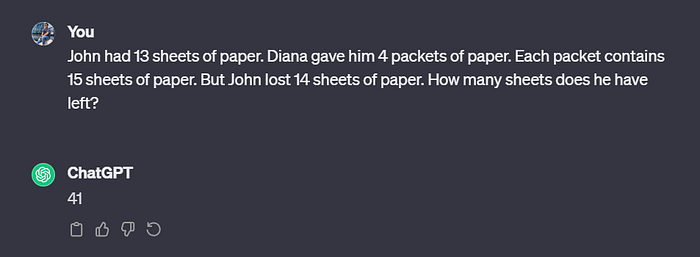

The above experiment shows how an example helps the model solve a new problem. But did the chain of thought itself help the model, or did the example simply activate a problem-solving skill the model already had? To test this, I ran a different experiment in which the example gave the answer first and explained the steps afterward.

This time, the model gave a wrong answer of 23, whereas the correct answer is 80.

Next, I asked for the steps after the answer, and this time it solved the problem without mistakes.

So, when we make the model explain its steps, it works through them correctly and reaches the correct answer.
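One way to see why the order matters: a language model generates text left to right, so when the answer comes first, none of the reasoning written afterward can influence it. The hypothetical helper `format_exemplar` below formats the same worked example in both orders, as a sketch of the two variants compared above; the muffin problem is invented for illustration.

```python
def format_exemplar(question: str, steps: str, answer: str, answer_first: bool) -> str:
    """Format one worked example in one of two orders.

    answer_first=True: the final answer is committed before any reasoning,
    so the explanation cannot influence it ("explain after answering").
    answer_first=False: the usual chain-of-thought order, where the steps lead to the answer.
    """
    if answer_first:
        body = f"The answer is {answer}. Explanation: {steps}"
    else:
        body = f"{steps} The answer is {answer}."
    return f"Q: {question}\nA: {body}"


# Hypothetical problem used only to show the two layouts side by side.
question = "A baker makes 4 trays of 6 muffins and sells 9. How many muffins are left?"
steps = "4 * 6 = 24 muffins. 24 - 9 = 15."
print(format_exemplar(question, steps, "15", answer_first=True))
print()
print(format_exemplar(question, steps, "15", answer_first=False))
```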

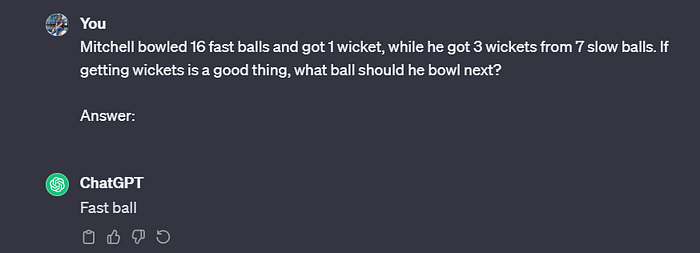

Chain-of-thought prompting can also be used for commonsense reasoning and logical operations. See the example below.

When we ask the model for its reasoning, it works through a chain of thought. Even without an example, the model then gives sound reasoning and a correct answer, although it had initially failed to answer correctly.

Next, let's look at an example of symbolic reasoning. Symbolic reasoning means identifying patterns, which is easy for humans but can be quite challenging for a large language model.

I gave two kinds of examples here to make the model think more. The model could latch onto any pattern, and a single kind of example might keep it from thinking beyond that one pattern.
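The idea of mixing example types can be sketched in a few lines of Python. The two symbolic tasks below (last-letter concatenation and word-order reversal) are hypothetical stand-ins, not the examples from my screenshots; generating the exemplars programmatically simply guarantees that their worked steps and answers are correct.

```python
from typing import List


def last_letter_exemplar(words: List[str]) -> str:
    """Hypothetical pattern 1: concatenate the last letter of each word, step by step."""
    phrase = " ".join(words)
    letters = [w[-1] for w in words]
    steps = " ".join(f'The last letter of "{w}" is "{c}".' for w, c in zip(words, letters))
    answer = "".join(letters)
    return (
        f'Q: Concatenate the last letters of the words in "{phrase}".\n'
        f'A: {steps} Joining them gives "{answer}". The answer is "{answer}".'
    )


def reverse_exemplar(words: List[str]) -> str:
    """Hypothetical pattern 2: reverse the order of the words, step by step."""
    phrase = " ".join(words)
    reversed_words = list(reversed(words))
    steps = " ".join(
        f'Word {i + 1} from the end is "{w}".' for i, w in enumerate(reversed_words)
    )
    answer = " ".join(reversed_words)
    return (
        f'Q: Reverse the order of the words in "{phrase}".\n'
        f'A: {steps} The answer is "{answer}".'
    )


def build_mixed_prompt(question: str) -> str:
    """Mix two kinds of exemplars so the model is not anchored to a single pattern."""
    exemplars = [
        last_letter_exemplar(["apple", "banana"]),
        reverse_exemplar(["red", "green", "blue"]),
    ]
    return "\n\n".join(exemplars + [f"Q: {question}\nA:"])


print(build_mixed_prompt('Concatenate the last letters of the words in "deep learning".'))
```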

Importance of prompt engineering

Prompt engineering plays a very important role in getting value out of language models. For an LLM user, knowing how to get the desired output is essential. While large language models can perform a diverse range of complex tasks, the responsibility lies with the user to guide the model toward the task. AI, especially generative AI, is not meant to replace humans; it is meant to assist them. In a world where LLMs will be as common as crows, it is your job to learn how to prompt them so that they make your life easier.

In my examples, I used few-shot prompting to initiate the chain of thought. However, the technique used to initiate the chain of thought is up to the user. For example, one can initiate it like this:

Here, the user initiated the chain of thought without few-shot prompting, and in the end the LLM arrives at the correct answer, 38.
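One widely used way to trigger a chain of thought without any examples is to append a phrase such as "Let's think step by step." to the question (the zero-shot chain-of-thought approach described by Kojima et al.); this is not necessarily the wording used in the example above. The minimal sketch below assumes that phrase plus a naive "take the last number" rule for pulling out the final answer. Both are assumptions, the function names are hypothetical, and the model response is invented for illustration.

```python
import re
from typing import Optional

# Assumed trigger phrase (zero-shot chain-of-thought); other wordings can work too.
TRIGGER = "Let's think step by step."


def build_zero_shot_cot_prompt(question: str) -> str:
    """No exemplars: the trigger phrase alone nudges the model to write out its steps."""
    return f"Q: {question}\nA: {TRIGGER}"


def extract_final_number(response: str) -> Optional[str]:
    """Naive answer extraction: return the last number that appears in the response."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", response)
    return numbers[-1] if numbers else None


print(build_zero_shot_cot_prompt("A baker makes 4 trays of 6 muffins and sells 9. How many muffins are left?"))

# Invented model output, used only to exercise the extractor.
response = "The baker makes 4 * 6 = 24 muffins. After selling 9, 24 - 9 = 15 muffins are left."
print(extract_final_number(response))  # 15
```

The last-number rule is fragile (a trailing unit or date would break it), which is one more reason to keep a human in the loop.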

Behind the scenes

In this article, I talked about initiating a chain of thought in large language models through effective prompting. Let's take a peek at what is happening behind the scenes. The size of the model, meaning its number of parameters, plays an important role in chain-of-thought prompting. A model with fewer parameters is more likely to make mistakes in its chain of thought. Smaller models are clearly weaker at arithmetic, and they often fail to grasp the semantics of the prompt and to initiate a correct chain of thought. Common mistakes made by small models include misinterpreting the meaning of the problem, skipping a step, and miscalculating. Thus, it is important to have human oversight when large language models perform these kinds of tasks.
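Human oversight can be partly supported by simple tooling. The sketch below is a hypothetical helper, not part of any library, that re-computes simple arithmetic steps written as `a op b = c` in a model's chain of thought and flags the ones that don't hold. It assumes steps appear in exactly that form; chained expressions such as `3 + 4 + 5 = 12` will be misread and steps written in words will be missed, so it supplements a human reviewer rather than replacing one.

```python
import math
import re
from typing import List, Tuple

# Matches simple binary steps such as "4 * 6 = 24" or "24 - 9 = 15".
NUM = r"-?\d+(?:\.\d+)?"
STEP = re.compile(rf"({NUM})\s*([+\-*/x])\s*({NUM})\s*=\s*({NUM})")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "x": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def check_arithmetic_steps(chain_of_thought: str) -> List[Tuple[str, bool]]:
    """Recompute every simple 'a op b = c' step and flag the ones that do not hold."""
    results = []
    for match in STEP.finditer(chain_of_thought):
        a, op, b, claimed = match.groups()
        if op == "/" and float(b) == 0:
            ok = False  # division by zero can never be a valid step
        else:
            actual = OPS[op](float(a), float(b))
            ok = math.isclose(actual, float(claimed), rel_tol=1e-9, abs_tol=1e-9)
        results.append((match.group(0), ok))
    return results


# Invented chain of thought with a deliberate slip in the second step.
trace = "The baker makes 4 * 6 = 24 muffins. After selling 9, 24 - 9 = 16 muffins are left."
for step, ok in check_arithmetic_steps(trace):
    print(f"{step!r}: {'ok' if ok else 'CHECK THIS STEP'}")
```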

Chain-of-thought prompting is very effective, but other methods, such as ReAct, also exist. Goodbye for now, until I delve deeper into those concepts. If you enjoyed this read, follow me for more exciting stories.

Note: Content contains the views of the contributing authors and not Towards AI.