This AI newsletter is all you need #84

Last Updated on January 30, 2024 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

This week, we were interested to see several new AI models and studies focused on medical applications and diagnostics. GPT-4 demonstrated impressive capabilities without special prompting, outperforming Stanford School of Medicine students on free-response clinical case questions that simulate the United States Medical Licensing Examination (USMLE). In a separate study, GPT-4 offered treatment suggestions that aligned with those of experts in 80 cases and predicted mortality better than specialist AI models. GPT-4 has been shown to work well for diagnostics, but it has rarely been evaluated as a clinical decision-support tool.

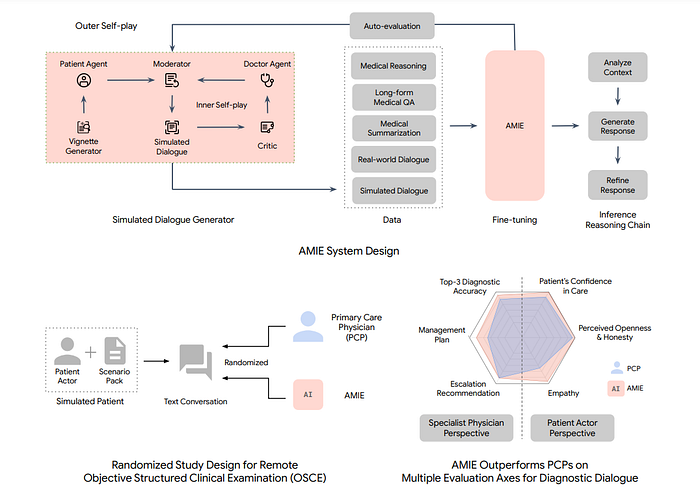

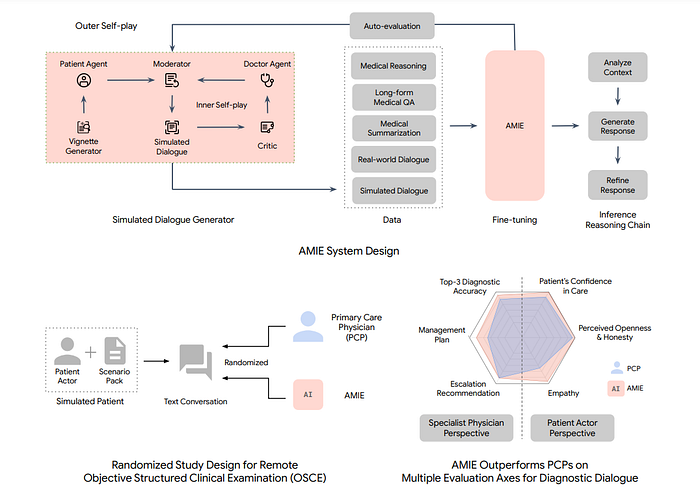

Another noteworthy development in medical diagnosis was AMIE (Articulate Medical Intelligence Explorer), a new AI model from the Google DeepMind team. The model can collect information from patients and provide clear explanations of medical conditions in a wellness visit consultation. It was trained on real-world medical texts, including transcripts from nearly 100,000 actual physician-patient dialogues, 65 clinician-written summaries of intensive care unit medical notes, and thousands of medical reasoning questions sourced from the United States Medical Licensing Examination. “There may be scenarios when people might benefit from interacting with systems like AMIE as part [of] or in addition to their clinical journeys,” says Vivek Natarajan, an AI researcher at Google. “These include understanding symptoms and conditions better, including simplifying explanations in local vernaculars… and acting as a valuable second opinion.”

Why should you care?

GPT-4 has displayed surprising capabilities across domains and specialized tasks without fine-tuning, and it now often outperforms custom-trained or fine-tuned models in their own domains. This raises the question of whether we should simply use well-prompted frontier generalist AIs like GPT-4 rather than work on fine-tuned specialized LLMs like Med-PaLM 2. DeepMind’s AMIE model is an example of the advantages of more specialized models. It used in-context critics to refine its behavior during simulated conversations with an AI patient simulator. In addition, an ‘outer’ self-play loop fed the set of refined simulated dialogues into subsequent fine-tuning iterations.
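To make the inner/outer structure described above more concrete, here is a minimal Python sketch of such a self-play loop. It is not AMIE’s implementation: the function names (`self_play_training`, `toy_simulate`, `toy_critique`, `toy_refine`, `toy_fine_tune`) and the dictionary standing in for a model are hypothetical placeholders, and a real system would call LLMs at each step.

```python
from typing import Callable, Dict, List

Dialogue = List[str]
Model = Dict[str, int]  # hypothetical stand-in for model weights/state


def self_play_training(
    model: Model,
    scenarios: List[str],
    simulate: Callable[[Model, str], Dialogue],
    critique: Callable[[Dialogue], str],
    refine: Callable[[Model, Dialogue, str], Dialogue],
    fine_tune: Callable[[Model, List[Dialogue]], Model],
    outer_iterations: int = 2,
) -> Model:
    """Hypothetical inner/outer self-play loop (structure only, not AMIE's code)."""
    for _ in range(outer_iterations):
        refined_dialogues: List[Dialogue] = []
        for scenario in scenarios:
            # Inner loop: simulate a doctor-patient conversation, get in-context
            # feedback from a critic, and rewrite the dialogue using that feedback.
            draft = simulate(model, scenario)
            feedback = critique(draft)
            refined_dialogues.append(refine(model, draft, feedback))
        # Outer loop: fold the refined dialogues into the next fine-tuning round.
        model = fine_tune(model, refined_dialogues)
    return model


# Toy stand-ins so the loop runs end to end; real systems would call LLMs here.
def toy_simulate(model: Model, scenario: str) -> Dialogue:
    return [f"patient: {scenario}", f"doctor(v{model['version']}): tell me more"]


def toy_critique(dialogue: Dialogue) -> str:
    return "ask how long the symptoms have lasted"


def toy_refine(model: Model, dialogue: Dialogue, feedback: str) -> Dialogue:
    return dialogue + [f"doctor(v{model['version']}): {feedback}?"]


def toy_fine_tune(model: Model, dialogues: List[Dialogue]) -> Model:
    # Pretend training: bump the version and count the dialogues seen.
    return {"version": model["version"] + 1, "seen": model["seen"] + len(dialogues)}


final = self_play_training({"version": 0, "seen": 0}, ["headache", "cough"],
                           toy_simulate, toy_critique, toy_refine, toy_fine_tune)
print(final)  # {'version': 2, 'seen': 4}
```

Each outer iteration trains on dialogues that were already improved by the critic, which is the property the self-play design relies on.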

We think there are still many advantages to adapting models to specific niches with techniques such as Retrieval Augmented Generation (RAG), fine-tuning, or other machine learning approaches. However, it is often difficult for a tailored older-generation foundation model (e.g., GPT-3.5) to outperform a strategically prompted cutting-edge model (e.g., GPT-4). Training a large language model (LLM) from scratch, rather than building on an existing leading LLM (open or closed source), will only make sense for the largest AI labs.

– Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. New Embedding Models and API Updates

OpenAI announced a new generation of embedding models (text-embedding-3-small and text-embedding-3-large), which convert text into numerical vectors. The company also introduced new versions of its GPT-4 Turbo and moderation models, new API usage management tools, and lower pricing for its GPT-3.5 Turbo model.
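Embedding vectors are commonly compared with cosine similarity, so that texts with related meanings end up close together. The sketch below assumes you already have embeddings; the tiny 3-dimensional vectors and example texts are made up for illustration (real models return much longer vectors), and it does not call OpenAI’s API.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: List[float], corpus: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank corpus entries by cosine similarity to the query vector."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical toy "embeddings"; a real embedding model would produce these.
corpus = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Steps to change account login": [0.8, 0.2, 0.1],
    "Best pizza toppings": [0.0, 0.1, 0.95],
}
query = [0.85, 0.15, 0.05]  # pretend embedding of "forgot my password"

for text, score in top_k(query, corpus):
    print(f"{score:.3f}  {text}")
```

A vector database performs the same kind of nearest-neighbor lookup, but with indexes that scale to millions of vectors.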

2. Voice Cloning Startup ElevenLabs Lands $80M and Achieves Unicorn Status

ElevenLabs has achieved unicorn status after securing an $80 million Series B round led by Andreessen Horowitz, bringing its total funding to $101 million. Founded by Piotr Dabkowski and CEO Mati Staniszewski, the company specializes in realistic voice synthesis through a web app aimed at audiobook, gaming, and screen dubbing applications in the growing audio media market.

3. Hugging Face and Google Partner for Open AI Collaboration

Google and Hugging Face have announced a strategic partnership to advance open AI and machine learning development. This collaboration will integrate Hugging Face’s platform with Google Cloud’s infrastructure, including Vertex AI, to make generative AI more accessible and impactful for developers.

4. FTC Investigating Microsoft, Amazon, and Google Investments Into OpenAI and Anthropic

The Federal Trade Commission (FTC) opened an inquiry into investments that Big Tech companies providing cloud services have made in smaller AI companies. The FTC sent letters to Alphabet, Amazon, Anthropic, Microsoft, and OpenAI, requiring the companies to explain how these investments affect the competitive landscape of generative AI.

5. Elon Musk’s Startup Is Reportedly Building Up a War Chest To Take On OpenAI

Elon Musk’s AI startup xAI is reportedly looking to lock down billions more in funding as it continues to take aim at OpenAI. The company wants to raise as much as $6 billion at a proposed valuation of $20 billion, the Financial Times reported. Musk, however, denied on X (formerly Twitter) that xAI had plans for further fundraising.

Five 5-minute reads/videos to keep you learning

1. Code LoRA From Scratch With PyTorch

Low-Rank Adaptation (LoRA) is an efficient fine-tuning approach for LLMs: instead of updating the complete set of neural network parameters, it freezes the pretrained weights and trains a small pair of low-rank matrices whose product is added to selected weight matrices. This post is a guide to implementing it from scratch with PyTorch.
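As a rough illustration of the idea, the sketch below wraps a frozen weight matrix `W` with a trainable low-rank update scaled by `alpha / r`, following the common LoRA convention of initializing `A` randomly and `B` at zero. It uses plain Python lists instead of PyTorch to stay dependency-free, and the class name `LoRALinear`, the toy weights, and the chosen rank and alpha are assumptions for illustration; only the forward pass and parameter count are shown, not training.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pretrained weights: never updated
        # A (r x d_in) starts small and random; B (d_out x r) starts at zero,
        # so the layer initially behaves exactly like the pretrained one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))  # passes through an r-dim bottleneck
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        # Only A and B would be trained; W stays frozen.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # toy 2x3 "pretrained" layer
layer = LoRALinear(W, rank=1, alpha=2.0)
x = [1.0, 2.0, 3.0]
print(layer.forward(x))  # [7.0, -1.0], identical to W @ x because B is zero
print(layer.trainable_params(), "trainable vs", len(W) * len(W[0]), "frozen")
```

The savings only show at realistic sizes: for a 4096 × 4096 weight matrix with rank 8, LoRA trains 8 × (4096 + 4096) = 65,536 parameters instead of 16,777,216, about 0.4%.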

2. makeMoE: Implement a Sparse Mixture of Experts Language Model From Scratch

This blog and the GitHub repository offer a detailed tutorial on creating character-level language models using a Sparse Mixture of Experts (MoE) architecture. This approach focuses on leveraging sparse feed-forward networks within transformer models, aiming to improve training speed and inference time while addressing challenges in training stability and deployment efficiency.
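The core of a sparse MoE layer is a router that picks a few experts per token and mixes their outputs. This minimal sketch assumes the router logits are already computed (in a real model they come from a learned linear layer) and uses plain top-k selection with a softmax over the chosen experts; makeMoE’s own router may differ, for example by adding noise to the logits. The toy experts and the names `sparse_moe` and `make_expert` are hypothetical.

```python
import math
from typing import Callable, List

Vector = List[float]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_moe(x: Vector, router_logits: List[float],
               experts: List[Callable[[Vector], Vector]], k: int = 2) -> Vector:
    """Send x to the top-k experts and mix their outputs with softmax gate weights."""
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in top])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        expert_out = experts[i](x)  # only k experts run: this is what makes it sparse
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out


calls: List[str] = []  # records which experts actually ran


def make_expert(scale: float, name: str) -> Callable[[Vector], Vector]:
    """Toy expert: scales its input (real experts are small feed-forward networks)."""
    def expert(x: Vector) -> Vector:
        calls.append(name)
        return [scale * v for v in x]
    return expert


experts = [make_expert(1.0, "e0"), make_expert(10.0, "e1"), make_expert(-1.0, "e2")]
y = sparse_moe([1.0, 2.0], router_logits=[2.0, 0.0, 1.0], experts=experts, k=2)
print(calls)  # ['e0', 'e2']: expert e1 was never evaluated
```

Because unselected experts are skipped entirely, compute per token grows with `k`, not with the total number of experts.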

3. Monopoly Power Is the Elephant in the Room in the AI Debate

Should we be worried about a handful of incumbents controlling AI? Plenty of evidence shows that market concentration undermines innovation, reduces investment, and harms consumers and workers. In this essay, Max von Thun, Director of Europe and Transatlantic Partnerships at the Open Markets Institute, presents his views on monopoly in AI, its impact, and areas of focus to avoid it.

4. Why LLMs Are Vulnerable to the ‘Butterfly Effect’

According to recent research, the way a prompt is constructed can change a model’s decision and accuracy. This article presents the four prompt variations applied in the study and their impact on the output. You can find the research paper in the Top Papers of The Week section.

5. How Data Engineers Should Prepare for an AI World

The AI revolution will require data engineers to acquire new skills to help their organizations get the most from AI. This post shares four areas where AI will transform data analytics in the coming year and the skills data engineers must acquire to meet these needs.

Repositories & Tools

1. TaskWeaver is a code-first agent framework for seamlessly planning and executing data analytics tasks.

2. InstantID is a new diffusion model-based solution that addresses the challenge of character consistency in generative AI.

3. The llama-recipes repository by Facebook Research provides a comprehensive guide for prompt engineering with Llama 2.

4. Zed is a high-performance, multiplayer code editor from the creators of Atom and Tree-sitter.

5. RoMa is a PyTorch library for handling tricky 3D rotations. It is reasonably efficient and can be used in any project involving 3D data.

Top Papers of The Week

This work investigates whether variations in how a prompt is constructed change the LLM’s final decision. The authors test a series of prompt variations on various text classification tasks and find that even the most minor changes can cause the LLM to change its answer.
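A simple way to quantify this kind of sensitivity is to classify each input with and without a small perturbation and count how often the label flips. The perturbations below (extra spaces, a greeting, a closing thank-you) are illustrative and not necessarily the paper’s exact set, and `fragile_classifier` is a deliberately brittle, hypothetical stand-in for an LLM call.

```python
from collections import Counter
from typing import Callable, Dict, List

# Illustrative minor perturbations; not necessarily the paper's exact set.
VARIANTS: Dict[str, Callable[[str], str]] = {
    "original": lambda p: p,
    "leading_space": lambda p: " " + p,
    "trailing_space": lambda p: p + " ",
    "greeting": lambda p: "Hello! " + p,
    "thanks": lambda p: p + " Thank you.",
}


def flip_rates(prompts: List[str], classify: Callable[[str], str]) -> Dict[str, float]:
    """Fraction of prompts whose predicted label changes under each variant."""
    flips: Counter = Counter()
    for prompt in prompts:
        reference = classify(prompt)
        for name, perturb in VARIANTS.items():
            if classify(perturb(prompt)) != reference:
                flips[name] += 1
    return {name: flips[name] / len(prompts) for name in VARIANTS}


def fragile_classifier(prompt: str) -> str:
    # Deliberately brittle stand-in for an LLM: it only recognizes prompts
    # that start exactly with "Review:".
    return "positive" if prompt.startswith("Review:") and "great" in prompt else "negative"


rates = flip_rates(["Review: great movie", "Review: boring movie"], fragile_classifier)
print(rates)
```

With a real model, `classify` would wrap an API call, and the same harness reports which kinds of edits move its answers.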

2. Spotting LLMs With Binoculars: Zero-Shot Detection of Machine-Generated Text

The Binoculars method offers a novel zero-shot approach to identifying machine-generated text, detecting over 90% of ChatGPT-generated samples at a false positive rate of just 0.01%. It relies on a score that contrasts the predictions of two language models, outperforms existing detectors, requires no example databases or fine-tuning, and proves effective across various document types.
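The sketch below shows the general shape of such a two-model score, assuming you already have both models’ next-token probability distributions at every position: one model’s log-perplexity on the observed tokens divided by a cross-perplexity term that measures how surprising one model’s predictions are to the other. The toy numbers are made up, no real models are loaded, and the exact assignment of roles between the two models should be checked against the paper and its reference code.

```python
import math
from typing import Sequence

Dist = Sequence[float]  # a next-token probability distribution (all entries > 0)


def log_perplexity(probs_a: Sequence[Dist], tokens: Sequence[int]) -> float:
    """Mean negative log-probability that model A assigns to the observed tokens."""
    return -sum(math.log(p[t]) for p, t in zip(probs_a, tokens)) / len(tokens)


def cross_perplexity(probs_a: Sequence[Dist], probs_b: Sequence[Dist]) -> float:
    """Mean cross-entropy between model B's predictions and model A's log-probs."""
    total = 0.0
    for p_a, p_b in zip(probs_a, probs_b):
        total += -sum(pb * math.log(pa) for pa, pb in zip(p_a, p_b))
    return total / len(probs_a)


def binoculars_score(probs_a: Sequence[Dist], probs_b: Sequence[Dist],
                     tokens: Sequence[int]) -> float:
    """Ratio of perplexity to cross-perplexity; lower suggests machine-generated text."""
    return log_perplexity(probs_a, tokens) / cross_perplexity(probs_a, probs_b)


# Toy 3-token vocabulary, two positions, observed tokens [0, 1] (made-up numbers).
probs_a = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
probs_b = [[0.6, 0.3, 0.1], [0.2, 0.6, 0.2]]
print(round(binoculars_score(probs_a, probs_b, [0, 1]), 3))
```

Lower scores point toward machine-generated text, but the decision threshold has to be calibrated on real data.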

3. CheXagent: Towards a Foundation Model for Chest X-Ray Interpretation

To address the challenges of employing AI to interpret chest X-rays (CXRs), this work presents three contributions: CheXinstruct, a large instruction-tuning dataset derived from 28 public datasets; CheXagent, an instruction-tuned model designed to read and summarize CXRs; and CheXbench, a comprehensive benchmark for evaluating models on eight clinically important CXR interpretation tasks.

4. Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data

Depth Anything is an innovative monocular depth estimation model trained on a dataset comprising 1.5 million labeled images and more than 62 million unlabeled images. This approach has significantly enhanced the model’s generalization capabilities without relying on new technical components.

5. AgentBoard: An Analytical Evaluation Board of Multi-turn LLM Agents

AgentBoard is a benchmark for evaluating multi-turn LLM agents. It offers a progress rate metric that captures incremental progress, along with an evaluation toolkit that supports multi-faceted analysis of agents through interactive visualization.
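One way to picture a progress rate, assuming each task is annotated with subgoals and progress at a step is the fraction of subgoals satisfied so far, is the running maximum below. AgentBoard’s exact definition and subgoal-matching rules may differ, and the subgoal names in the example are hypothetical.

```python
from typing import List, Set


def progress_rate(trajectory: List[Set[str]], subgoals: Set[str]) -> List[float]:
    """Best fraction of subgoals satisfied at or before each step (a running maximum)."""
    best, rates = 0.0, []
    for satisfied in trajectory:
        best = max(best, len(satisfied & subgoals) / len(subgoals))
        rates.append(best)
    return rates


subgoals = {"find key", "open door", "exit room"}  # hypothetical task annotation
trajectory = [
    set(),
    {"find key"},
    {"find key", "open door"},
    {"find key"},  # the agent loses progress, but the running maximum keeps 2/3
    {"find key", "open door", "exit room"},
]
rates_over_time = progress_rate(trajectory, subgoals)
print([round(r, 2) for r in rates_over_time])  # [0.0, 0.33, 0.67, 0.67, 1.0]
```

Unlike a binary success rate, this trajectory earns partial credit (1/3, then 2/3) before the task is finally completed.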

Quick Links

1. You.com, which says it pioneered conversational, multimodal search before tech giants like Google, Microsoft, and even OpenAI, released “AI Modes” to tackle complex, multi-step problems.

2. The U.S. National Science Foundation (NSF) and other agencies are partnering with AI developers to fulfill part of President Joe Biden’s executive order on AI. The government is working with 15 private sector partners, including AWS, Anthropic, EleutherAI, Google, Hugging Face, IBM, Intel, Meta, Microsoft, Nvidia, OpenAI, and Palantir.

3. Pinecone introduced Pinecone serverless, a reinvented vector database that, according to the company, lets you build fast and accurate GenAI applications at up to 50x lower cost. It is available in public preview.

4. Google Bard just moved into second place on the LMSYS Chatbot Arena Leaderboard (hosted on Hugging Face), surpassing GPT-4 and trailing only GPT-4 Turbo in the crowdsourced LLM rankings.

Who’s Hiring in AI

Data Science Engineer (P3) @Twilio (Remote)

Applications Architect, AI @NVIDIA (US/Remote)

Software Engineer, AWS Identity @Amazon (Seattle, WA, USA/Freelancer)

AI Data Operator @Metaphysic (Remote)

Working Student ML Infrastructure @Celonis (Germany)

Artificial Intelligence And Machine Learning Trainer @Asthra E-Learning (Part-time/Remote)

Senior Data Scientist I, NLP @Evisort (Remote)

Software Engineer @The RealReal (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing for your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Join over 80,000 subscribers, including thousands of data leaders, on the AI newsletter to keep up to date with the latest developments in AI, from research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Note: Content contains the views of the contributing authors and not Towards AI.