Why Hype Matters: Thinking Practically about AI

Last Updated on December 11, 2023 by Editorial Team

Author(s): Andrew Blance

Originally published on Towards AI.

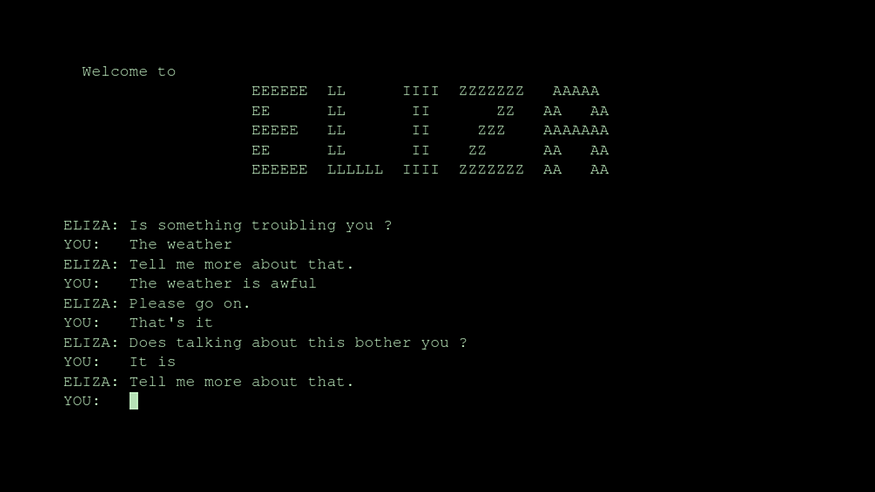

In the 1960s, Joseph Weizenbaum was interested in how humans might communicate with computers. The result was ELIZA, a very early example of a chatbot. ELIZA worked very differently from a modern chatbot like ChatGPT, which is fed huge amounts of text and "learns" the patterns in it. ELIZA was programmed with rules: it tried to match what you said against a set of patterns it had been programmed to recognize, and gave a response based on whichever pattern matched. It must have been a huge undertaking: handwriting all that code to account for the vast range of things a person might say to it, all in an archaic language that is now over half a century old.
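
To make the rule-based idea concrete, here is a minimal, hypothetical sketch in Python of ELIZA-style pattern matching. It is not Weizenbaum's code: the real program used a far larger script and a very different language. The three rules, the pronoun-swapping table and the fallback reply are all invented for illustration. Each rule pairs a regular expression with a response template, and the first rule that matches wins.

```python
import re

# Hypothetical, heavily simplified rules in the spirit of ELIZA's therapist
# persona. Each rule pairs a regex with a response template; {0} is filled
# with the (pronoun-swapped) text the pattern captured.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Content-free reply used when no rule matches.
FALLBACK = "Please, go on."


def reflect(fragment: str) -> str:
    """Swap pronouns word by word, e.g. 'my job' -> 'your job'."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(user_input: str) -> str:
    """Return the response for the first rule whose pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I am worried about my job."))  # How long have you been worried about your job?
    print(respond("I need a holiday"))            # Why do you need a holiday?
    print(respond("The weather is nice"))         # Please, go on.
```

Notice that nothing here understands anything: "worried about my job" is simply captured, pronoun-swapped and pasted back, which is exactly the kind of platitude that still made people feel heard.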

It seemed to work, though: people who interacted with ELIZA began to think it understood them. Like, actually understood them. Even when Weizenbaum insisted otherwise, people thought it was more than just some code. ELIZA could take on the personality of a therapist, offering meaningless platitudes like the ones in the image above. Even so, some academics thought it would start replacing doctors and psychiatrists.

Sounds kinda familiar, right? Even though ELIZA was very basic (in a sense) compared to GPT-4, people still rushed to believe it understood them and, from there, quickly decided it would also replace them.

What is hype?

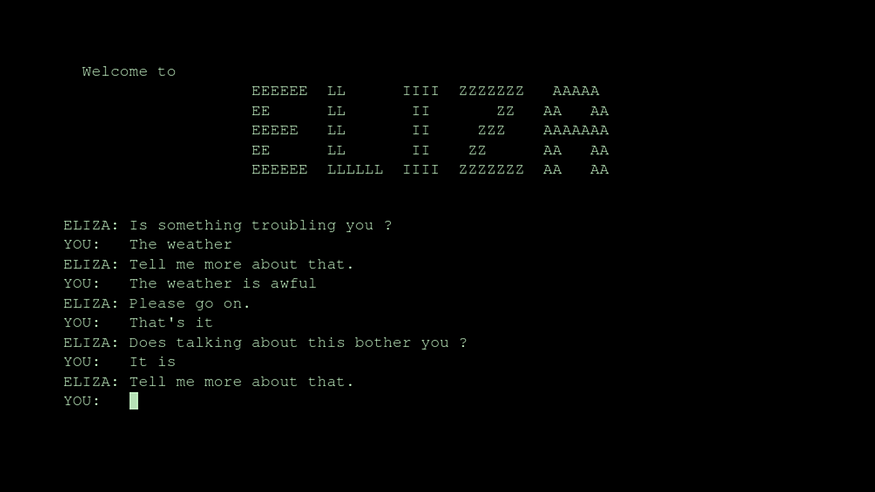

There is a familiar pattern to a new product getting hyped: it all follows a curve, which Gartner calls the Hype Cycle. At the start, people get more and more excited (and unreasonable) about the thing until it reaches the peak of the curve. From there, reality sets in, and people's excitement fades as they are confronted with the facts. Public opinion eventually bottoms out in the Trough of Disillusionment. After everything settles down and we find out what the technology is actually useful for, we reach a happy middle ground at the Plateau of Productivity.

My job involves working with emerging tech like robot dogs and VR headsets, so I'm constantly presented with things that could be extremely overhyped. The Boston Dynamics robot dogs are so cool that it's hard not to constantly proclaim they are the future (they are not). Let's take a look at a few pieces of technology that were definitely not overhyped: Google Glass, Tesla's self-driving cars and the crypto exchange FTX.

Well, maybe they were a little overhyped. Hopefully they have reached a productive plateau, at least! Let's check in on how they are doing in 2023.

If you are familiar with these examples, it's probably no surprise how it all went down. Obviously, they were all disasters. Google Glass died twice. Tesla is being sued. The founder of FTX currently lives in a federal prison. The thing is, the curve is not always right. Sometimes you can't make it through the curve; you go down into the Trough of Disillusionment and keep going down forever.

Why should we care, though? Does it matter that Google Glass got really hyped and turned out to be terrible? Well, a bit. If you always hype everything and say every new technology is the future, who can believe you? Can people take your advice seriously? Of course, it's exciting to say Teslas are 100% self-driving, but saying so when they are not can lead to serious accidents.

By placing (and encouraging) unrealistic expectations on technology, we are setting ourselves up for disappointment, and potentially not just ourselves but our coworkers and clients too. Right now, nowhere is this clearer than in AI.

What will un-hype AI?

AI will eventually fall into a trough of disillusionment. I think the cracks are starting to show as people are confronted with a simple question: "How do I actually use this?" When you play with ChatGPT, it can feel almost magical, but when you try to imagine how it could be applied to a business, the magic can fade. These issues are all subject to change, of course, but it's hard to see how all of them can be resolved:

- Security: How can we interact with AI securely and make sure our private data doesn't leak out to the wider world?

- Trustworthiness: Can we ever actually trust it? And if we can’t, can we actually use it to automate any process?

- Costs: Maybe we can get a secure and trustworthy AI, but the cost of a service like this has the potential to be very high.

- Bias: Overcoming the bias in the system will be tough. If you have an AI model that is going to interact with your clients, are you happy that it has seen all of Reddit and 4chan and may, at any point, repeat something from there?

A final musing: we have been taught to believe that bigger is better. There is the idea of "scaling laws" that govern these models; basically, the more data you pump in and the bigger the model is, the better the output will be. However, these resources aren't infinite: there are only so many GPUs in the world. More fundamentally, there is only so much data. Some estimates suggest that by 2027, all the high-quality text data in the world will already have been used to train large language models. Then what?
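For readers who want the scaling-law idea in symbols, one widely cited form comes from DeepMind's "Chinchilla" work (Hoffmann et al., 2022). It models a language model's loss $L$ (lower is better) in terms of its parameter count $N$ and the number of training tokens $D$, where $E$, $A$, $B$, $\alpha$ and $\beta$ are constants fitted to experiments. The constants are left symbolic here rather than quoting fitted values, and this is one published fit offered as an illustration, not a law of nature:

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
$$

Growing the model shrinks the $A/N^{\alpha}$ term and adding data shrinks the $B/D^{\beta}$ term, but both shrink ever more slowly, and $E$ is a floor neither can get below. If $D$ is capped because the high-quality text has run out, the data term stops falling however large $N$ becomes.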

So, if that will plunge us into the trough, what will pull us out? What is the good stuff that will enable us to use AI in a meaningful way?

- Making it about YOU!: A generic chatbot isn't that useful, really. It's cool that an AI can write a song about me, but that ain't gonna help your business. By tightening their scope and focussing them on you (for example, by fine-tuning on your own data), these chat models will become far more useful. It's a fun idea that it can write a document, but it's useful when it can write a document like you.

- Big Data is soooooo 2021: Until recently, to do large-scale data science, you needed a lot of data of your own. With an LLM, this is no longer the case: anyone can access GPT-4, a model that has already been trained on vast amounts of data.

- Drafting EVERYTHING: Automation (this is a huge generalization) has typically been about transforming numbers. AI can enable automation pipelines that work with natural language. Assuming there is a human in the loop, that is.
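The human-in-the-loop point can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a recommended architecture: `draft_reply` is a hypothetical stand-in for whatever LLM call you would actually make (here it returns a canned template so the example runs offline), and the reviewer is a plain function standing in for a person who approves, edits or rejects each draft.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Draft:
    request: str
    text: str
    approved: bool = False


def draft_reply(request: str) -> str:
    """Hypothetical stand-in for an LLM call; returns a canned draft."""
    return f"Thanks for getting in touch about '{request}'. We will follow up shortly."


def run_pipeline(
    requests: list[str],
    review: Callable[[Draft], Optional[str]],
) -> list[Draft]:
    """Draft a reply for each request, then pass every draft to a reviewer.

    The reviewer returns None to reject a draft, or the (possibly edited)
    text to approve it. Only approved drafts are marked ready to send.
    """
    results = []
    for request in requests:
        draft = Draft(request=request, text=draft_reply(request))
        decision = review(draft)
        if decision is not None:
            draft.text = decision
            draft.approved = True
        results.append(draft)
    return results


def cautious_reviewer(draft: Draft) -> Optional[str]:
    """Example policy: anything about refunds is left for a person to write."""
    if "refund" in draft.request.lower():
        return None
    return draft.text


if __name__ == "__main__":
    for d in run_pipeline(["delivery times", "refund request"], cautious_reviewer):
        print(d.approved, d.text)
```

The design choice worth noticing is that nothing is marked ready to send unless the review step explicitly returns text for it: the model only ever drafts.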

Why should I care?

If you are reading this, chances are you have used ChatGPT at some point, and you probably want clarity and realistic expectations. Hype muddies that clarity. On the other hand, many of us are developers, start-up founders, technologists, people building a prebuilt AI tool into something we are making, or simply proponents of AI. Hyping something and getting people to use it (especially something you have made yourself) is not independent of what happens after you hand over the tool. Or, put much more eloquently:

When you invent the ship, you also invent the shipwreck;

when you invent the plane you also invent the plane crash;

and when you invent electricity, you invent electrocution.

– Paul Virilio

When you make something, you are partly responsible for what people do with it. As developers, we bear responsibility for all those negative things I mentioned above: biases, costs, trustworthiness.

It's easy (and wrong) to think AI development is a faraway thing being done by devs in Silicon Valley that we have little sway over. Regardless, Virilio's quote extends beyond AI to all the technology we develop and recommend. We encounter new and untested ideas every day, from small things like a new feature in a software update to a major new SaaS product from an enormous tech company with a snazzy advertising campaign that promises to change everything.

Being realistic about AI shouldn't distract us from being realistic and pragmatic about everything else. Is that shiny new SaaS product really ready for prime time? Should I definitely be using this new architecture? Technology is never the full solution. My job involves working with robot dogs, and it pains me so much to admit that maybe (just maybe) they aren't that useful.

Thank you for reading! You can also get in touch with me on LinkedIn, or take a look at the newsletter I contribute to.


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.