A Brief History of Neural Nets

Last Updated on July 25, 2023 by Editorial Team

Author(s): Pumalin

Originally published on Towards AI.

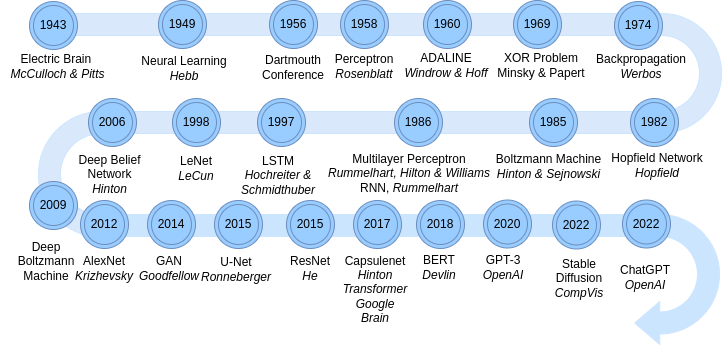

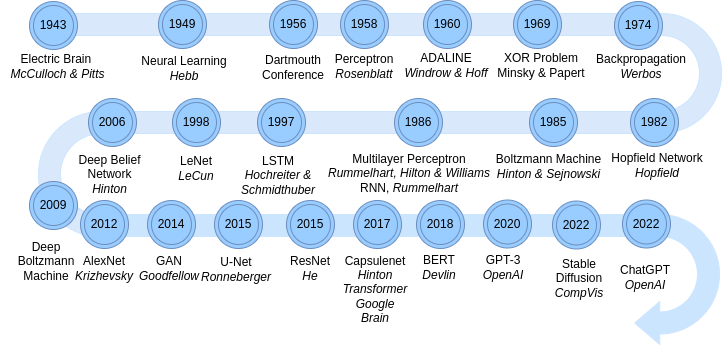

Important dates in the History of Neural Nets

This post gives a short overview of important dates in the development of Neural Networks. It is by no means meant to be complete.

- 1943: Warren McCulloch and Walter Pitts created a computational model for Neural Networks. They developed a simple neural network using electrical circuits to show how neurons in the brain might work.

- 1949: Donald O. Hebb presents a learning hypothesis for (biological) neurons. He argues that neural pathways are strengthened each time they are used. In other words, if two neurons fire at the same time, the connection between them is enhanced. The theory is often summarized as “Cells that fire together wire together.” This later became known as Hebbian Learning.

- 1956: Scientists meet at the Dartmouth Conference. This conference is considered the birth of Artificial Intelligence as a scientific field.
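The Hebbian idea from the 1949 entry can be illustrated with a small sketch. It assumes the simplest textbook form of the rule, in which a weight grows by `eta * pre * post` whenever both neurons are active. The function name `hebbian_update`, the learning rate `eta`, and the activity sequences are illustrative assumptions, not something taken from Hebb's work.

```python
def hebbian_update(weight, pre, post, eta=0.1):
    """Return the weight after one Hebbian step: w + eta * pre * post."""
    return weight + eta * pre * post


# Activity of two neurons over five time steps (1 = fires, 0 = silent).
pre_activity = [1, 0, 1, 1, 0]
post_activity = [1, 0, 0, 1, 1]

weight = 0.0
for pre, post in zip(pre_activity, post_activity):
    # The weight only changes at steps where both neurons fire together.
    weight = hebbian_update(weight, pre, post)

print(round(weight, 2))
```

The two neurons fire together at two of the five steps, so the connection is strengthened exactly twice.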

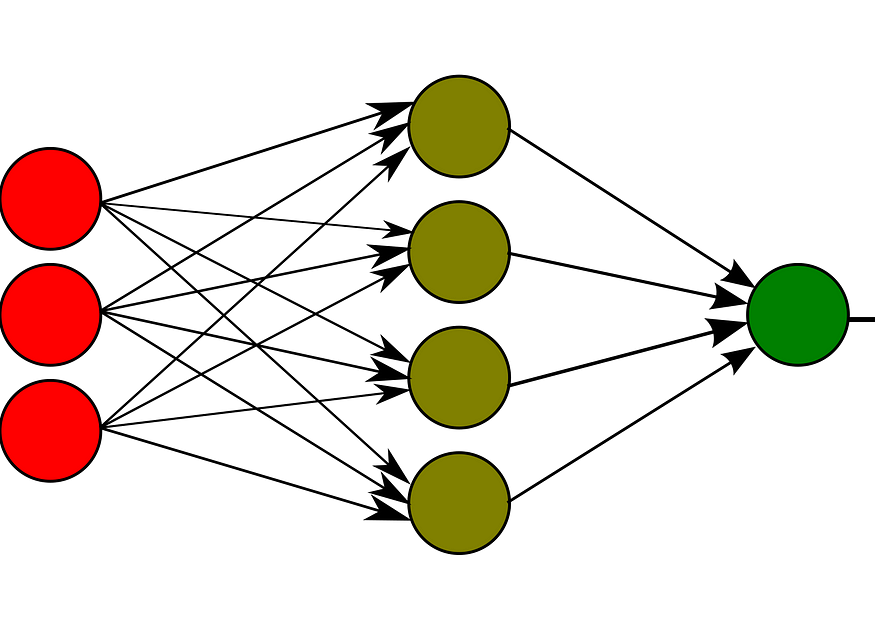

- 1958: Frank Rosenblatt develops the perceptron (single-layer neural network) inspired by the way neurons work in the brain. The Mark I Perceptron was the first successful implementation of this algorithm. This computer was able to recognize simple numbers.

- 1960: Bernard Widrow and Ted Hoff develop the ADALINE (Adaptive Linear Neuron or later Adaptive Linear Element) network, which is a single-layer neural network. The physical device on which it was implemented carries the same name.

- 1969: Marvin Minsky and Seymour Papert analyze the perceptron mathematically and show that it cannot solve some important problems, for example computing the XOR function. This leads to a stagnation in Neural Net research.

- 1974: Paul Werbos describes the algorithm of training a Neural Net through Backpropagation.

- 1982: John Hopfield develops the Hopfield Network, a recurrent Neural Net that describes relationships between binary (firing or not-firing) neurons.
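The XOR limitation from the 1969 entry can be reproduced with a minimal sketch of a single-layer perceptron. It assumes the classic perceptron learning rule with a step activation. The function name `train_perceptron`, the learning rate, and the number of epochs are illustrative choices, not a reconstruction of Rosenblatt's or Minsky and Papert's original formulation.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Train a two-input perceptron with the perceptron learning rule.

    Returns (weights, bias, number of misclassifications in the last epoch).
    """
    w = [0.0, 0.0]
    b = 0.0
    errors = 0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            # Step activation: the neuron fires if the weighted sum is positive.
            output = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - output
            if delta != 0:
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
    return w, b, errors


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_samples = list(zip(inputs, [0, 0, 0, 1]))
xor_samples = list(zip(inputs, [0, 1, 1, 0]))

_, _, and_errors = train_perceptron(and_samples)
_, _, xor_errors = train_perceptron(xor_samples)

print("AND errors in last epoch:", and_errors)
print("XOR errors in last epoch:", xor_errors)
```

AND is linearly separable, so training settles on weights that classify all four inputs correctly. XOR is not, so at least one input is still misclassified in every epoch, no matter how long training runs.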

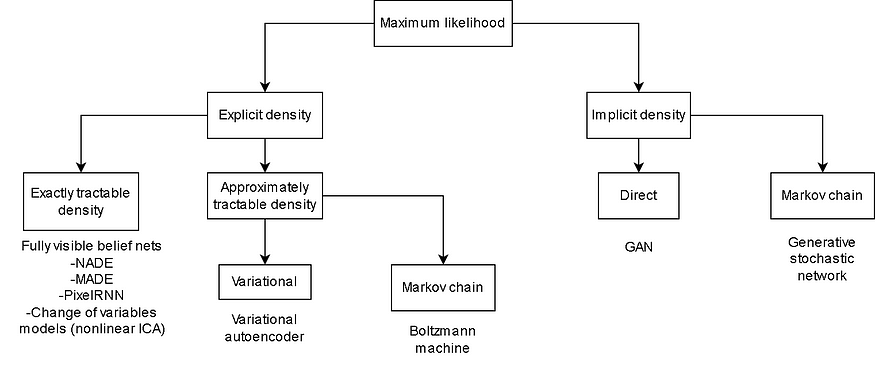

- 1985: Geoffrey Hinton and Terrence J. Sejnowski develop the Boltzmann Machine, named after the Austrian scientist Ludwig Boltzmann. A Boltzmann Machine is a type of Recurrent Neural Net. As in the Hopfield Net, the neurons take binary values only. In addition, the connections are bidirectional.

- 1986: Rumelhart, Hinton and Williams propose the Multilayer Perceptron, a multilayer neural network. In contrast to the previously described Perceptron, non-linear (but differentiable) activation functions allow non-binary neuron values. In the same year, the concept of Recurrent Neural Networks (RNN) was introduced in a work by David Rumelhart. RNNs aim to improve the memory of a network, especially when working with sequential data. This is done by feeding the outputs of neurons back into the network as additional inputs.

- 1997: Hochreiter and Schmidhuber introduce the Long Short-Term Memory (LSTM) network. LSTMs build on RNNs, but improve their memory by partially solving the vanishing gradient problem.

- 1998: LeNet-5, a Convolutional Neural Network, is developed by Yann LeCun et al. Convolutional Neural Nets are especially suited for image data. They recognize patterns in images by applying (learned) filters. The original form of LeNet was already presented in 1989.

- 2006: Geoffrey Hinton creates the Deep Belief Network, a generative model. It can be created by stacking layers of Restricted Boltzmann Machines.

- 2009: Ruslan Salakhutdinov and Geoffrey Hinton present the Deep Boltzmann Machine, a generative model similar to a Deep Belief Network, but also allowing bidirectional connections in the bottom layers.

- 2012: Alex Krizhevsky, together with Ilya Sutskever and Geoffrey Hinton, develops AlexNet, a deep Convolutional Neural Network.

- 2014: Ian Goodfellow designs the Generative Adversarial Network (GAN). A GAN is able to create new data with the same statistics as the training data. The main idea of a GAN is to use two networks that compete against each other. The first one, the Generator, creates new data. The second one, the Discriminator, is shown both real and generated data and decides to which category each sample belongs. In this way, the quality of the generated data is improved.
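The filter idea from the 1998 entry can be sketched in a few lines. The example slides a small kernel over an image and sums the element-wise products at each position. In a real Convolutional Neural Network the kernel values are learned during training, whereas here `vertical_edge` is written by hand purely for illustration. The function name `apply_filter` and the tiny image are assumptions as well.

```python
def apply_filter(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 3x4 image that is dark (0) on the left and bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = apply_filter(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the column where the image changes from dark to bright, which is the pattern this particular filter detects.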

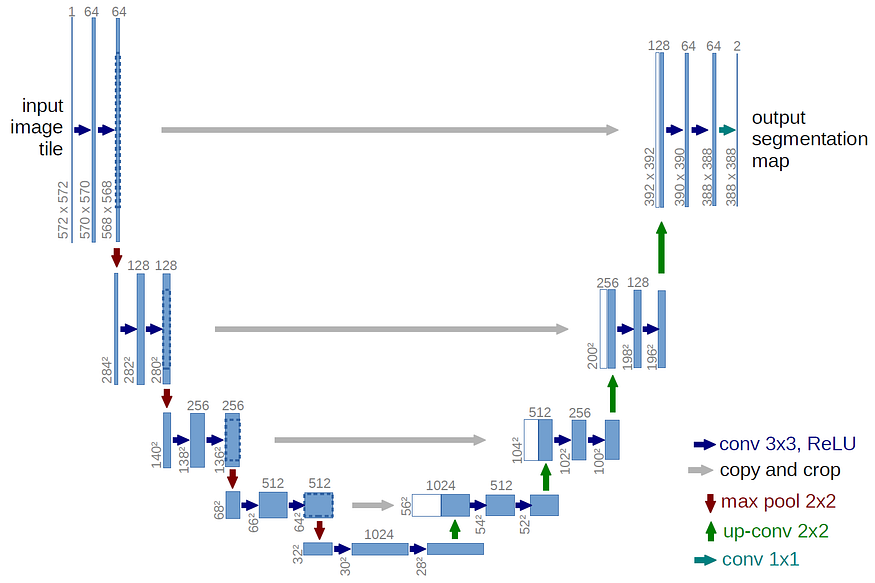

- 2015: Olaf Ronneberger et al. create the U-Net for biomedical image segmentation. The U-Net consists of an encoder convolutional network connected to a decoder network that upsamples the image. An important feature of the U-Net is that these two parts are connected by so-called skip connections.

- 2015: Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun present the Residual Neural Network (ResNet). ResNet is a deep convolutional network that mitigates the vanishing gradient problem by introducing so-called skip connections or shortcuts.

- 2017: Geoffrey Hinton, Sara Sabour, and Nicholas Frosst present CapsNet (Capsule Network), a Neural Net consisting of capsules instead of single neurons. Capsules aim to take orientation and spatial relationships in an image into account. In the same year, the Google Brain team introduced the Transformer model, a Deep Learning model mainly used for Natural Language Processing. It processes sequential data in parallel and is based on the self-attention mechanism.

- 2018: Jacob Devlin and his colleagues from Google publish BERT (Bidirectional Encoder Representations from Transformers), a Transformer-based model for Natural Language Processing (NLP).

- 2020: OpenAI publishes Generative Pre-trained Transformer 3 (GPT-3), a deep learning model that produces human-like text. It takes an initial text as input and generates text that continues it.

- 2022: Stable Diffusion is released, a text-to-image model developed by the CompVis group at the Ludwig Maximilian University of Munich, Germany.

- 2022: OpenAI develops and publishes ChatGPT, an advanced chatbot. It builds on previous GPT models, but is much more capable. It is able to remember previous parts of the conversation and can give complex answers. It is even able to generate code, for example in response to the input phrase “write a python script that calculates the percentage of a number with respect to a second number”.

Looking forward to what the future brings! 🚀

References

- Neural Networks: History https://cs.stanford.edu/people/eroberts/courses/soco/projects/neural-networks/History/history1.html

- Melanie Lefkowitz, “Professor’s perceptron paved the way for AI — 60 years too soon”, Cornell Chronicle (2019)

- Asifullah Khan, Anabia Sohail, Umme Zahoora, and Aqsa Saeed Qureshi, “A Survey of the Recent Architectures of Deep Convolutional Neural Networks”, Artificial Intelligence Review (2020), DOI: https://doi.org/10.1007/s10462-020-09825-6

- Max Pechyonkin, “Understanding Hinton’s Capsule Networks. Part I: Intuition”, Medium (2017)

- Stable Diffusion Web Application: https://huggingface.co/spaces/stabilityai/stable-diffusion

- ChatGPT web application: https://chat.openai.com/chat
