Prompt-Based Automated Data Labeling and Annotation

Last Updated on May 2, 2023 by Editorial Team

Author(s): Puneet Jindal

Originally published on Towards AI.

Generate your large training dataset in less than an hour!

What is the problem statement?

80% of the time goes into data preparation…

Garbage in, garbage out for AI model accuracy…

In short, the whole data preparation workflow is painful: different parts are owned by different teams or people, often spread across different geographies depending on the company size and the data compliance requirements involved.

If you have landed here, I assume you already know the problem. If you haven't yet understood it, read the section below; otherwise, feel free to skip it.

The problem in more detail

- Most teams collect data and simply dump it into their cloud without any plan to analyze it, for various obvious and less obvious reasons. They don't know what is in the data and have no strategy for what to do with it, even though data can reveal what your customers want!

- Even if they know what's in the data at a high level, that is not enough to identify a business use case or a monetization opportunity. Strong leadership intent is required to invest time in such an effort, as it takes weeks to months just to uncover basic patterns in the data, which can cost at least a few thousand US dollars… and that is only for a version 1 analysis.

- Once a list of potential use cases is identified, it takes months just to decide which one to pursue. For example, if a manufacturing or logistics company records CCTV footage across its manufacturing hubs and warehouses, there could be many potential use cases, ranging from workforce safety to visual inspection automation. Choosing among them means investing in a POC or hiring a very, very expensive expert who has already done it, and even that experience might not suffice. 99% of consultants will instead ask you to actually execute these POCs.

- By this time, months or years of effort have gone by without concrete results, i.e., without AI working at scale and driving the top or bottom line. Management becomes impatient, and it becomes harder to secure more budget since time is running out.

- Let's assume you finally pick 1–3 use cases to pursue at scale. The first challenge is preparing the data: selecting it out of a huge pile of redundant data so that highly accurate models can be trained, keeping only the data that actually improves model accuracy. Selecting it judiciously reduces data movement, data processing compute, and data labeling costs downstream.

- Once the data is collected, synchronized, and selected, it needs to be labeled, which, again, no one on the AI team wants to do. Nothing in the world motivates a team of ML engineers and scientists to spend the required amount of time on data annotation and labeling. There are many more complexities at this stage. If labeling is outsourced, it takes its own time to trial and manage different vendors, manage tasks, collate the data, track progress, and ensure annotation quality. You can read the well-known publication by the Google Research team titled “Everyone wants to do the model work, not the data work”.

- Even after that, the data needs to be collated so that it is easy to consume inside an ML training environment such as AWS SageMaker, GCP Vertex AI, Azure ML, or even a Jupyter Notebook on your VMs.
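Collating the data usually means converting labels into a file format the training environment can read. As a minimal sketch, assuming COCO-style object-detection JSON (one common interchange format; the article does not prescribe one) and simple input tuples, the hypothetical helper `to_coco` builds such a structure. The file name and labels in the example are made up for illustration.

```python
import json


def to_coco(images, annotations, categories):
    """Collate labels into a minimal COCO-style detection dict (hypothetical helper).

    images:      list of (image_id, file_name, width, height)
    annotations: list of (image_id, label, (x, y, w, h)) with bbox in pixels
    categories:  list of label names; ids are assigned 1..N in list order
    """
    cat_ids = {name: i for i, name in enumerate(categories, start=1)}
    coco = {
        "images": [
            {"id": iid, "file_name": fn, "width": w, "height": h}
            for iid, fn, w, h in images
        ],
        "annotations": [],
        "categories": [{"id": cid, "name": name} for name, cid in cat_ids.items()],
    }
    for ann_id, (iid, label, (x, y, w, h)) in enumerate(annotations, start=1):
        coco["annotations"].append({
            "id": ann_id,
            "image_id": iid,
            "category_id": cat_ids[label],  # raises KeyError for an unknown label
            "bbox": [x, y, w, h],           # COCO boxes are [x, y, width, height]
            "area": w * h,                  # approximated by the box area
            "iscrowd": 0,
        })
    return coco


# Hypothetical example: one CCTV frame with one labeled person.
coco = to_coco(
    images=[(1, "cam01_frame0001.jpg", 1920, 1080)],
    annotations=[(1, "person", (100, 200, 50, 120))],
    categories=["person", "helmet"],
)
print(json.dumps(coco, indent=2))
```

Writing this dict to disk with `json.dump` gives a single annotation file that common tooling (for example, `pycocotools`) can load, which is one way to keep the hand-off to any of the training environments above uniform.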

There are many more, and I could go on and on… I would be happy to connect with you about the challenges you have experienced in the workflow for your own problem statements.

As you can see, this is a complete workflow optimization challenge centered on the ability to execute data-related operations 10x faster.

Within this workflow, annotation and its quality is the messiest part of the problem, and it is the focus of this article.

You must have heard about the recent disruptions around GPT and ChatGPT, where users interact with the system through prompts. For example, I can ask DALL-E 2 to generate new images from simple text prompts. For more on this, you can read the well-known article by my friend Ritika:

Understand the tech: Stable diffusion vs GPT-3 vs Dall-E


www.labellerr.com

There is another foundation model, SAM by Meta AI, where I can give a cursor (point) prompt on an image, and the model will segment the object at that location. You can read more in the article below.

SAM from Meta AI — the chatGPT moment for computer vision AI


pub.towardsai.net

In a way, prompt-based interfaces are the new generation of interfaces, and users now expect them. We asked ourselves: what if we combined the zero-shot capability of LLMs and other large foundation models with our own proprietary algorithms as a fine-tuned auto-labeling layer?

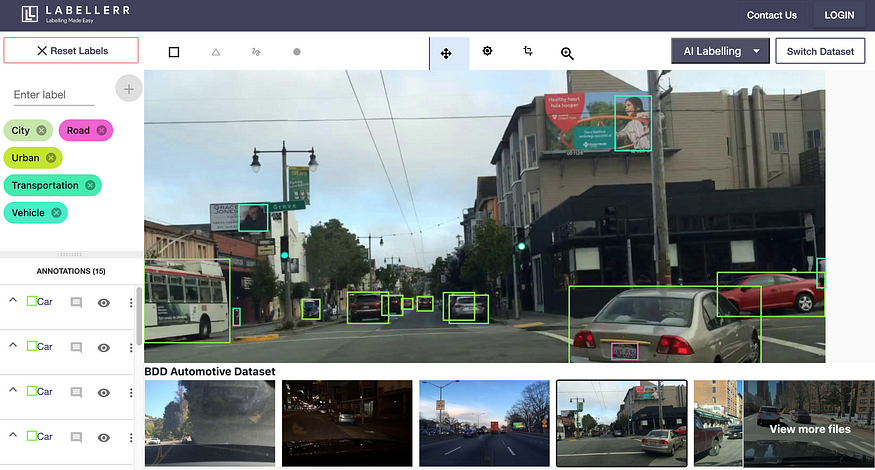

Putting this mixture together, we ended up creating a simple interface where:

- You just connect your thousands of images and videos, provide the set of labels you want to annotate as prompts, and hit the Submit button.

- Within a few moments, the system generates the results for you. ChatGPT can state wrong facts very confidently, which is a well-known limitation. Here, instead, the system flags which results it is confident about and which need your attention.

- Finally, as a user, you can select the confident images, plus any not-confident but accurate ones, and feed them to your model in the required format with just a click. Alternatively, you can fine-tune the labels with your own model or with our premium label-tuning capability, or, as a last resort, outsource the remaining highly subjective images and labels, i.e., the unknown edge cases that are still hard to automate.
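The confidence-based flagging described above can be sketched as a simple threshold rule. This is a minimal illustration, not the system's actual logic: the `Prediction` record, the `triage` function, and the 0.8 default threshold are all hypothetical. An image is auto-accepted only when every predicted label on it clears the threshold; anything else, including images where the model returned nothing, is routed to human review.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical record for one auto-generated label; field names are illustrative.
@dataclass
class Prediction:
    image_id: str
    label: str      # one of the label prompts supplied by the user
    score: float    # model confidence in [0, 1]


def triage(predictions, image_ids, threshold=0.8):
    """Split images into auto-accepted and needs-review lists.

    An image is accepted only if it has at least one prediction and every
    prediction on it scores at or above `threshold` (hypothetical default).
    """
    by_image = defaultdict(list)
    for p in predictions:
        by_image[p.image_id].append(p)

    confident, needs_review = [], []
    for image_id in image_ids:
        preds = by_image.get(image_id, [])
        if preds and all(p.score >= threshold for p in preds):
            confident.append(image_id)
        else:
            # Low-confidence or empty results are flagged for a human.
            needs_review.append(image_id)
    return confident, needs_review


preds = [
    Prediction("img1", "person", 0.95),
    Prediction("img1", "helmet", 0.91),
    Prediction("img2", "person", 0.55),
]
confident, needs_review = triage(preds, ["img1", "img2", "img3"])
print(confident)     # ['img1']
print(needs_review)  # ['img2', 'img3']
```

Lowering `threshold` sends fewer images to review at the cost of accepting more mistakes; in practice it would be tuned on a small hand-checked sample before trusting the auto-accepted bucket.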

So what's the benefit in the end?

- You don't need to outsource everything; you only outsource the highly subjective edge cases.

- The time you spend is hours rather than months, i.e., 90%+ less time.

- A single team member can run the labeling workflow instead of a whole team, which reduces cost and complexity and makes team members' lives easier through a very simple interface.

- We cut through the noise and hype around large foundation models and abstracted them behind a click-and-prompt interface, so you can realize their full potential.

- Most importantly, you don't need to do all of the data-related workflows in Jupyter Notebooks or write API integrations.

My final question to you!

Do you feel that data preparation and AI development will be 11x more fun with prompt-based interfaces?

Isn't this a step toward Jarvis-like capabilities for data preparation?

I would be happy to share what I think about it. Let's connect on LinkedIn: I write about new and interesting aspects of computer vision data preparation, data ops, data pipelines, etc., and I am happy to chat about them. Technical deep dives only!


Published via Towards AI


Note: Content contains the views of the contributing authors and not Towards AI.