This AI newsletter is all you need #9

Last Updated on August 23, 2022 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI the World’s Leading AI and Technology News and Media Company. If you are building an AI-related product or service, we invite you to consider becoming an AI sponsor. At Towards AI, we help scale AI and technology startups. Let us help you unleash your technology to the masses.

What happened this week in AI

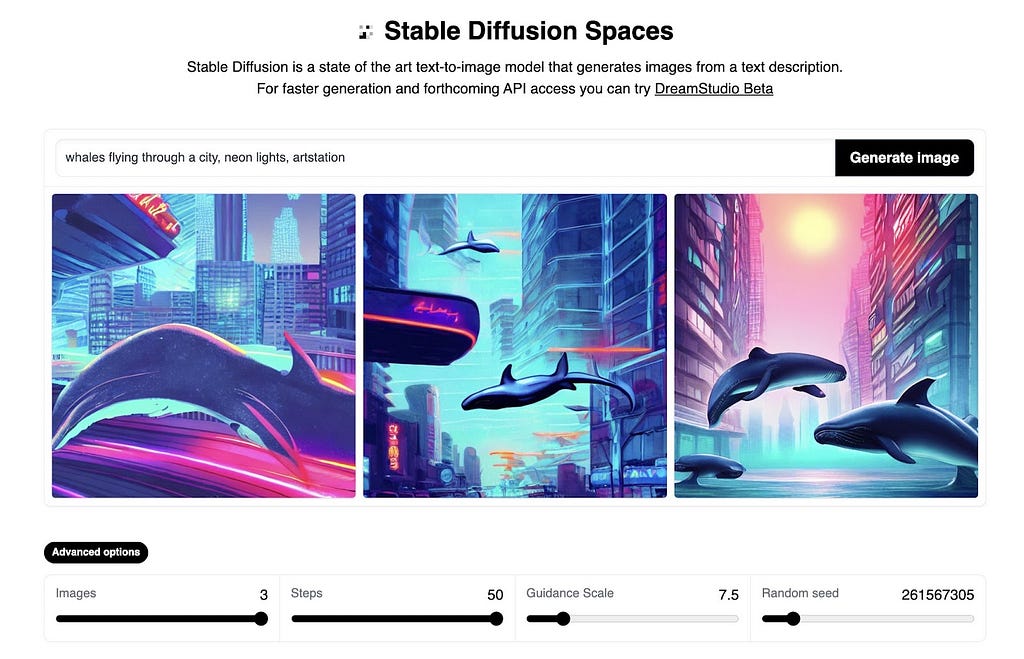

This week, our attention turned from DALL·E to a new open-source model called Stable Diffusion. Stable Diffusion comes from researchers at Stability AI, a London- and Los Altos-based startup, along with RunwayML, LMU Munich, EleutherAI, and LAION. The big deal: the AI model and code will be published as open source, which means you have access to the code, unlike DALL·E, which is closed source.

Stable Diffusion is very similar to DALL·E 2 in terms of architectural choices, though much lighter thanks to its use of latent diffusion. Latent diffusion applies the diffusion process, which starts from noise and gradually turns it into an image, in a compressed latent space rather than in pixel space, using an encoder and a decoder to move between the latent space and the image space. Because of this, the model can run on a single GPU, so many more people can run the code themselves, and inference is much faster, which means you won’t have to wait two minutes to get funny results as with DALL·E or Craiyon anymore! It’s also an important step for the community: powerful state-of-the-art text-to-image models are now available to implement and experiment with, creating a lot of opportunities to push research on diffusion models and image models forward. We are very excited to follow the news and work done with Stable Diffusion! If you run their code, please let us know and share your creations on our Discord server!
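To make the latent diffusion idea a bit more concrete, here is a minimal toy sketch of the generation loop. It is not Stable Diffusion's actual code: `toy_denoiser` and `toy_decoder` are hypothetical stand-ins for the trained denoising network and decoder, the sizes are made up, and text conditioning is left out entirely. The encoder, which is used to map training images into the latent space, is also omitted because generation starts directly from latent noise. The only point is to show that the iterative denoising runs on a small latent grid and that the decoder is called once at the end.

```python
import random

LATENT_SIZE = 8   # toy latent grid is 8x8 (assumed size, for illustration only)
SCALE = 8         # toy decoder upsamples 8x per side, giving a 64x64 "image"
STEPS = 20        # number of denoising iterations (assumed)


def sample_noise(size, rng):
    """Start from pure Gaussian noise in the latent space."""
    return [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(size)]


def toy_denoiser(latent, step):
    """Hypothetical stand-in for the trained denoising network.

    A real model predicts and removes noise, conditioned on the step
    (and on the text prompt). This toy version ignores the step and
    simply shrinks every value toward zero.
    """
    return [[value * 0.8 for value in row] for row in latent]


def toy_decoder(latent, scale):
    """Hypothetical stand-in for the decoder: nearest-neighbour upsampling."""
    image = []
    for row in latent:
        wide_row = [value for value in row for _ in range(scale)]
        image.extend(list(wide_row) for _ in range(scale))
    return image


def generate(seed=0):
    rng = random.Random(seed)
    latent = sample_noise(LATENT_SIZE, rng)
    # The expensive iterative loop only ever touches the small latent grid.
    for step in range(STEPS):
        latent = toy_denoiser(latent, step)
    # The decoder maps the final latent to image space exactly once.
    return latent, toy_decoder(latent, SCALE)


latent, image = generate()
latent_values = LATENT_SIZE * LATENT_SIZE
image_values = len(image) * len(image[0])
print(latent_values, image_values)
```

In this toy setup each denoising step processes 64 values instead of the 4,096 values of the final image, which is the intuition behind the lighter memory footprint and faster inference described above.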

You can also play with the Stable Diffusion API right now!

Hottest News

- Engineers from Stanford have developed a chip that does AI processing within its memory (improving computing efficiency)

“A novel resistive random-access memory (RRAM) chip that does the AI processing within the memory itself, thereby eliminating the separation between the compute and memory units. Their ‘compute-in-memory’ (CIM) chip, called NeuRRAM, is about the size of a fingertip and does more work with limited battery power than what current chips can do.”

- Stable Diffusion: An Open-Source image generator AI model!

Stable Diffusion comes from researchers at Stability AI, a London- and Los Altos-based startup, along with RunwayML, LMU Munich, EleutherAI, and LAION. The big deal: the AI model and code have been published as open source. Play with it here.

- TikTok introduced “AI greenscreen”, their text-to-image generator

The results aren’t at the level of DALL·E 2 or Midjourney yet, but it is quite cool, and TikTok is certainly getting into this race. Right now, AI greenscreen generates abstract “blobs” and swirling imagery, focusing on creating nice backgrounds rather than photorealistic images.

Most interesting papers of the week

- Paint2Pix: Interactive Painting based Progressive Image Synthesis and Editing

A novel approach that predicts (and adapts) “what a user wants to draw” from rudimentary brushstroke inputs, by learning a mapping from the manifold of incomplete human paintings to their realistic renderings. Get the code!

- UPST-NeRF: Universal Photorealistic Style Transfer of Neural Radiance Fields for 3D Scene

A novel 3D scene photorealistic style transfer framework for transferring photorealistic style from an input image to a 3D scene, merging a 2D style transfer approach with voxel representations.

- Transframer: Arbitrary Frame Prediction with Generative Models [deepmind paper]

Transframer: A general-purpose framework for image modeling and vision tasks based on probabilistic frame prediction, combining U-Net and Transformer components.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

Announcements

At Towards AI Inc we have exciting news to announce — we have acquired Confetti AI. Confetti AI was founded by Mihail Eric and Henry Zhao in 2020 and has grown to 6,000 active users with a content library of over 350 questions. Confetti AI was built on a decade of experience in artificial intelligence and hundreds of hours of discussions with experts in the field. It aims to curate the leading library of machine learning and data science interview questions, focusing on both conceptual understanding and practical applications. Learn more about the news.

The Learn AI Together Community section!

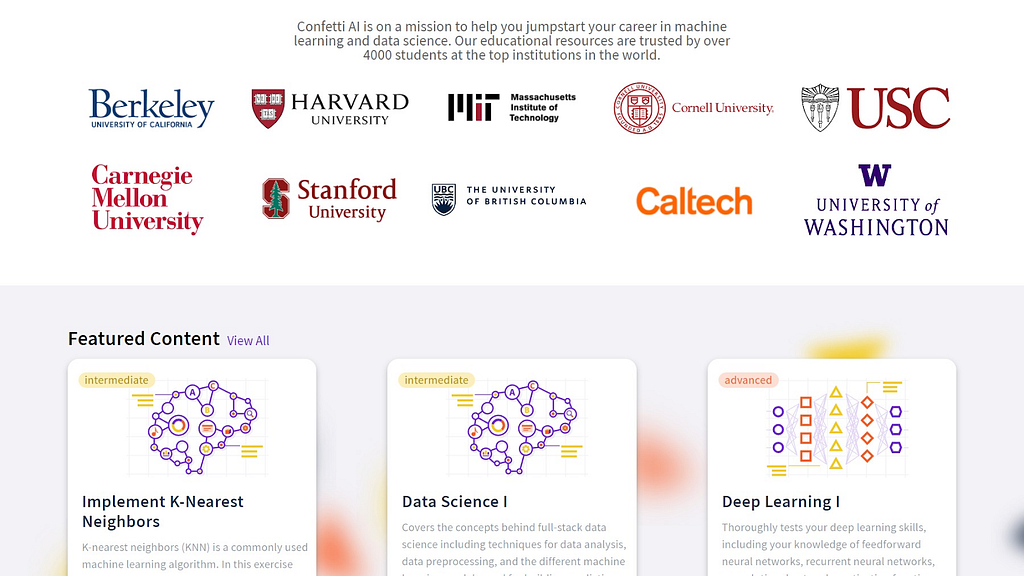

Meme of the week!

What an awesome way to evaluate the actual technical knowledge of a recruiter 😂. Of course, do not do that, it’s just a meme! Meme shared once again by one of our fantastic moderators with great humor, DrDub#0108. Join the conversation and share your memes with us!

Featured Community post from the Discord

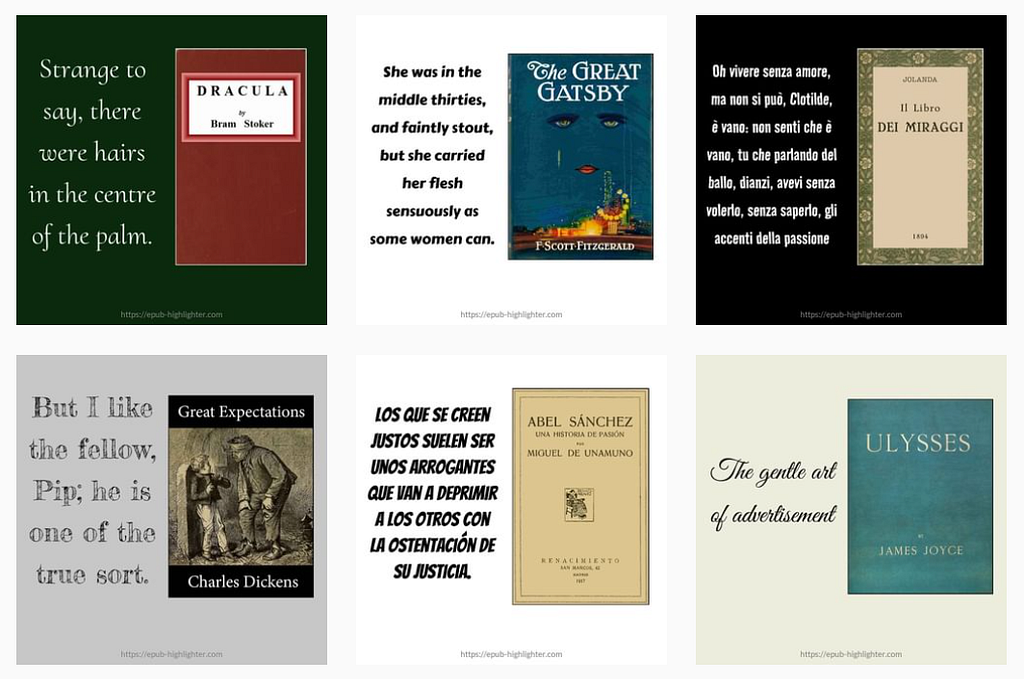

Awesome! One of our moderators just launched a super cool product!

“my product of book->IG post generator is inching towards launch.” DrDub#0108

DrDub’s product is all about using AI to generate Instagram posts from a book. Authors can use it for free to automatically generate and share quotes and interesting ideas for their books on Instagram. How cool is that!

Watch some of the results on its Instagram page, and don’t forget to share your own projects or products with the community if you’d like to be featured in the newsletter as well!

AI poll of the week!

TAI Curated section

Article of the week

Which NLP Task Does NOT Benefit From Pre-trained Language Models?

There is such a long history of massively influential pre-trained generic language models that we take them for granted. Still, they are an absolutely required basis for most NLP applications. However, the author of the article we wanted to highlight claims that there are cases in which a pre-trained general model is ineffective, backing up his claims with excellent analysis.

If you are interested in publishing with us at Towards AI, please sign up here, and we will publish your blog to our network if it meets our editorial policies and standards.

Lauren’s Ethical Take on Stanford’s NeuRRAM

Stanford’s progress on NeuRRAM is an incredible feat! I highly encourage reading the entire article on it if you haven’t already. Although compute-in-memory technology is not new, its application beyond mere simulation is. Combined with doubled energy efficiency and promising performance on AI benchmarks such as MNIST and CIFAR-10, the NeuRRAM chip has incredible potential for good.

Based on its specifications, I can foresee that one of its major uses will be biomedical implantation. Compute power, size and efficiency are factors that are always points of friction with developing implants, so these improvements could easily result in direct benefits to implantees with no compromise in the device’s abilities. This advancement will come with its own set of bioethical puzzles in addition to engineering ones, such as ensuring information security and patient autonomy. Stanford has always been a leader in medical device innovation as well as ethics, making it well-equipped to lead this next wave of AI-powered implants.

Another huge benefit that stems from the development of NeuRRAM is the environmental impact of reduced power consumption and hardware needs. The power required for large-scale computation and the toxicity and difficult disposal of tech waste make our beloved machines a serious environmental burden, for both human lives and the lives we share the planet with. NeuRRAM’s success as a physical product shows that these advancements are possible and necessary for innovation. My hope is that this starts a trend focusing on doing more with less.

I want to highlight that in the article, H.-S. Philip Wong mentions that the innovation of NeuRRAM was made possible by an international team with diverse expertise and backgrounds, which speaks well of the value of collaboration. I’m so excited to see where else NeuRRAM takes us!

Job offers

Research Scientist — Machine Learning @ DeepMind (London, United Kingdom)

Senior Data Scientist @ EvolutionIQ (Remote)

Senior ML Engineer — Algolia AI @ Algolia (Hybrid remote)

Senior ML Engineer — Semantic Search @ Algolia (Hybrid remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net or post the opportunity in our #hiring channel on discord!

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

This AI newsletter is all you need #9 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Note: Content contains the views of the contributing authors and not Towards AI.