This AI newsletter is all you need #5

Last Updated on August 2, 2022 by Editorial Team

What happened this week in AI

The big news: DALL-E 2 is now in beta! OpenAI just announced that it is opening DALL-E 2 to 1 million people, ten times as many as during the pre-beta. They successfully mitigated the risks of letting anyone use their image generation model as a product. You can no longer spam generations to make funny memes for free: generating as many images as you could for free before the beta would now cost nearly $300.

This past week also brought some terrific publications, such as NUWA-Infinity, BigColor, and MegaPortraits, all advancing image generation with fantastic approaches and results. ICML 2022 also announced its outstanding papers, which are well worth a read.

Last but not least, in this issue we feature a podcast hosted by one of our community members!

Hottest News

- OpenAI Is Selling DALL-E to One Million People

If you’re lucky, you were one of the 100,000 people who have played with DALL-E 2 since its invite-only launch in April. This week, OpenAI opened the model to ten times as many people as it turns the AI into a paid service. The days of spamming images for memes are over: you now have to pay for them.

- The WorldStrat Dataset

An open, high-resolution satellite imagery dataset covering nearly 10,000 km² of unique locations. It comes with an open-source Python package to rebuild and extend the dataset, with an application to super-resolution.

- PLEX: a framework to improve the reliability of deep learning systems

Google introduced a framework for reliable deep learning that offers a new perspective on a model’s abilities, including a number of concrete tasks and datasets for stress-testing model reliability. The framework comes with Plex, a set of pretrained large-model extensions that can be applied to many different architectures.

Most interesting papers of the week

- NUWA-Infinity: Autoregressive over Autoregressive Generation for Infinite Visual Synthesis

NUWA-Infinity is a generative model for infinite visual synthesis, i.e., synthesizing high-definition images of arbitrary resolution or generating “infinite” video from a single image.

- BigColor: Colorization using a Generative Color Prior for Natural Images

BigColor is a novel colorization approach that produces vivid colors for diverse in-the-wild images with complex structures. It learns a generative color prior that focuses on color synthesis given the spatial structure of an image.

- MegaPortraits: One-shot Megapixel Neural Head Avatars

The authors bring megapixel resolution to animated face generation (neural head avatars), focusing on the “cross-driving synthesis” task, where the appearance of the driving image differs substantially from that of the animated source image.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

Looking for hands-on experience and a cool project?

Why not join Aggregate Intellect’s working group on ML in weather forecasting?

Starting 30th July!

Our friends at Aggregate Intellect will give you an in-depth understanding of the theory behind two state-of-the-art weather forecasting models, FourCastNet by NVIDIA and NowCasting by DeepMind, and teach you how to incorporate physics-based knowledge into ML forecasting models using the NVIDIA Modulus platform. This is a really cool project, and we are convinced it will benefit many of you.

Why join?

- Immerse yourself in the community and walk away with some cool new friends

- Learn about forecasting and get hands-on experience with it

- Contribute to a repository that will be seen and used by many

- Potentially publish an article on the topic

Learn more about it and register for free

The Learn AI Together Community section!

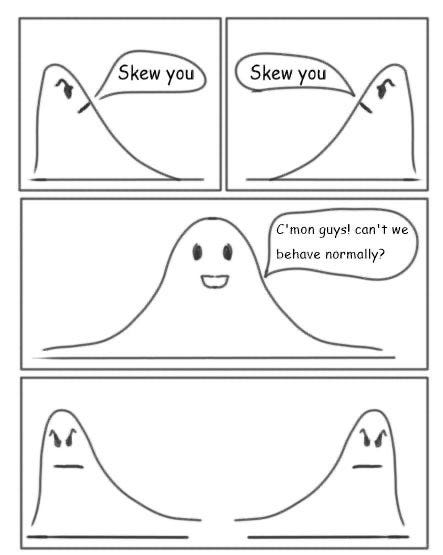

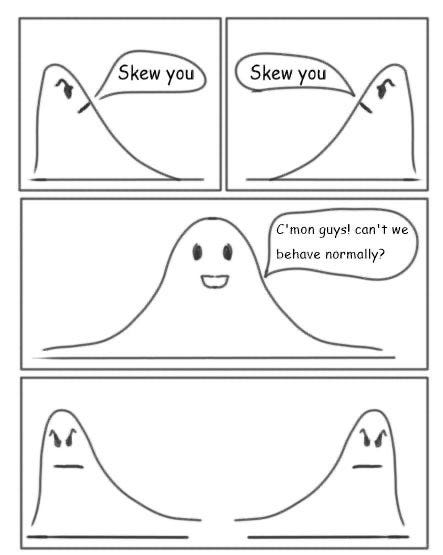

Meme of the week!

Featured community post from the Discord

One of our community members has a podcast: “The Artists of Data Science”.

As the host of the podcast, Harpreet Sahota, says, “The purpose of this podcast is clear: to make you a well-rounded data scientist. To transform you from aspirant to practitioner to leader. A data scientist that thinks beyond the technicalities of data and understands the impact you play in our modern world.”

If that sounds interesting, you can listen to his podcast here.

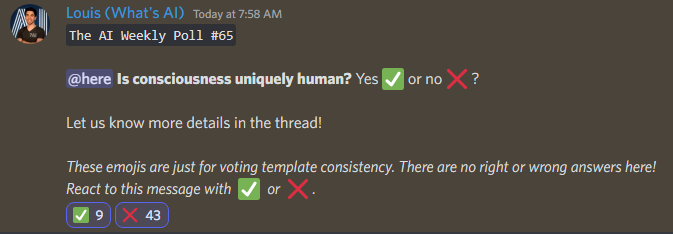

AI poll of the week!

TAI Curated section

Article of the week

Model Explainability — SHAP vs. LIME vs. Permutation Feature Importance: Understanding our machine learning models is crucial, yet many engineers neglect it. Looking inside complex models helps us understand how and why a model makes a decision and which features were crucial in reaching it. This article covers post-hoc, local, and model-agnostic techniques for interpreting models, with extensive coverage of SHapley Additive exPlanations (SHAP), Local Interpretable Model-agnostic Explanations (LIME), and Permutation Feature Importance.
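To make the last of these techniques concrete, here is a minimal sketch of permutation feature importance in plain Python. It is our own illustration, not code from the featured article, and it assumes a fitted model exposed as a `predict` function over a single row, rows stored as lists of floats, and mean squared error as the metric; the function names are hypothetical. Shuffling one feature column breaks its link to the target, and the average increase in error is that feature’s importance.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[Row], float],
    X: List[Row],
    y: Sequence[float],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle column j only, leaving every other feature intact.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            error = mse(y, [predict(row) for row in X_perm])
            increases.append(error - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical toy "model": uses feature 0 and ignores feature 1.
    X = [[float(i), float(i % 3)] for i in range(20)]
    y = [2 * row[0] for row in X]
    print(permutation_importance(lambda row: 2 * row[0], X, y))
```

A feature the model ignores scores exactly zero, because shuffling it leaves every prediction unchanged. For real projects, scikit-learn ships a maintained version as `sklearn.inspection.permutation_importance`.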

This week at Towards AI, we published 29 new articles and welcomed four new writers to our platform. If you are interested in writing for us at Towards AI, please sign up here, and we will publish your blog to our network if it meets our editorial policies and standards.

Lauren’s Ethical Take on PLEX

We constantly seem to be trying to reduce uncertainty with technology, and this is especially true of AI. Eliminating that notoriously uncomfortable gray area is an aspiration for many models, because demanding ever-higher accuracy is how we’ve made so much progress and offloaded some responsibility for decision-making. But not everything can be neatly categorized, and you can hardly go through life without uncertainty. Now, Google AI’s PLEX framework reflects the idea that there’s more to reliability than high accuracy. By giving models ways to express uncertainty, it adds a sense of realism and acknowledges an often unavoidable facet of existence.

In recent years, the tech literature has theorized about the trust and trustworthiness of AI (such as this framework put forth by Jeannette M. Wing). These ideas were adapted from trustworthy computing frameworks of the early 2000s, most of which conclude that reliability is an essential aspect of building trust. This may help explain the technical shift toward reliability in an effort to achieve higher trust.

Among these frameworks for AI trustworthiness, some treat ethics as a component of trust. I tend to support the notion that trust is part of ethics, and that trustworthiness in mainstream applications will build on itself to encompass larger and more difficult concepts such as moral responsibility.

Featured jobs this week

Senior Machine Learning Scientist @ Atomwise (San Francisco, USA)

Senior ML Engineer — Algolia AI @ Algolia (Hybrid remote)

Senior ML Engineer — Semantic Search @ Algolia (Hybrid remote)

Machine Learning Engineer @ Gather AI (Remote — India)

Deep Learning Engineer (R&D — Engineering) @ Weights & Biases (Remote)

Senior Computer Vision Engineer @ Neurolabs (London & Remote)

Principal Machine Learning Engineer | AI Product @ Jasper.ai (Remote)

Machine Learning Engineer @ Runway (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net or post the opportunity in our #hiring channel on Discord!

This AI newsletter is all you need #5 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.


Note: Content contains the views of the contributing authors and not Towards AI.