This AI newsletter is all you need | #3

Last Updated on July 26, 2022 by Editorial Team

Author(s): Towards AI Editorial Team

Originally published on Towards AI, the World’s Leading AI and Technology News and Media Company. If you are building an AI-related product or service, we invite you to consider becoming an AI sponsor. At Towards AI, we help scale AI and technology startups. Let us help you unleash your technology to the masses.

What happened this week in AI

Our AI highlight this week was a recent publication by Meta AI, “No Language Left Behind” (NLLB-200). This new model leverages the Transformer architecture to translate between 200+ languages and reaches state-of-the-art performance, even for languages with very little training data available.

What makes this news so cool? First, it is open-source: the paper, code, training scheme, pre-trained models, and instructions for obtaining the dataset are all available. Second, we typically train an AI model to translate text with a huge amount of paired text data, such as French-English translations, so that the model learns how the two languages relate and how to go from one to the other and back. To get good results and generalize well in the real world, the model therefore needs paired examples covering pretty much every kind of sentence, which is pricey, complicated, or just plain impossible to collect for many languages.

Third, a traditional model translates from one language to another in a single direction, so every new language requires new models. NLLB’s ability to translate between 200 languages with a single model is what makes it so groundbreaking.
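To put the single-direction point in numbers, here is a minimal arithmetic sketch. It assumes the simplest possible setup, one separate model per ordered (source, target) language pair, which is an illustration and not a description of any specific system. The function names are hypothetical.

```python
def directed_pairs(num_languages: int) -> int:
    """Count ordered (source, target) pairs where source != target."""
    return num_languages * (num_languages - 1)


def models_added_by_new_language(existing_languages: int) -> int:
    """Single-direction models needed to connect one new language
    to every existing language, in both directions."""
    return 2 * existing_languages


if __name__ == "__main__":
    # Assumption: one model per translation direction.
    print(directed_pairs(200))                # 39800
    print(models_added_by_new_language(200))  # 400
```

Under that assumption, covering 200 languages would take 39,800 single-direction models, and adding language number 201 would take 400 more, which is why a single many-to-many model is attractive.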

We highly value open-source work at Towards AI, and we think Meta AI’s recent open-sourcing efforts, including NLLB, are laudable.

Hottest News

- This AI model predicts your salary with 87% accuracy!

Sarah H. Bana recently trained a model on more than one million online job postings to evaluate the salary-relevant characteristics of jobs in close to real time. The model accurately predicted the associated salaries 87% of the time. By comparison, using only the job postings’ job titles and geographic locations yielded accurate predictions just 69% of the time.

- OpenAI’s CLIP neural network tested for robots demonstrated racist and sexist stereotype behaviors

“Virtual robot run by artificial intelligence acts in a way that conforms to toxic stereotypes when asked to pick faces that belong to criminals or homemakers.” The lead study author Andrew Hundt says humanity is at risk of creating “a generation of racist and sexist robots.”

This is what happens when you train your models using uncurated Internet data: the answers average out to the stereotypes present in our society. See more on the topic in Lauren’s ethical take section in the newsletter!

- Google introduced Mood Board Search, a new ML-powered research tool that uses mood boards as a query over image collections

The title says it all: “Explore image collections using mood boards as your search query”! It is a web-based tool that lets you train a model to recognize visual concepts using mood boards and machine learning, then use it to explore and analyze image collections.

Most interesting papers of the week

- No Language Left Behind: Scaling Human-Centered Machine Translation

GPT-3 and other language models are really cool. They can be used to understand pieces of text, summarize them, transcribe videos, create text-to-speech applications, and more, but they all share one big problem: they only work well in English. This language barrier hurts billions of people who want to share and exchange with others but cannot. This new model translates between 200 languages with a Transformer-based architecture.

- Local Relighting of Real Scenes

The authors introduce the task of local relighting, which changes a photograph of a scene by switching on and off the light sources that are visible within the image. They present a GAN-based approach and a benchmark, Lonoff, a collection of 306 precisely aligned images taken in indoor spaces with different combinations of lights switched on.

- SNeRF: Stylized Neural Implicit Representations for 3D Scenes

Given a neural implicit scene representation trained with multiple views of a scene, SNeRF stylizes the 3D scene to match a reference style.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

Come meet us at Ai4 2022!

Apply to the event and come for free! If you are around Las Vegas in August and into AI, why wouldn’t you join us there?

Ai4 2022 is an event focused on business leaders and data practitioners aiming to facilitate the responsible adoption of artificial intelligence and machine learning technology.

Take a look at all the talks, decide for yourself, and let us know if you’re going! Our Head of Community, Louis Bouchard, will be glad to meet you there in person.

Interested in becoming a Towards AI sponsor and being featured in this newsletter? Find out more information here or contact sponsors@towardsai.net!

The Learn AI Together Community section!

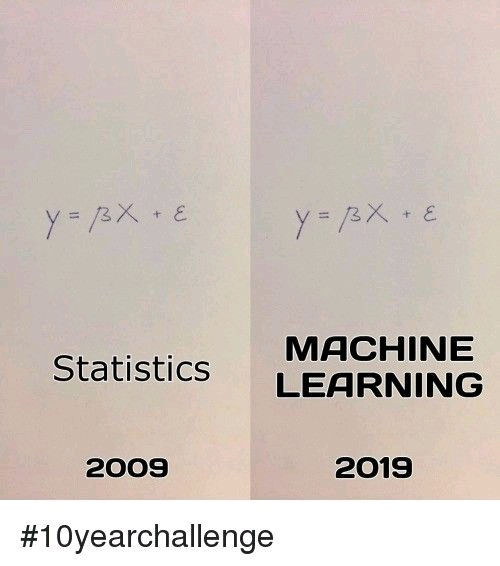

Meme of the week!

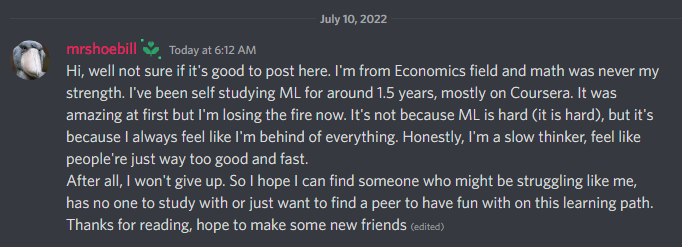

Featured Community post from the Discord

We want to make things clear: this is, in fact, the perfect introduction and the ideal profile for our community members! Of course, we also highly value professionals, researchers, and professors who are willing to join the community, exchange ideas with us, and even help others who are learning or implementing AI models and need advice.

We have a channel called “#👨🎓self-study-groups”, created to help you find people to study with. You don’t need to be a math expert, a programming expert, or any kind of expert to be involved. We created this community for people to learn together, hence the “Learn AI Together” name, and this is still what we aim to create. There are no requirements to join and enjoy the community other than being interested in the field, and we value the diversity of perspectives that grow and strengthen our community.

So, if you are interested in AI, regardless of your background, consider joining and chatting with all of us!

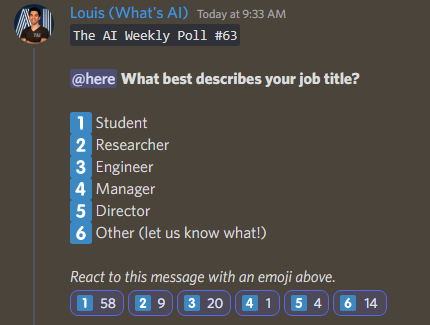

AI poll of the week!

Help us get to know our community better by answering our weekly poll on Discord! We’d love to see if you fit in one of those categories or occupy any other role. It would help us provide more valuable content directly for you 🙂

TAI Curated section

Article of the week

SiEBERT, RoBERTa, and BERT: Which One to Implement in 2022?: This article provides a comprehensible overview of SiEBERT, RoBERTa, and BERT. The author makes it easy for readers to pick the perfect model by outlining the benefits and use cases of each version. This article also covers the inner workings of RoBERTa in a few simple bullet points.

Last week we published a record 38 new AI blogs and welcomed 9 new writers to our platform. If you are interested in publishing at Towards AI, please sign up here and we will publish your blog to our network if it meets our editorial policies and standards. If it does not meet them yet, we will help you get it there.

Where Audits Falter: Lauren’s Ethical Take on OpenAI’s CLIP bias problem

OpenAI conducted a first-party audit of CLIP in August of 2021. In section 2.1, they issue a disclaimer that “These experiments are not comprehensive. They illustrate potential issues stemming from class design and other sources of bias, and are intended to spark inquiry.” When addressing downstream effects of CLIP, the authors conclude that “…one step forward is community exploration to further characterize models like CLIP and holistically develop qualitative and quantitative evaluations to assess the capabilities, biases, misuse potential and other deployment-critical features of these models.”

Usually, the benefit of an in-house audit is to catch problems before they create these negative real-world effects. Yet, this recent study conducted by Andrew Hundt, William Agnew, Vicky Zeng, Severin Kacianka, and Matthew Gombolay demonstrates that those effects have, in fact, taken hold in mainstream applications and cause real-world harm. In addition, in section 5.1 the authors highlight that the policies that were in place to stop this harm did not do as they promised, including the inclusion statements and codes of conduct of nearly every organization and university affiliated with CLIP, concluding that they are too vague to work.

This becomes apparent as we learn that audits themselves have a long way to go. A recent paper by the Algorithmic Justice League shows the many ways in which audits are substantially lacking and what barriers impede their improvement, including the difficulty of reporting and tracking real-world harm and the cost of auditing.

In light of this short history, news of CLIP’s bias is not shocking in the slightest. As shown above, attempts to mitigate bias failed at every step over the course of almost a year. Whilst well-intentioned, OpenAI’s call for community exploration as a solution feels like too little too late and a deferral of responsibility, given that harm is already taking place. In our view, it should be resolved with increased corporate accountability at both private and public levels. If algorithms are to continue to shape individual and collective decisions, we have to take more initiative to slow and stop the incorporation of bias before it creeps in, and effectively mitigate it when it does, with actions to support the words, lest they lose their meaning through empty promises. I’m very grateful that the work is being done by teams focused on AI ethics and justice, and for opportunities to support the resolution of these problems.

Job offers

Senior Machine Learning Scientist @ Atomwise (San Francisco, USA)

Senior ML Engineer — Algolia AI @ Algolia (Hybrid remote)

Senior ML Engineer — Semantic Search @ Algolia (Hybrid remote)

Machine Learning Engineer @ Gather AI (Remote — India)

Deep Learning Engineer (R&D — Engineering) @ Weights & Biases (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net or post the opportunity in our #hiring channel on Discord!

This AI newsletter is all you need | #3 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.

Note: Content contains the views of the contributing authors and not Towards AI.